Transparent computing server cache optimization method and system based on association pattern

A technique combining transparent computing and association patterns, applicable to memory systems, computing, instruments, and related fields. It addresses problems such as the load placed on network and other service resources, and achieves the effect of avoiding costs.


Embodiment 1

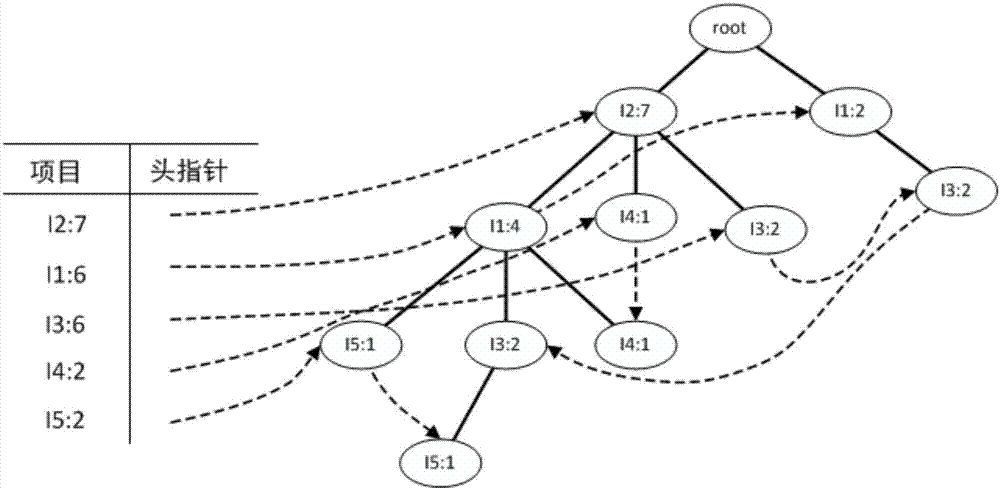

[0060] This embodiment discloses a method for optimizing the cache of a transparent computing server based on association patterns, relying on an improved FP-Stream. The method includes:

[0061] Step S1: Process the data stream accessed by users in batches, scan the data set corresponding to each batch, and record the transaction items in each batch's data set that meet the screening conditions. Retain the data blocks whose support count is greater than or equal to $\tau \cdot (\sigma - \varepsilon) \cdot |B_i|$, and construct an FP-tree for the data stream $B_n$ of batches with $n \ge 2$. Here $\sigma$ is the minimum support, $\varepsilon$ is the maximum support error, and $|B_i|$ is the width of the data stream of batch $i$.

[0062] In this step, the FP-tree construction for the data stream $B_n$ of batches with $n \ge 2$ also uses the support coefficient $\tau$ to filter the original data stream, which avoids the time and space cost of processing infrequently accessed data blocks. Parameters such as $\sigma$, $\varepsilon$ and $|B_i|$ above can be set specifical...
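The filtering and tree construction described in Step S1 can be sketched roughly as follows. This is a minimal illustration and not the patent's implementation. It assumes that each transaction is a list of data block IDs, that the support count of a block is the number of transactions in the batch that contain it, and that the batch width $|B_i|$ is the number of transactions. The names `FPNode`, `filter_batch` and `build_fp_tree` are hypothetical, and the header table and node links of a full FP-tree are omitted.

```python
from collections import Counter


class FPNode:
    """One node of a simplified FP-tree (no header table or node links)."""

    def __init__(self, item, parent=None):
        self.item = item
        self.count = 0
        self.parent = parent
        self.children = {}


def filter_batch(batch, sigma, epsilon, tau):
    """Drop blocks whose support count is below tau * (sigma - epsilon) * |B_i|.

    Assumption: `batch` is a list of transactions, each a list of block IDs.
    Returns the filtered transactions, the per-block counts, and the threshold.
    """
    width = len(batch)  # |B_i|, assumed to be the number of transactions
    threshold = tau * (sigma - epsilon) * width
    # Count each block at most once per transaction.
    counts = Counter(block for txn in batch for block in set(txn))
    frequent = {b for b, c in counts.items() if c >= threshold}
    filtered = [[b for b in txn if b in frequent] for txn in batch]
    # Transactions left empty by the filter carry no information.
    return [txn for txn in filtered if txn], counts, threshold


def build_fp_tree(transactions, counts):
    """Insert each transaction into a prefix tree, most frequent blocks first."""
    root = FPNode(None)
    for txn in transactions:
        # Descending support count; ties broken by block ID for determinism.
        ordered = sorted(set(txn), key=lambda b: (-counts[b], b))
        node = root
        for item in ordered:
            child = node.children.get(item)
            if child is None:
                child = FPNode(item, node)
                node.children[item] = child
            child.count += 1
            node = child
    return root
```

With four transactions, σ = 0.5, ε = 0.1 and τ = 1.0, the threshold is 1.6, so only blocks that appear in at least two transactions are inserted into the tree. A smaller τ loosens the filter and keeps more blocks.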

Embodiment 2

[0088] Corresponding to Embodiment 1 above, this embodiment discloses a transparent computing server cache optimization system based on association patterns, including:

[0089] The first processing unit is used to process the data stream accessed by users in batches, scan the data set corresponding to each batch, and record the transaction items in each batch's data set that meet the filtering conditions. It retains the data blocks whose support count is greater than or equal to $\tau \cdot (\sigma - \varepsilon) \cdot |B_i|$, and constructs an FP-tree for the data stream $B_n$ of batches with $n \ge 2$. Here $\sigma$ is the minimum support, $\varepsilon$ is the maximum support error, and $|B_i|$ is the width of the data stream of batch $i$;

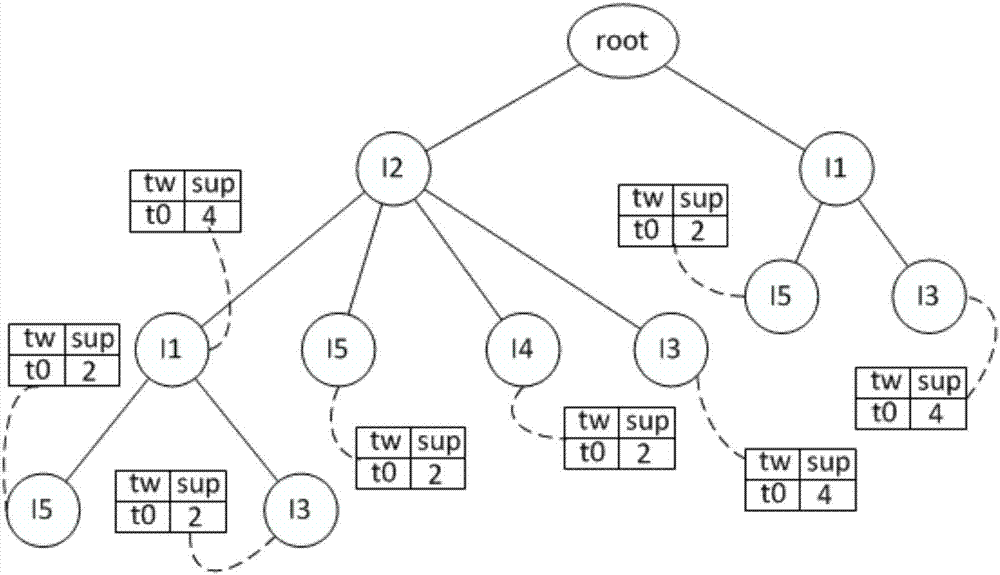

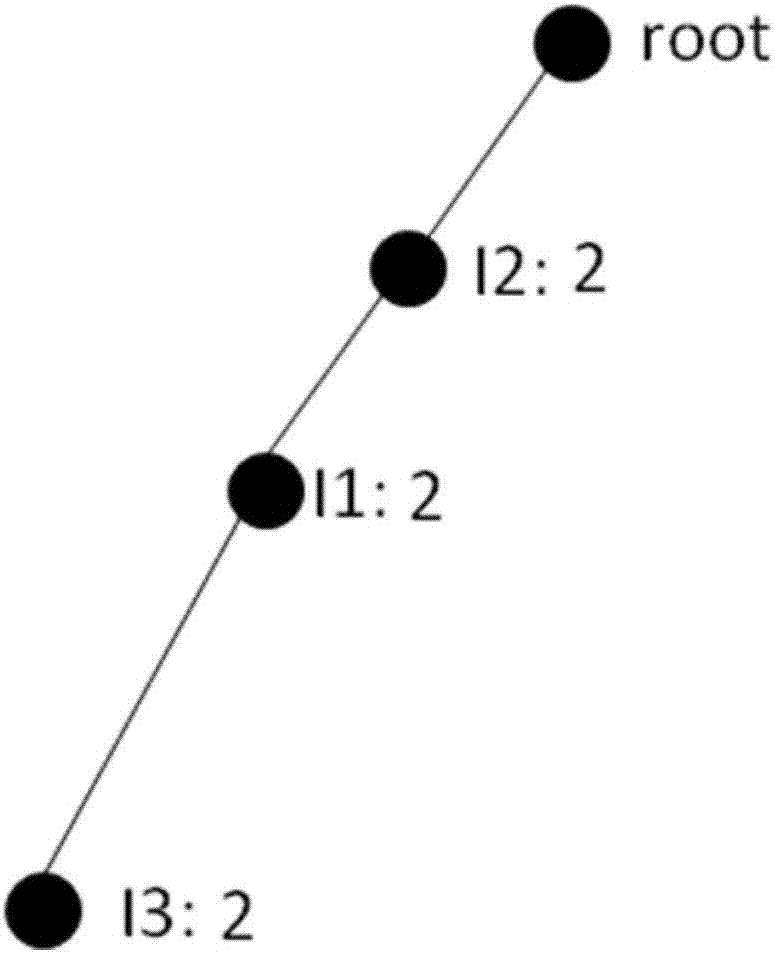

[0090] The second processing unit is used to mine the frequent patterns of each batch of the data stream, together with their support counts, using the FP-growth method. If a single prefix path appears in any conditional pattern base, and the frequencies of the node elements o...
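To make the mining output concrete, the sketch below finds frequent patterns and their support counts by pattern growth over projected transaction lists. This is a stand-in and not the tree-based FP-growth of the source: it builds no conditional FP-trees and does not implement the single-prefix-path handling, since that part of the text is truncated. For a given minimum count it should yield the same frequent itemsets and counts that FP-growth would. The function name `mine_patterns` and the `min_count` parameter are hypothetical, and block IDs are assumed to be sortable.

```python
from collections import Counter


def mine_patterns(transactions, min_count, prefix=()):
    """Return {frozenset(pattern): support_count} for all frequent patterns.

    Assumption: each transaction is a list of sortable block IDs, and the
    support count of a pattern is the number of transactions containing it.
    """
    # Count each item at most once per transaction.
    counts = Counter(item for txn in transactions for item in set(txn))
    # A fixed item order guarantees every itemset is generated exactly once.
    frequent = sorted(i for i, c in counts.items() if c >= min_count)
    patterns = {}
    for idx, item in enumerate(frequent):
        pattern = prefix + (item,)
        patterns[frozenset(pattern)] = counts[item]
        # Conditional database: transactions containing `item`,
        # restricted to frequent items that come later in the order.
        later = set(frequent[idx + 1:])
        conditional = [
            [i for i in txn if i in later]
            for txn in transactions
            if item in txn
        ]
        conditional = [txn for txn in conditional if txn]
        patterns.update(mine_patterns(conditional, min_count, pattern))
    return patterns
```

For example, calling `mine_patterns([[1, 2], [1, 2], [1], [2]], min_count=2)` reports blocks 1 and 2 with count 3 each and the pair {1, 2} with count 2.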
