Gesture recognition method based on recurrent 3D convolutional neural network

The method applies neural network and three-dimensional convolution technology to the field of human-computer intelligent interaction. It addresses the difficulty of gesture classification and provides convenient, non-contact gesture recognition at low equipment cost.


Examples

Embodiment 1

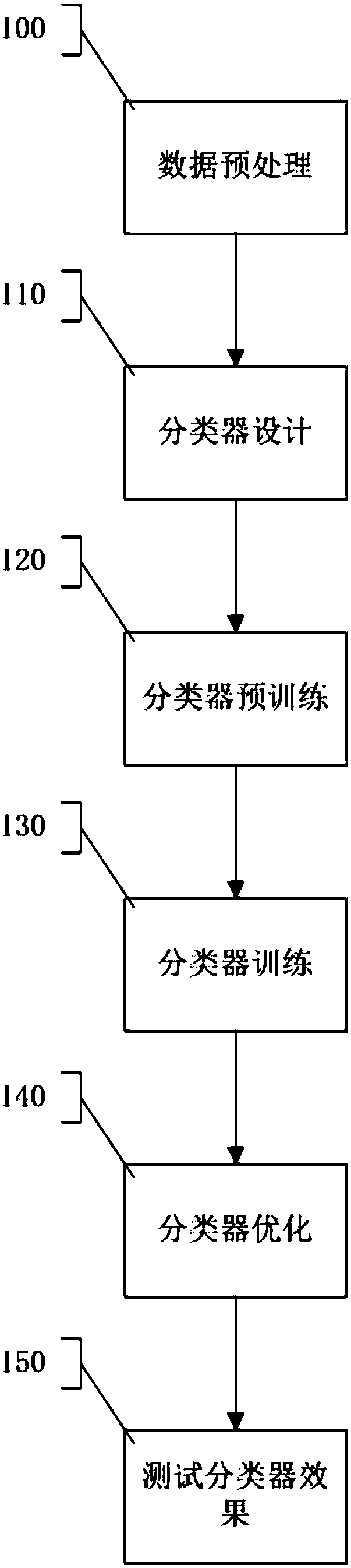

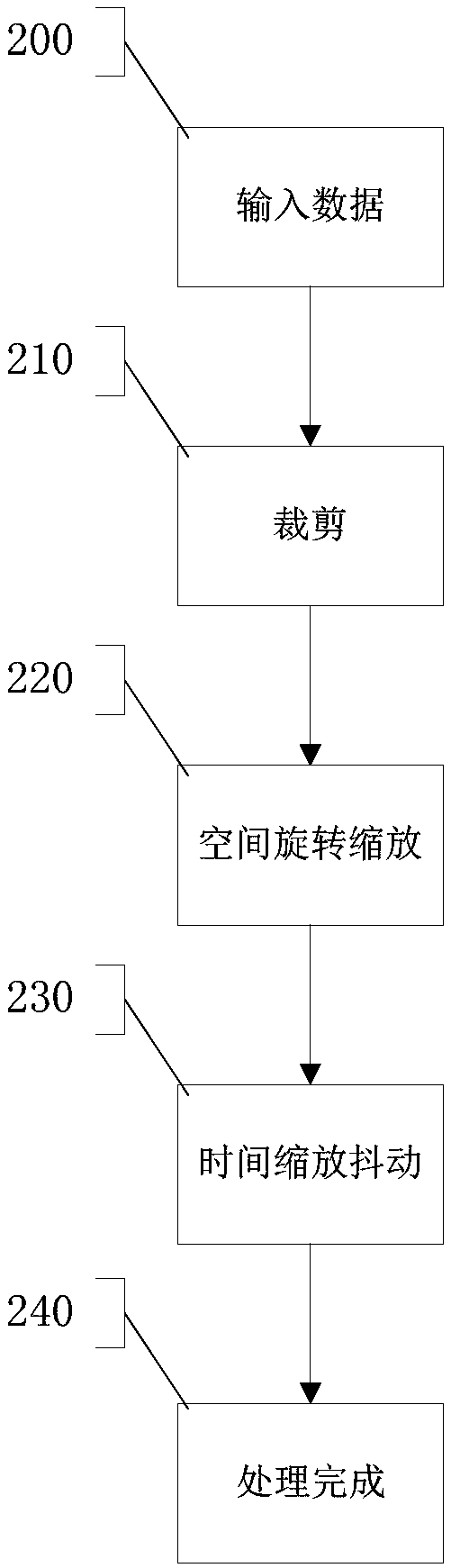

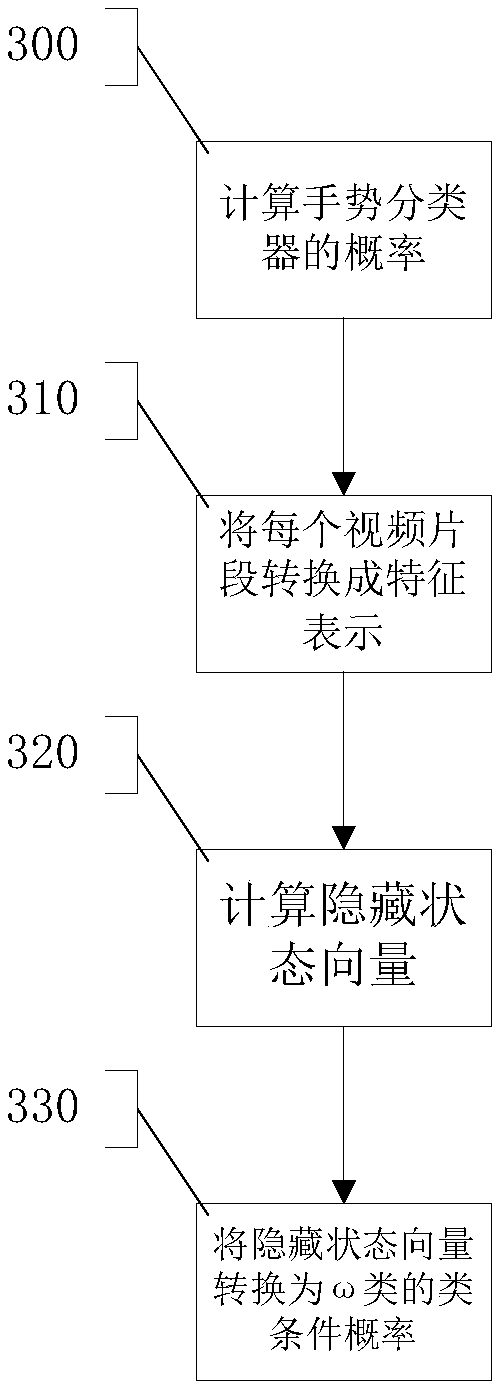

[0053] As shown in Figure 1, step 100 performs data preprocessing, which converts the acquired data to a fixed size that meets the input-layer specification of the recurrent three-dimensional convolutional neural network. As shown in Figure 2, steps 200 and 210 are executed in sequence to crop the input data; the random crop size is A×A (A=112 in this embodiment). Step 220 applies data augmentation to increase the diversity of the training samples: each video undergoes random spatial rotation and scaling, with a rotation angle of ±B° (B=15 in this embodiment) and a scaling range of ±C% (C=20 in this embodiment). Step 230 applies random temporal scaling and jitter to each video, with a scaling range of ±D% (D=20 in this embodiment) and a jitter amplitude of ±E frames (E=3 in this embodiment). Step 240 obtains data meeting the input s...
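The cropping and augmentation steps can be sketched with NumPy as follows. This is a minimal illustration under stated assumptions, not the patented implementation: the function names, the `(T, H, W, C)` array layout, the nearest-neighbour sampling, the edge-clamping at borders, and the output length of 16 frames are all hypothetical choices. Only the values A=112, B=15, C=20, D=20 and E=3 come from the embodiment.

```python
import numpy as np

def random_crop(video, size, rng):
    """Crop the same random size x size window from every frame of a (T, H, W, C) video."""
    _, h, w, _ = video.shape
    top = rng.integers(0, h - size + 1)
    left = rng.integers(0, w - size + 1)
    return video[:, top:top + size, left:left + size, :]

def random_rotate_scale(video, max_deg, max_scale, rng):
    """Rotate and zoom all frames about the image centre (nearest-neighbour sampling)."""
    _, h, w, _ = video.shape
    theta = np.deg2rad(rng.uniform(-max_deg, max_deg))
    zoom = 1.0 + rng.uniform(-max_scale, max_scale)
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    ys, xs = np.mgrid[0:h, 0:w]
    # Inverse mapping: for each output pixel, look up the source pixel.
    dy, dx = (ys - cy) / zoom, (xs - cx) / zoom
    src_y = np.cos(theta) * dy - np.sin(theta) * dx + cy
    src_x = np.sin(theta) * dy + np.cos(theta) * dx + cx
    # Assumption: out-of-range coordinates are clamped to the nearest edge pixel.
    src_y = np.clip(np.rint(src_y), 0, h - 1).astype(int)
    src_x = np.clip(np.rint(src_x), 0, w - 1).astype(int)
    return video[:, src_y, src_x, :]

def random_temporal_scale_jitter(video, out_len, max_scale, max_jitter, rng):
    """Resample out_len frames with a random speed change and a random frame offset."""
    t = video.shape[0]
    speed = 1.0 + rng.uniform(-max_scale, max_scale)
    shift = rng.integers(-max_jitter, max_jitter + 1)
    base = np.arange(out_len) * (t - 1) / max(out_len - 1, 1)
    idx = np.clip(np.rint(base * speed) + shift, 0, t - 1).astype(int)
    return video[idx]

def augment(video, rng, A=112, B=15.0, C=0.20, D=0.20, E=3, out_len=16):
    """Steps 210-230 in order: crop, spatial augmentation, temporal augmentation."""
    clip = random_crop(video, A, rng)
    clip = random_rotate_scale(clip, B, C, rng)
    return random_temporal_scale_jitter(clip, out_len, D, E, rng)

rng = np.random.default_rng(0)
# Synthetic stand-in for a captured video: 40 frames of 128x171 RGB.
video = rng.integers(0, 256, size=(40, 128, 171, 3), dtype=np.uint8)
clip = augment(video, rng)
print(clip.shape)  # (16, 112, 112, 3)
```

Applying the same random crop, rotation, and zoom to every frame of a video keeps the gesture spatially consistent across time, which is why the parameters are drawn once per video rather than once per frame.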

Embodiment 2

[0060] As shown in Figure 5, the overall system architecture consists of four parts: a data input module 500, a data preprocessing module 510, a recurrent 3D convolutional neural network classifier 520, and an output class label 530. The classifier 520 can be decomposed into a design submodule 521, a pre-training submodule 522, a training submodule 523, an optimization submodule 524, and a testing submodule 525.

[0061] This embodiment proposes a gesture recognition method based on a recurrent three-dimensional convolutional neural network, which includes importing video data in the data input module 500, performing data preprocessing in the data preprocessin...
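To make the data flow from preprocessed video to class label concrete, here is a minimal NumPy sketch of a recurrent 3D convolutional forward pass. Everything specific in it is an assumption for illustration: the single 3D convolution layer, the plain tanh recurrent unit, the clip length of 8 frames, the layer sizes, and the random untrained weights are hypothetical and are not taken from the patent, whose network design is not visible in this excerpt.

```python
import numpy as np

def conv3d_valid(clip, kernels):
    """Naive 'valid' 3D cross-correlation.
    clip: (T, H, W); kernels: (K, kt, kh, kw) -> (K, T-kt+1, H-kh+1, W-kw+1)."""
    _, kt, kh, kw = kernels.shape
    windows = np.lib.stride_tricks.sliding_window_view(clip, (kt, kh, kw))
    return np.einsum("thwabc,kabc->kthw", windows, kernels)

def clip_features(clip, kernels):
    """3D convolution -> ReLU -> global average pooling: one K-dim vector per clip."""
    return np.maximum(conv3d_valid(clip, kernels), 0.0).mean(axis=(1, 2, 3))

def classify(video, params, clip_len=8):
    """Feed per-clip 3D conv features through a simple recurrent layer, then softmax."""
    h = np.zeros(params["W_h"].shape[0])
    for start in range(0, video.shape[0] - clip_len + 1, clip_len):
        x = clip_features(video[start:start + clip_len], params["kernels"])
        h = np.tanh(params["W_x"] @ x + params["W_h"] @ h + params["b"])
    logits = params["W_out"] @ h
    probs = np.exp(logits - logits.max())  # numerically stable softmax
    return probs / probs.sum()

rng = np.random.default_rng(0)
n_kernels, hidden, n_classes = 4, 6, 5  # hypothetical sizes
params = {
    "kernels": rng.normal(0, 0.1, (n_kernels, 3, 3, 3)),
    "W_x": rng.normal(0, 0.1, (hidden, n_kernels)),
    "W_h": rng.normal(0, 0.1, (hidden, hidden)),
    "b": np.zeros(hidden),
    "W_out": rng.normal(0, 0.1, (n_classes, hidden)),
}
video = rng.random((16, 32, 32))   # stand-in for the output of preprocessing module 510
probs = classify(video, params)    # stand-in for classifier 520
label = int(np.argmax(probs))      # stand-in for the output class label 530
print(probs.shape, label)
```

The 3D convolution captures short-range motion within each clip, while the recurrent state carries information across clips, so the final label depends on the whole gesture rather than on any single clip.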

Embodiment 3

[0063] As shown in Figure 6, step 600 collects image data through a camera (as shown in Figure 6a). Step 610 crops the gesture from the collected data, removing redundant regions and segmenting the gesture image (as shown in Figure 6b). The images are processed in two stages: the training stage and the testing stage of the 3D convolutional neural network model. In the training stage of the recurrent three-dimensional convolutional neural network model, step 620 augments the data using data augmentation techniques; the results are shown in Figure 6c. Step 630 preprocesses the data to extract clear key frames. Step 640 trains the 3D convolutional neural network first and then trains the overall model. As shown in Figure 6d, the training method is to crop the video to obtain several pictures, and the size of random cropping ...
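The excerpt does not say how "clear" key frames are identified in step 630. One common proxy for frame clarity is the variance of the Laplacian, and the sketch below uses it purely as an assumed stand-in; the function names, the 4-neighbour Laplacian, and the fixed number of frames to keep are hypothetical.

```python
import numpy as np

def sharpness(frame):
    """Variance of a 4-neighbour Laplacian; higher values suggest a sharper frame."""
    f = frame.astype(np.float64)
    lap = (f[:-2, 1:-1] + f[2:, 1:-1] + f[1:-1, :-2] + f[1:-1, 2:]
           - 4.0 * f[1:-1, 1:-1])
    return lap.var()

def select_key_frames(video, n_keep):
    """Keep the n_keep sharpest frames of a (T, H, W) video, preserving temporal order."""
    scores = np.array([sharpness(frame) for frame in video])
    keep = np.sort(np.argsort(scores)[-n_keep:])
    return video[keep], keep

rng = np.random.default_rng(0)
textured = rng.random((6, 32, 32))          # frames with fine detail
flat = np.full((2, 32, 32), 0.5)            # featureless frames: zero Laplacian variance
video = np.concatenate([textured[:3], flat, textured[3:]])  # flat frames at indices 3, 4
frames, kept = select_key_frames(video, n_keep=6)
print(kept)  # [0 1 2 5 6 7]
```

Sorting the selected indices before slicing keeps the surviving frames in their original temporal order, which matters because the downstream network consumes them as a sequence.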
