Data processing method and device for convolutional neural network

A data processing device and convolutional neural network technology, applied in the field of neural networks. It addresses increased data bandwidth, increased storage space, and reduced data processing capability in a convolutional neural network processing system. Its effects are lower data bandwidth or storage-space requirements, improved data processing capability, and the avoidance of repeated data reads.



Examples

Embodiment 1

[0036] This embodiment is described from the perspective of the data processing device of the convolutional neural network. The data processing device may be integrated in a processor, such as a CPU, an FPGA (Field Programmable Gate Array), an ASIC (Application Specific Integrated Circuit), a GPU (Graphics Processing Unit), or a coprocessor.

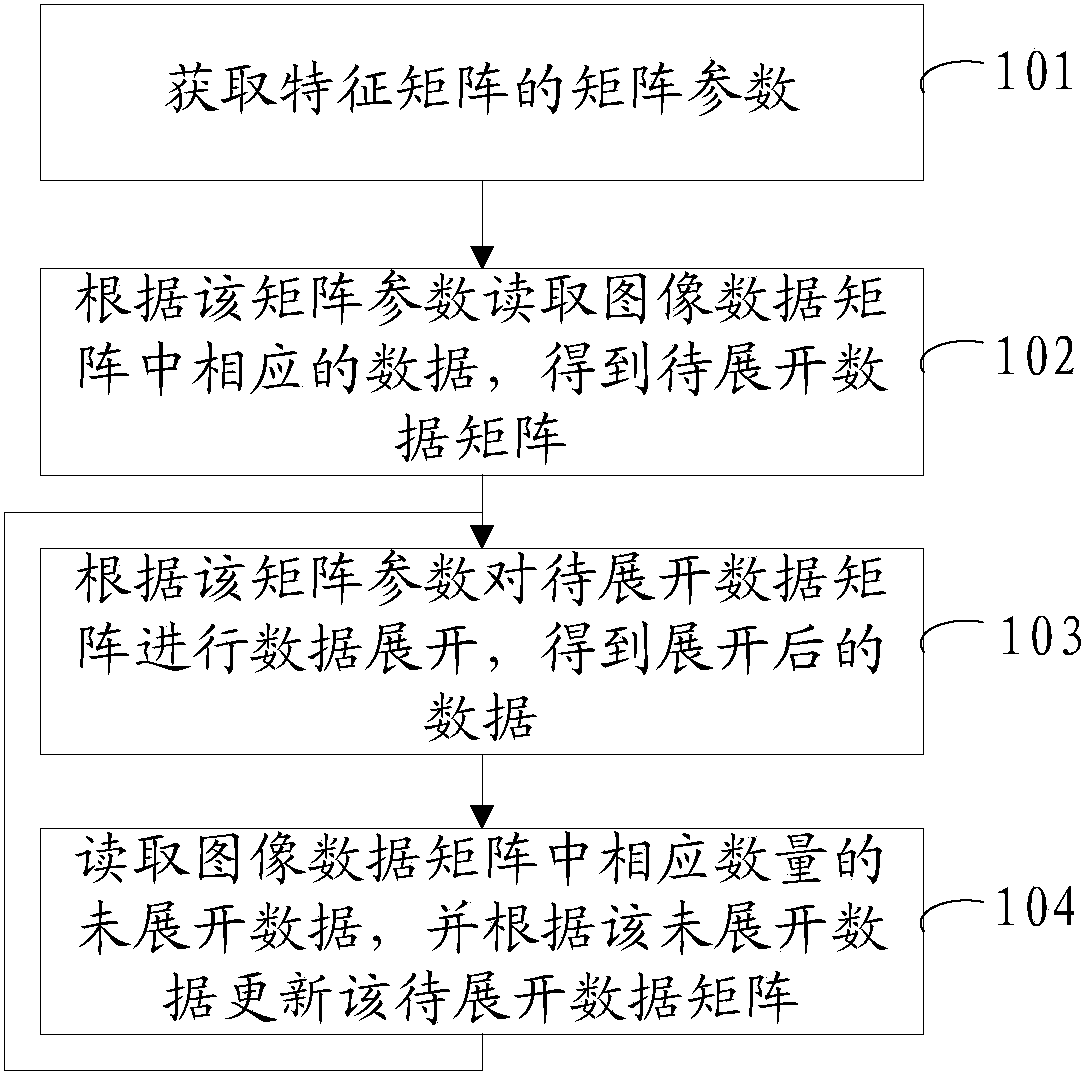

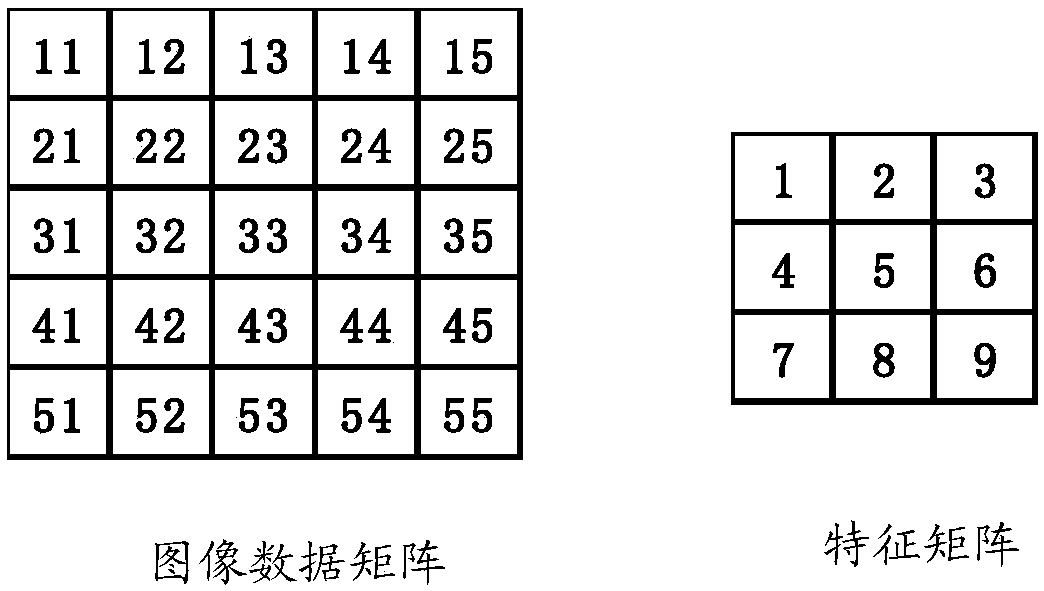

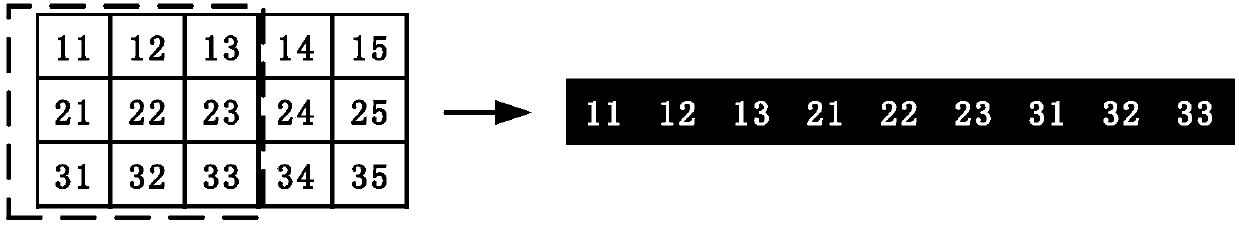

[0037] In this data processing method of a convolutional neural network, matrix parameters of a feature matrix are obtained; corresponding data in an image data matrix is read according to the matrix parameters to obtain a data matrix to be expanded; the data matrix to be expanded is expanded according to the matrix parameters to obtain expanded data; a corresponding amount of unexpanded data is read from the image data matrix; and the unexpanded data matrix is updated according to the unexpanded ...
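The expansion step in [0037] resembles the widely used im2col transformation. The following is a minimal sketch, assuming stride 1, no padding, a single channel, and an image held as a list of rows; the names `expand_with_row_reuse` and `read_row` are hypothetical and not taken from the patent. It keeps a buffer of `kh` image rows (the data matrix to be expanded) and expands it into one patch row per output position. It then reads only the next unexpanded image row and slides the buffer, so each image row is read from memory once.

```python
from collections import deque
from typing import List, Tuple

Matrix = List[List[float]]


def expand_with_row_reuse(image: Matrix, kh: int, kw: int) -> Tuple[Matrix, int]:
    """Expand `image` into im2col-style patch rows, reading each image row once.

    Assumptions (not from the source): stride 1, no padding, one channel.
    Returns (expanded_rows, rows_read).
    """
    height, width = len(image), len(image[0])
    rows_read = 0

    def read_row(r: int) -> List[float]:
        # Hypothetical stand-in for a read from external memory (e.g. DDR); counts accesses.
        nonlocal rows_read
        rows_read += 1
        return list(image[r])

    # Initial read: the first kh rows form the data matrix to be expanded.
    buffer = deque((read_row(r) for r in range(kh)), maxlen=kh)
    expanded: Matrix = []
    next_row = kh
    while True:
        # Expand the buffered rows: one patch of kh * kw values per column offset.
        for c in range(width - kw + 1):
            patch = [buffer[i][c + j] for i in range(kh) for j in range(kw)]
            expanded.append(patch)
        if next_row >= height:
            break
        # Read only the next unexpanded row; deque(maxlen=kh) drops the oldest row.
        buffer.append(read_row(next_row))
        next_row += 1
    return expanded, rows_read
```

For a 3 × 4 image and a 2 × 2 kernel, this produces 6 patch rows with 3 row reads, whereas re-reading a full 2-row window for each of the 2 output rows would take 4 reads. The saving grows with the kernel height.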

Embodiment 2

[0118] Based on the method described in Embodiment 1, a more detailed example is given below.

[0119] In this embodiment, the data processing device of the convolutional neural network is integrated in a coprocessor, and the system architecture shown in Figure 5 is used as an example. The coprocessor may be an FPGA, an ASIC, or another type of coprocessor.

[0120] In this embodiment, the image data matrix is stored in the DDR memory of the processing system.

[0121] As shown in Figure 8a, the data processing method of the convolutional neural network may proceed as follows:

[0122] 201. The coprocessor acquires system parameters, which include the matrix parameters of the feature matrix.

[0123] The matrix parameters may include the number of rows and columns of the feature matrix. In this embodiment, the system parameters may also include the number of rows and columns of the graphic data m...
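Once the matrix parameters and the image dimensions are known, the shape of the expanded data follows directly. The following is a minimal sketch, assuming stride 1 and no padding (the excerpt states neither); `expanded_shape` is a hypothetical helper, not part of the patent.

```python
from typing import Tuple


def expanded_shape(img_rows: int, img_cols: int,
                   k_rows: int, k_cols: int) -> Tuple[int, int]:
    """Shape of the im2col-style expanded matrix, assuming stride 1 and no padding.

    Each output position contributes one row holding k_rows * k_cols values.
    """
    if k_rows > img_rows or k_cols > img_cols:
        raise ValueError("kernel larger than image")
    out_rows = img_rows - k_rows + 1
    out_cols = img_cols - k_cols + 1
    return out_rows * out_cols, k_rows * k_cols
```

For a 224 × 224 image and a 3 × 3 kernel, this gives 49,284 rows of 9 values (443,556 values). That is about 8.8 times the 50,176 input pixels, which shows why expansion strains bandwidth and storage when the expanded data is materialized in full.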

Embodiment 3

[0147] To better implement the above method, an embodiment of the present invention further provides a data processing device for a convolutional neural network. As shown in Figure 9a, the device may include an acquisition unit 301, a reading unit 302, a data expansion unit 303, and an update unit 304, as follows:

[0148] (1) acquisition unit 301;

[0149] The acquisition unit 301 is configured to obtain the matrix parameters of the feature matrix.

[0150] The feature matrix is the convolution kernel of the convolution operation, also called the weight matrix, and it can be set according to actual needs. The matrix parameters of the feature matrix may include its number of rows and columns, which together may be called the size of the convolution kernel.
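Because the feature matrix is the convolution kernel, each expanded patch row can be multiplied by the flattened kernel to produce one output value, turning the convolution into a matrix product. The following is a minimal sketch, assuming stride 1, no padding, a single channel, and the cross-correlation form used by most CNN frameworks; `conv_direct` and `conv_via_expansion` are hypothetical names used only to check that both routes agree.

```python
from typing import List

Matrix = List[List[float]]


def conv_direct(image: Matrix, kernel: Matrix) -> Matrix:
    """Sliding-window convolution (cross-correlation, as in CNN layers)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[r + i][c + j] * kernel[i][j]
                 for i in range(kh) for j in range(kw))
             for c in range(out_w)]
            for r in range(out_h)]


def conv_via_expansion(image: Matrix, kernel: Matrix) -> Matrix:
    """Same result via im2col-style expansion followed by a matrix-vector product."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    # Flatten the weight matrix in row-major order to match the patch layout.
    weights = [kernel[i][j] for i in range(kh) for j in range(kw)]
    # One expanded row (patch) per output position, in row-major order.
    patches = [[image[r + i][c + j] for i in range(kh) for j in range(kw)]
               for r in range(out_h) for c in range(out_w)]
    flat = [sum(p * w for p, w in zip(patch, weights)) for patch in patches]
    # Reshape the flat result back to out_h x out_w.
    return [flat[r * out_w:(r + 1) * out_w] for r in range(out_h)]
```

With several kernels, the flattened kernels can be stacked as columns, and the same step becomes a single matrix-matrix multiplication.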

[0151] (2) reading unit 302;

[0152] The reading unit 302 is configured to read corresponding data in the image data matrix accord...
