Artificial neural network-based LRU Cache prefetching mechanism performance gain assessment method

An artificial neural network technique, applied in the fields of neural learning methods, biological neural network models, and neural architectures. It addresses the long simulation cycle and the difficulty of calculating and modeling how prefetching affects stack distance, thereby shortening the evaluation cycle and giving a quick estimate of performance.

Embodiment

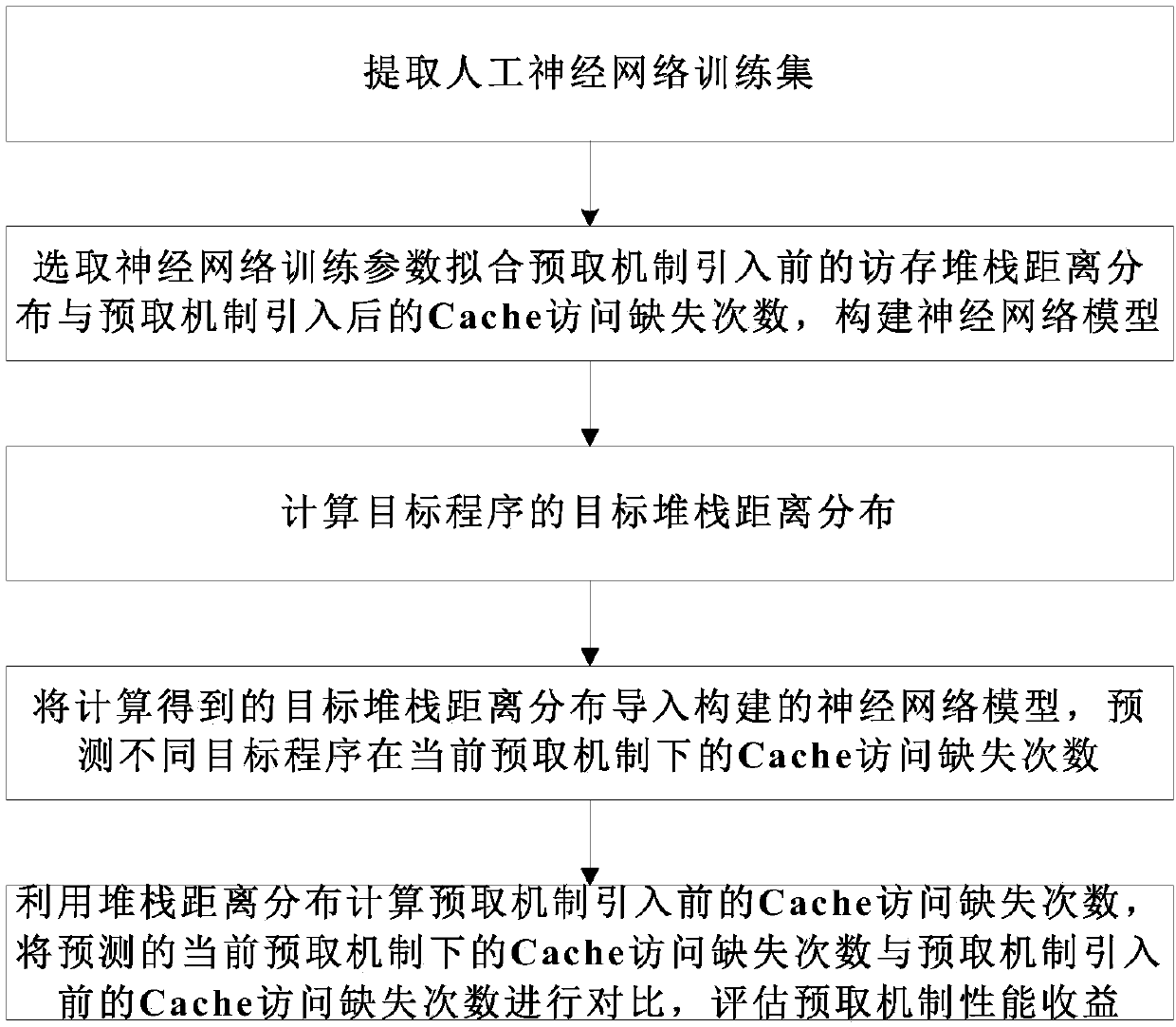

[0029] As shown in Figure 1, the artificial-neural-network-based method of the present invention for evaluating the performance gain of an LRU Cache prefetching mechanism may be implemented through the following steps:

[0030] (1) Extraction of the artificial neural network training set:

[0031] Training an artificial neural network requires multiple sets of training data as input in order to train the neuron weight coefficients. The present invention cuts the complete application program into several program fragments and extracts two types of information from each fragment: the stack distance distribution before the prefetch mechanism is added, and the number of cache access misses after the prefetch mechanism is added.
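The extraction described in this step can be illustrated with a minimal Python sketch. It is not the patent's implementation: the function names, the fixed-length fragmentation, and the next-line prefetcher are all assumptions chosen for illustration, since the excerpt does not specify how fragments are cut or which prefetch mechanism is evaluated. Addresses are treated as cache-line numbers, and first-touch (cold) accesses are recorded under the key `None`.

```python
from collections import Counter, OrderedDict


def stack_distance_histogram(trace):
    """Histogram of LRU stack distances for a trace, without prefetching.

    The stack distance of an access is the number of distinct lines touched
    since the previous access to the same line. Cold accesses use key None.
    """
    stack = []  # most recently used line is at the end
    hist = Counter()
    for line in trace:
        if line in stack:
            idx = stack.index(line)
            hist[len(stack) - 1 - idx] += 1
            stack.pop(idx)
        else:
            hist[None] += 1
        stack.append(line)
    return hist


def lru_misses_with_next_line_prefetch(trace, capacity):
    """Demand misses of a fully associative LRU cache with a hypothetical
    next-line prefetcher (an assumption, not the patent's mechanism)."""
    cache = OrderedDict()  # keys ordered from least to most recently used
    misses = 0

    def insert(line):
        cache[line] = True
        cache.move_to_end(line)
        if len(cache) > capacity:
            cache.popitem(last=False)  # evict the least recently used line

    for line in trace:
        if line not in cache:
            misses += 1
        insert(line)
        # Prefetch the next line only if absent; a present line keeps its recency.
        if line + 1 not in cache:
            insert(line + 1)
    return misses


def training_samples(trace, fragment_len, capacity):
    """Cut the trace into fixed-length fragments (an assumed policy) and pair
    each fragment's stack distance histogram with its post-prefetch misses."""
    samples = []
    for start in range(0, len(trace), fragment_len):
        fragment = trace[start:start + fragment_len]
        samples.append((
            stack_distance_histogram(fragment),
            lru_misses_with_next_line_prefetch(fragment, capacity),
        ))
    return samples
```

Each returned pair corresponds to one training example: the histogram would serve as the network input and the miss count as the target. The list-based stack is quadratic in the worst case, which is acceptable for a sketch but not for long traces.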

[0032] (2) Selection of the artificial neural network topology and the neuron weight training method:

[0033] The artificial neural network topology and the neuron weight training method are selected by traversing all th...
