A robot autonomous navigation system and method based on depth image data

A depth-image-based autonomous navigation technology, applied in the field of visual control, that addresses the problems of heavy computation and high processing-module cost.


Embodiment

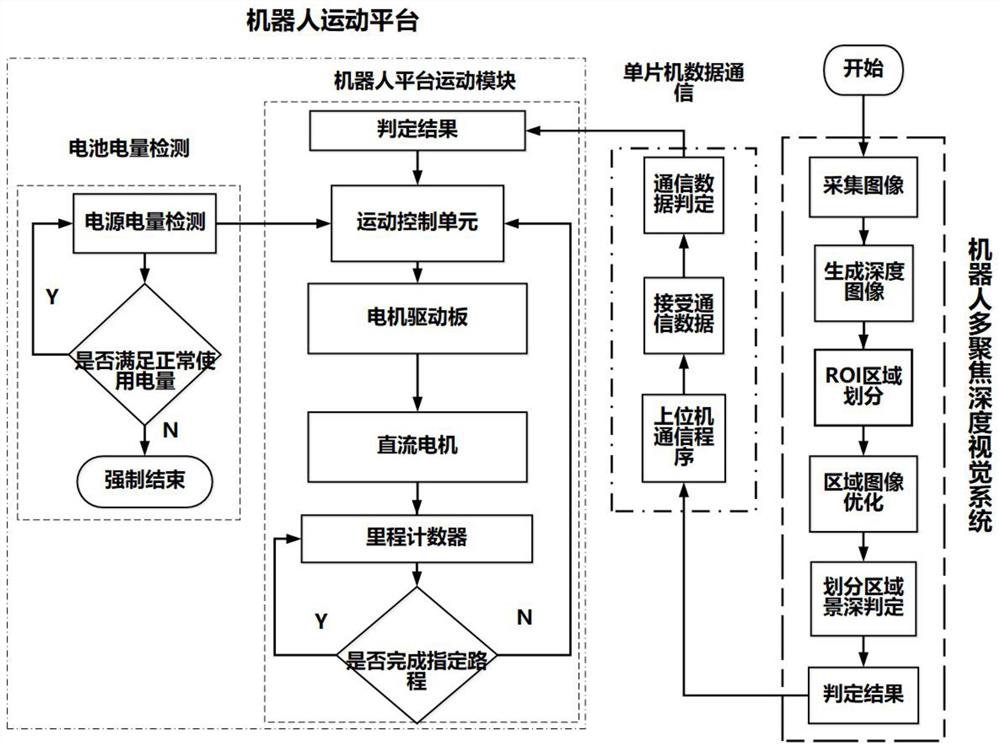

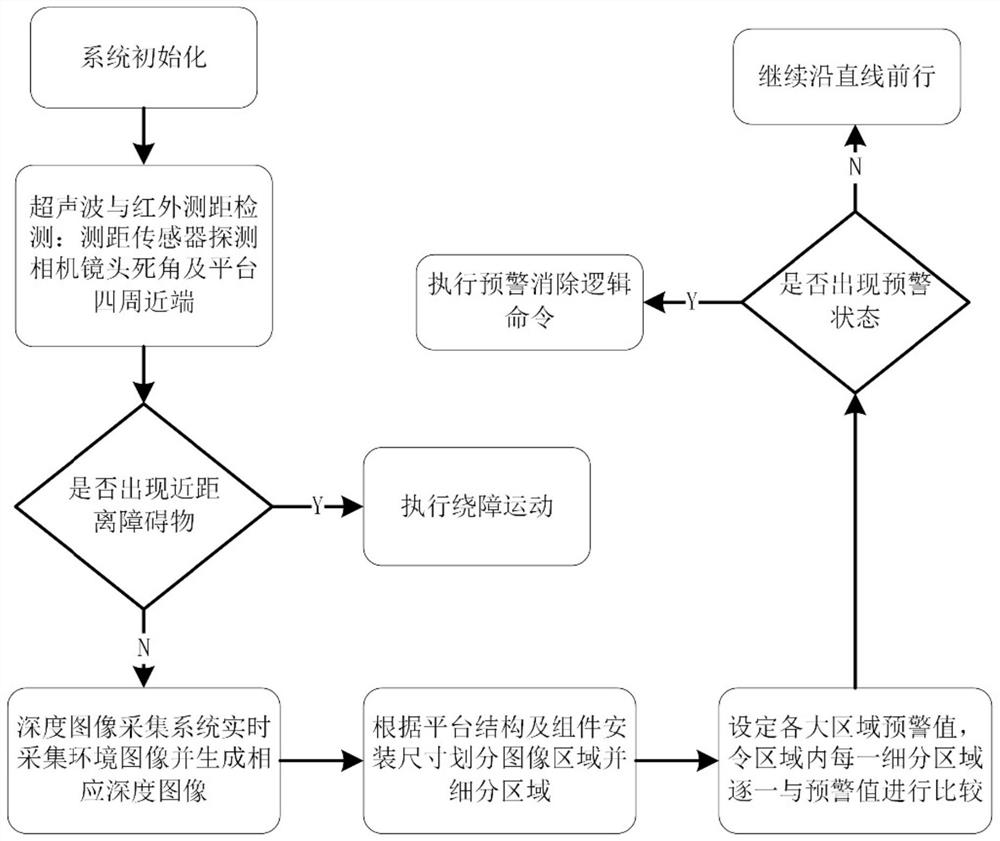

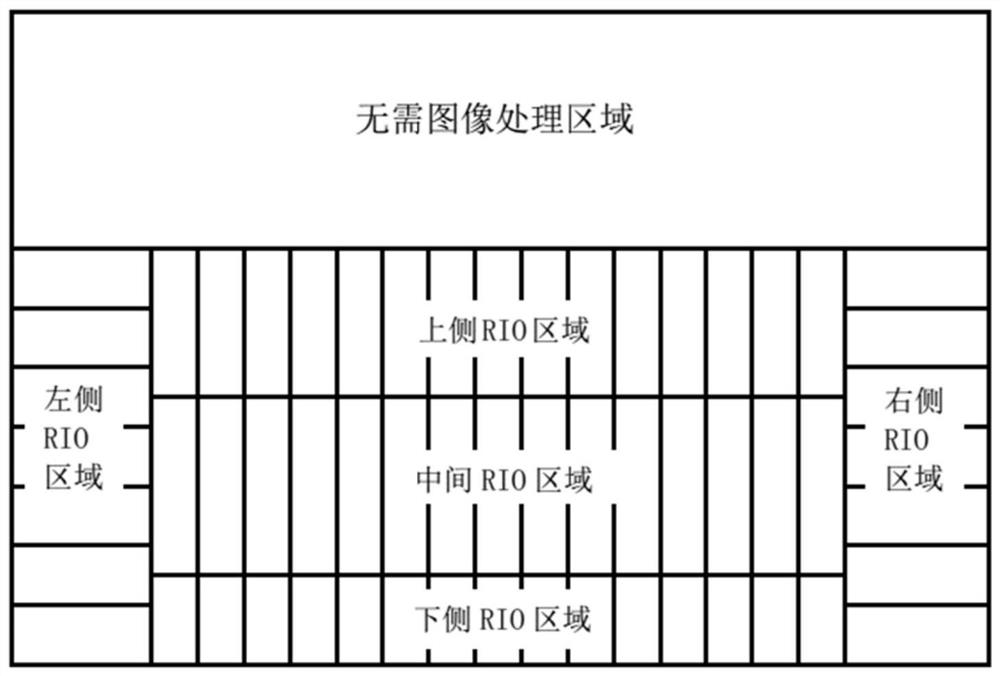

[0068] As shown in Figure 2, in this embodiment the monocular multi-focus robot navigates autonomously on a wheeled motion platform that uses skid steering. The image processing area is set according to the platform's size parameters and the camera's installation position. The horizontal plane through the camera lens axis is taken as the base interface, and the base interface is then shifted upward by an amount set according to the ratio between the size of the portion above the camera and the total size of the device. The region below the base interface is the depth-data image processing area. In the horizontal direction, the image is divided into three main areas (left, middle, and right) according to the width of the walking wheels and the width of the platform, with each area sized by the ratio of the corresponding part to the total width. In the vertical direction, the division is based on the minimum ground ...
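The horizontal and upward-shift rules described in this embodiment can be illustrated with a minimal Python sketch. It is not the patented implementation, and several things are assumptions made only for illustration: the function and parameter names (`divide_processing_area`, `wheel_width`, `upper_size`, and so on), the idea that the lens-axis plane projects to the middle image row, the mapping of the upward shift onto the upper half of the image, and the assumption that the left and right areas each correspond to one walking wheel. The vertical subdivision is omitted because the source text is truncated at that point.

```python
from dataclasses import dataclass


@dataclass
class Region:
    name: str
    col_start: int  # inclusive
    col_end: int    # exclusive
    row_start: int  # inclusive
    row_end: int    # exclusive


def divide_processing_area(image_width, image_height,
                           wheel_width, platform_width,
                           upper_size, total_size):
    """Split the area below the shifted base interface into left/middle/right.

    All parameter names and the pixel mappings are hypothetical; sizes only
    need to share one unit (for example millimetres).
    """
    if not 0 < 2 * wheel_width < platform_width:
        raise ValueError("two wheel widths must fit inside the platform width")
    if total_size <= 0 or not 0 <= upper_size <= total_size:
        raise ValueError("upper_size must lie within [0, total_size]")

    # Assumption: the lens-axis horizontal plane maps to the middle image row.
    axis_row = image_height // 2
    # Assumption: the base interface moves up by the ratio upper/total,
    # applied to the rows above the lens axis.
    top_row = axis_row - round(axis_row * upper_size / total_size)

    # Each side area gets the share of columns that one wheel occupies
    # in the total platform width; the middle area takes the remainder.
    side_cols = round(image_width * wheel_width / platform_width)

    return [
        Region("left", 0, side_cols, top_row, image_height),
        Region("middle", side_cols, image_width - side_cols, top_row, image_height),
        Region("right", image_width - side_cols, image_width, top_row, image_height),
    ]


if __name__ == "__main__":
    for region in divide_processing_area(640, 480, 100, 500, 200, 800):
        print(region)
```

With a 640 x 480 depth image, 100 mm wheels on a 500 mm platform, and an upper portion of 200 mm out of 800 mm, this sketch yields side areas 128 columns wide, a middle area 384 columns wide, and a processing area that starts at row 180.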
