Multi-robot collaborative path following method based on deep learning

The method applies multi-robot and deep learning technology in the computer field. It addresses problems such as large recognition delay, the inability to recognize scene images from different perspectives, and long request response times.


Embodiment Construction

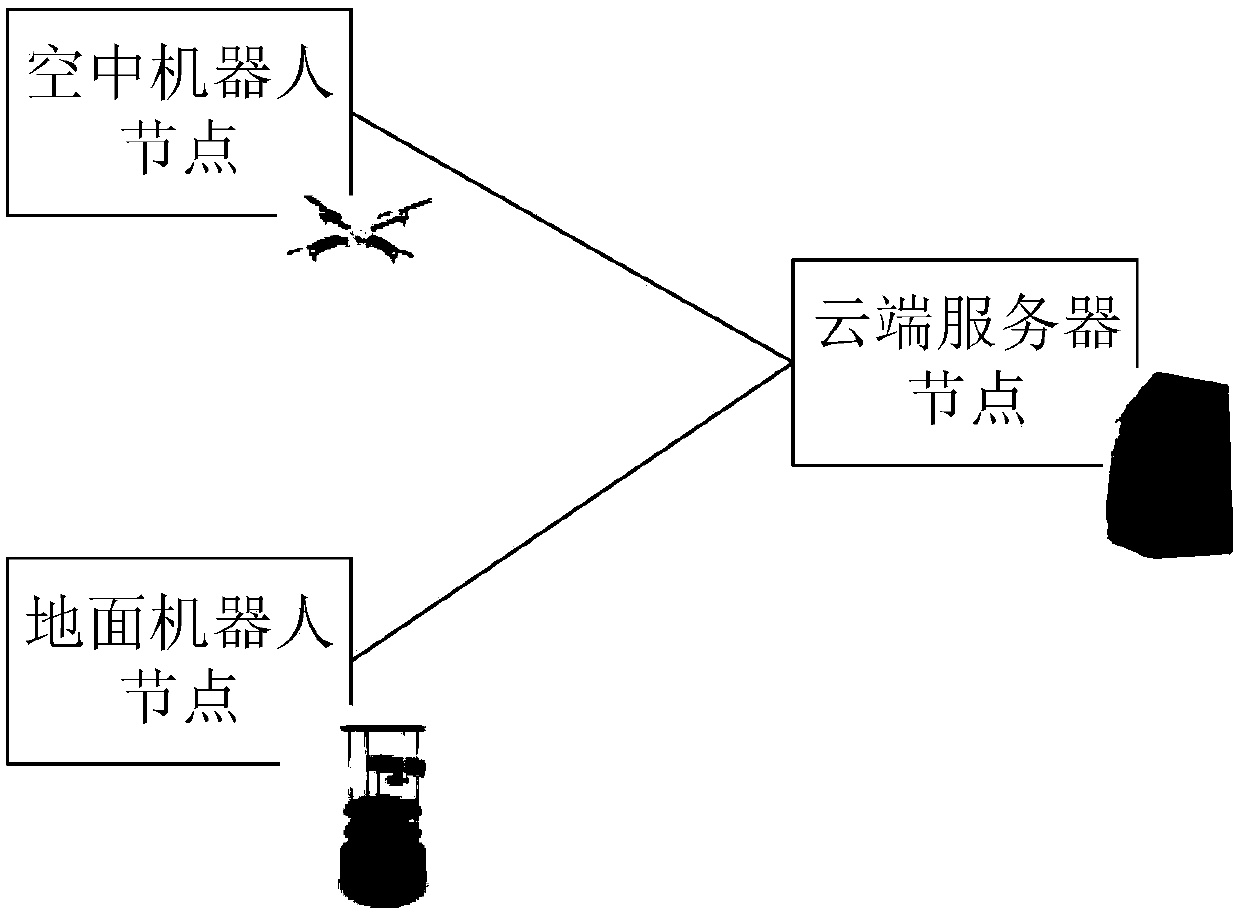

[0072] Figure 1 shows the multi-robot environment constructed in the first step of the present invention. It is composed of ground robot nodes, aerial robot nodes and cloud server nodes. Ground and aerial robot nodes are robot hardware devices (such as unmanned vehicles and drones) that can perceive environmental information and receive speed commands. Cloud server nodes are resource-controllable computing devices with strong computing power that can run computation-intensive or knowledge-intensive robotics applications.
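The node roles in Figure 1 can be modeled as a small data structure. The following is a minimal Python sketch under stated assumptions: the names `NodeKind`, `Node`, `SpeedCommand` and `Environment`, and the units of the speed command, are hypothetical and not taken from the patent. It only encodes the division of labor described above: robot nodes perceive and accept speed commands, while cloud server nodes compute.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional


class NodeKind(Enum):
    GROUND_ROBOT = "ground"  # e.g. an unmanned vehicle
    AERIAL_ROBOT = "aerial"  # e.g. a drone
    CLOUD_SERVER = "cloud"   # resource-controllable, compute-rich server


@dataclass
class SpeedCommand:
    # Hypothetical command format; units are assumed, not from the patent.
    linear: float   # forward speed, assumed m/s
    angular: float  # turn rate, assumed rad/s


@dataclass
class Node:
    name: str
    kind: NodeKind
    last_command: Optional[SpeedCommand] = None

    def is_robot(self) -> bool:
        return self.kind in (NodeKind.GROUND_ROBOT, NodeKind.AERIAL_ROBOT)

    def receive_speed_command(self, cmd: SpeedCommand) -> None:
        # Only robot nodes move; cloud nodes compute commands but never execute them.
        if not self.is_robot():
            raise ValueError(f"{self.name} is a cloud server node and cannot move")
        self.last_command = cmd


@dataclass
class Environment:
    nodes: List[Node] = field(default_factory=list)

    def add(self, node: Node) -> Node:
        self.nodes.append(node)
        return node

    def robots(self) -> List[Node]:
        return [n for n in self.nodes if n.is_robot()]

    def servers(self) -> List[Node]:
        return [n for n in self.nodes if n.kind is NodeKind.CLOUD_SERVER]


if __name__ == "__main__":
    env = Environment()
    ugv = env.add(Node("ugv1", NodeKind.GROUND_ROBOT))
    env.add(Node("uav1", NodeKind.AERIAL_ROBOT))
    env.add(Node("cloud1", NodeKind.CLOUD_SERVER))
    ugv.receive_speed_command(SpeedCommand(linear=0.5, angular=0.1))
    print(len(env.robots()), len(env.servers()), ugv.last_command)
```

Keeping ground and aerial robots under one `is_robot` check reflects that the text treats them alike (both perceive and accept speed commands) and differ only in platform.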

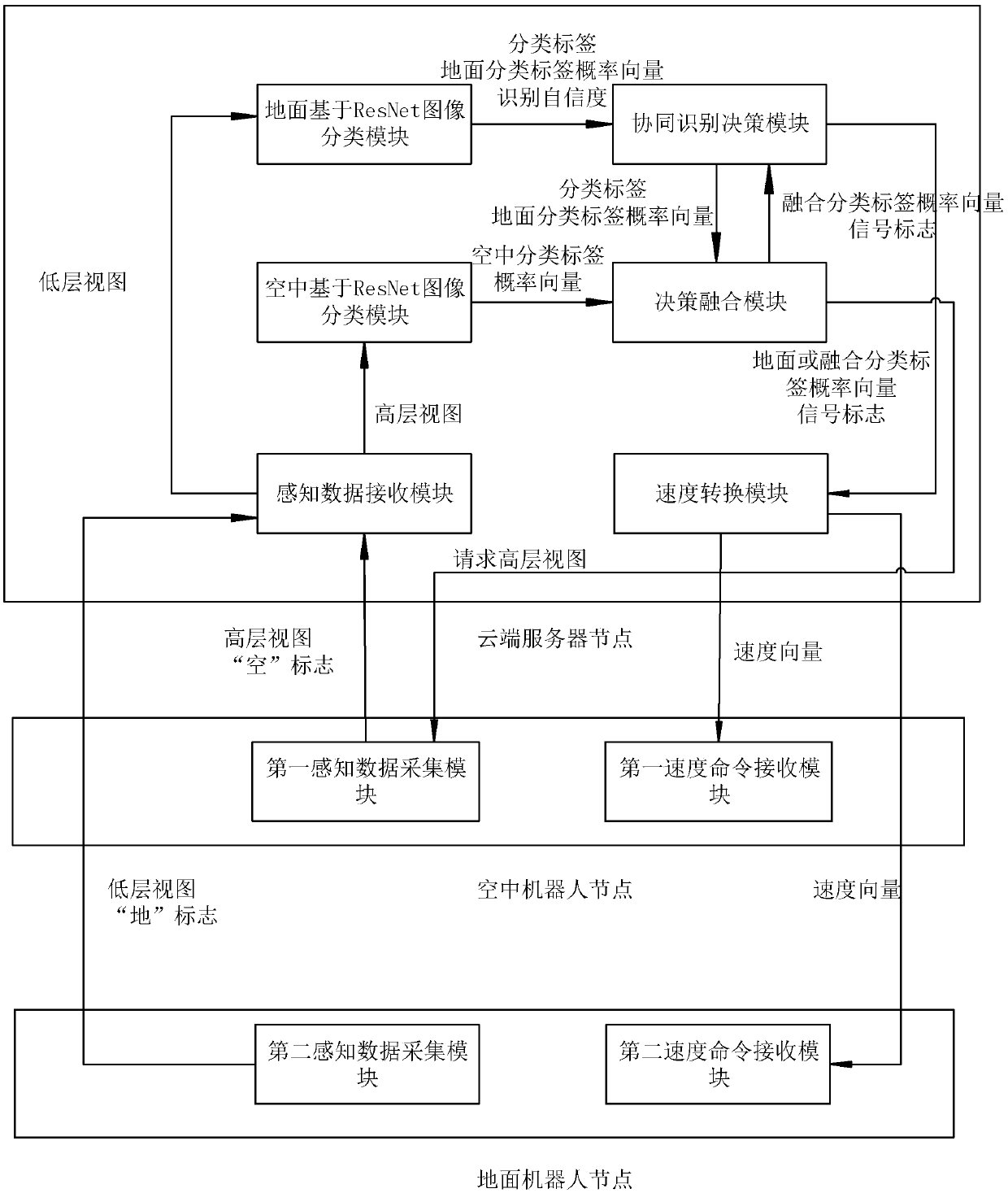

[0073] Figure 2 is the software deployment diagram for the ground robot nodes, aerial robot nodes and cloud server nodes of the present invention. A robot computing node is a robot hardware device that can move in the environment and receive speed commands, and it carries a camera sensor. The cloud server nodes run the Ubuntu operating system and the Caffe deep learning framework. In addition, the ground and aerial robot nodes are also equipped with a percepti...
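One way to picture the deployment in Figure 2 is a cloud-side service that receives camera frames from robot nodes and returns a recognition result. The sketch below is illustrative only: `CloudRecognizer`, `Frame`, `Recognition` and `stub_model` are hypothetical names, and `stub_model` is a trivial brightness-threshold stand-in, not a Caffe network. A real deployment would replace it with the model's forward pass. Timing each call shows where recognition latency would be measured on the server.

```python
import time
from dataclasses import dataclass
from typing import Callable, List, Sequence


@dataclass
class Frame:
    robot_id: str
    pixels: Sequence[float]  # flattened grayscale image in [0, 1] (assumed format)


@dataclass
class Recognition:
    robot_id: str
    label: str
    latency_s: float  # wall-clock time spent on the cloud side


def stub_model(pixels: Sequence[float]) -> str:
    """Hypothetical stand-in for a deep model's forward pass (not Caffe)."""
    mean = sum(pixels) / len(pixels)
    return "obstacle" if mean > 0.5 else "free"


class CloudRecognizer:
    """Hypothetical cloud-server service that classifies frames sent by robots."""

    def __init__(self, model: Callable[[Sequence[float]], str]) -> None:
        self.model = model

    def handle(self, frame: Frame) -> Recognition:
        start = time.perf_counter()
        label = self.model(frame.pixels)
        return Recognition(frame.robot_id, label, time.perf_counter() - start)

    def handle_batch(self, frames: List[Frame]) -> List[Recognition]:
        # Frames from ground and aerial robots give different views of one scene.
        return [self.handle(f) for f in frames]


if __name__ == "__main__":
    recognizer = CloudRecognizer(stub_model)
    for r in recognizer.handle_batch([Frame("ugv1", [0.9, 0.8]), Frame("uav1", [0.1, 0.2])]):
        print(r.robot_id, r.label, f"{r.latency_s:.6f}s")
```

Injecting the model as a callable keeps the service independent of the framework, so the stub can be swapped for a real network without changing the request-handling code.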
