A computing device and method

A computing device and method in the field of artificial intelligence. It addresses problems such as the inability to further increase computing speed and low flexibility. It reduces the amount of computation, lowers power and energy consumption, and offers high flexibility.

Examples

Embodiment 1

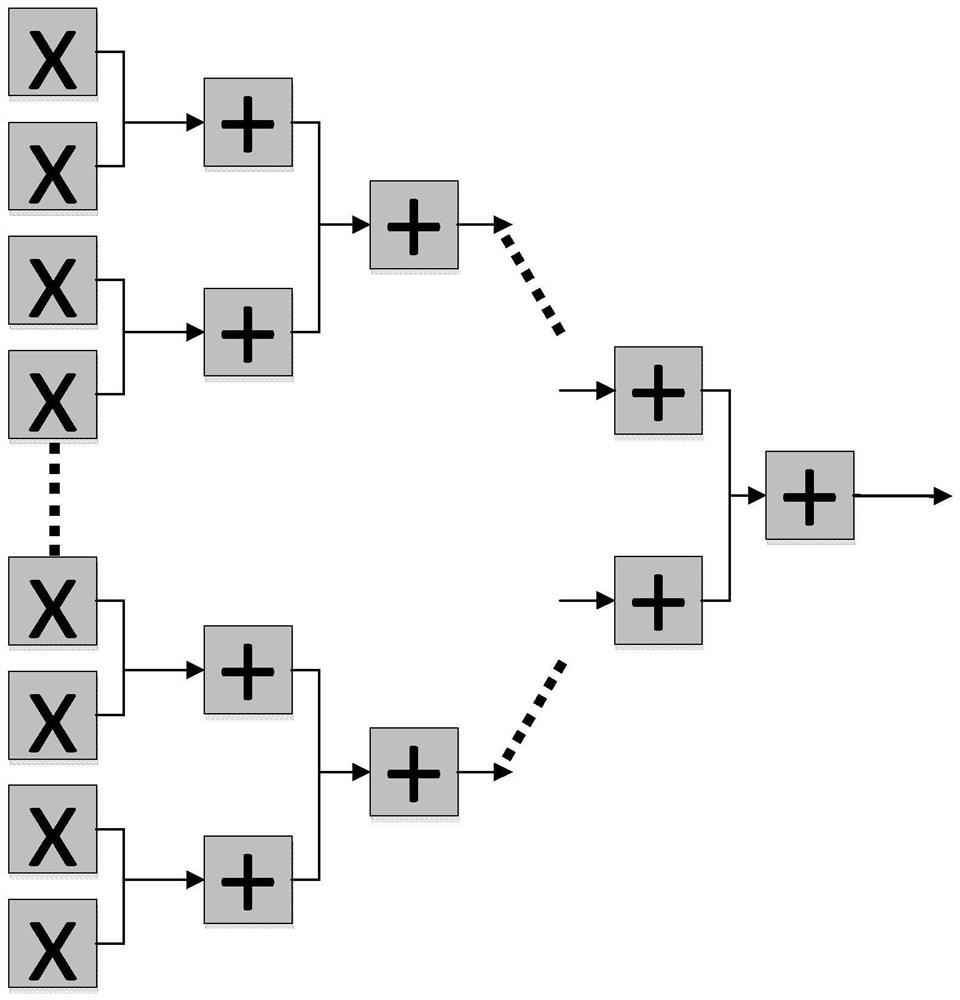

[0080] As shown in Figure 4, in Embodiment 1 the arithmetic unit of the operation module includes n multipliers (n is a positive integer) and an n-input adder tree. The operation control unit controls the operation module as a whole and can send control signals to each multiplier. Besides the data to be computed, each adder and multiplier can also receive control signals and pass control signals on to the next level.
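The data path of Embodiment 1 can be sketched in software as n parallel multiplications followed by a pairwise adder-tree reduction. This is a minimal functional model under stated assumptions, not the patented circuit: the function names (`multiply_stage`, `adder_tree`) and the zero-padding of odd-sized tree levels are illustrative choices, and control signals are not modelled.

```python
from typing import List, Tuple


def multiply_stage(neurons: List[float], synapses: List[float]) -> List[float]:
    """Model the n multipliers: one product per neuron/synapse pair."""
    if len(neurons) != len(synapses):
        raise ValueError("each multiplier needs one neuron and one synapse")
    return [x * w for x, w in zip(neurons, synapses)]


def adder_tree(values: List[float]) -> Tuple[float, int]:
    """Reduce the inputs pairwise, level by level, as an n-input adder tree would.

    Returns the sum and the number of adder levels used (ceil(log2 n) for n >= 1).
    """
    level = list(values)
    depth = 0
    while len(level) > 1:
        if len(level) % 2:           # pad an odd-sized level with 0 (assumption)
            level.append(0.0)
        level = [level[i] + level[i + 1] for i in range(0, len(level), 2)]
        depth += 1
    return (level[0] if level else 0.0), depth


if __name__ == "__main__":
    neurons = [1.0, 2.0, 3.0, 4.0, 5.0]
    synapses = [0.5, -1.0, 2.0, 0.0, 1.0]
    products = multiply_stage(neurons, synapses)
    total, depth = adder_tree(products)
    print(total, depth)  # prints: 9.5 3
```

With n = 5 products the tree needs three adder levels, which is why an adder tree is attractive: the latency grows with log2 n rather than with n.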

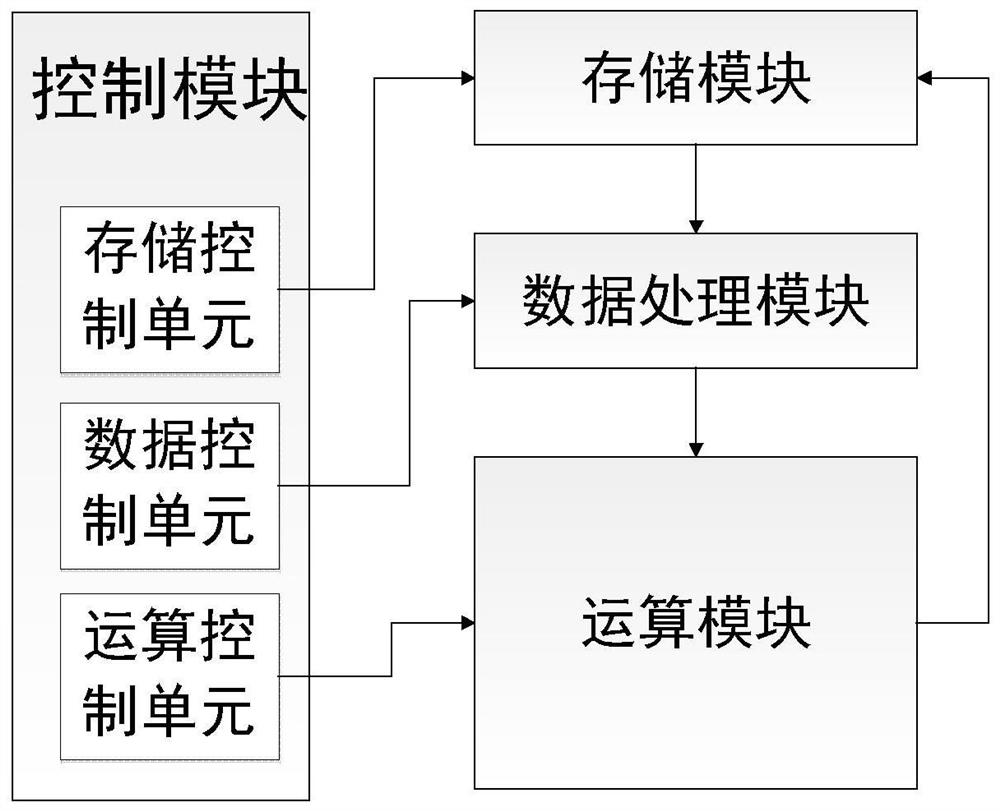

[0081] The operation process of the low-power neural network operation device using the operation module of Embodiment 1 is as follows. First, the storage control unit controls the storage module to read out the neuron data and synapse data. If the synapse data is stored in sparse-coded form, the neuron data and the synapse index values must be transmitted to the data processing module, and the neuron data...
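Paragraph [0081] is truncated, but the general idea of sparse coding can be illustrated: only nonzero synapses are stored, together with an index recording their positions, and the data processing module uses that index to select the matching neurons so that the multipliers only see useful pairs. The index format here (a list of positions) and the helper names `sparse_encode`, `compress_neurons` and `sparse_dot` are hypothetical; the patent may use a different encoding, such as a bitmask.

```python
from typing import List, Tuple


def sparse_encode(synapses: List[float]) -> Tuple[List[float], List[int]]:
    """Hypothetical sparse coding: keep nonzero synapse values and their positions."""
    index = [i for i, w in enumerate(synapses) if w != 0]
    values = [synapses[i] for i in index]
    return values, index


def compress_neurons(neurons: List[float], index: List[int]) -> List[float]:
    """Model the data processing module: keep neurons that have a nonzero synapse."""
    return [neurons[i] for i in index]


def sparse_dot(neurons: List[float], values: List[float], index: List[int]) -> float:
    """Multiply-accumulate over the compressed pairs only."""
    compressed = compress_neurons(neurons, index)
    return sum(x * w for x, w in zip(compressed, values))


if __name__ == "__main__":
    synapses = [0.0, 1.5, 0.0, 0.0, -2.0, 0.5]
    neurons = [3.0, 2.0, 7.0, 1.0, 4.0, 6.0]
    values, index = sparse_encode(synapses)
    print(index)                               # [1, 4, 5]
    print(sparse_dot(neurons, values, index))  # 3.0 - 8.0 + 3.0 = -2.0
```

The result equals the dense dot product, but only three multiplications are performed instead of six, which is the source of the computation and power savings claimed for sparse storage.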

Embodiment 2

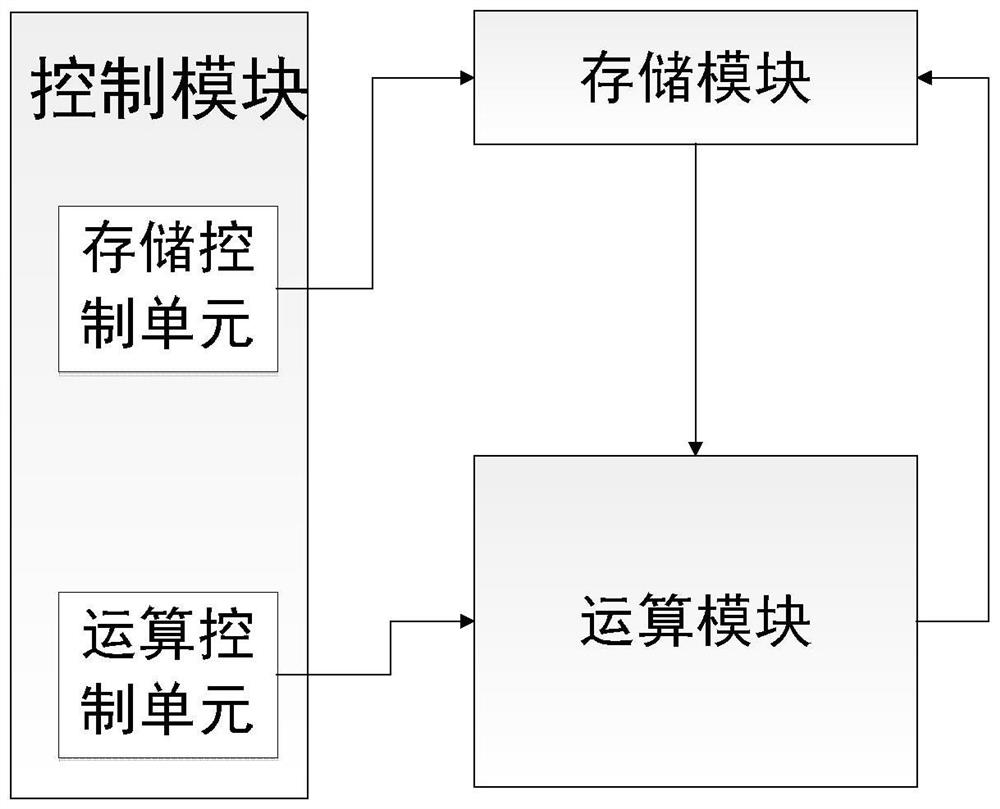

[0084] As shown in Figure 5, in Embodiment 2 the operation module includes n arithmetic units (n is a positive integer), each containing a multiplier, an adder, and a temporary cache, and the operation control unit is likewise split up so that each operation group has its own. Synapse data is broadcast to every computing unit, while neurons are transmitted directly from the storage part.

[0085] The calculation process of the low-power neural network computing device using the computing module of Embodiment 2 is as follows. First, the temporary cache in each computing unit is initialized to 0. The storage control unit controls the storage module to read out the neuron data. If the synapse data is stored in sparse-coded form, the synapse index values and the neuron data are transmitted to the data processing module, and, according to the index value, the neuron data is compress...
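The broadcast data flow of Embodiment 2 can be modelled as n multiply-accumulate units, each keeping a running sum in a temporary cache initialized to 0. At every step one synapse value is broadcast to all units, while each unit receives its own neuron value. Giving each unit a separate neuron stream (for example, one input sample per unit) is an assumption made for illustration, as are the names `MacUnit` and `broadcast_run`.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class MacUnit:
    """One computing unit: a multiplier, an adder and a temporary cache."""
    cache: float = 0.0  # temporary cache, initially set to 0

    def step(self, neuron: float, synapse: float) -> None:
        product = neuron * synapse          # multiplier
        self.cache = self.cache + product   # adder writes the sum back to the cache


def broadcast_run(neuron_streams: List[List[float]], synapses: List[float]) -> List[float]:
    """Broadcast each synapse to all units; unit k consumes neuron_streams[k]."""
    units = [MacUnit() for _ in neuron_streams]
    for t, w in enumerate(synapses):                 # one broadcast per step
        for unit, stream in zip(units, neuron_streams):
            unit.step(stream[t], w)
    return [u.cache for u in units]


if __name__ == "__main__":
    synapses = [1.0, 2.0, -1.0]
    streams = [[1.0, 1.0, 1.0], [0.0, 3.0, 2.0]]     # n = 2 units
    print(broadcast_run(streams, synapses))          # [2.0, 4.0]
```

Broadcasting lets a single synapse read serve all n units in the same step, which reduces storage accesses compared with fetching the synapse separately for every unit.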

Embodiment 3

[0087] As shown in Figure 6, in Embodiment 3 the computing module includes n computing units, each containing a multiplier, an adder, and a selector. The operation control unit controls each operation unit separately, and partial sums are passed between the operation units.
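In Embodiment 3 the partial sum travels from one computing unit to the next instead of being collected by an adder tree. The sketch below models that chain. As an illustration only, it assumes that the selector in the first unit chooses 0 rather than an incoming partial sum, and that every later unit selects the partial sum passed from its predecessor; the names `unit_step` and `chain_dot` are hypothetical.

```python
from typing import List


def unit_step(neuron: float, synapse: float, incoming: float, first: bool) -> float:
    """One unit: the selector picks the addend, then multiply and add."""
    addend = 0.0 if first else incoming   # selector (assumed behaviour)
    return addend + neuron * synapse      # multiplier + adder


def chain_dot(neurons: List[float], synapses: List[float]) -> List[float]:
    """Pass the partial sum along the chain; return every unit's output."""
    partial_sums = []
    psum = 0.0
    for k, (x, w) in enumerate(zip(neurons, synapses)):
        psum = unit_step(x, w, psum, first=(k == 0))
        partial_sums.append(psum)
    return partial_sums  # the last entry is the full dot product


if __name__ == "__main__":
    print(chain_dot([1.0, 2.0, 3.0], [4.0, 5.0, 6.0]))  # [4.0, 14.0, 32.0]
```

Passing partial sums between neighbours only requires short local connections, whereas the n-input adder tree of Embodiment 1 must gather all products at once.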

[0088] The operation process of the low-power neural network computing device using the computing module of Embodiment 3 is as follows. First, the storage control unit reads the synapse values from the storage module and sends them to the data processing module. The data processing module compresses the synapses using a compression threshold: it selects only the synapse values whose absolute value is not less than the given threshold and transmits them to the respective computing units, where the synapse values are held unchanged. Next, the neuron values are read from the storage module and, after the corresponding compression by...
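The threshold-based compression in [0088] can be sketched as follows: synapses whose absolute value is below the compression threshold are dropped, the positions of the kept synapses are recorded, and the neuron values at those positions are selected so that each surviving synapse stays paired with its neuron. The function names and the threshold value used in the example are hypothetical.

```python
from typing import List, Tuple


def threshold_compress(synapses: List[float], threshold: float) -> Tuple[List[float], List[int]]:
    """Keep synapses with |w| >= threshold (values unchanged) and their positions."""
    kept_index = [i for i, w in enumerate(synapses) if abs(w) >= threshold]
    kept_values = [synapses[i] for i in kept_index]
    return kept_values, kept_index


def compress_neurons(neurons: List[float], kept_index: List[int]) -> List[float]:
    """Apply the corresponding compression to the neuron values."""
    return [neurons[i] for i in kept_index]


if __name__ == "__main__":
    synapses = [0.02, -0.8, 0.3, -0.05, 0.1]
    neurons = [5.0, 1.0, 2.0, 4.0, 3.0]
    values, index = threshold_compress(synapses, threshold=0.1)
    print(values, index)                     # [-0.8, 0.3, 0.1] [1, 2, 4]
    print(compress_neurons(neurons, index))  # [1.0, 2.0, 3.0]
```

Unlike exact sparse coding, this compression discards small but nonzero synapses, so the result approximates the dense computation; a higher threshold removes more multiplications at the cost of accuracy.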
