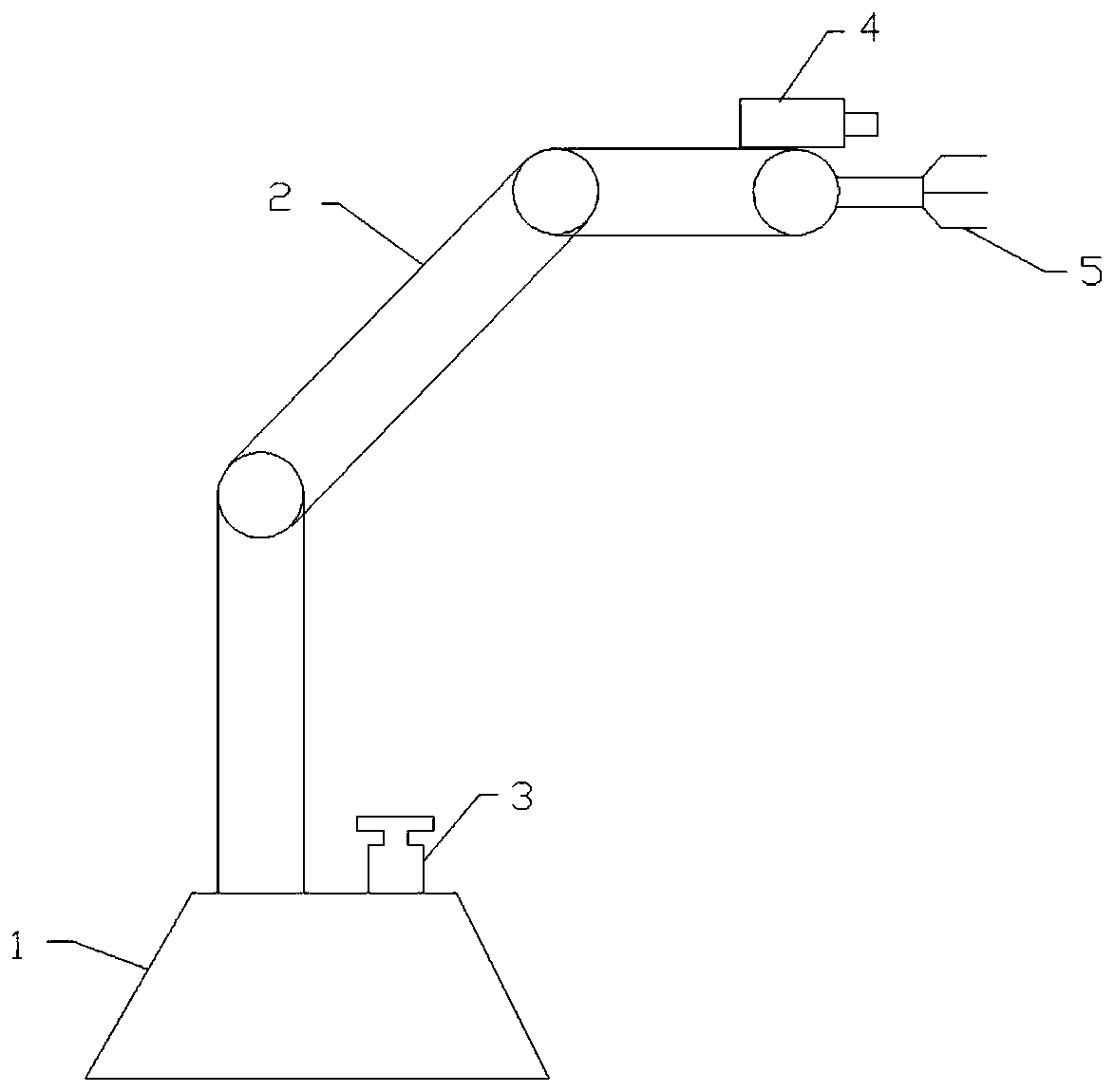

Device and method for active grasping of manipulator based on multimodal fusion

A technology in the field of grasping devices and manipulators, applicable to manipulators, program-controlled manipulators, manufacturing tools, and the like. It addresses the lack of an automatic real-time interaction and learning process, the difficulty of grasping, and sensor disturbance. It improves positioning and active grasping ability, avoids strong-light interference, and raises the grasping success rate.

Detailed Description of Embodiments

[0034] The present invention is described in detail below in conjunction with specific embodiments. The following examples will help those skilled in the art further understand the present invention but do not limit it in any form. It should be noted that those of ordinary skill in the art can make several changes and improvements without departing from the concept of the present invention, and all of these fall within the protection scope of the present invention.

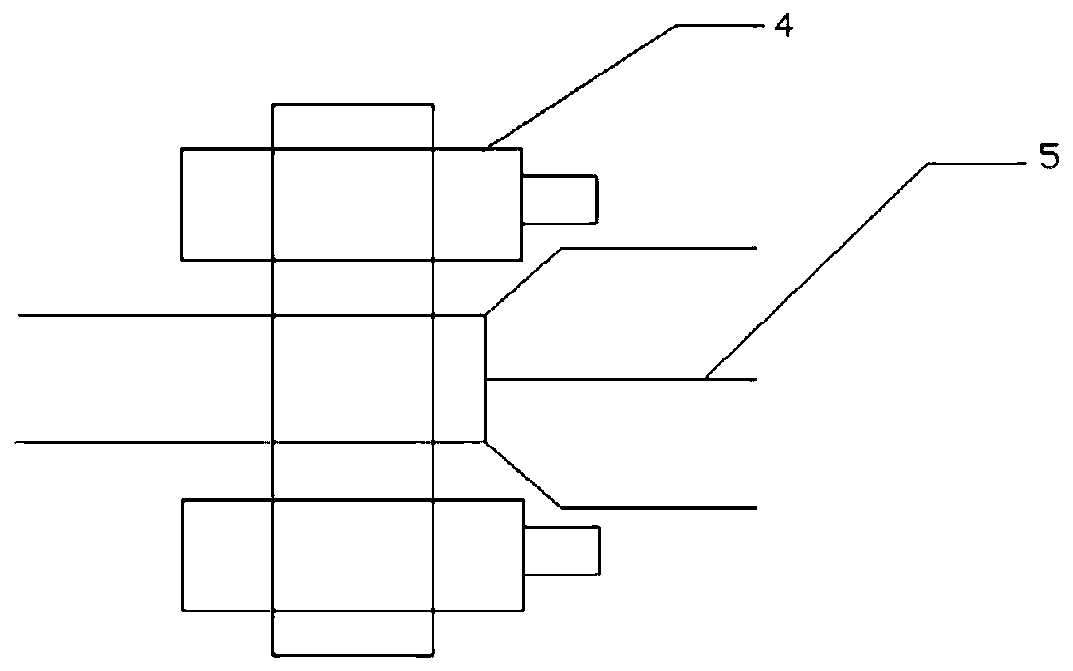

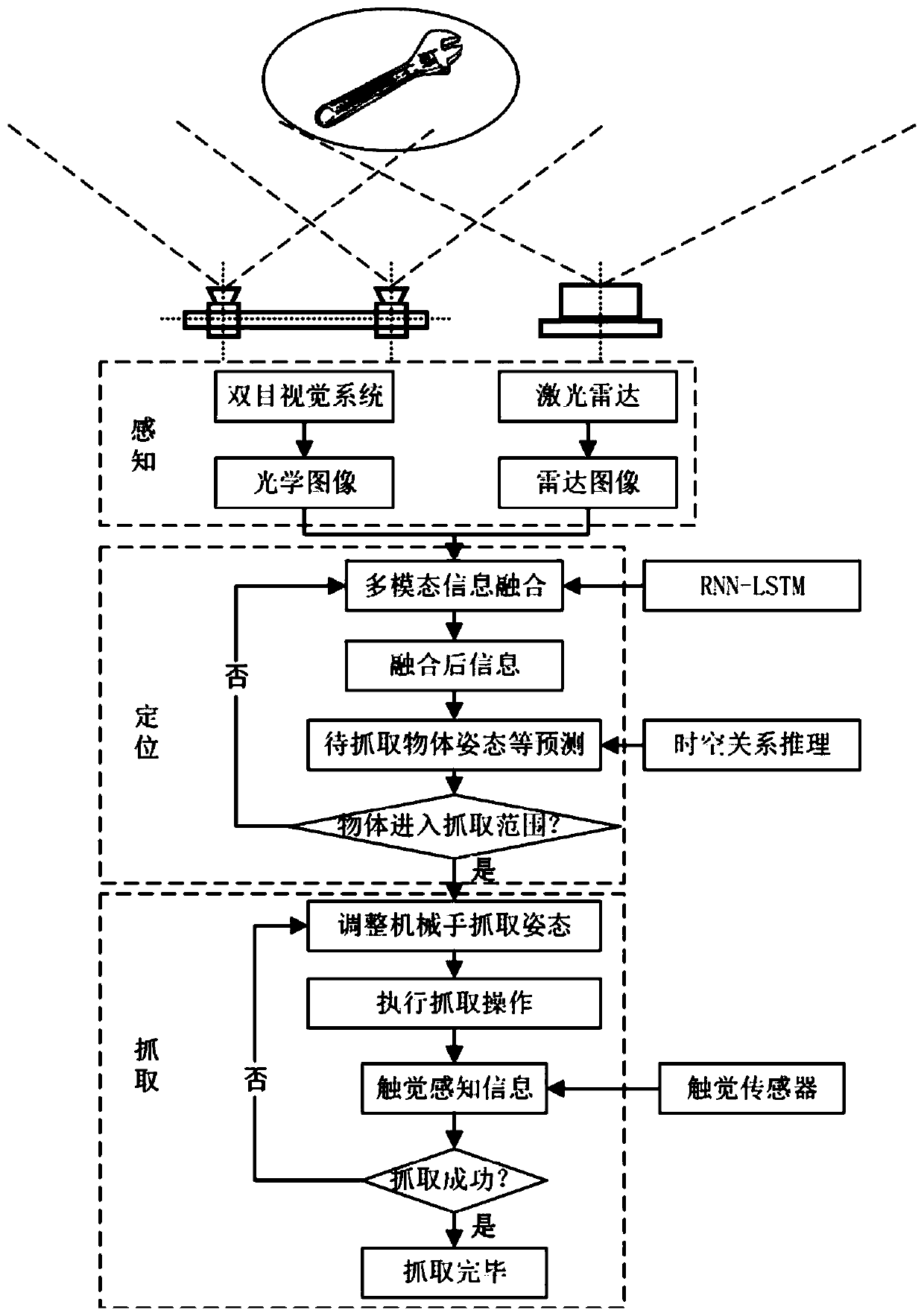

[0035] The invention addresses the problem that, in space, environmental factors such as poor lighting and electromagnetic fields make it difficult for a binocular vision system to accurately obtain information about a moving object to be grasped. It introduces a lidar to monitor surrounding objects in real time in a microgravity environment, and uses a recurrent neural network with long short-term memory (the RNN-LSTM algorithm) for information fusion of radar images and v...
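
The description is truncated before it specifies the network, so the following is only a minimal sketch of how an LSTM can fuse per-frame lidar and vision features over time. It assumes early fusion, meaning the two feature vectors for each frame are simply concatenated before entering a single LSTM cell. The names `LSTMCell` and `fuse_sequence`, the feature sizes, and the random, untrained weights are all hypothetical and are not taken from the patent.

```python
import math
import random
from typing import List, Tuple

Vector = List[float]
Matrix = List[Vector]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def matvec(w: Matrix, v: Vector) -> Vector:
    # Dense matrix-vector product.
    return [sum(wi * vi for wi, vi in zip(row, v)) for row in w]


class LSTMCell:
    """Hypothetical single LSTM cell (input, forget, output gates + candidate)."""

    def __init__(self, input_size: int, hidden_size: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        cols = input_size + hidden_size  # each gate sees [x, h_prev]

        def init() -> Matrix:
            # Small random weights: illustrative only, NOT trained.
            return [[rng.uniform(-0.1, 0.1) for _ in range(cols)]
                    for _ in range(hidden_size)]

        self.input_size = input_size
        self.hidden_size = hidden_size
        self.w_i, self.w_f, self.w_o, self.w_g = init(), init(), init(), init()
        self.b_i = [0.0] * hidden_size
        self.b_f = [1.0] * hidden_size  # forget bias of 1 is a common init choice
        self.b_o = [0.0] * hidden_size
        self.b_g = [0.0] * hidden_size

    def step(self, x: Vector, h: Vector, c: Vector) -> Tuple[Vector, Vector]:
        z = x + h  # concatenate current input with previous hidden state
        i = [sigmoid(a + b) for a, b in zip(matvec(self.w_i, z), self.b_i)]
        f = [sigmoid(a + b) for a, b in zip(matvec(self.w_f, z), self.b_f)]
        o = [sigmoid(a + b) for a, b in zip(matvec(self.w_o, z), self.b_o)]
        g = [math.tanh(a + b) for a, b in zip(matvec(self.w_g, z), self.b_g)]
        c_new = [fi * ci + ii * gi for fi, ci, ii, gi in zip(f, c, i, g)]
        h_new = [oi * math.tanh(ci) for oi, ci in zip(o, c_new)]
        return h_new, c_new


def fuse_sequence(lidar_seq: List[Vector], vision_seq: List[Vector],
                  cell: LSTMCell) -> Vector:
    """Run the LSTM over time-aligned lidar/vision frames; return final hidden state."""
    if len(lidar_seq) != len(vision_seq):
        raise ValueError("lidar and vision sequences must have the same length")
    h = [0.0] * cell.hidden_size
    c = [0.0] * cell.hidden_size
    for lidar_feat, vision_feat in zip(lidar_seq, vision_seq):
        x = lidar_feat + vision_feat  # early fusion by concatenation (assumption)
        if len(x) != cell.input_size:
            raise ValueError("fused feature size does not match cell input_size")
        h, c = cell.step(x, h, c)
    return h


if __name__ == "__main__":
    # Hypothetical 3-D lidar features and 2-D vision features for two frames.
    lidar = [[1.0, 0.5, 0.2], [1.1, 0.4, 0.2]]
    vision = [[0.3, 0.7], [0.2, 0.8]]
    cell = LSTMCell(input_size=5, hidden_size=4)
    print(fuse_sequence(lidar, vision, cell))
```

In a real system, the final hidden state would presumably feed a trained output layer that estimates, for example, the target's position for the grasp planner. Concatenation is only the simplest fusion choice. When strong light degrades the vision channel, the lidar features still reach the recurrent state, which fits the motivation stated above, though the patent's actual fusion scheme is not shown here.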
