Vision-assisted landing navigation method for fixed-wing aircraft under low visibility

A low-visibility navigation technology, applied in fields such as navigation, navigation by speed/acceleration measurement, and mapping. It addresses several problems: susceptibility to reflections from surrounding terrain, GPS signals that are easily interfered with or shielded, and the low navigation accuracy of ILS. It achieves a significant perspective effect, low cost, and the elimination of accumulated inertial errors.

Detailed Description of the Embodiments

[0017] The vision-assisted landing navigation method for fixed-wing aircraft under low visibility according to the present invention mainly comprises the following aspects:

[0018] 1. Framework of the visual landing navigation method

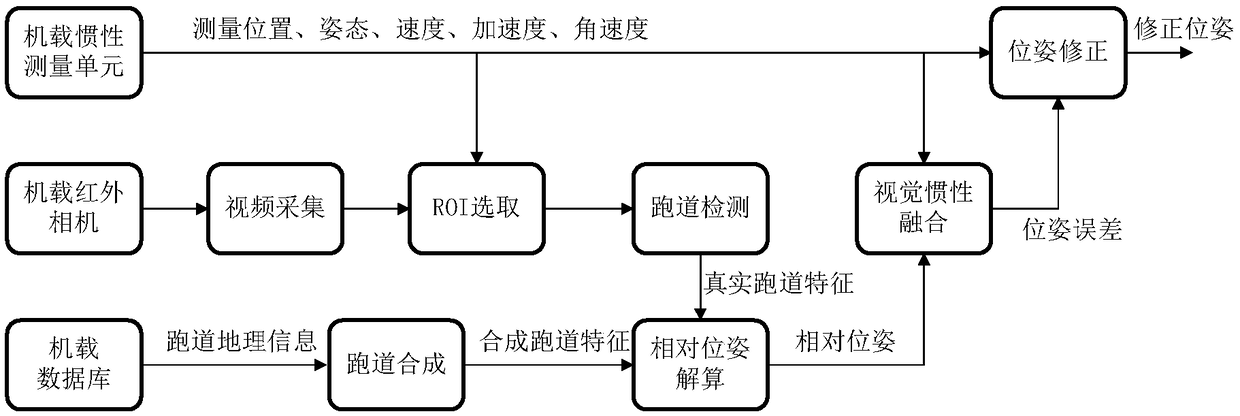

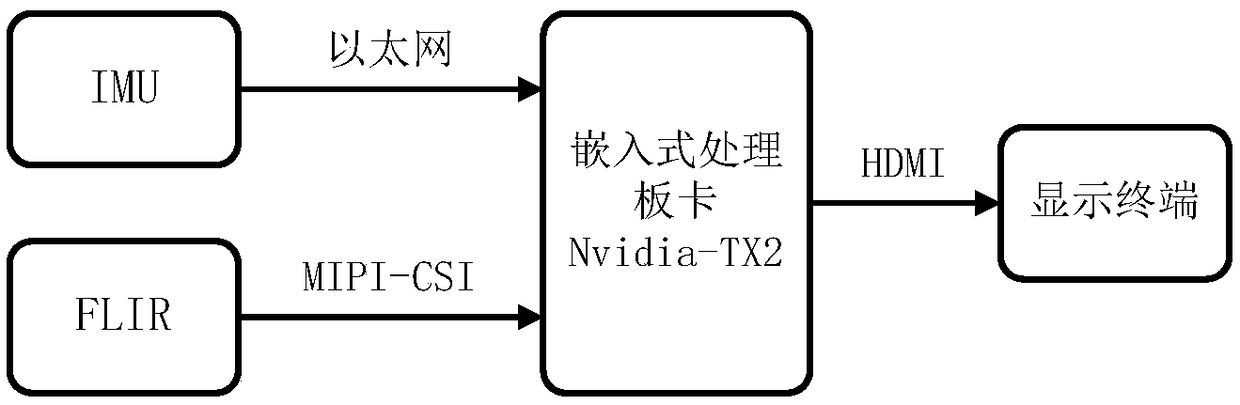

[0019] The input data of this method come from three sources: the airborne inertial measurement unit (IMU), the airborne forward-looking infrared (FLIR) camera, and the airborne navigation database. The output data are the corrected position and attitude. The overall algorithm consists of the following main parts:

- video acquisition
- runway region-of-interest (ROI) selection
- runway detection
- runway synthesis
- relative pose calculation
- visual-inertial fusion
- pose correction

See figure 1 for the flow chart. The specific information processing flow is as follows:

[0020] 1) Infrared video data stream: after the infrared video captured by the FLIR is acquired, the ROI is selected from the full image using an inertial-parameter-assisted method, and then the image features of the f...
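Step 1) selects the ROI with the help of inertial parameters. One common way to do this is to project the runway corner coordinates from the navigation database into the image, using the INS pose and a pinhole camera model. The ROI is then the bounding box of those points plus a safety margin that absorbs INS error.

The following is only a sketch under assumed conditions:

- Runway corners and the aircraft position are given in a local North-East-Down (NED) frame, in metres.
- Attitude is a Z-Y-X (yaw, pitch, roll) Euler sequence.
- The camera boresight lies along the body x-axis.
- The margin is a fixed number of pixels.

The `Camera` fields and all function names are hypothetical and do not come from the patent.

```python
import math
from dataclasses import dataclass


@dataclass
class Camera:
    """Hypothetical pinhole intrinsics for the FLIR, in pixels."""
    fx: float
    fy: float
    cx: float
    cy: float
    width: int
    height: int


def ned_to_body(yaw, pitch, roll):
    """DCM from local NED to body axes for a Z-Y-X (yaw, pitch, roll) sequence; radians."""
    cy, sy = math.cos(yaw), math.sin(yaw)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cr, sr = math.cos(roll), math.sin(roll)
    return [
        [cp * cy, cp * sy, -sp],
        [sr * sp * cy - cr * sy, sr * sp * sy + cr * cy, sr * cp],
        [cr * sp * cy + sr * sy, cr * sp * sy - sr * cy, cr * cp],
    ]


def project(point_ned, pos_ned, c_nb, cam):
    """Project a NED point to (u, v) pixels, or return None if it lies behind the camera."""
    d = [point_ned[i] - pos_ned[i] for i in range(3)]
    b = [sum(c_nb[r][k] * d[k] for k in range(3)) for r in range(3)]
    # Assumed mounting: optical axis = body x, image right = body y, image down = body z.
    xc, yc, zc = b[1], b[2], b[0]
    if zc <= 0.0:
        return None
    return cam.fx * xc / zc + cam.cx, cam.fy * yc / zc + cam.cy


def runway_roi(corners_ned, pos_ned, yaw, pitch, roll, cam, margin_px):
    """Bounding box (u0, v0, u1, v1) of the projected runway, padded and clipped to the image."""
    c_nb = ned_to_body(yaw, pitch, roll)
    pts = [p for p in (project(c, pos_ned, c_nb, cam) for c in corners_ned) if p is not None]
    if not pts:
        return None  # runway is entirely behind the camera
    us = [u for u, _ in pts]
    vs = [v for _, v in pts]
    # The margin is assumed to cover INS position/attitude error.
    u0 = max(0, math.floor(min(us) - margin_px))
    v0 = max(0, math.floor(min(vs) - margin_px))
    u1 = min(cam.width - 1, math.ceil(max(us) + margin_px))
    v1 = min(cam.height - 1, math.ceil(max(vs) + margin_px))
    if u0 > u1 or v0 > v1:
        return None  # runway projects entirely outside the frame
    return u0, v0, u1, v1


if __name__ == "__main__":
    # Illustrative 45 m x 3000 m runway whose threshold is 2 km ahead; aircraft at 100 m height.
    corners = [(2000.0, -22.5, 0.0), (2000.0, 22.5, 0.0),
               (5000.0, -22.5, 0.0), (5000.0, 22.5, 0.0)]
    cam = Camera(1000.0, 1000.0, 320.0, 256.0, 640, 512)
    print(runway_roi(corners, (0.0, 0.0, -100.0), 0.0, 0.0, 0.0, cam, 20.0))  # (288, 256, 352, 326)
```

Only the ROI then needs to be searched during runway detection, which reduces the processing load. The same projection could also serve as a synthetic runway outline, but the patent text shown here does not say how its runway synthesis is done.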
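The visual-inertial fusion and pose correction stages listed in [0019] are where the accumulated inertial error can be removed. The patent text shown here does not specify the fusion filter.

The following is therefore only a minimal loosely coupled sketch under assumed conditions:

- It covers a single axis.
- A two-state Kalman filter tracks the INS error (position error and velocity error).
- Vision-derived position fixes arrive at 1 Hz.
- All noise values are made up.

`InsErrorKF` and `simulate` are hypothetical names. A practical filter would also estimate attitude errors and use the full 3-D pose from the relative pose calculation.

```python
import random


class InsErrorKF:
    """Single-axis Kalman filter on the INS error state [position error, velocity error].

    Hypothetical loosely coupled sketch: the measurement is (INS position - vision position).
    """

    def __init__(self, q_pos, q_vel, r):
        self.x = [0.0, 0.0]                  # estimated [position error, velocity error]
        self.P = [[100.0, 0.0], [0.0, 1.0]]  # initial covariance (assumed)
        self.q_pos, self.q_vel, self.r = q_pos, q_vel, r

    def predict(self, dt):
        # Constant-velocity error model: F = [[1, dt], [0, 1]]; P <- F P F^T + Q.
        e, de = self.x
        self.x = [e + de * dt, de]
        P = self.P
        p00 = P[0][0] + dt * (P[0][1] + P[1][0]) + dt * dt * P[1][1] + self.q_pos * dt
        p01 = P[0][1] + dt * P[1][1]
        p10 = P[1][0] + dt * P[1][1]
        p11 = P[1][1] + self.q_vel * dt
        self.P = [[p00, p01], [p10, p11]]

    def update(self, z):
        # H = [1, 0]: only the position error is observed.
        P = self.P
        s = P[0][0] + self.r
        k0, k1 = P[0][0] / s, P[1][0] / s
        y = z - self.x[0]
        self.x = [self.x[0] + k0 * y, self.x[1] + k1 * y]
        # P <- (I - K H) P
        self.P = [[(1.0 - k0) * P[0][0], (1.0 - k0) * P[0][1]],
                  [P[1][0] - k1 * P[0][0], P[1][1] - k1 * P[0][1]]]


def simulate(steps=60, dt=1.0, speed=70.0, vel_bias=0.5, vis_sigma=2.0, seed=0):
    """Return (raw INS error, fused error) in metres after `steps` updates along one axis."""
    rng = random.Random(seed)
    kf = InsErrorKF(q_pos=0.01, q_vel=1e-4, r=vis_sigma ** 2)
    p_true = p_ins = 0.0
    for _ in range(steps):
        p_true += speed * dt
        p_ins += (speed + vel_bias) * dt            # biased velocity makes the INS drift
        kf.predict(dt)
        p_vis = p_true + rng.gauss(0.0, vis_sigma)  # noisy vision-derived position fix
        kf.update(p_ins - p_vis)                    # observe the current INS error
    p_fused = p_ins - kf.x[0]                       # feed the estimate back as a correction
    return p_ins - p_true, p_fused - p_true


if __name__ == "__main__":
    print(simulate())
```

The raw INS error grows linearly with the velocity bias, reaching 30 m after 60 s in this simulation. Subtracting the filter's position-error estimate keeps the fused error on the order of the vision measurement noise. This is the sense in which visual fixes bound the inertial drift.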
