Network processor and network operation method

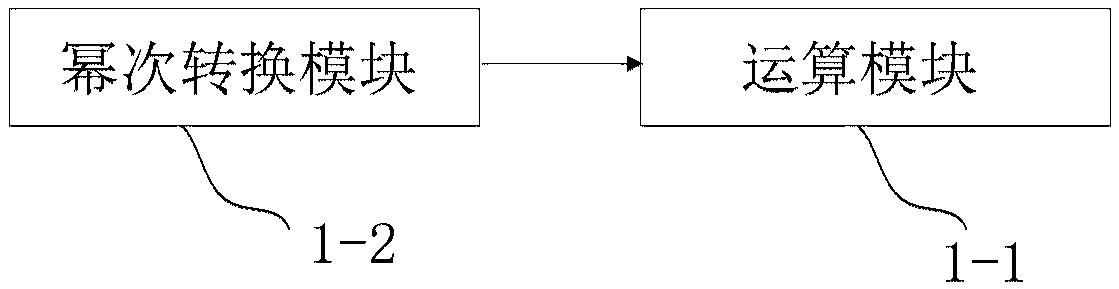

A network operation method and processor, applied in the field of artificial intelligence, that addresses problems such as complex processor structure, wasted hardware resources, and considerable remaining room for optimization, and that achieves lower power consumption, simpler multiplication operations, and faster operation.

Examples

Specific example 1

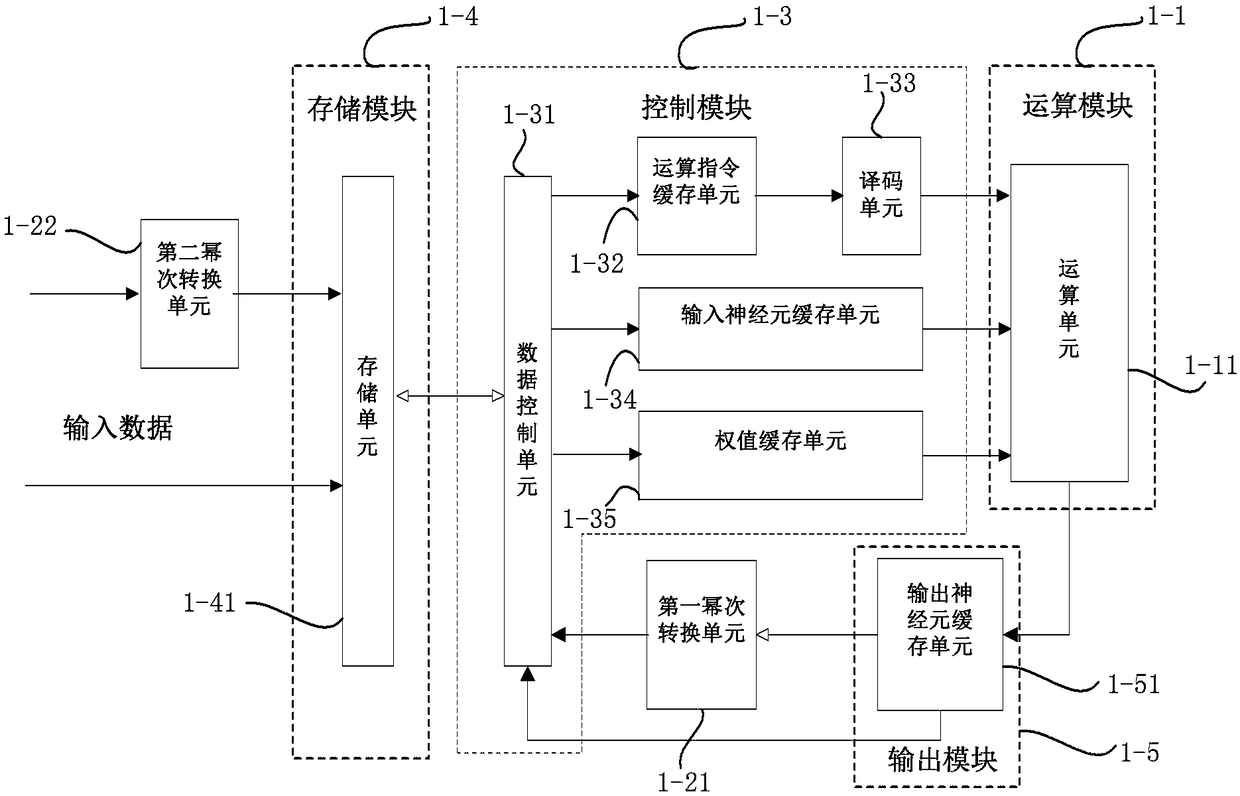

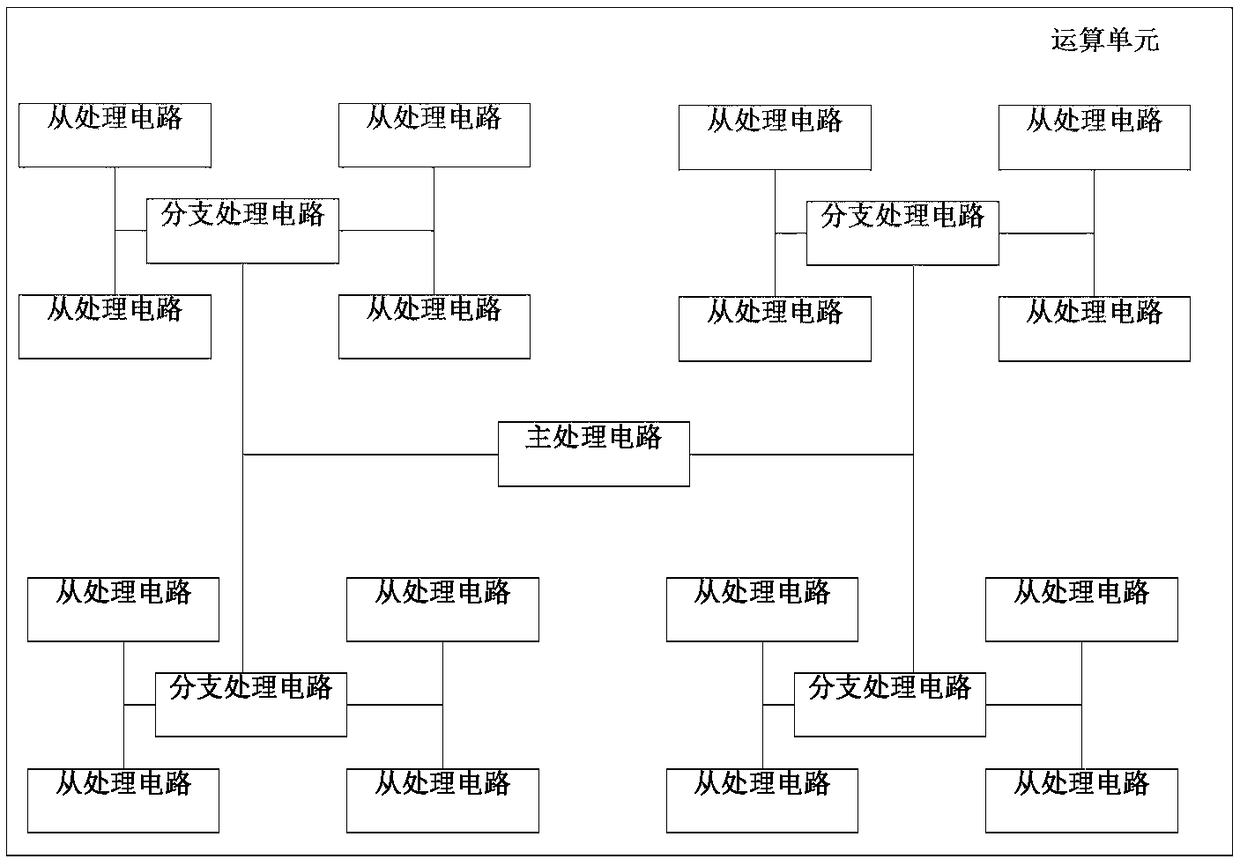

[0187] Specific example 1 is shown in Figure 1N. The weight data is 16-bit floating-point data with sign bit 0, power (exponent) bits 10101, and effective (significand) bits 0110100000, so the value it represents is $1.40625 \times 2^{6}$. The power neuron data has a 1-bit sign and a 5-bit power field, that is, m is 5. In the coding table, power-bit data 11111 corresponds to power neuron data 0; any other power-bit data corresponds to its two's complement value. If the power neuron is 000110, the value it represents is 64, that is, $2^{6}$. Adding the power bits of the weight to the power bits of the power neuron gives 11011, and the resulting value is $1.40625 \times 2^{12}$, which is the product of the neuron and the weight. Through this arithmetic operation, the multiplication operation becomes an addition operation, which reduces the amount of calculation required for calculati...
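The arithmetic of this example can be checked with a minimal Python sketch that works directly on the bit fields. The function names (`decode_power_field`, `fp16_value`, `multiply_by_power_neuron`) are hypothetical and not taken from the disclosure, and the sketch assumes the exponent sum stays inside the normal range of the 16-bit format, so overflow and underflow are not handled.

```python
FP16_FRAC_BITS = 10   # effective (significand) bits of the 16-bit format
FP16_BIAS = 15        # exponent bias of the 16-bit format


def decode_power_field(bits: int, m: int = 5):
    """Decode an m-bit power field per the coding table in the text.

    All ones means the neuron is 0 (returned as None); any other
    pattern is read as an m-bit two's complement exponent.
    """
    if bits == (1 << m) - 1:
        return None
    if bits & (1 << (m - 1)):        # top bit set: negative exponent
        return bits - (1 << m)
    return bits


def fp16_value(sign: int, exp_field: int, frac_field: int) -> float:
    """Value of a normal 16-bit float given its three bit fields."""
    significand = 1 + frac_field / (1 << FP16_FRAC_BITS)
    return (-1) ** sign * significand * 2.0 ** (exp_field - FP16_BIAS)


def multiply_by_power_neuron(w_sign, w_exp, w_frac, n_sign, n_power, m=5):
    """Multiply a weight by a power neuron using only XOR and addition.

    Returns the (sign, exponent field, fraction field) of the product.
    Assumption of this sketch: the summed exponent field stays in range.
    """
    exponent = decode_power_field(n_power, m)
    if exponent is None:             # neuron is zero, so the product is zero
        return (0, 0, 0)
    return (w_sign ^ n_sign, w_exp + exponent, w_frac)


# Weight 0 10101 0110100000 times power neuron 0 00110
product = multiply_by_power_neuron(0, 0b10101, 0b0110100000, 0, 0b00110)
print(format(product[1], "05b"), fp16_value(*product))
```

The significand bits pass through unchanged; only the sign and the power field are touched, which is why no multiplier is needed.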

Specific example 2

[0188] Specific example 2 is shown in Figure 1O. The weight data is 32-bit floating-point data with sign bit 1, power (exponent) bits 10000011, and effective (significand) bits 10010010000000000000000, so the value it represents is $-1.5703125 \times 2^{4}$. The power neuron data has a 1-bit sign and a 5-bit power field, that is, m is 5. In the coding table, power-bit data 11111 corresponds to power neuron data 0; any other power-bit data corresponds to its two's complement value. If the power neuron is 111100, the value it represents is $-2^{-4}$. Adding the power bits of the weight to the power bits of the power neuron gives 01111111; because both sign bits are 1, the product is positive, and the resulting value is $1.5703125 \times 2^{0}$, which is the product of the neuron and the weight.
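As a cross-check on this example, the following Python sketch starts from an ordinary float rather than from hand-written bit fields, and includes the sign handling and the negative two's complement exponent. It uses the standard `struct` module to reach the 32-bit encoding. The helper names are hypothetical, the neuron is assumed to be packed as one sign bit followed by the m-bit power field, and weights or results outside the normal 32-bit range are rejected rather than handled.

```python
import struct


def float32_fields(x: float):
    """Split a float into 32-bit (sign, exponent field, fraction field)."""
    (bits,) = struct.unpack(">I", struct.pack(">f", x))
    return bits >> 31, (bits >> 23) & 0xFF, bits & 0x7FFFFF


def float32_from_fields(sign: int, exp_field: int, frac_field: int) -> float:
    """Rebuild a float from its 32-bit fields."""
    bits = (sign << 31) | (exp_field << 23) | frac_field
    (x,) = struct.unpack(">f", struct.pack(">I", bits))
    return x


def power_neuron_multiply(weight: float, neuron_code: int, m: int = 5) -> float:
    """Multiply a 32-bit float weight by an (m+1)-bit power neuron code.

    Assumed layout of neuron_code: 1 sign bit, then the m-bit power field.
    """
    n_sign = neuron_code >> m
    power = neuron_code & ((1 << m) - 1)
    if power == (1 << m) - 1:        # coding table: all ones means neuron = 0
        return 0.0
    if power >> (m - 1):             # two's complement: top bit set is negative
        power -= 1 << m
    w_sign, w_exp, w_frac = float32_fields(weight)
    new_exp = w_exp + power          # the addition that replaces the multiply
    if not (0 < w_exp < 255 and 0 < new_exp < 255):
        raise ValueError("outside the normal float32 range; not handled here")
    return float32_from_fields(w_sign ^ n_sign, new_exp, w_frac)


weight = -1.5703125 * 2**4           # sign 1, exponent field 10000011
print(power_neuron_multiply(weight, 0b111100))
```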

[0189] In step S1-3, the first power conversion unit converts the neuron data after the neural network operation into power neuron data.

[0190] ...

Example 1

[0366] Assume that the data to be screened is the vector (1 0 101 34 243) and that the components to be selected are those less than 100. The input position information data is then also a vector, namely (1 1 0 1 0). The screened data can still keep a vector structure, and the vector length of the screened data can be output at the same time. In this example the screened data is (1 0 34), with length 3.
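The screening step described here can be sketched in a few lines of Python. This is only an illustration of the data flow, not the data screening unit itself; the function name `screen` is hypothetical, and a position entry of 1 is assumed to mean "keep this component".

```python
def screen(data, position):
    """Keep the components of `data` whose position entry is 1.

    Returns the screened vector together with its length, mirroring the
    two outputs mentioned in the text.
    """
    if len(data) != len(position):
        raise ValueError("data and position vectors must have equal length")
    kept = [value for value, keep in zip(data, position) if keep == 1]
    return kept, len(kept)


screened, length = screen([1, 0, 101, 34, 243], [1, 1, 0, 1, 0])
print(screened, length)
```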

[0367] The position information vector can be input externally or generated internally. Optionally, the device in the present disclosure may further include a position information generation module, which is connected to the data screening unit and may be used to generate the position information vector. Specifically, the position information generation module may generate the position information vector through a vector operation, and the vector operation may be a vector comparison operation, that is, it is obtained by comparing the components of the vector to be screened ...
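A vector comparison of this kind might look like the sketch below. Because the text is cut off before it says what the components are compared against, the comparison is passed in as a predicate; the name `make_position_vector` and the threshold of 100 (taken from paragraph [0366]) are assumptions for illustration only.

```python
def make_position_vector(data, predicate):
    """Build a 0/1 position vector by testing each component.

    `predicate` stands in for the comparison, which is an assumption of
    this sketch since the source text is truncated at this point.
    """
    return [1 if predicate(value) else 0 for value in data]


position = make_position_vector([1, 0, 101, 34, 243], lambda v: v < 100)
print(position)
```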
