A lens distortion correction and feature extraction method and system

A lens distortion correction and feature extraction technology, applied to parts of TV systems, character and pattern recognition, parts of color TVs, and similar fields. It addresses problems such as large data processing volume, high CPU usage, and the inability of robots or drones to perform real-time positioning, thereby improving computing efficiency, reducing hardware costs, and achieving high-precision positioning.

AI Technical Summary

Problems solved by technology

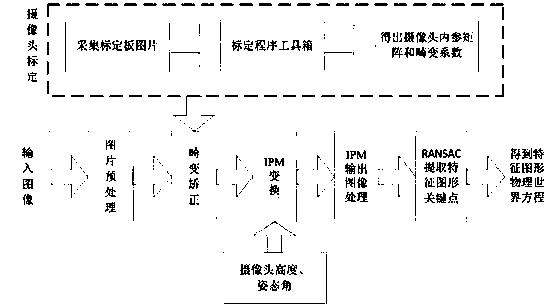

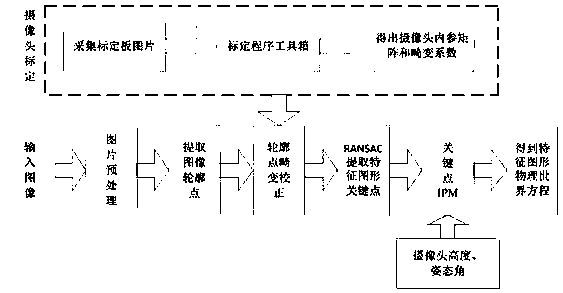

Method used

Examples

Embodiment 1

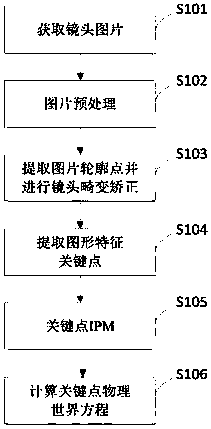

[0081] The application scenario of this embodiment is indoor positioning in an indoor area where ground features can be extracted. First, the camera captures feature information from the edge lines of the indoor floor tiles. With the X-axis and Y-axis directions established as shown in Figure 4, a fast transformation yields the position of the camera center point relative to the straight line in the captured picture.
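The relative position between the camera center point and a detected line can be illustrated with a small geometric sketch. This is not the patent's own transformation, which is not given in this excerpt; it assumes the image center stands in for the camera center point, that the tile edge is already available as two pixel endpoints, and the function name `center_to_line_offset` is hypothetical.

```python
import math


def center_to_line_offset(width, height, p1, p2):
    """Return (signed_offset_px, angle_deg) of the line p1->p2 relative to the image center.

    Assumption: the image center (width/2, height/2) approximates the camera
    center point. Coordinates are pixel coordinates with x to the right and
    y downward, so the sign of the offset only indicates which side of the
    directed line p1->p2 the center lies on.
    """
    cx, cy = width / 2.0, height / 2.0
    (x1, y1), (x2, y2) = p1, p2
    dx, dy = x2 - x1, y2 - y1
    length = math.hypot(dx, dy)
    if length == 0:
        raise ValueError("p1 and p2 must be distinct points")
    # 2D cross product of the line direction with the vector p1->center,
    # divided by the line length, gives the signed perpendicular distance.
    signed_offset = (dx * (cy - y1) - dy * (cx - x1)) / length
    # Orientation of the line relative to the image X-axis.
    angle_deg = math.degrees(math.atan2(dy, dx))
    return signed_offset, angle_deg
```

For a 640x480 image and a vertical tile edge at x = 400, `center_to_line_offset(640, 480, (400, 0), (400, 480))` gives an offset of 80 pixels and an angle of 90 degrees. Converting pixels to physical units would additionally need the camera height and calibration, which this sketch leaves out.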

[0082] S101: Acquire a lens image. Capture pictures for subsequent module processing.

[0083] S102: Image preprocessing. Convert the collected lens image from a color image to a grayscale image; apply edge detection to bring out the outlines in the image; crop the image to remove interfering outline parts.
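The three preprocessing steps of S102 can be sketched in plain Python as follows. The excerpt does not say which grayscale weights or which edge detector the method uses, so the BT.601 luma weights, the Sobel operator, the threshold value, and all function names here are assumptions chosen for illustration.

```python
def to_grayscale(rgb):
    """Convert rows of (r, g, b) tuples to rows of 0..255 ints (BT.601 luma weights, assumed)."""
    return [[int(round(0.299 * r + 0.587 * g + 0.114 * b)) for (r, g, b) in row]
            for row in rgb]


def sobel_edges(gray, threshold=100):
    """Binary edge map: 1 where the Sobel gradient magnitude exceeds the threshold.

    The Sobel operator is an assumed stand-in for the unspecified edge
    detection step. Border pixels are left at 0.
    """
    h, w = len(gray), len(gray[0])
    edges = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = (gray[y - 1][x + 1] + 2 * gray[y][x + 1] + gray[y + 1][x + 1]
                  - gray[y - 1][x - 1] - 2 * gray[y][x - 1] - gray[y + 1][x - 1])
            gy = (gray[y + 1][x - 1] + 2 * gray[y + 1][x] + gray[y + 1][x + 1]
                  - gray[y - 1][x - 1] - 2 * gray[y - 1][x] - gray[y - 1][x + 1])
            if (gx * gx + gy * gy) ** 0.5 > threshold:
                edges[y][x] = 1
    return edges


def crop(img, top, bottom, left, right):
    """Keep rows top..bottom-1 and columns left..right-1, discarding the rest."""
    return [row[left:right] for row in img[top:bottom]]


def preprocess(rgb, roi, threshold=100):
    """Grayscale -> edge detection -> crop, in the order listed in S102."""
    top, bottom, left, right = roi
    return crop(sobel_edges(to_grayscale(rgb), threshold), top, bottom, left, right)
```

A real pipeline would more likely rely on an image library for speed; the pure-Python version only makes each step explicit. The `roi` tuple is a hypothetical way to express which part of the image is kept when interfering outlines are cut away.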

[0084] Converting a picture from a color image to a grayscale image reduces the amount of computation on the data processed in subsequent steps and speeds up operation, and the grayscale image is also easier to pr...
