Compiling method based on OPU instruction set and compiler

A compiling method and compiler based on the OPU instruction set, applied in the field of compiling methods and compilers for the OPU instruction set. The technology addresses the high complexity of hardware upgrades and has the effects of saving data transmission time and achieving efficient utilization.

Examples

Embodiment 1

[0077] A compiling method based on the OPU instruction set comprises the following steps:

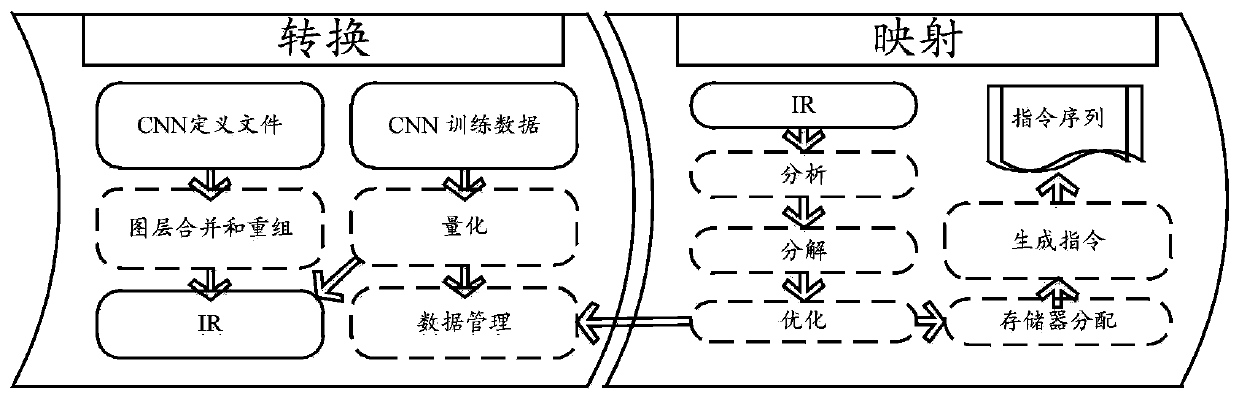

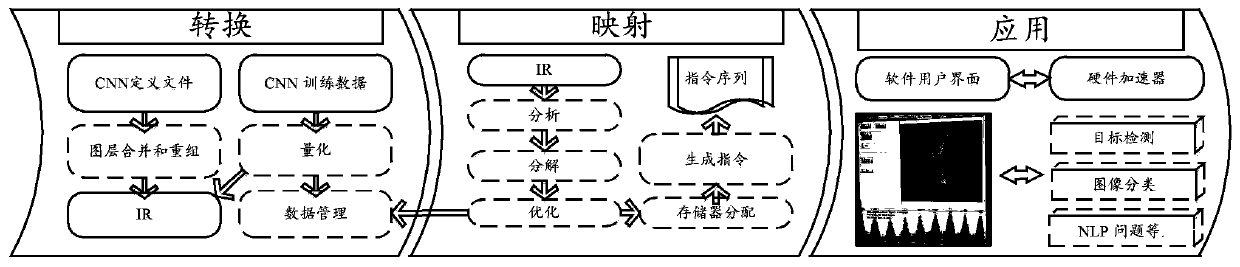

[0078] Convert the CNN definition files of different target networks, select the optimal accelerator configuration mapping according to the defined OPU instruction set, and generate instructions for the different target networks to complete the mapping;

[0079] The conversion includes file conversion, network layer reorganization, and generation of a unified intermediate representation (IR);

[0080] The mapping includes parsing the IR, searching the solution space according to the parsed information to obtain a mapping method that guarantees the maximum throughput, and expressing that mapping solution as an instruction sequence according to the defined OPU instruction set, thereby generating instructions for the different target networks.
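The two stages described in [0078] to [0080] can be sketched as a small pipeline. This is only an illustration under stated assumptions: the `IRLayer` fields, the candidate-configuration dictionaries, the toy cost model, and the textual instruction format are all hypothetical and are not taken from the patent or from the actual OPU instruction set.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class IRLayer:
    """One layer in the unified IR (field names are hypothetical)."""
    name: str
    op: str   # layer type, e.g. "conv" or "pool"
    ops: int  # operation count of the layer


def convert(cnn_definition: List[Dict]) -> List[IRLayer]:
    """Conversion stage: turn a parsed CNN definition into a unified IR."""
    return [IRLayer(d["name"], d["type"], d["ops"]) for d in cnn_definition]


def estimated_time(ir: List[IRLayer], config: Dict, peak_ops_per_s: float) -> float:
    """Toy cost model (assumption): layer time = ops / (efficiency * peak)."""
    return sum(l.ops / (config["efficiency"][l.op] * peak_ops_per_s) for l in ir)


def map_network(ir: List[IRLayer], candidates: List[Dict],
                peak_ops_per_s: float) -> Tuple[str, List[str]]:
    """Mapping stage: search the candidates and keep the fastest one.

    For a fixed workload, minimum total time means maximum throughput.
    The emitted strings are placeholders, not real OPU instructions.
    """
    best = min(candidates, key=lambda c: estimated_time(ir, c, peak_ops_per_s))
    instructions = [f"{l.op.upper()} {l.name}" for l in ir]
    return best["name"], instructions


# Illustrative inputs only.
definition = [
    {"name": "conv1", "type": "conv", "ops": 1000},
    {"name": "pool1", "type": "pool", "ops": 100},
]
candidates = [
    {"name": "cfgA", "efficiency": {"conv": 0.5, "pool": 1.0}},
    {"name": "cfgB", "efficiency": {"conv": 0.9, "pool": 0.5}},
]
best_name, program = map_network(convert(definition), candidates, 1000.0)
```

In this toy setup the search is an exhaustive scan over two candidates; the point is only the separation between a network-independent IR and a configuration search that consumes it.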

[0081] A compiler based on the OPU instruction set includes:

[0082] The conversion unit is used for file conversion, network layer reorganization a...

Embodiment 2

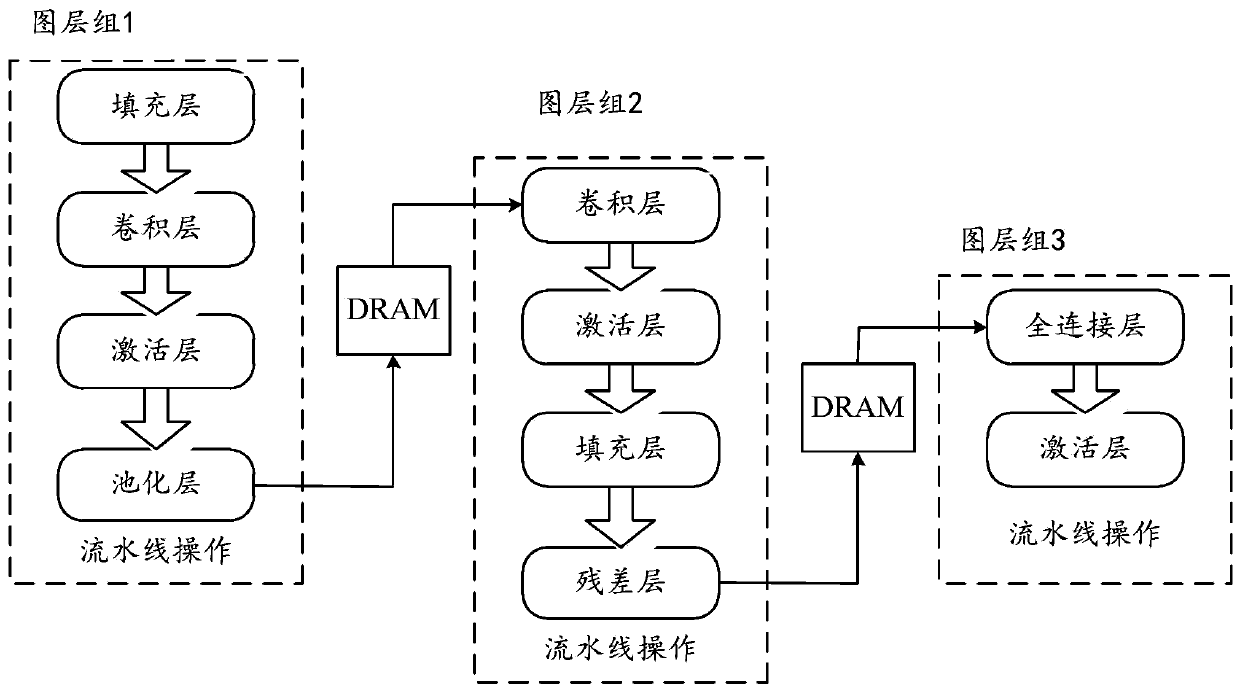

[0097] Based on Embodiment 1, a conventional CNN definition contains various types of layers connected from top to bottom to form a complete flow. The intermediate data passed between layers are called feature maps; they usually require a large amount of storage space and can only be processed in off-chip memory. Because off-chip memory communication latency is the main optimization factor, data communication with off-chip memory must be reduced. Layer reorganization defines main layers and auxiliary layers to reduce off-chip DRAM access and avoid unnecessary write/read-back operations. The technical details are as follows:

[0098] After the format of the CNN definition file is analyzed, the file is converted, and the network information is compressed and extracted;

[0099] The network operation is reorganized into multiple layer groups. The layer group includes a main layer and multiple auxiliary layers. The results between the layer gr...
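The layer reorganization described above can be illustrated with a minimal grouping sketch. Which layer types count as main layers is an assumption here (`conv` and `fc`); the function name `group_layers` and the layer names are hypothetical and not from the patent.

```python
from typing import List, Tuple

# Assumption for illustration: convolution and fully connected layers are
# treated as main layers; every other layer type is treated as auxiliary.
MAIN_OPS = {"conv", "fc"}


def group_layers(layers: List[Tuple[str, str]]) -> List[List[str]]:
    """Split an ordered list of (name, op) pairs into layer groups.

    Each main layer opens a new group; the auxiliary layers that follow
    it are attached to that group. Auxiliary layers that appear before
    any main layer end up in a leading group without a main layer.
    """
    groups: List[List[str]] = []
    for name, op in layers:
        if op in MAIN_OPS or not groups:
            groups.append([name])
        else:
            groups[-1].append(name)
    return groups


network = [
    ("conv1", "conv"), ("relu1", "relu"), ("pool1", "pool"),
    ("conv2", "conv"), ("relu2", "relu"),
    ("fc1", "fc"),
]
groups = group_layers(network)
```

If feature maps are exchanged with off-chip memory once per group rather than once per layer, this six-layer example would need three exchanges instead of six. That count is a property of the toy model, not a figure from the patent.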

Embodiment 3

[0107] Based on Embodiment 1 or 2, to address how to find the optimal performance configuration and how to make that configuration general, the solution space is searched during the mapping process to obtain a mapping method that guarantees the maximum throughput. Mapping with this method includes the following steps:

[0108] Step a1: Calculate the theoretical peak throughput using the following formula:

[0109] $T = f \cdot TN_{PE}$

[0110] Here, $T$ represents the throughput (operations per second), $f$ represents the operating frequency, and $TN_{PE}$ indicates the total number of PEs available on the chip;
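As a quick numeric check of the peak-throughput formula in step a1, the sketch below multiplies the operating frequency by the PE count. The function name and the example values (200 MHz, 1024 PEs) are illustrative assumptions, not figures from the patent.

```python
def peak_throughput(f_hz: float, tn_pe: int) -> float:
    """Theoretical peak throughput T = f * TN_PE, in operations per second."""
    return f_hz * tn_pe


# Illustrative values only: a 200 MHz clock and 1024 available PEs.
T = peak_throughput(200e6, 1024)
```

This value is an upper bound: it assumes every PE does useful work on every cycle.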

[0111] Step a2: Define the minimum time L required to compute the entire network, given by the following formula:

[0112]

[0113] Here, $\alpha_i$ indicates the PE efficiency of the i-th layer, and $C_i$ indicates the amount of...
