Indoor typical scene matching and positioning method based on neural network

A scene matching and neural network technology in the field of computer vision. It addresses problems such as the difficulty of meeting data-volume requirements, and its effects include improved detection accuracy, high training efficiency, and remedying the shortcomings of existing methods.

Embodiment Construction

[0016] The present invention is described in further detail below with reference to the accompanying drawings and specific embodiments:

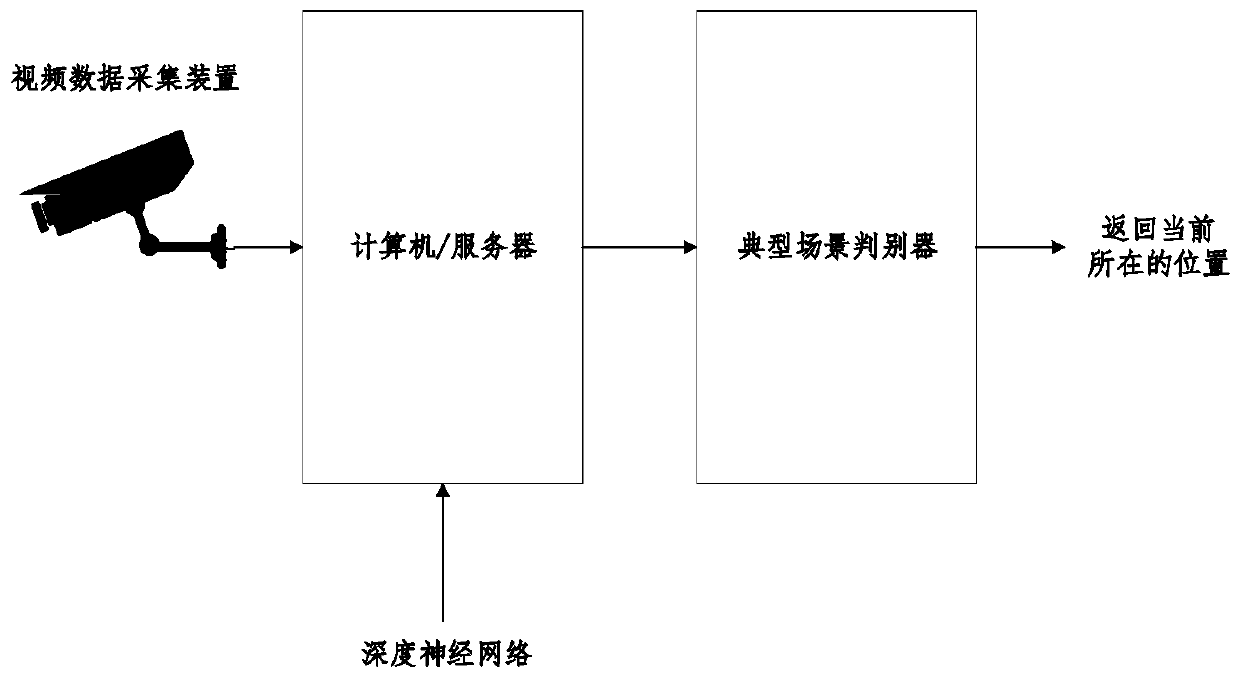

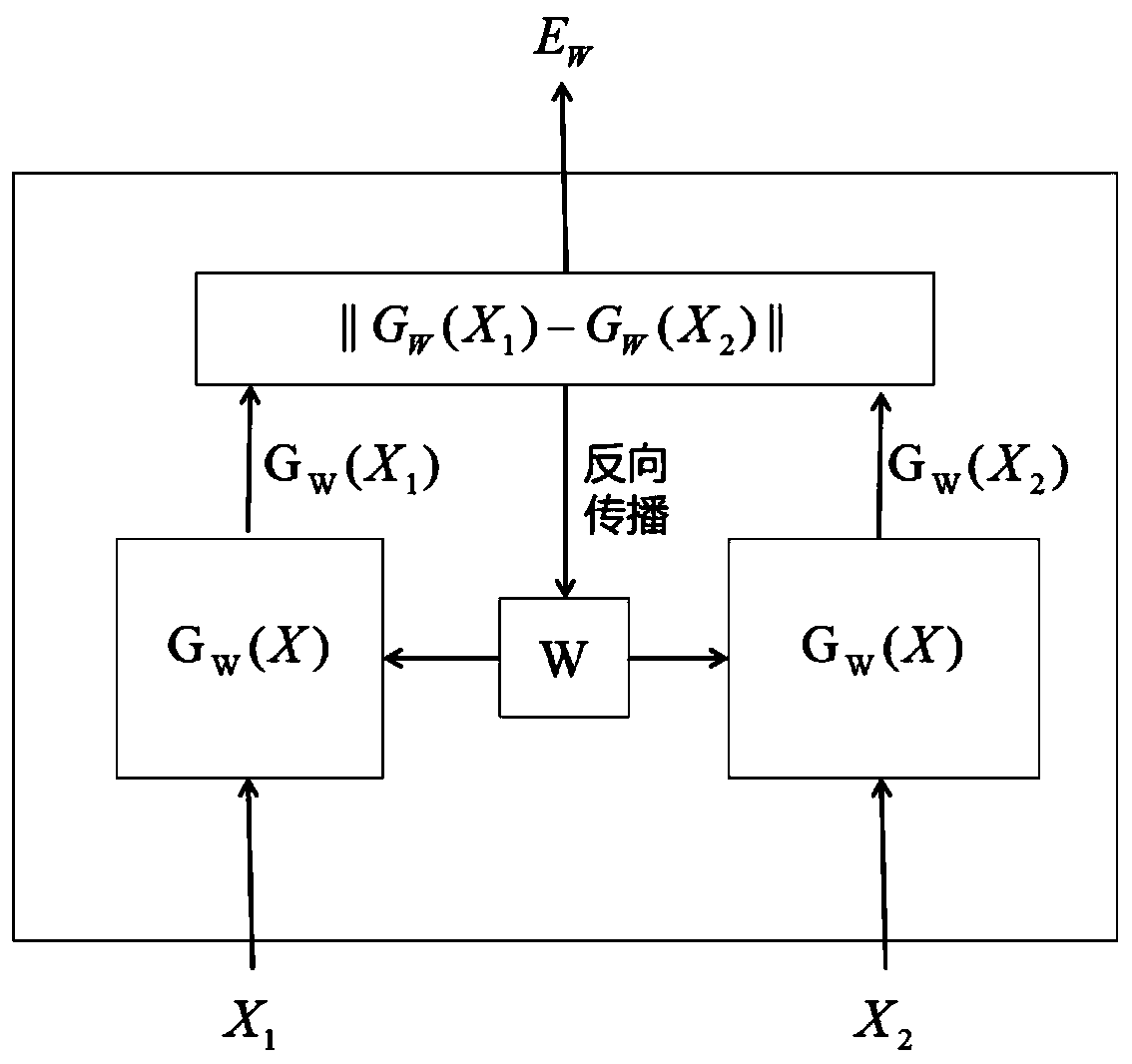

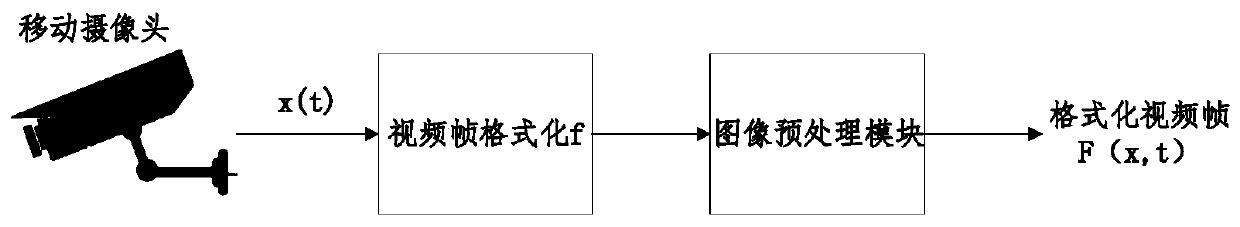

[0017] As shown in Figure 1, the method of this embodiment uses a video data acquisition device, a computer/server, and a typical-scene discriminator, with the server connected to the video acquisition device. As shown in Figure 3, the video data acquisition device comprises a mobile camera, a video frame formatting module, and an image preprocessing module. The mobile camera acquires video data; the video frame formatting module converts the real-time video data into formatted video frames f(x, t), where t denotes time and the function f( ) denotes the video data formatting function; and the image preprocessing module determines, from the video images collected by the video acquisition device, whether the collected images need to be preprocessed. Its other functions are the same as the existing video c...
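The acquisition pipeline described above (format each frame with its timestamp, then decide per frame whether to preprocess) can be sketched as follows. This is a minimal illustration under stated assumptions, not the patented implementation: the embodiment does not say what x denotes in f(x, t), how frames are formatted, or which criterion the preprocessing module uses. The grayscale pixel grid, the nearest-neighbour resampling in the hypothetical `format_frame`, the brightness thresholds in the hypothetical `needs_preprocessing`, and the contrast stretch in `preprocess` are all illustrative choices.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

# Assumption: a raw frame is a grid of 8-bit grayscale values (0-255).
Frame = List[List[int]]


@dataclass(frozen=True)
class FormattedFrame:
    """A formatted video frame f(x, t); x is assumed here to be the pixel grid."""
    t: float        # capture time in seconds
    pixels: Frame   # resampled pixel grid


def format_frame(raw: Frame, t: float, size: int = 4) -> FormattedFrame:
    """Hypothetical formatting function f( ): nearest-neighbour resample to size x size."""
    h, w = len(raw), len(raw[0])
    pixels = [[raw[r * h // size][c * w // size] for c in range(size)]
              for r in range(size)]
    return FormattedFrame(t=t, pixels=pixels)


def mean_brightness(frame: FormattedFrame) -> float:
    """Average grey level of a formatted frame."""
    values = [v for row in frame.pixels for v in row]
    return sum(values) / len(values)


def needs_preprocessing(frame: FormattedFrame,
                        low: float = 40.0, high: float = 215.0) -> bool:
    """Hypothetical decision rule: preprocess frames that are too dark or too bright."""
    brightness = mean_brightness(frame)
    return brightness < low or brightness > high


def preprocess(frame: FormattedFrame) -> FormattedFrame:
    """Illustrative preprocessing: min-max contrast stretch to the full 0-255 range."""
    values = [v for row in frame.pixels for v in row]
    lo, hi = min(values), max(values)
    if hi == lo:  # flat frame: nothing to stretch
        return frame
    stretched = [[round((v - lo) * 255 / (hi - lo)) for v in row]
                 for row in frame.pixels]
    return FormattedFrame(t=frame.t, pixels=stretched)


def acquire(stream: Sequence[Tuple[float, Frame]]) -> List[FormattedFrame]:
    """Format every (t, raw_frame) pair, then preprocess only the frames that need it."""
    output = []
    for t, raw in stream:
        frame = format_frame(raw, t)
        if needs_preprocessing(frame):
            frame = preprocess(frame)
        output.append(frame)
    return output
```

Keeping the decision (`needs_preprocessing`) separate from the transform (`preprocess`) mirrors the embodiment's description of a module that first judges whether an image needs preprocessing. In a real system the `(t, raw_frame)` pairs would come from the mobile camera stream rather than in-memory lists; for example, a dark gradient frame passed through `acquire` is contrast-stretched while a uniform mid-grey frame is left unchanged.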
