Text summarization method based on hierarchical interactive attention

An attention-based summarization technique, applied in the field of natural language processing, that addresses the problem of ignoring detailed features such as word-level structure, and achieves the effect of improving generation quality while reducing redundancy and noise.


Examples

Embodiment 1

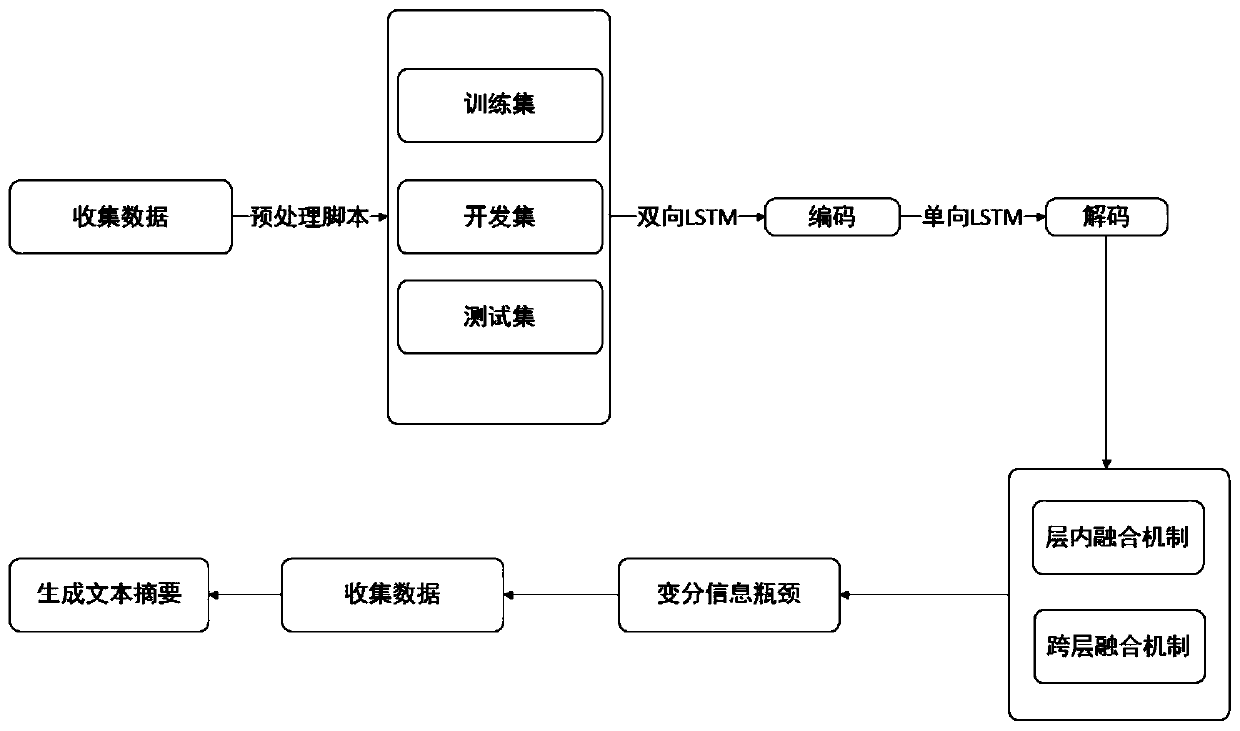

[0032] Example 1: As shown in Figures 1 to 4, the specific steps of the text summarization method based on hierarchical interactive attention are as follows:

[0033] Step 1: Use the English Gigaword dataset as the training data, and preprocess it with a preprocessing script to obtain a training set of 3.8 million samples and a development set of 189,000 samples. Each training sample consists of an input text paired with its summary sentence;
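As a minimal sketch of the sample structure described in Step 1, the snippet below pairs each input text with its summary sentence. The function name `pair_samples`, the whitespace tokenization, and the `source`/`target` keys are illustrative assumptions; the preprocessing script itself is not specified in the text.

```python
def pair_samples(article_lines, summary_lines):
    """Pair each input text with its summary sentence (hypothetical helper).

    Assumes the two lists are line-aligned, i.e. summary_lines[i]
    is the summary of article_lines[i].
    """
    if len(article_lines) != len(summary_lines):
        raise ValueError("input texts and summaries must be line-aligned")

    pairs = []
    for article, summary in zip(article_lines, summary_lines):
        article, summary = article.strip(), summary.strip()
        # Skip pairs in which either side is empty after stripping.
        if article and summary:
            pairs.append({
                "source": article.split(),  # assumption: whitespace tokens
                "target": summary.split(),
            })
    return pairs


samples = pair_samples(
    ["the central bank raised interest rates on tuesday .\n", "\n"],
    ["central bank raises rates\n", "empty input\n"],
)
```

Here `samples` holds one pair, because the second input text is empty and is dropped.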

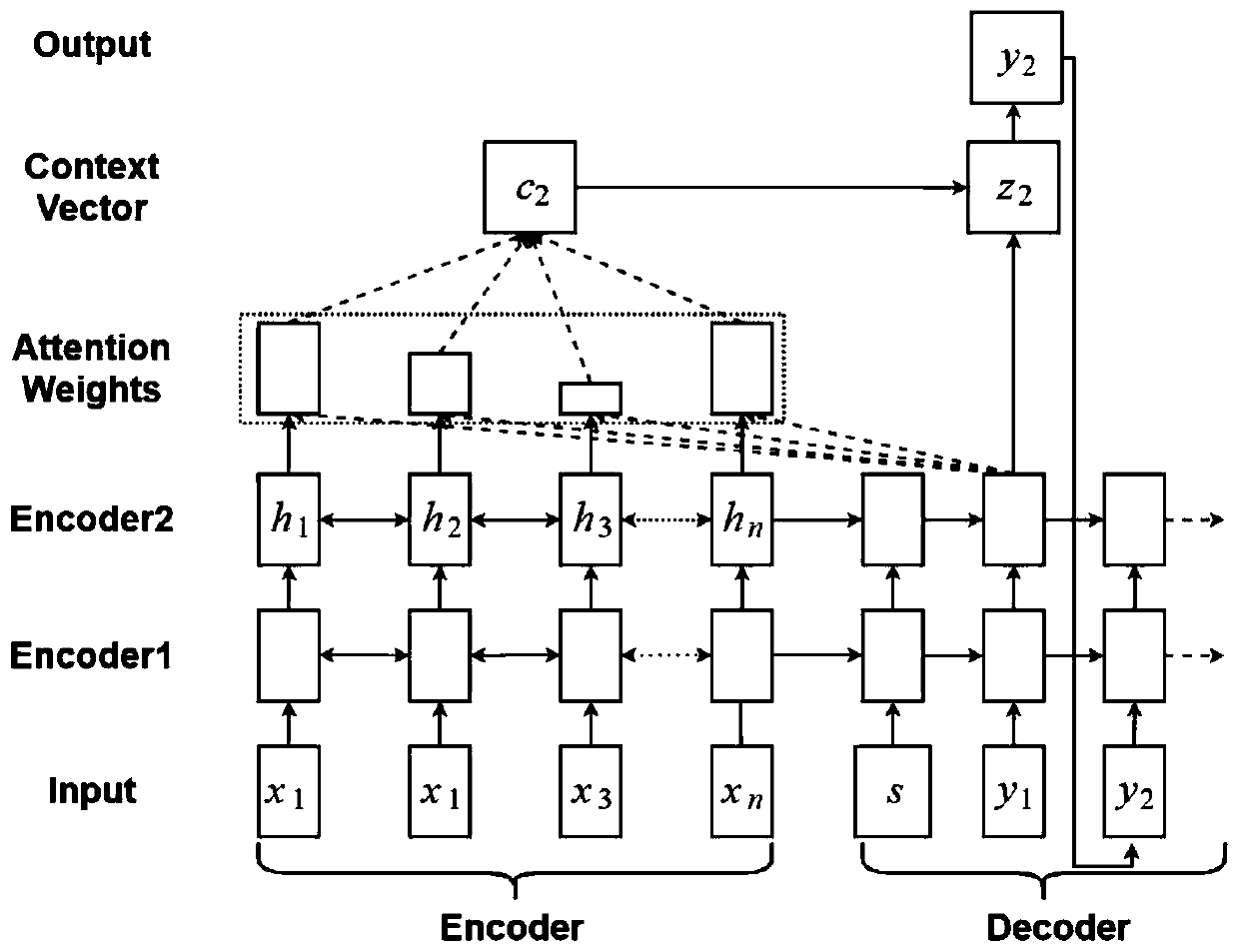

[0034] Step 2: The encoder uses a bidirectional LSTM to encode the training set, with the number of layers set to three;
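The three-layer bidirectional encoding in Step 2 can be sketched in plain Python as follows. This is an illustration under assumptions, not the patented implementation: the weights are random, bias terms are omitted for brevity, and all names (`lstm_step`, `run_direction`, `bilstm_encoder`) are hypothetical. In practice a deep learning library would be used instead.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def make_lstm_params(input_size, hidden_size, rng):
    """Random weights for one direction: one matrix per gate over [x; h]."""
    def matrix():
        return [[rng.uniform(-0.1, 0.1) for _ in range(input_size + hidden_size)]
                for _ in range(hidden_size)]
    return {gate: matrix() for gate in ("i", "f", "o", "g")}


def lstm_step(params, x, h, c):
    """One LSTM step (biases omitted): returns the new hidden and cell state."""
    z = x + h  # list concatenation [x; h]

    def affine(gate):
        return [sum(w * v for w, v in zip(row, z)) for row in params[gate]]

    i = [sigmoid(a) for a in affine("i")]    # input gate
    f = [sigmoid(a) for a in affine("f")]    # forget gate
    o = [sigmoid(a) for a in affine("o")]    # output gate
    g = [math.tanh(a) for a in affine("g")]  # candidate cell values
    c_new = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c, i, g)]
    h_new = [oj * math.tanh(cj) for oj, cj in zip(o, c_new)]
    return h_new, c_new


def run_direction(params, inputs, hidden_size, reverse=False):
    """Run one LSTM direction and return one hidden state per time step."""
    h, c = [0.0] * hidden_size, [0.0] * hidden_size
    steps = range(len(inputs))
    outputs = [None] * len(inputs)
    for t in (reversed(steps) if reverse else steps):
        h, c = lstm_step(params, inputs[t], h, c)
        outputs[t] = h
    return outputs


def bilstm_encoder(inputs, hidden_size, num_layers=3, seed=0):
    """Stack bidirectional layers; each layer reads the previous layer's output."""
    rng = random.Random(seed)
    layer_input, layer_outputs = inputs, []
    for _ in range(num_layers):
        in_size = len(layer_input[0])
        fwd = run_direction(make_lstm_params(in_size, hidden_size, rng),
                            layer_input, hidden_size)
        bwd = run_direction(make_lstm_params(in_size, hidden_size, rng),
                            layer_input, hidden_size, reverse=True)
        # Concatenate forward and backward states at every time step.
        layer_input = [f + b for f, b in zip(fwd, bwd)]
        layer_outputs.append(layer_input)
    return layer_outputs


embedded = [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6]]  # toy 3-token input
layers = bilstm_encoder(embedded, hidden_size=4)
```

Keeping the hidden states of every layer, rather than only the top one, leaves them available for attention computed layer by layer.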

[0035] Step 3: The decoder uses a unidirectional LSTM network; the sentence to be decoded is input to calculate the context vector of each layer;
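To make the per-layer context vector of Step 3 concrete, here is a small sketch that computes one attention context per layer. The dot-product scoring function is an assumption, since the text does not state which score is used, and it requires the decoder and encoder states of a layer to have the same dimension. The names `context_vector` and `per_layer_contexts` are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def context_vector(decoder_state, encoder_states):
    """Attention context for one layer (assumed dot-product scoring)."""
    scores = [sum(d * e for d, e in zip(decoder_state, enc))
              for enc in encoder_states]
    weights = softmax(scores)
    dim = len(encoder_states[0])
    # Weighted sum of the encoder states, one dimension at a time.
    context = [sum(w * enc[k] for w, enc in zip(weights, encoder_states))
               for k in range(dim)]
    return context, weights


def per_layer_contexts(decoder_states_by_layer, encoder_states_by_layer):
    """One context vector per layer, pairing decoder layer l with encoder layer l."""
    return [context_vector(dec, encs)[0]
            for dec, encs in zip(decoder_states_by_layer, encoder_states_by_layer)]


# Toy example: two layers, two source positions, state size 2.
encoder_layers = [
    [[1.0, 0.0], [0.0, 1.0]],
    [[2.0, 0.0], [0.0, 2.0]],
]
decoder_layers = [[0.0, 0.0], [0.0, 0.0]]
contexts = per_layer_contexts(decoder_layers, encoder_layers)
```

With an all-zero decoder state the scores are equal, so each context vector is the plain average of that layer's encoder states.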

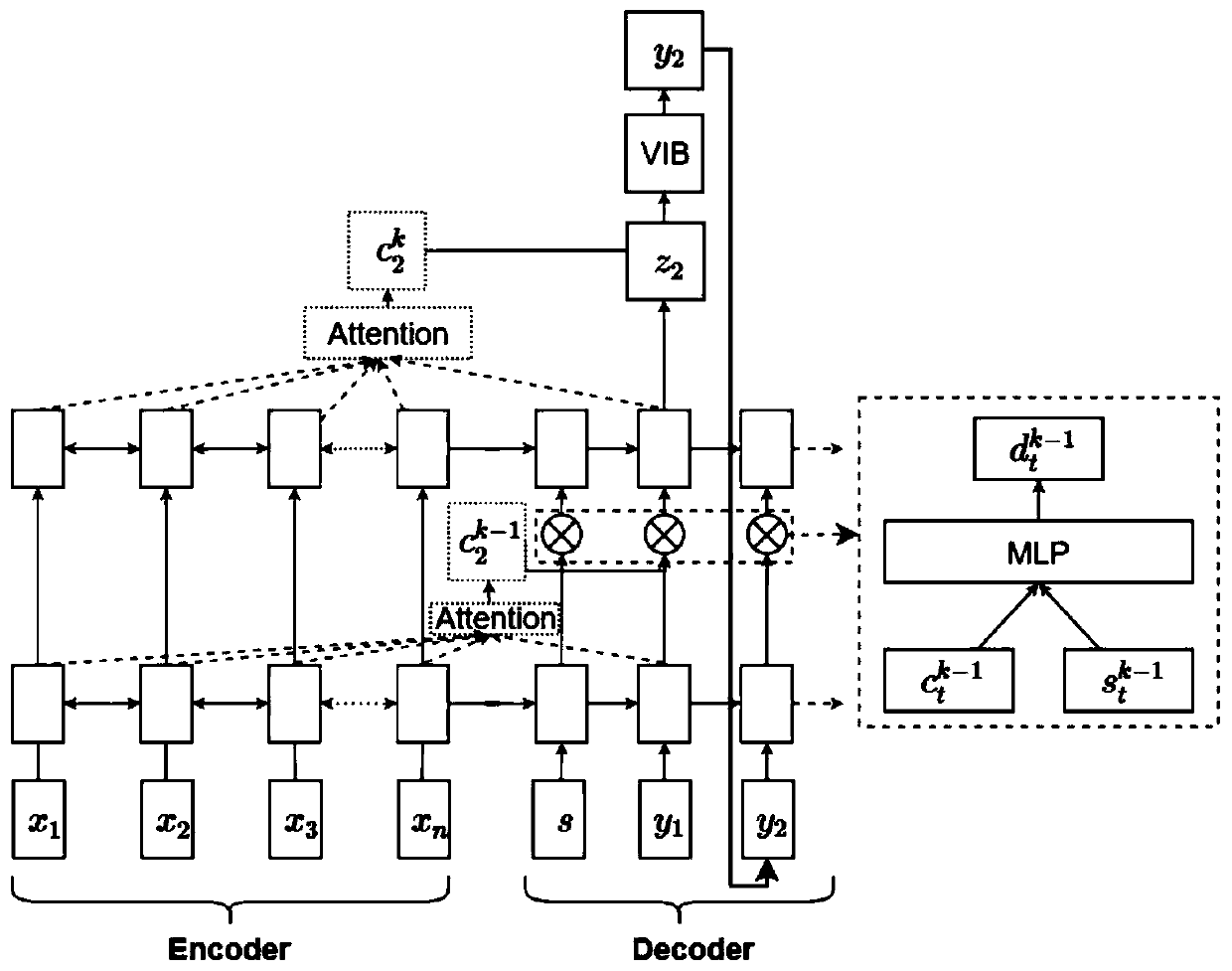

[0036] Step 4: In the multi-layer encoder-decoder model, both the encoder and the decoder contain multi-layer LSTMs. In each LSTM layer, the hidden state representation between the previous layer and the current layer is calculated, so as to fuse...
