Multi-mode adaptive fusion three-dimensional target detection method

A three-dimensional target detection method based on multi-modal adaptive fusion, applicable to three-dimensional object recognition, character and pattern recognition, instruments, and related fields. It addresses the problem of low detection efficiency, improving efficiency and detection performance while reducing input.

Embodiment Construction

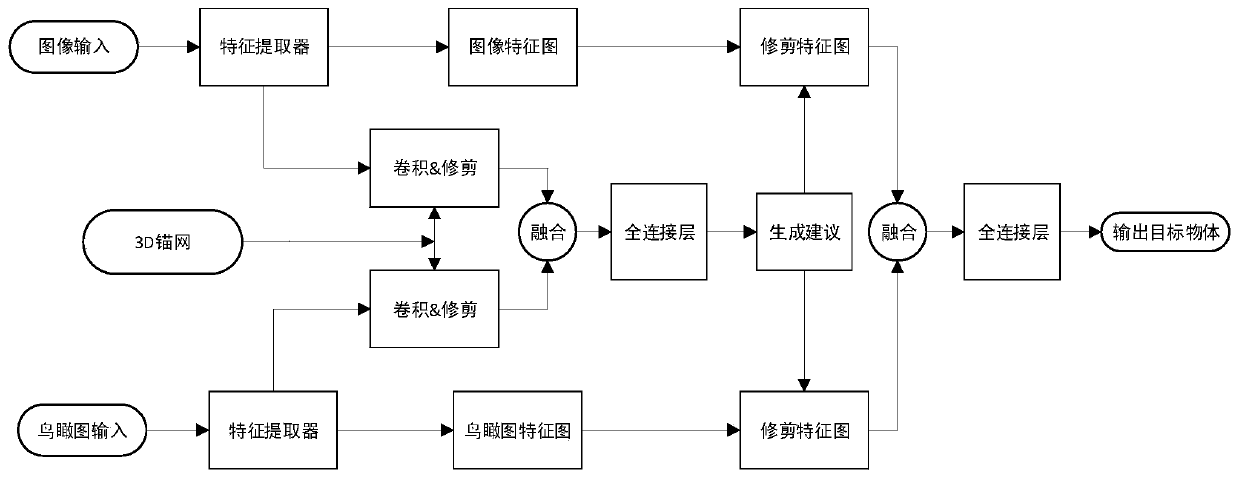

[0012] Refer to Figure 1. The specific steps of the multi-modal adaptive fusion three-dimensional target detection method of the present invention are as follows:

[0013] Step 1. Determine the information associated with each input image generated from the KITTI dataset: the image name, the image's label file, the ground plane equation for the image, the point cloud information, and the camera calibration information. Read the 15 parameters of each label from the file (KITTI dataset format), including the 2D label coordinates (x1, y1, x2, y2) and the 3D label coordinates (tx, ty, tz, h, w, l), that is, the center point coordinates and the height, width, and length. Delete labels as required, for example removing the pedestrian and cyclist labels when training on cars only. Obtain the corresponding ground plane equation (a plane equation of the form aX + bY + cZ = d), the camera calibration parameters (both intrinsic and extrinsic), and the point cloud ([x, ...], [y, ...], [z, ...]). Create a bird's-eye view image. ...
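The label-reading and label-deletion part of Step 1 might be sketched as follows. This is a minimal illustration, not the implementation of the invention: it assumes the standard KITTI object label layout of 15 whitespace-separated fields per line (type, truncated, occluded, alpha, 2D box, dimensions, location, rotation_y), and the names `KittiLabel`, `parse_label_line`, and `filter_labels` are hypothetical. Note that in the file the dimensions (h, w, l) precede the center point (tx, ty, tz).

```python
from dataclasses import dataclass
from typing import Iterable, List, Set, Tuple


@dataclass
class KittiLabel:
    """One object annotation (hypothetical container, not from the patent)."""
    obj_type: str
    truncated: float
    occluded: int
    alpha: float
    box2d: Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels
    dims: Tuple[float, float, float]          # (h, w, l) in meters
    center: Tuple[float, float, float]        # (tx, ty, tz) in camera coordinates
    rotation_y: float


def parse_label_line(line: str) -> KittiLabel:
    """Parse the 15 parameters of a single KITTI label line."""
    fields = line.split()
    if len(fields) < 15:
        raise ValueError(f"expected at least 15 fields, got {len(fields)}")
    v = [float(x) for x in fields[1:15]]  # the 14 numeric fields after the type
    return KittiLabel(
        obj_type=fields[0],
        truncated=v[0],
        occluded=int(v[1]),
        alpha=v[2],
        box2d=(v[3], v[4], v[5], v[6]),
        dims=(v[7], v[8], v[9]),
        center=(v[10], v[11], v[12]),
        rotation_y=v[13],
    )


def filter_labels(lines: Iterable[str], keep_classes: Set[str]) -> List[KittiLabel]:
    """Parse all non-empty lines and keep only the requested classes."""
    labels = [parse_label_line(ln) for ln in lines if ln.strip()]
    return [lb for lb in labels if lb.obj_type in keep_classes]
```

For example, calling `filter_labels(lines, {"Car"})` on the lines of one label file drops the pedestrian and cyclist labels, which corresponds to the cars-only training case mentioned in Step 1.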
