Image bit enhancement method based on deep learning

A deep-learning-based image bit-depth enhancement technique, applied in the field of deep learning, that addresses blurred image details and faint contours as well as the difficulty of model training, while reducing computational complexity and enhancing bit depth.

Examples

Embodiment 1

[0032] This embodiment of the present invention proposes an image bit-depth enhancement method based on deep learning, in which the model is trained by gradient descent on a perceptual loss function [7]. The method includes the following steps:

[0033] 101: Preprocess the high-bit, lossless-quality images in the Sintel database and quantize them to low-bit images;

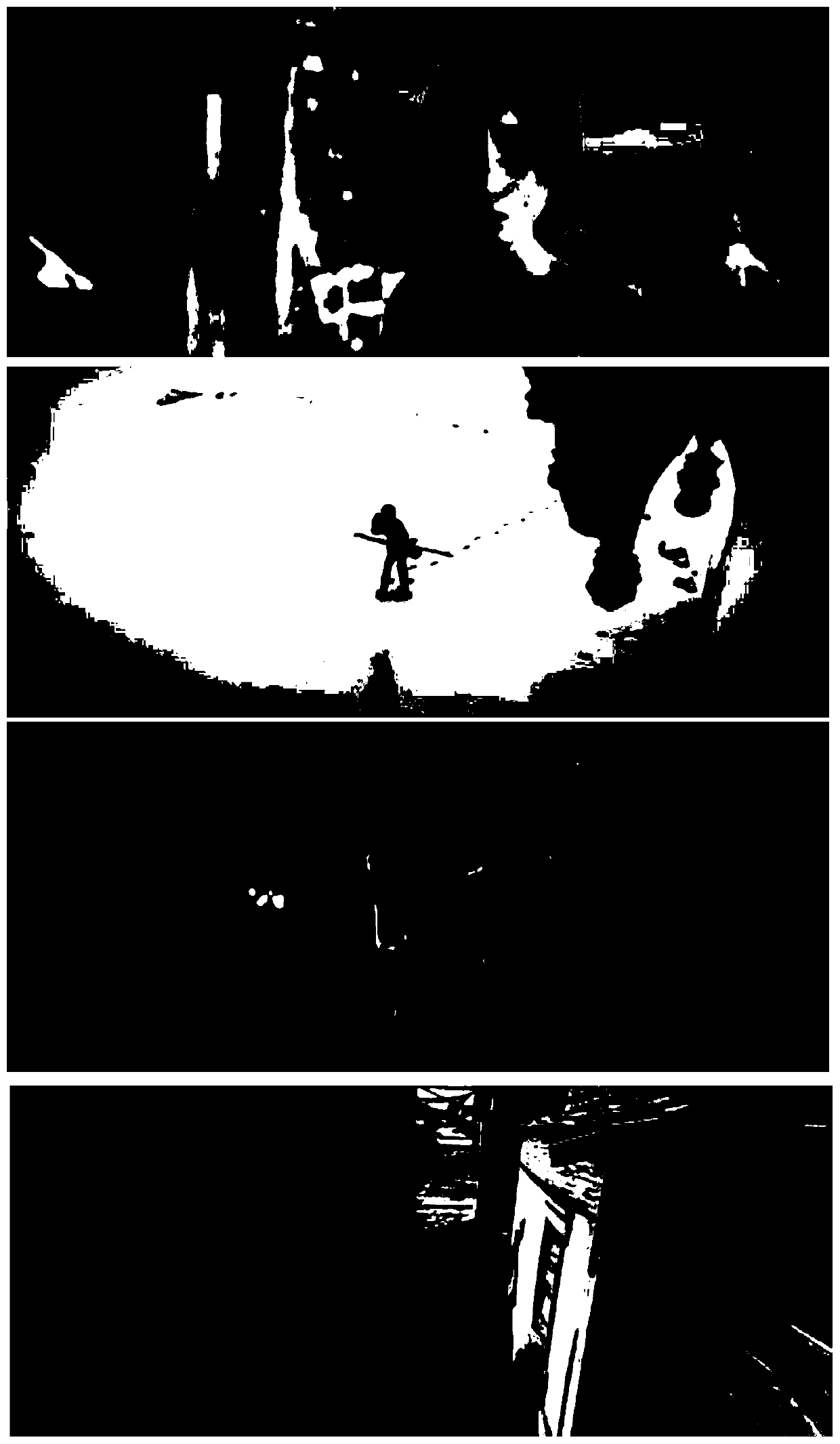

[0034] The Sintel database comes from a lossless animated short film; sample images are shown in Figure 2. The images are preprocessed and used to train the convolutional neural network.

[0035] 102: Design a convolutional neural network based on deep learning, take the quantized low-bit image as input, and use the perceptual loss between the output result and the original high-bit image as a loss function;
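The general shape of a perceptual loss can be sketched as follows: instead of comparing pixels directly, the network output and the original high-bit image are both passed through a fixed feature extractor, and the loss is the mean squared distance between the resulting feature maps. This is only an illustrative sketch. The source does not specify the feature extractor here, so `gradient_features` is a hypothetical stand-in (simple horizontal and vertical differences) for the pretrained network that perceptual losses normally use, and the function names are assumptions.

```python
import numpy as np

def gradient_features(image):
    """Hypothetical stand-in for a pretrained feature extractor.

    Returns horizontal and vertical pixel differences of a 2-D image.
    A real perceptual loss would use feature maps of a pretrained CNN.
    """
    image = np.asarray(image, dtype=np.float64)
    dx = image[:, 1:] - image[:, :-1]   # horizontal differences
    dy = image[1:, :] - image[:-1, :]   # vertical differences
    return [dx, dy]

def perceptual_loss(output, target, feature_fn=gradient_features):
    """Average, over feature maps, of the mean squared feature distance."""
    out_feats = feature_fn(output)
    tgt_feats = feature_fn(target)
    per_map = [np.mean((o - t) ** 2) for o, t in zip(out_feats, tgt_feats)]
    return sum(per_map) / len(per_map)

# Toy example: a smooth ramp versus a checkerboard-like image (assumed data).
target = np.arange(16, dtype=np.float64).reshape(4, 4)
output = target + 3.0 * (np.indices((4, 4)).sum(axis=0) % 2)
loss = perceptual_loss(output, target)
```

Because the loss is computed in feature space, it penalizes structural differences (here, spurious edges) rather than uniform per-pixel offsets; with this stand-in extractor, a constant brightness shift yields zero loss.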

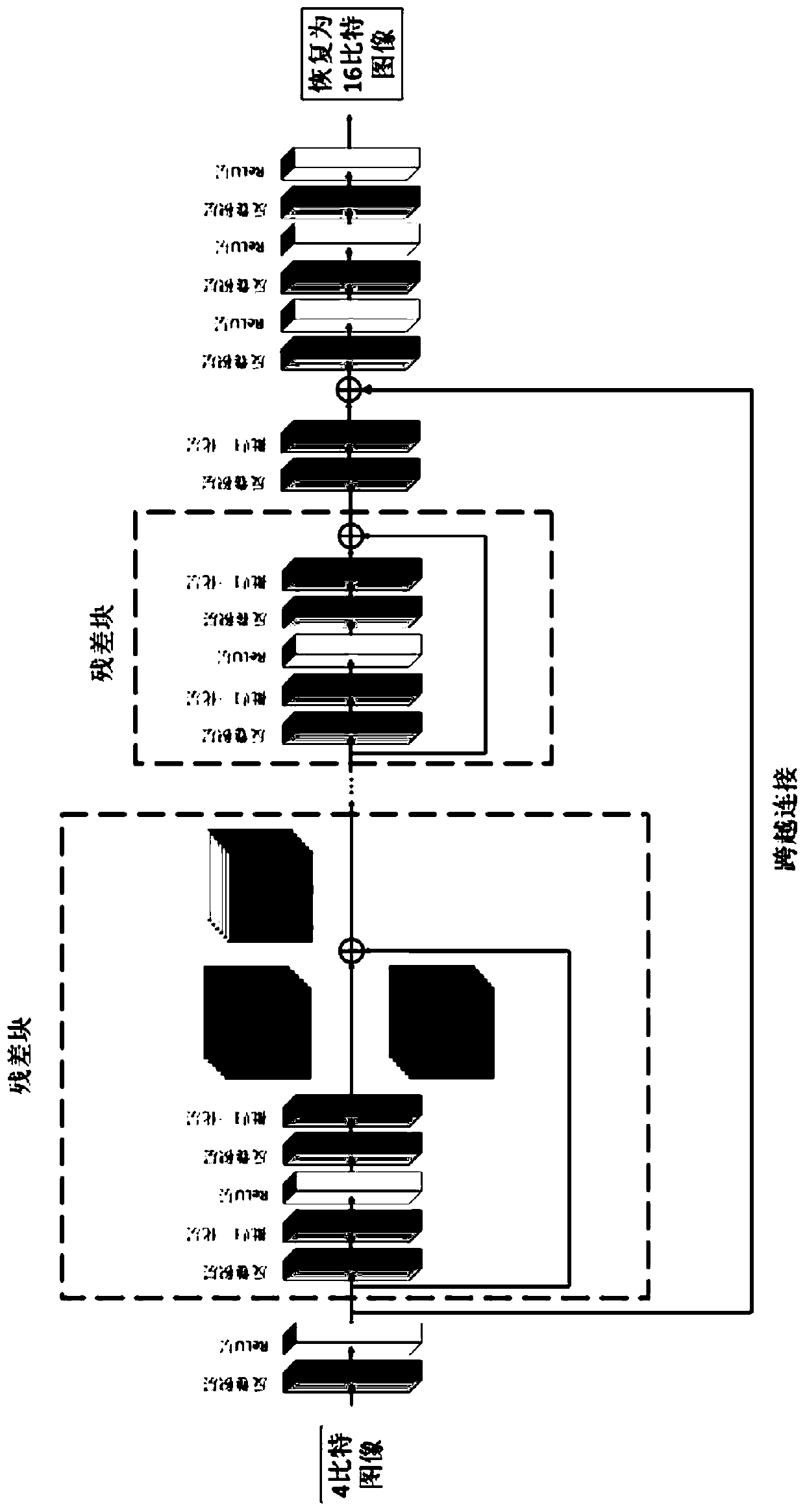

[0036] As shown in the model in Figure 1, the convolutional neural network uses transposed convolutional layers (Transposed Convolutional Layer) [8], and borr...

Embodiment 2

[0041] The scheme of Embodiment 1 is described in more detail below:

[0042] 201: The Sintel database comes from a lossless animated short film; the images are preprocessed and used to train the convolutional neural network;

[0043] The Sintel database contains 21,312 frames of 16-bit images, each 436x1024 pixels in size. The images cover a variety of scenes, including snowy mountains, sky, towns, and caves. To reduce memory usage during training, 1000 images are randomly sampled from the database, cut into 96x96 patches, and stored as numpy arrays. During training, each 16-bit image is quantized to 4 bits and fed to the convolutional neural network.
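The preprocessing in [0043] can be sketched roughly as below. This is a minimal illustration under stated assumptions: the source does not say how the quantization is performed, so the sketch keeps the 4 most significant bits by a right shift; the three-channel layout, the number of patches per frame, and the function names `random_patches` and `quantize` are likewise hypothetical. A synthetic array stands in for a real Sintel frame.

```python
import numpy as np

def random_patches(image, patch_size=96, count=4, rng=None):
    """Cut `count` random patch_size x patch_size crops from an image array."""
    rng = np.random.default_rng() if rng is None else rng
    height, width = image.shape[:2]
    patches = []
    for _ in range(count):
        top = rng.integers(0, height - patch_size + 1)
        left = rng.integers(0, width - patch_size + 1)
        patches.append(image[top:top + patch_size, left:left + patch_size])
    return np.stack(patches)

def quantize(image, high_bits=16, low_bits=4):
    """Keep only the `low_bits` most significant bits (assumed scheme)."""
    return (image >> (high_bits - low_bits)).astype(np.uint8)

# Synthetic stand-in for one 436x1024 16-bit frame (3 channels assumed).
rng = np.random.default_rng(0)
frame = rng.integers(0, 2**16, size=(436, 1024, 3), dtype=np.uint16)

patches_16bit = random_patches(frame, rng=rng)  # training targets
patches_4bit = quantize(patches_16bit)          # network inputs, values 0..15
```

Keeping the 16-bit patches alongside their 4-bit versions gives the input/target pairs needed for training.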

[0044] 202: The convolutional neural network uses transposed convolution and adds skip connections (Skip Connections) between the transposed convolutional layers, and the ...

Embodiment 3

[0052] The evaluation metrics and related bit-depth enhancement algorithms from the domestic and international literature are introduced in detail below, and the effects of the schemes in Embodiments 1 and 2 are evaluated:

[0053] 1000 images randomly selected from the Sintel database are used to train the model. To keep the test results reliable, the test set is randomly selected from the remaining images, so it does not overlap with the training set.
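A disjoint split of this kind can be sketched by shuffling the frame indices once and slicing. The frame count (21,312) and training size (1000) come from the description; the test-set size of 50, the seed, and the function name `split_frames` are assumptions made only for illustration.

```python
import numpy as np

def split_frames(total_frames, train_count, test_count, seed=0):
    """Return disjoint arrays of training and test frame indices."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(total_frames)  # each index appears exactly once
    train_ids = order[:train_count]
    test_ids = order[train_count:train_count + test_count]
    return train_ids, test_ids

# test_count=50 is a hypothetical value; the source does not state it.
train_ids, test_ids = split_frames(total_frames=21312, train_count=1000, test_count=50)
```

Slicing a single permutation guarantees that no test frame was seen during training.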

[0054] This method uses two evaluation metrics to evaluate the generated high-bit images:

[0055] Peak Signal to Noise Ratio (PSNR): PSNR is the most common and widely used objective metric for evaluating the similarity between images. PSNR is based on the differences between corresponding pixels of the two images, that is, it is an image quality measure based on error sensitivity. Since the visual characteristics of the human eye are not taken into account, the objective eva...
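PSNR is conventionally computed as 10·log10(MAX² / MSE), where MSE is the mean squared error between corresponding pixels and MAX is the largest representable pixel value (2^16 − 1 for 16-bit images). The sketch below follows that standard definition. The zero-padding baseline in the example (shifting the 4-bit values back to 16 bits) is not the method of this patent; it is included only to show how the metric would be applied, and the random test patch is synthetic.

```python
import numpy as np

def psnr(reference, reconstructed, bit_depth=16):
    """Peak signal-to-noise ratio in dB between two images of equal shape."""
    reference = np.asarray(reference, dtype=np.float64)
    reconstructed = np.asarray(reconstructed, dtype=np.float64)
    mse = np.mean((reference - reconstructed) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    peak = 2 ** bit_depth - 1
    return 10.0 * np.log10(peak ** 2 / mse)

# Illustration with a synthetic 16-bit patch and a naive zero-padding baseline.
rng = np.random.default_rng(0)
reference = rng.integers(0, 2**16, size=(96, 96), dtype=np.uint16)
low_bit = reference >> 12     # quantize to 4 bits (assumed scheme)
zero_padded = low_bit << 12   # naive expansion back to 16 bits
baseline_psnr = psnr(reference, zero_padded)
```

A higher PSNR means the reconstructed high-bit image is numerically closer to the original.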
