Visual SLAM front-end pose estimation method based on deep learning

A deep learning and computer vision technology applied in the field of visual navigation. It addresses problems such as poor generality, difficult feature detection, and poor robustness, and achieves strong robustness.

Embodiment Construction

[0051] The present invention will be further described below in conjunction with the accompanying drawings and embodiments.

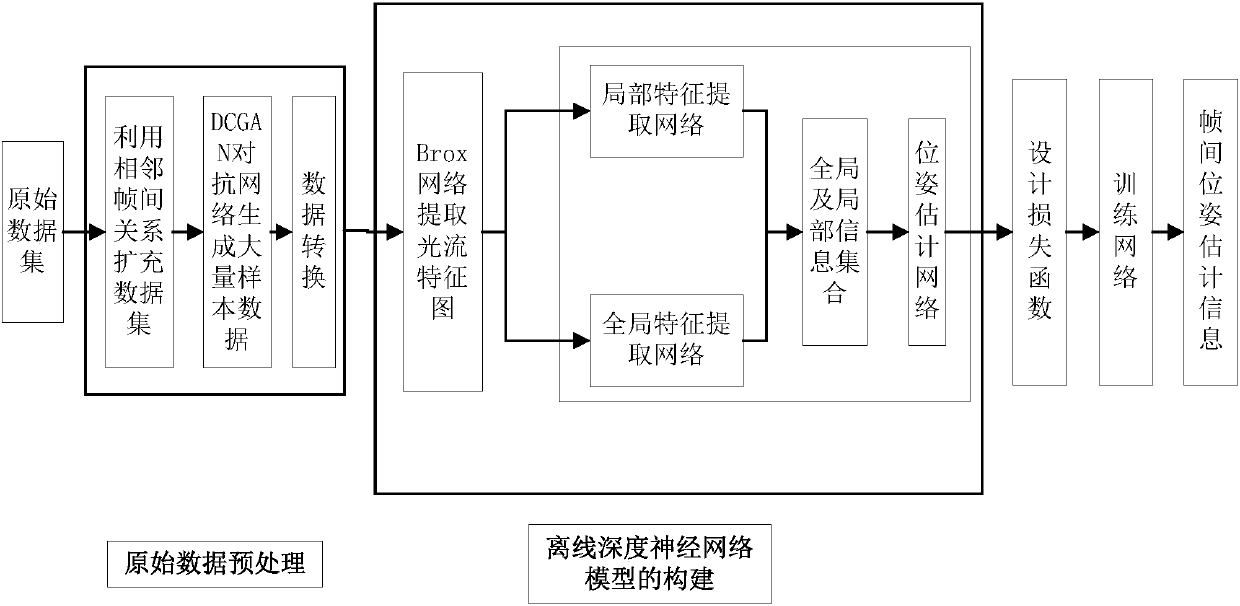

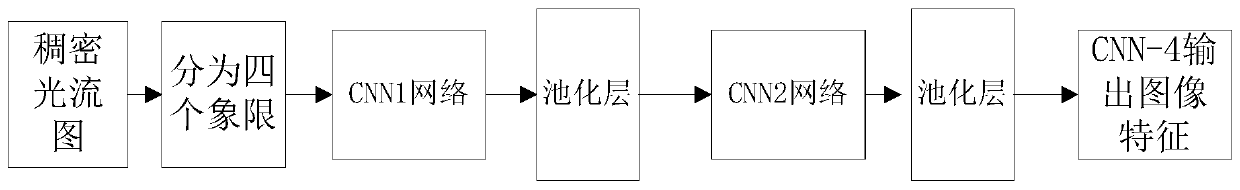

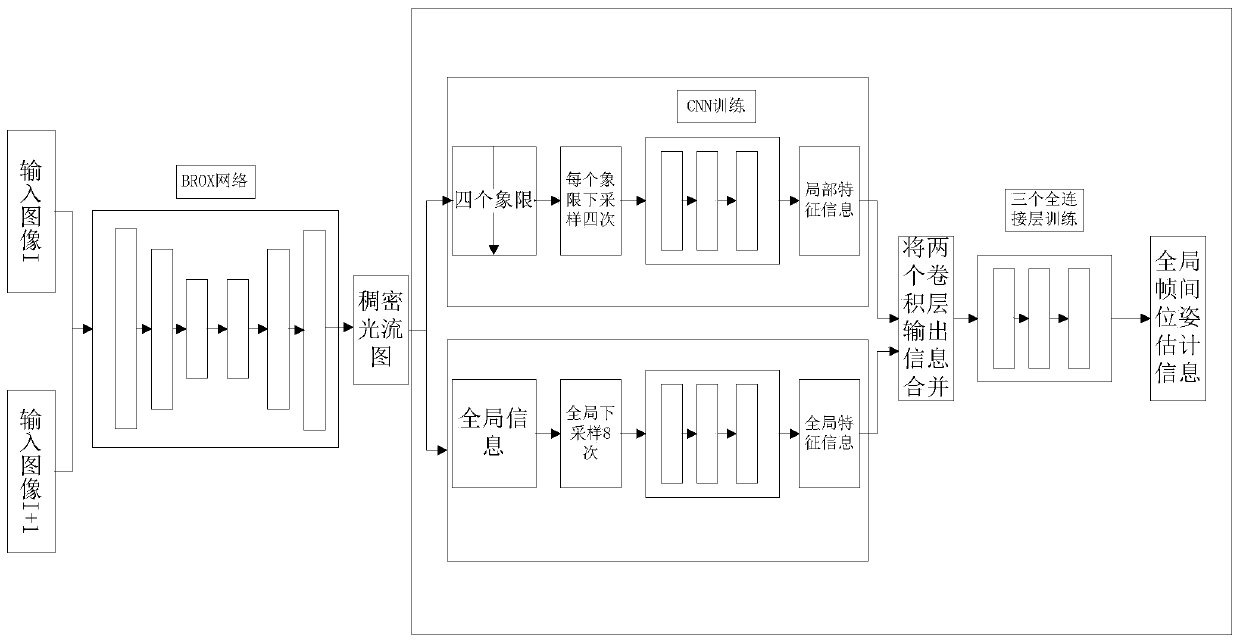

[0052] The method receives consecutive image frames end to end and estimates the pose transformation between frames in real time. First, the original dataset is preprocessed, including data expansion (augmentation) and data conversion. A Brox network is then constructed to extract dense optical flow from the consecutive input frames. The extracted optical flow map is fed into two network branches for feature extraction: one branch uses global information to extract high-dimensional features, while the other divides the optical flow map into 4 sub-images and down-samples each of them to obtain image features. Finally, the features learned by the two branches are fused, and the cascaded fully connected network at the end performs pose estimation to obtain the pose between two adjacent frames. The ne...
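The two-branch pipeline described in [0052] can be sketched as follows. This is a minimal NumPy sketch under stated assumptions, not the patented network. The source gives no layer configurations, so plain average pooling stands in for both CNN feature extractors. Fusion is assumed to be concatenation, the cascaded fully connected network is assumed to be two layers with a ReLU, and the output is assumed to be a 6-DoF relative pose (three translations, three rotations). All function names and sizes (a 64 x 64 flow map, pooling factors 8 and 4, 128 hidden units) are hypothetical, and the weights are random rather than trained.

```python
import numpy as np


def avg_pool(x: np.ndarray, k: int) -> np.ndarray:
    """Downsample an (H, W, C) array with non-overlapping k x k average pooling."""
    h, w, c = x.shape
    h2, w2 = h // k, w // k
    x = x[: h2 * k, : w2 * k]  # crop so both sides divide evenly by k
    return x.reshape(h2, k, w2, k, c).mean(axis=(1, 3))


def split_into_quadrants(flow: np.ndarray) -> list:
    """Split an (H, W, 2) flow map into 4 sub-images: TL, TR, BL, BR."""
    h, w, _ = flow.shape
    hh, hw = h // 2, w // 2
    return [
        flow[:hh, :hw], flow[:hh, hw : 2 * hw],
        flow[hh : 2 * hh, :hw], flow[hh : 2 * hh, hw : 2 * hw],
    ]


def global_branch(flow: np.ndarray, k: int = 8) -> np.ndarray:
    """Hypothetical stand-in for the global branch: pool the whole flow map."""
    return avg_pool(flow, k).ravel()


def local_branch(flow: np.ndarray, k: int = 4) -> np.ndarray:
    """Down-sample each of the 4 sub-images separately, then concatenate."""
    return np.concatenate([avg_pool(q, k).ravel() for q in split_into_quadrants(flow)])


def relu(x: np.ndarray) -> np.ndarray:
    return np.maximum(x, 0.0)


def estimate_pose(flow: np.ndarray, params: dict) -> np.ndarray:
    """Fuse both branches by concatenation (assumed), then apply two cascaded FC layers."""
    fused = np.concatenate([global_branch(flow), local_branch(flow)])
    hidden = relu(params["W1"] @ fused + params["b1"])
    return params["W2"] @ hidden + params["b2"]  # assumed 6-DoF relative pose


def init_params(in_dim: int, hidden: int = 128, out_dim: int = 6, seed: int = 0) -> dict:
    """Random, untrained weights; a real model would learn these."""
    rng = np.random.default_rng(seed)
    return {
        "W1": rng.normal(0.0, 0.01, (hidden, in_dim)), "b1": np.zeros(hidden),
        "W2": rng.normal(0.0, 0.01, (out_dim, hidden)), "b2": np.zeros(out_dim),
    }


if __name__ == "__main__":
    # Placeholder for a dense flow map (u, v per pixel) from the flow network.
    flow = np.random.default_rng(1).normal(size=(64, 64, 2))
    in_dim = global_branch(flow).size + local_branch(flow).size  # 128 + 512 = 640
    pose = estimate_pose(flow, init_params(in_dim))
    print(pose.shape)  # (6,)
```

Splitting the map into quadrants before pooling keeps some spatial layout (which region of the image moved how), which a single global pooling would average away; concatenating both feature vectors lets the regression layers use coarse global motion and per-region motion together.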
