Spark-based Cassandra data import method, device, equipment and medium

A data import technology applied in the field of data processing, which distributes data evenly, reduces the impact on the Cassandra cluster, and reduces the number of small files.


Examples

Embodiment 1

[0050] Embodiment 1 provides a Spark-based Cassandra data import method that divides the data to be imported evenly across partitions, which prevents data imbalance and data skew and reduces the probability of memory overflow.

[0051] Spark is a unified analytics engine for large-scale data processing. It provides a comprehensive, unified framework for meeting big data processing needs across datasets and data sources of different natures (text data, graph data, etc.) and different kinds (batch data or real-time streaming data).

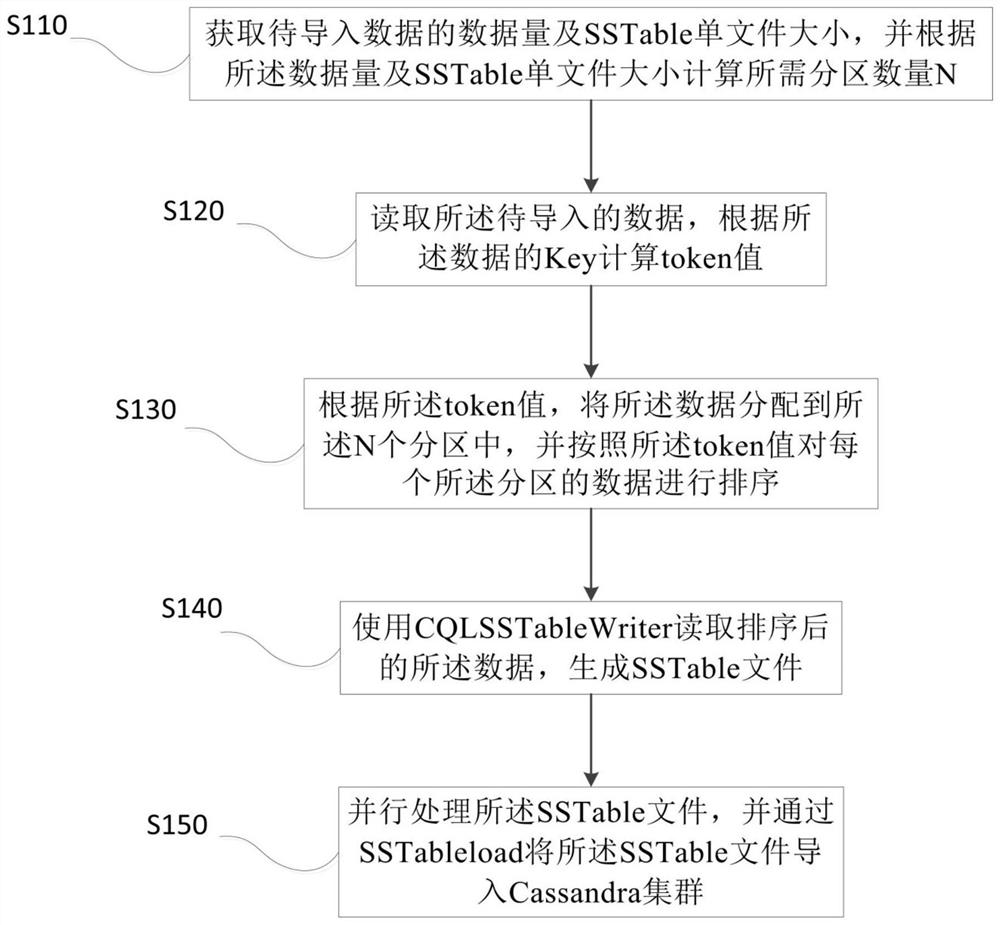

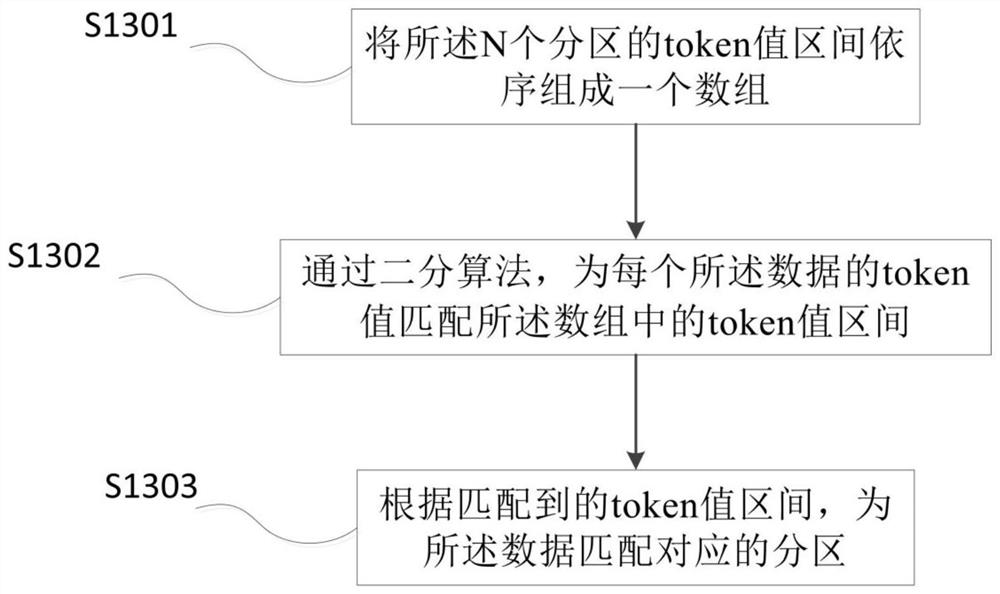

[0052] As shown in Figure 1, a Spark-based Cassandra data import method includes the following steps:

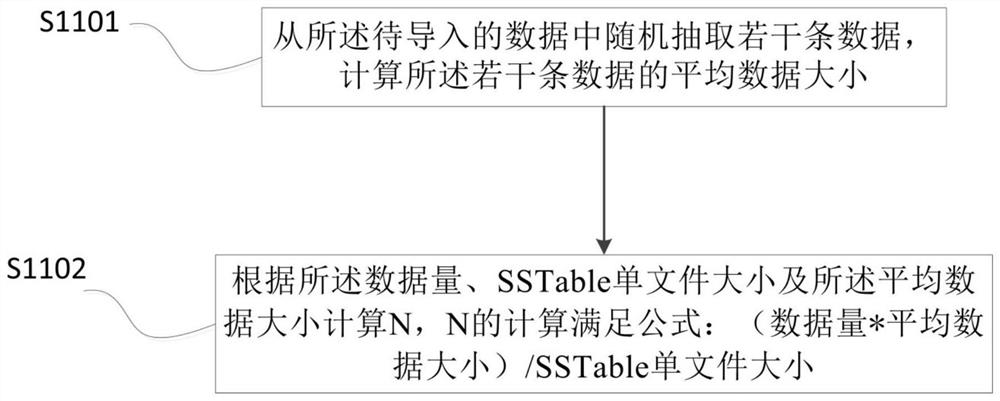

[0053] S110: obtain the data volume of the data to be imported and the single SSTable file size, and calculate the required number of partitions N from the data volume and the single SSTable file size;
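S110 can be sketched as a ceiling division, assuming N is chosen so that each partition yields roughly one SSTable file of the target size. The exact formula used by the embodiment is not shown in this excerpt, and the helper name `partition_count` is hypothetical.

```python
def partition_count(data_volume_bytes: int, sstable_file_bytes: int) -> int:
    """Hypothetical helper for S110: the number of partitions N.

    Assumption: each partition should produce roughly one SSTable file of
    the target size, so N = ceil(data volume / single SSTable file size).
    """
    if data_volume_bytes < 0 or sstable_file_bytes <= 0:
        raise ValueError("data volume must be >= 0 and file size must be > 0")
    # Integer ceiling division avoids float rounding on large byte counts;
    # at least one partition is always used.
    return max(1, -(-data_volume_bytes // sstable_file_bytes))


# Example: 100 GiB of data with a 256 MiB target SSTable file size.
print(partition_count(100 * 1024**3, 256 * 1024**2))  # 400
```

Rounding up rather than down keeps each partition at or below the target file size, which matches the stated goal of avoiding oversized partitions while not producing many small files.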

[0054] The SSTable in S110 is the basic storage unit of Cassandra, and the size of a single SSTable file is se...

Embodiment 2

[0081] Embodiment 2 builds on Embodiment 1 and mainly improves the parallel processing.

[0082] After Spark completes the partition interval calculation and the shuffle sorting within partitions (that is, after steps S110 to S130), importing the data into Cassandra directly with CQLSSTableWriter and SSTableLoader makes the parallelism and traffic difficult to control, which degrades the performance of the Cassandra cluster.

[0083] Therefore, in this embodiment, after the SSTable files are generated in step S140 of Embodiment 1, an additional step copies them to a distributed file system and records the copy paths, so that the degree of parallelism can be controlled.

[0084] In this embodiment, HDFS is used as the distributed file system.

[0085] As shown in Figure 4, the process includes the following steps:

[0086] S210: calculate the degree of parallelism M according to the number of Cassandra nodes;
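The rule for deriving M from the node count is truncated in this excerpt, so the sketch below takes M as an input and shows one way to cap concurrency when loading the recorded SSTable paths: a thread pool with M workers. The function name and the `load_fn` callback are hypothetical; `load_fn` stands in for whatever actually streams one batch of SSTable files into the cluster (for instance, a wrapper around SSTableLoader).

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Sequence, TypeVar

R = TypeVar("R")


def import_with_bounded_parallelism(
    sstable_paths: Sequence[str],
    m: int,
    load_fn: Callable[[str], R],
) -> list[R]:
    """Apply the hypothetical load_fn to each recorded SSTable path, with at
    most m loads running at the same time.

    Returns load_fn's results in the same order as sstable_paths.
    """
    if m < 1:
        raise ValueError("parallelism M must be at least 1")
    # The pool never runs more than m worker threads, so at most m imports
    # hit the cluster concurrently; map() preserves input order and
    # re-raises the first exception raised by load_fn.
    with ThreadPoolExecutor(max_workers=m) as pool:
        return list(pool.map(load_fn, sstable_paths))
```

Recording the copy paths first (step [0083]) is what makes this possible: the full list of work items is known before any import starts, so the scheduler can decide how many to run at once instead of letting every Spark task push data as soon as its file is written.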

[0087] Cass...

Embodiment 3

[0096] Embodiment 3 discloses a device corresponding to the Spark-based Cassandra data import method of the above embodiments, namely its virtual device structure. As shown in Figure 5, the device includes:

[0097] The partition calculation module 310 is used to obtain the data volume and the single SSTable file size, and to calculate the required number of partitions N from the data volume and the single SSTable file size;

[0098] The partition allocation module 320 is used to read the data, calculate a token value from the key of each record, allocate the data to the N partitions according to the token value, and sort the data within each partition;
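Module 320 can be sketched as token-range partitioning followed by a per-partition sort. This is a minimal, Spark-free sketch under stated assumptions: the token function is a stand-in (an MD5 digest truncated to a signed 64-bit integer), not Cassandra's Murmur3Partitioner; the token ring is split into N equal-width, contiguous ranges; and rows are sorted by (token, key). All function names are hypothetical. In Spark itself, a partition-then-sort step of this kind is what `RDD.repartitionAndSortWithinPartitions` provides when given a custom partition function.

```python
import hashlib
from typing import Iterable

MIN_TOKEN = -(2**63)  # lower bound of a signed 64-bit token ring
RING_SIZE = 2**64     # number of distinct 64-bit tokens

Row = tuple[int, str, object]  # (token, key, value)


def token_of(key: str) -> int:
    """Stand-in token function, NOT Cassandra's Murmur3Partitioner.

    Interprets the first 8 bytes of an MD5 digest as a signed 64-bit
    integer, which only mimics the range of Murmur3 tokens.
    """
    digest = hashlib.md5(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big", signed=True)


def partition_index(token: int, n: int) -> int:
    """Map a token to one of n equal-width, contiguous token ranges."""
    return (token - MIN_TOKEN) * n // RING_SIZE


def allocate_and_sort(rows: Iterable[tuple[str, object]], n: int) -> list[list[Row]]:
    """Assign each (key, value) row to a partition by its token, then sort
    every partition by (token, key) so rows are in token order."""
    partitions: list[list[Row]] = [[] for _ in range(n)]
    for key, value in rows:
        tok = token_of(key)
        partitions[partition_index(tok, n)].append((tok, key, value))
    for part in partitions:
        # Sort only on token and key; values need not be comparable.
        part.sort(key=lambda r: (r[0], r[1]))
    return partitions


parts = allocate_and_sort([(f"user{i}", i) for i in range(10_000)], 4)
print([len(p) for p in parts])  # four roughly equal counts
```

Because hashed tokens are close to uniformly distributed, equal-width ranges receive roughly equal row counts, which is the anti-skew property the embodiment aims for; contiguous ranges also mean the SSTable generated from each partition covers a disjoint token interval. Skew in row size is not addressed by this sketch.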

[0099] The file generation module 330 is used to read the sorted data and generate SSTable files with CQLSSTableWriter;

[0100] The file import module 340 is configured to import the SSTable files into the Cassandra cluster through SSTableLoader.
