Three-dimensional laser mapping method and system

A three-dimensional laser mapping technology, applied in 3D modeling, image analysis, image data processing, and related fields. It addresses the problems of low positioning accuracy and overly complex methods, achieving high positioning accuracy with a simple algorithm and improving robustness and accuracy.

Examples

Embodiment 1

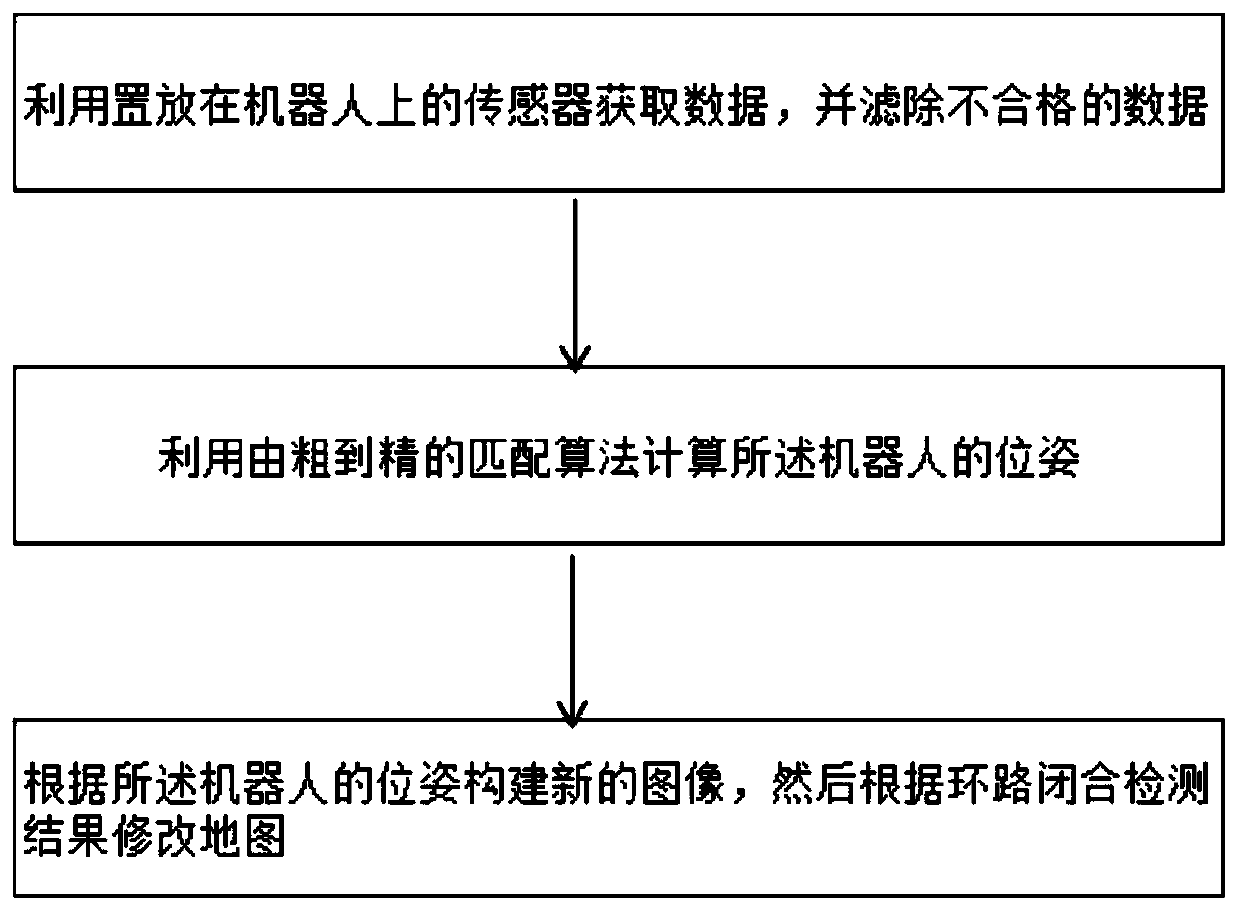

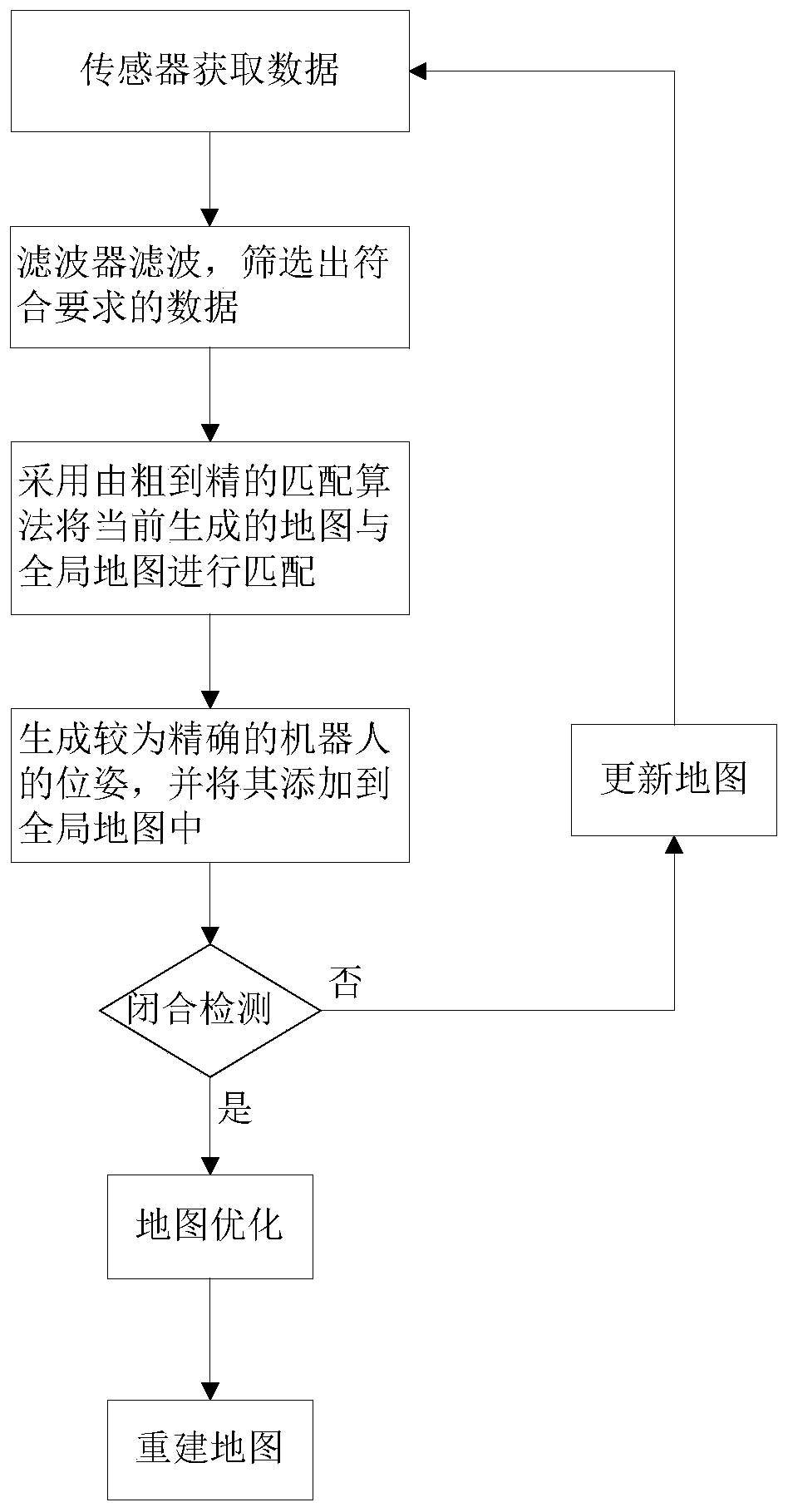

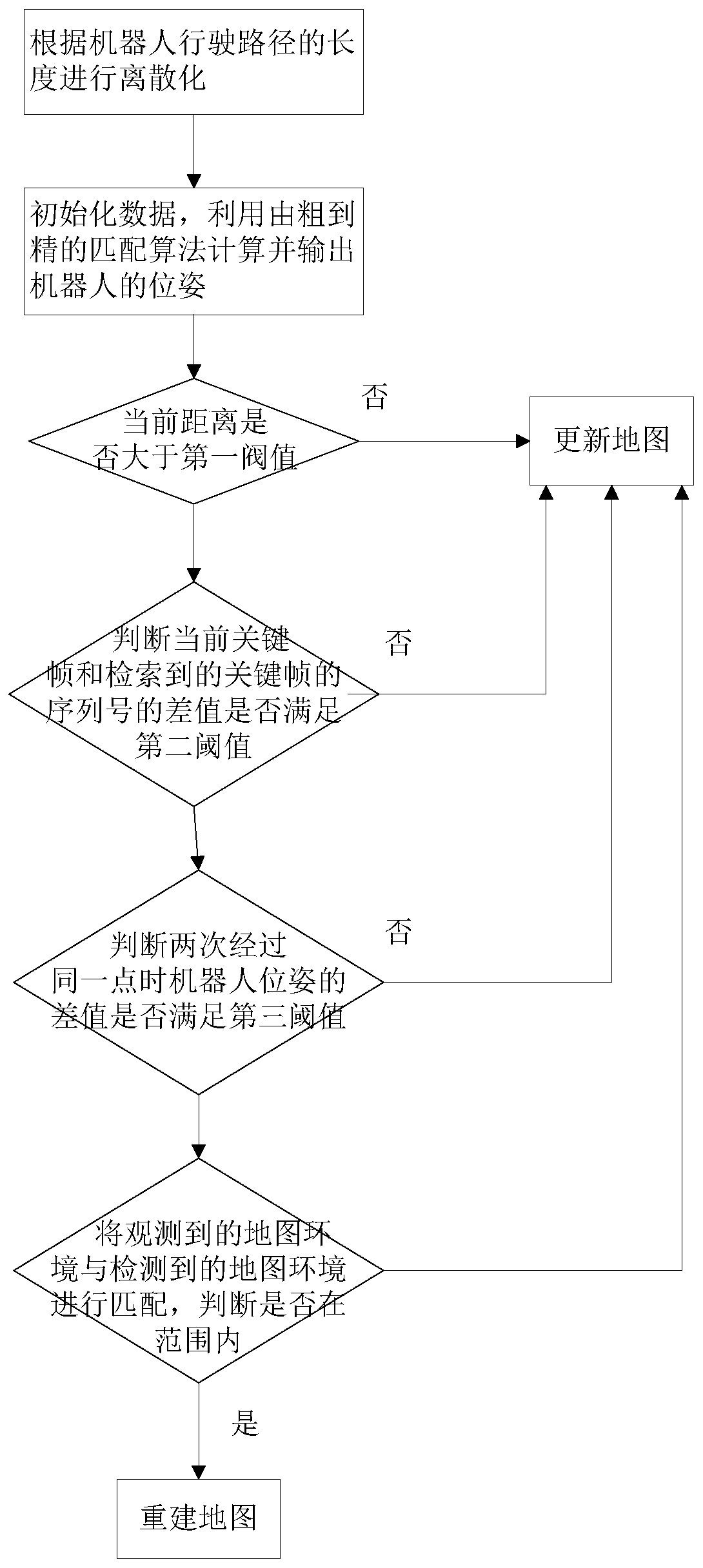

[0032] As shown in Figure 1 and Figure 2, this embodiment provides a three-dimensional laser mapping method, which includes the following steps. Step S1: acquire data using the sensor mounted on the robot, and filter out unqualified data. Step S2: calculate the pose of the robot using a coarse-to-fine matching algorithm, which includes: describing Q_k with a K-D tree; for each laser point in the current frame's point cloud converted to the world coordinate system at time k+1, searching for the closest point in Q_k and establishing a pair; establishing a nonlinear equation whose goal is to minimize the distance over all pairs; and solving this nonlinear optimization problem with the L-M (Levenberg-Marquardt) method to obtain T_opt = (R, t). The pose of the robot at time k+1 is then computed from T_opt. Here Q_k denotes the point cloud map established at time k; the remaining quantities are the point cloud map converted from the current frame to the world coordinate system at time k+1 and the pose of the robot in the world coordinate system at time ...
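The pairing step of Step S2 (describe Q_k with a K-D tree, then find the closest map point for each laser point already converted to the world frame) can be sketched in plain Python as follows. This is a minimal illustration, not the patent's implementation: it assumes points are 3-tuples, and the names `build_kdtree`, `nearest`, `pair_points`, and the optional `max_dist` outlier gate are hypothetical additions not described in the source.

```python
import math
from typing import List, Optional, Tuple

Point = Tuple[float, float, float]


class KDNode:
    """One node of a 3-D K-D tree; it splits space on `axis` at `point`."""

    def __init__(self, point: Point, axis: int,
                 left: Optional["KDNode"], right: Optional["KDNode"]):
        self.point = point
        self.axis = axis
        self.left = left
        self.right = right


def build_kdtree(points: List[Point], depth: int = 0) -> Optional[KDNode]:
    """Recursively build the tree, splitting at the median along x, y, z in turn."""
    if not points:
        return None
    axis = depth % 3
    pts = sorted(points, key=lambda p: p[axis])
    mid = len(pts) // 2
    return KDNode(pts[mid], axis,
                  build_kdtree(pts[:mid], depth + 1),
                  build_kdtree(pts[mid + 1:], depth + 1))


def nearest(node: Optional[KDNode], target: Point,
            best: Optional[Tuple[float, Point]] = None) -> Optional[Tuple[float, Point]]:
    """Return (squared distance, point) of the tree point closest to `target`."""
    if node is None:
        return best
    d2 = sum((a - b) ** 2 for a, b in zip(node.point, target))
    if best is None or d2 < best[0]:
        best = (d2, node.point)
    diff = target[node.axis] - node.point[node.axis]
    near, far = (node.left, node.right) if diff < 0 else (node.right, node.left)
    best = nearest(near, target, best)
    # Visit the far side only if the splitting plane is closer than the current best.
    if diff * diff < best[0]:
        best = nearest(far, target, best)
    return best


def pair_points(map_points: List[Point], scan_world: List[Point],
                max_dist: float = math.inf) -> List[Tuple[Point, Point]]:
    """Pair each world-frame scan point with its nearest map point.

    `max_dist` is an assumed outlier gate (hypothetical, not from the patent).
    """
    tree = build_kdtree(map_points)
    pairs = []
    for p in scan_world:
        hit = nearest(tree, p)
        if hit is not None and math.sqrt(hit[0]) <= max_dist:
            pairs.append((p, hit[1]))
    return pairs


if __name__ == "__main__":
    Q_k = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
    scan = [(0.9, 0.1, 0.0), (0.1, 0.1, 0.95)]
    print(pair_points(Q_k, scan))
    # [((0.9, 0.1, 0.0), (1.0, 0.0, 0.0)), ((0.1, 0.1, 0.95), (0.0, 0.0, 1.0))]
```

The tree is rebuilt inside `pair_points` only to keep the sketch short; since Q_k is fixed while a frame is matched, a real system would build the tree once per map update and reuse it for every query.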

Embodiment 2

[0069] Based on the same inventive concept, this embodiment provides a 3D laser mapping system. The principle by which it solves the problem is similar to that of the 3D laser mapping method described above, so repeated descriptions are omitted here.

[0070] The three-dimensional laser mapping system described in this embodiment includes:

[0071] The acquisition and filtering module is used to acquire data using sensors placed on the robot and filter out unqualified data;

[0072] The calculation module is used to calculate the pose of the robot using a coarse-to-fine matching algorithm, which includes: describing Q_k with a K-D tree; for each laser point in the current frame's point cloud converted to the world coordinate system at time k+1, searching for the closest point in Q_k and establishing a pair; establishing a nonlinear equation whose goal is to minimize the distance over all pairs; and solving this nonlinear optimization problem with the L-M method to obtain T_opt = (R, t); then the pose of the robot at ...
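The nonlinear optimization described above (minimize the distance over all pairs, solved with the L-M method to give T_opt = (R, t)) can be illustrated with a small pure-Python Levenberg-Marquardt loop. This is a sketch under stated assumptions, not the patent's formulation: it assumes a point-to-point residual R p_i + t - q_i for each pair, a rotation-vector parameterization of R, a forward-difference Jacobian, and a simple damping schedule; the names `rodrigues`, `residuals`, `solve`, and `lm_align` are hypothetical. It also assumes at least three non-collinear pairs so the normal equations are solvable.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Tuple[Vec3, Vec3, Vec3]
Pair = Tuple[Vec3, Vec3]  # (scan point p in world frame, matched map point q)


def rodrigues(w: Sequence[float]) -> Mat3:
    """Rotation matrix for a rotation vector w (unit axis times angle in radians)."""
    theta = math.sqrt(sum(wi * wi for wi in w))
    if theta < 1e-12:
        return ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
    kx, ky, kz = (wi / theta for wi in w)
    c, s, v = math.cos(theta), math.sin(theta), 1.0 - math.cos(theta)
    return ((c + kx * kx * v, kx * ky * v - kz * s, kx * kz * v + ky * s),
            (ky * kx * v + kz * s, c + ky * ky * v, ky * kz * v - kx * s),
            (kz * kx * v - ky * s, kz * ky * v + kx * s, c + kz * kz * v))


def residuals(x: Sequence[float], pairs: Sequence[Pair]) -> List[float]:
    """Stacked residuals R(w) p + t - q for every pair; x = (wx, wy, wz, tx, ty, tz)."""
    R = rodrigues(x[:3])
    out: List[float] = []
    for p, q in pairs:
        for i in range(3):
            out.append(sum(R[i][j] * p[j] for j in range(3)) + x[3 + i] - q[i])
    return out


def solve(A: List[List[float]], b: List[float]) -> List[float]:
    """Gaussian elimination with partial pivoting for a small dense system."""
    n = len(b)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def lm_align(pairs: Sequence[Pair], iters: int = 50, lam: float = 1e-3,
             h: float = 1e-6) -> Tuple[Mat3, Vec3]:
    """Levenberg-Marquardt estimate of (R, t) minimizing the summed squared pair distances."""
    x = [0.0] * 6  # start from the identity transform
    r = residuals(x, pairs)
    cost = sum(v * v for v in r)
    for _ in range(iters):
        # Forward-difference Jacobian, stored column by column (one column per parameter).
        cols = []
        for k in range(6):
            xk = list(x)
            xk[k] += h
            cols.append([(a - b) / h for a, b in zip(residuals(xk, pairs), r)])
        jtj = [[sum(a * b for a, b in zip(cols[i], cols[j])) for j in range(6)] for i in range(6)]
        jtr = [sum(a * b for a, b in zip(cols[i], r)) for i in range(6)]
        # Damped normal equations (Marquardt scaling): (JtJ + lam * diag(JtJ)) delta = -Jt r.
        damped = [[jtj[i][j] * (1.0 + lam if i == j else 1.0) for j in range(6)] for i in range(6)]
        delta = solve(damped, [-g for g in jtr])
        x_new = [a + d for a, d in zip(x, delta)]
        r_new = residuals(x_new, pairs)
        cost_new = sum(v * v for v in r_new)
        if cost_new < cost:
            # Step reduced the cost: accept it and move toward Gauss-Newton.
            x, r, cost, lam = x_new, r_new, cost_new, lam * 0.1
            if max(abs(d) for d in delta) < 1e-12:
                break
        else:
            # Step increased the cost: reject it and move toward gradient descent.
            lam *= 10.0
    return rodrigues(x[:3]), (x[3], x[4], x[5])


if __name__ == "__main__":
    R_true, t_true = rodrigues((0.0, 0.0, 0.2)), (0.3, -0.1, 0.05)
    scan = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
    pairs = [(p, tuple(sum(R_true[i][j] * p[j] for j in range(3)) + t_true[i] for i in range(3)))
             for p in scan]
    R_opt, t_opt = lm_align(pairs)
    print(t_opt)  # should be close to t_true
```

The damping factor lets each iteration blend a Gauss-Newton step (small `lam`) with a cautious gradient-descent step (large `lam`), which is the usual reason L-M is chosen for this kind of pose fit.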
