Cloud deep neural network optimization method based on CPU and FPGA cooperative computing

A deep neural network optimization method, applied in the field of computer architecture design, that can solve the problems of high data communication overhead, poor flexibility, and low cost-performance, and that achieves reduced power consumption, improved performance, and low cost.

Embodiment Construction

[0019] In order to clearly illustrate the technical features of this solution, the present invention is described in detail below through specific embodiments and with reference to the accompanying drawings. The following disclosure provides many different embodiments or examples for implementing different structures of the present invention. Descriptions of well-known components, processing techniques, and processes are omitted to avoid unnecessarily limiting the present invention.

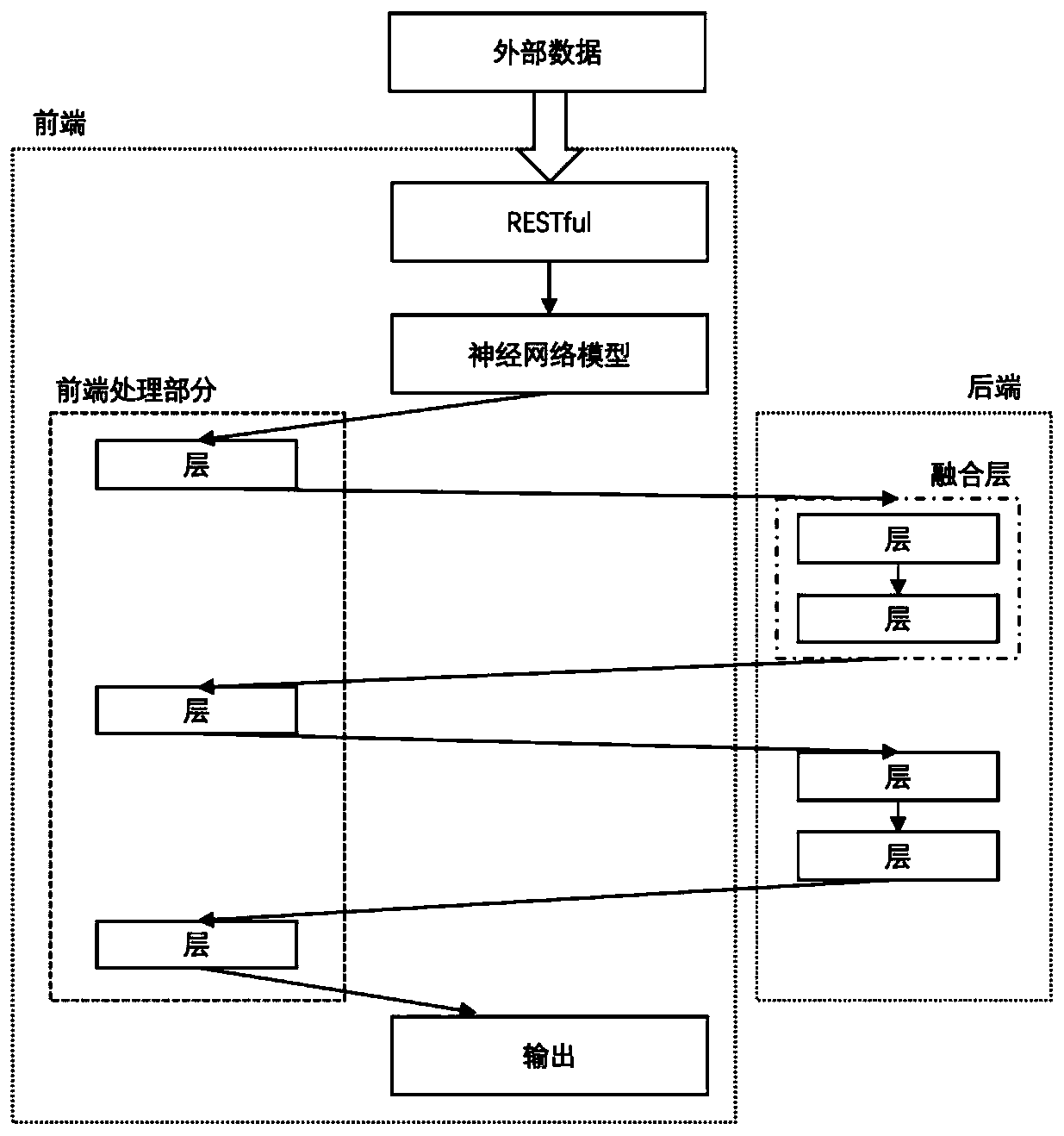

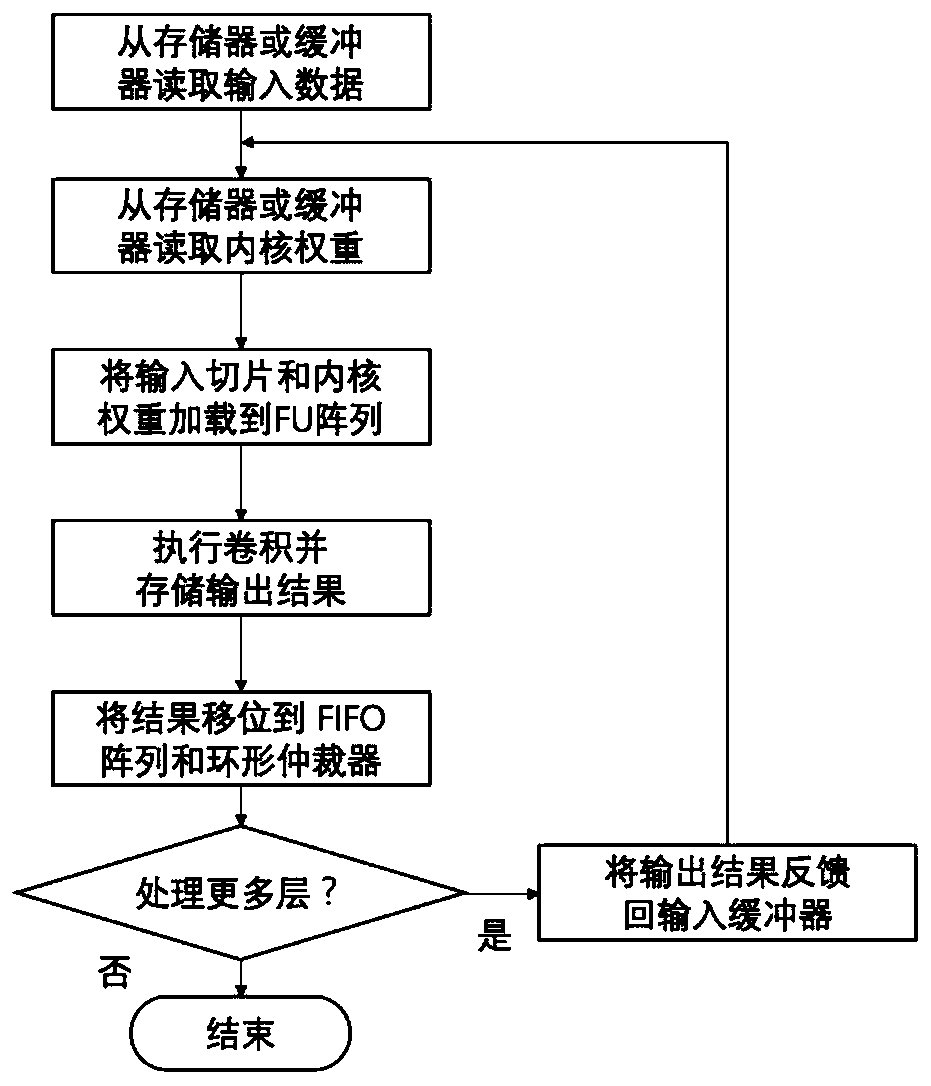

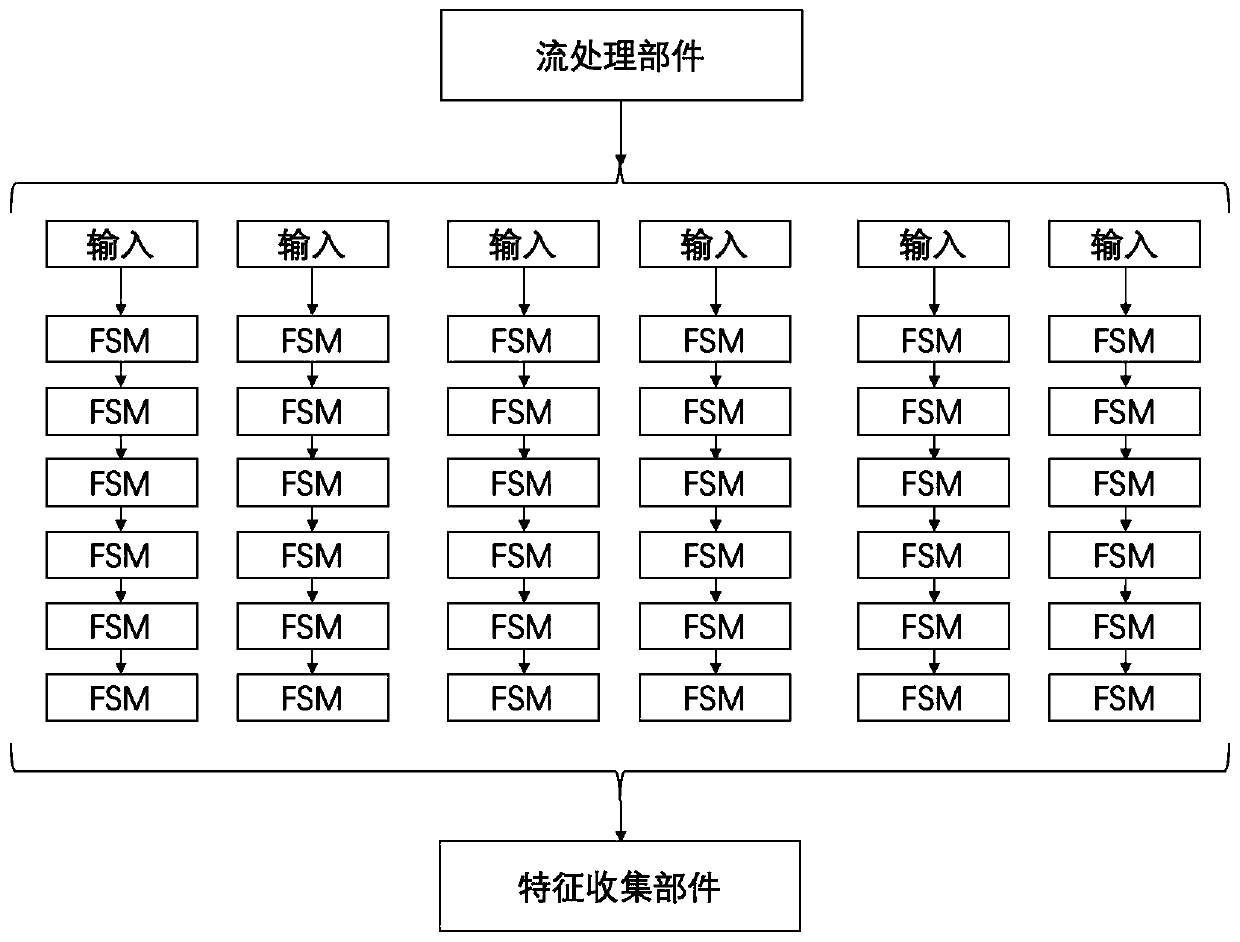

[0020] The present invention provides an optimized method for implementing a deep neural network on a server component. The server component comprises a host component having a CPU and a hardware acceleration component connected to the host component, and the deep neural network comprises a plurality of layers. The method includes dividing the deep neural network into two parts suited, respectively, to the front end and the rear end. The data received by the front end is in the form of a data stream, and the DDR shuttles b...
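
The front-end/rear-end division described above can be illustrated with a minimal sketch. This is not the patented implementation: the helper names (`split_layers`, `front_end`, `back_end`), the toy layers, and the split index are all hypothetical, and the sketch deliberately does not assume which device (CPU or hardware accelerator) hosts which part. It only shows an ordered list of layers being divided in two, with the front part consuming its input as a stream and handing intermediate results to the rear part.

```python
from typing import Callable, Iterable, Iterator, List, Tuple

# A "layer" here is just a function on a vector; real layers are an assumption-free stand-in.
Layer = Callable[[List[float]], List[float]]


def split_layers(layers: List[Layer], split_at: int) -> Tuple[List[Layer], List[Layer]]:
    """Divide an ordered list of layers into a front part and a rear part."""
    if not 0 <= split_at <= len(layers):
        raise ValueError("split_at must lie between 0 and len(layers)")
    return layers[:split_at], layers[split_at:]


def run_part(part: List[Layer], x: List[float]) -> List[float]:
    """Apply the layers of one part in order."""
    for layer in part:
        x = layer(x)
    return x


def front_end(part: List[Layer], stream: Iterable[List[float]]) -> Iterator[List[float]]:
    """Consume inputs one at a time (as a stream) and yield intermediate results."""
    for sample in stream:
        yield run_part(part, sample)


def back_end(part: List[Layer], intermediates: Iterable[List[float]]) -> List[List[float]]:
    """Finish the computation on each intermediate result."""
    return [run_part(part, t) for t in intermediates]


# Hypothetical toy layers, chosen only so the result is easy to check by hand.
def scale(v: List[float]) -> List[float]:
    return [2.0 * a for a in v]


def relu(v: List[float]) -> List[float]:
    return [max(0.0, a) for a in v]


def total(v: List[float]) -> List[float]:
    return [sum(v)]


layers = [scale, relu, total]
front, rear = split_layers(layers, 2)  # split index is an arbitrary choice
inputs = [[1.0, -2.0], [3.0, 4.0]]
outputs = back_end(rear, front_end(front, iter(inputs)))
unsplit = [run_part(layers, x) for x in inputs]
```

Because `front_end` is a generator, the rear part pulls intermediate results one sample at a time instead of waiting for the whole batch. The split network gives the same results as running all layers in sequence (`outputs == unsplit`).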
