Virtual experiment system and method based on multi-modal interaction

Virtual experiment and multi-modal technology, applied in the field of virtual experiments, addresses the problem of inefficient virtual-real interaction.

Examples

Embodiment 1

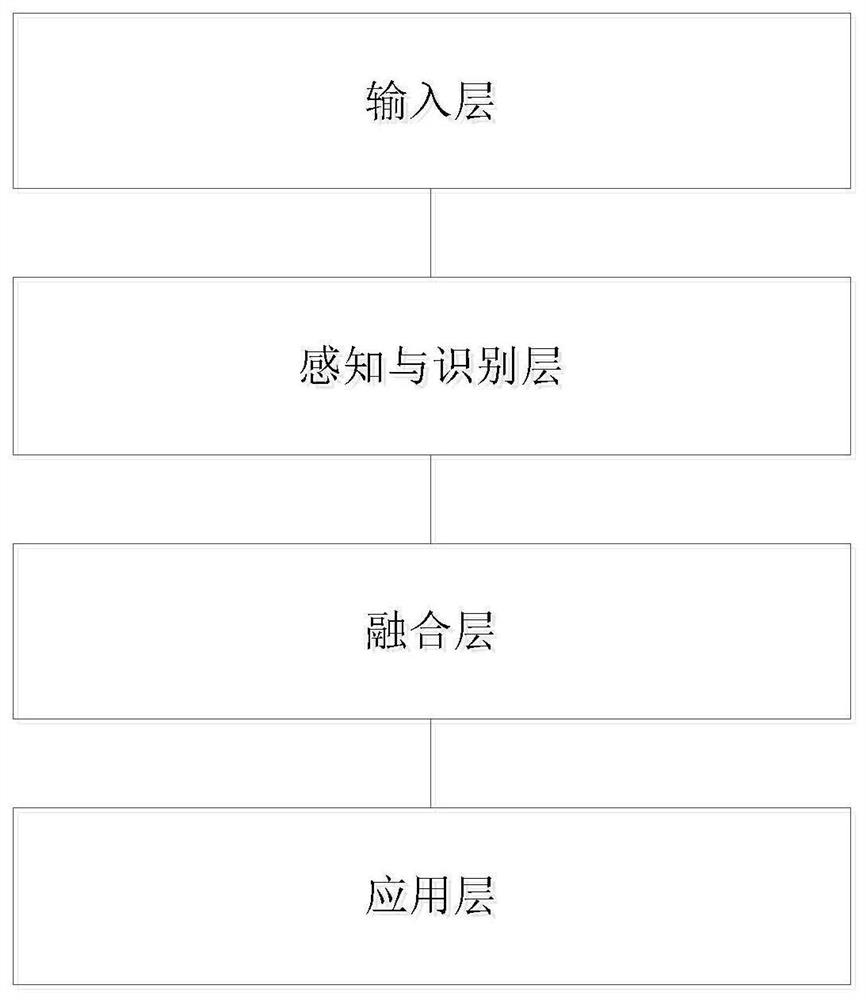

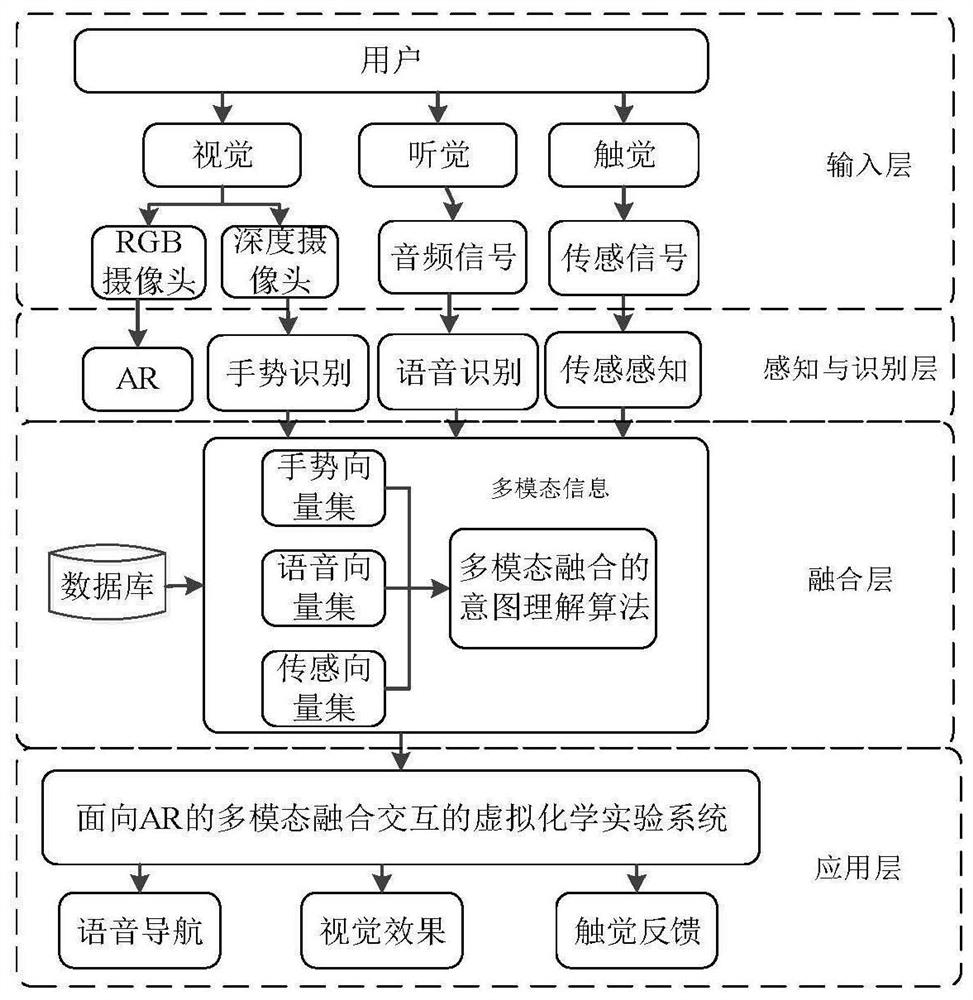

[0088] Referring to Figure 1, Figure 1 is a schematic structural diagram of a virtual experiment system based on multi-modal interaction provided by an embodiment of the present application. As Figure 1 shows, the virtual experiment system based on multi-modal interaction in this embodiment mainly includes an input layer, a perception and recognition layer, a fusion layer, and an application layer.
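The four layers named above can be pictured as stages applied in a fixed order. The following is a minimal sketch only: the excerpt does not describe how the layers are implemented or what data they exchange, so `LAYER_ORDER`, `make_stub`, and `run_pipeline` are hypothetical names, and each stub layer merely records that it ran.

```python
from typing import Any, Callable, Dict, List

# Hypothetical stage signature: each layer takes a dict and returns a dict.
Layer = Callable[[Dict[str, Any]], Dict[str, Any]]

# Layer names in the order the text lists them.
LAYER_ORDER: List[str] = [
    "input",
    "perception_and_recognition",
    "fusion",
    "application",
]


def make_stub(name: str) -> Layer:
    """Build a placeholder layer that only appends its name to a trace.

    Real layers would transform the data; that logic is not given in the
    source, so it is deliberately left out here.
    """
    def layer(data: Dict[str, Any]) -> Dict[str, Any]:
        return {**data, "trace": data.get("trace", []) + [name]}
    return layer


def run_pipeline(layers: Dict[str, Layer], raw: Dict[str, Any]) -> Dict[str, Any]:
    """Pass the data through every layer in LAYER_ORDER."""
    data = raw
    for name in LAYER_ORDER:
        data = layers[name](data)
    return data


if __name__ == "__main__":
    stubs = {name: make_stub(name) for name in LAYER_ORDER}
    print(run_pipeline(stubs, {"visual": None, "tactile": None, "auditory": None}))
```

Keeping the layers behind one shared signature is a design choice of this sketch, not something stated in the application; it simply makes the ordering explicit and lets each stub be swapped for a real implementation independently.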

[0089] The input layer collects depth information of human skeletal nodes through the visual channel, sensing signals through the tactile channel, and voice signals through the auditory channel. The depth information of the human skeletal nodes includes the coordinates of the joint points of the human hand, and the sensing signals include magnetic signals, photosensitive signals, touch signals, and vibration signals. The perception and recognition layer is used to identify the information of the visual channel and the auditory chan...

Embodiment 2

[0128] On the basis of the embodiments shown in Figures 1 to 6, refer to Figure 7. Figure 7 is a schematic flowchart of a virtual experiment method based on multi-modal interaction provided by an embodiment of the present application. As Figure 7 shows, the virtual experiment method in this embodiment mainly includes the following steps:

[0129] S1: Collect the corresponding visual information, sensing signals, and voice signals through the visual channel, tactile channel, and auditory channel, respectively. The sensing signals include magnetic signals, photosensitive signals, touch signals, and vibration signals.
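As an illustration of what one S1 sample might hold, the sketch below groups the three channels into a single record. It is a set of assumptions rather than the application's data format: the type names, the scalar `value` field, the 3-D joint tuples, and raw bytes for audio are all hypothetical. Only the three channels and the four sensing-signal kinds come from the text.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Iterable, List, Optional, Tuple


class SensingSignalKind(Enum):
    """The four tactile-channel signal kinds listed in S1."""
    MAGNETIC = "magnetic"
    PHOTOSENSITIVE = "photosensitive"
    TOUCH = "touch"
    VIBRATION = "vibration"


@dataclass
class SensingSignal:
    kind: SensingSignalKind
    value: float  # assumed scalar reading; the source gives no units


# Assumed representation: one (x, y, z) tuple per hand joint point.
JointCoords = List[Tuple[float, float, float]]


@dataclass
class MultimodalSample:
    """One collected sample across the visual, tactile, and auditory channels."""
    visual: Optional[JointCoords] = None
    tactile: List[SensingSignal] = field(default_factory=list)
    auditory: Optional[bytes] = None  # assumed raw audio buffer


def collect(
    visual: Optional[JointCoords] = None,
    tactile: Optional[Iterable[SensingSignal]] = None,
    auditory: Optional[bytes] = None,
) -> MultimodalSample:
    """Bundle whatever each channel produced; a silent channel stays empty."""
    return MultimodalSample(
        visual=visual,
        tactile=list(tactile or []),
        auditory=auditory,
    )


if __name__ == "__main__":
    sample = collect(
        visual=[(0.1, 0.2, 0.9)],
        tactile=[SensingSignal(SensingSignalKind.TOUCH, 1.0)],
    )
    print(sample)
```

Leaving each channel optional reflects that the channels are collected separately, so a sample may carry data from only some of them.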

[0130] S2: Identify the information of the visual channel, tactile channel, and auditory channel, respectively.

[0131] The information recognition method for the visual channel mainly includes the following steps:

[0132] S201: Build an AR environment.

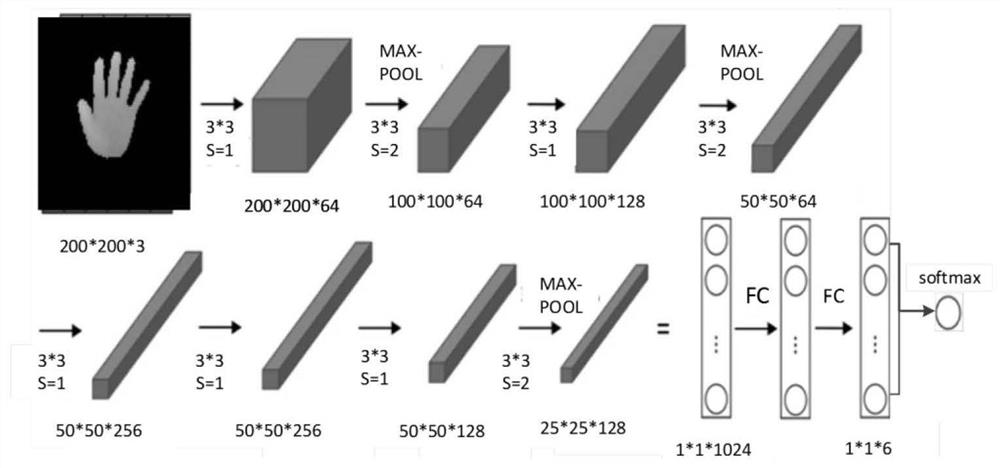

[0133] S202: Train a gesture recognition model in a convolutional neura...
