Storage system self-adaptive parameter tuning method based on deep learning

An adaptive parameter tuning technology for storage systems, applied in neural learning methods, electrical digital data processing, program startup/switching, etc., with the effect of improving performance and quality of service.

Embodiment Construction

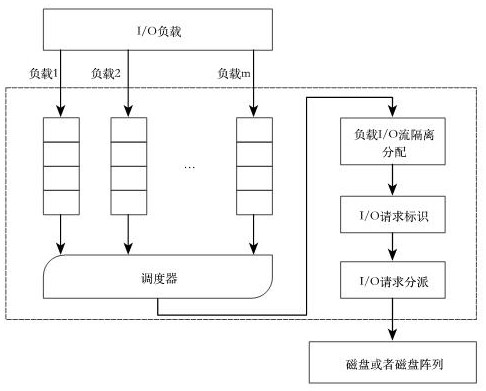

[0029] The present invention is further described below with reference to the accompanying drawing, Figure 1. Figure 1 shows the architecture of the I/O bandwidth scheduling model based on the WFQ algorithm proposed by the present invention. After the I/O load is extracted from the applications, it is classified by application and sent to the corresponding I/O request queue to wait for service. The scheduler then reorders the queued I/O requests and finally sends them to the underlying storage entities, such as disks or disk arrays. The WFQ scheduling algorithm adopted by the scheduler is divided into three parts: load I/O flow isolation and allocation, I/O request identification, and I/O request dispatching. Load I/O flow isolation and allocation uses a dynamic mechanism to distinguish normal I/O flows from abnormal I/O flows, and at the same time carries out weighted and fair dis...
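The classify, tag, and dispatch flow described above can be illustrated with a minimal weighted fair queuing (WFQ) sketch. This is not the patent's implementation: the class names `TaggedRequest` and `WFQScheduler`, the per-application weights, and the use of request size as the service cost are all hypothetical. The sketch also approximates virtual time by the finish tag of the most recently dispatched request (a self-clocked simplification), and it does not model the dynamic separation of normal and abnormal I/O flows.

```python
import heapq
from collections import defaultdict
from dataclasses import dataclass, field
from itertools import count


@dataclass(order=True)
class TaggedRequest:
    """An I/O request ordered by its virtual finish tag (hypothetical type)."""
    finish_tag: float
    seq: int                          # tie-breaker: submission order
    app: str = field(compare=False)
    size: int = field(compare=False)


class WFQScheduler:
    """Simplified WFQ: smaller finish tag is dispatched first."""

    def __init__(self, weights):
        # weights: application name -> relative bandwidth share (assumed input)
        self.weights = dict(weights)
        self.last_finish = defaultdict(float)  # last finish tag per application
        self.virtual_time = 0.0
        self._heap = []
        self._seq = count()

    def submit(self, app, size):
        """Classify the request by application and assign its finish tag."""
        start = max(self.virtual_time, self.last_finish[app])
        finish = start + size / self.weights[app]
        self.last_finish[app] = finish
        heapq.heappush(
            self._heap, TaggedRequest(finish, next(self._seq), app, size)
        )

    def dispatch(self):
        """Return the request with the smallest finish tag, or None if idle."""
        if not self._heap:
            return None
        req = heapq.heappop(self._heap)
        # Self-clocked approximation: advance virtual time to the tag served.
        self.virtual_time = req.finish_tag
        return req


def demo():
    """Two applications with a 2:1 weight ratio and equal-sized requests."""
    sched = WFQScheduler({"db": 2.0, "backup": 1.0})
    for _ in range(4):
        sched.submit("db", 4)
    for _ in range(4):
        sched.submit("backup", 4)
    return [sched.dispatch().app for _ in range(8)]
```

In `demo()`, while both applications are backlogged, the hypothetical `db` flow is served about twice as often as `backup`, which matches its weight. A heap keyed on the finish tag keeps both submission and dispatch at logarithmic cost in the number of queued requests.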
