Multi-sensor real-time fusion method for mobile robot remote takeover scene

This technology belongs to the fields of mobile robots and multi-sensor fusion and is applied to color TV parts, TV system parts, TVs, etc. It addresses problems such as redundant data, lost distance information, and sensing that cannot meet the needs of the application scenario, with the effects of speeding up transmission and removing redundant data.
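The summary states the effect (removing redundant data to speed up transmission) but not how it is achieved. As an illustration only, one common way to shrink a lidar point cloud before sending it to a remote operator is voxel-grid downsampling, in which all points that fall inside the same cube are replaced by their centroid. This is a swapped-in generic technique, not the patent's method; the function name `voxel_downsample` and the 0.1 m voxel size are hypothetical choices.

```python
"""Minimal sketch: thin a lidar point cloud with a voxel grid before transmission.

Generic voxel-grid downsampling, assumed for illustration; NOT the patented method.
"""
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]


def voxel_downsample(points: List[Point], voxel_size: float) -> List[Point]:
    """Replace all points that share a cubic voxel with their centroid."""
    if voxel_size <= 0.0:
        raise ValueError("voxel_size must be positive")
    # voxel index -> [sum_x, sum_y, sum_z, count]
    acc: Dict[Tuple[int, int, int], List[float]] = {}
    for x, y, z in points:
        key = (math.floor(x / voxel_size),
               math.floor(y / voxel_size),
               math.floor(z / voxel_size))
        s = acc.setdefault(key, [0.0, 0.0, 0.0, 0.0])
        s[0] += x
        s[1] += y
        s[2] += z
        s[3] += 1.0
    # Dicts keep insertion order, so output follows the first appearance of each voxel.
    return [(sx / n, sy / n, sz / n) for sx, sy, sz, n in acc.values()]


if __name__ == "__main__":
    cloud = [(0.01, 0.01, 0.01), (0.03, 0.03, 0.03), (1.0, 1.0, 1.0)]
    print(voxel_downsample(cloud, voxel_size=0.1))  # two points remain
```

A larger `voxel_size` removes more points (and more detail); the right trade-off depends on the available link bandwidth, which the excerpt does not specify.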

Embodiment Construction

[0039] To describe the present invention more specifically, its technical solutions are described in detail below with reference to the accompanying drawings and specific embodiments.

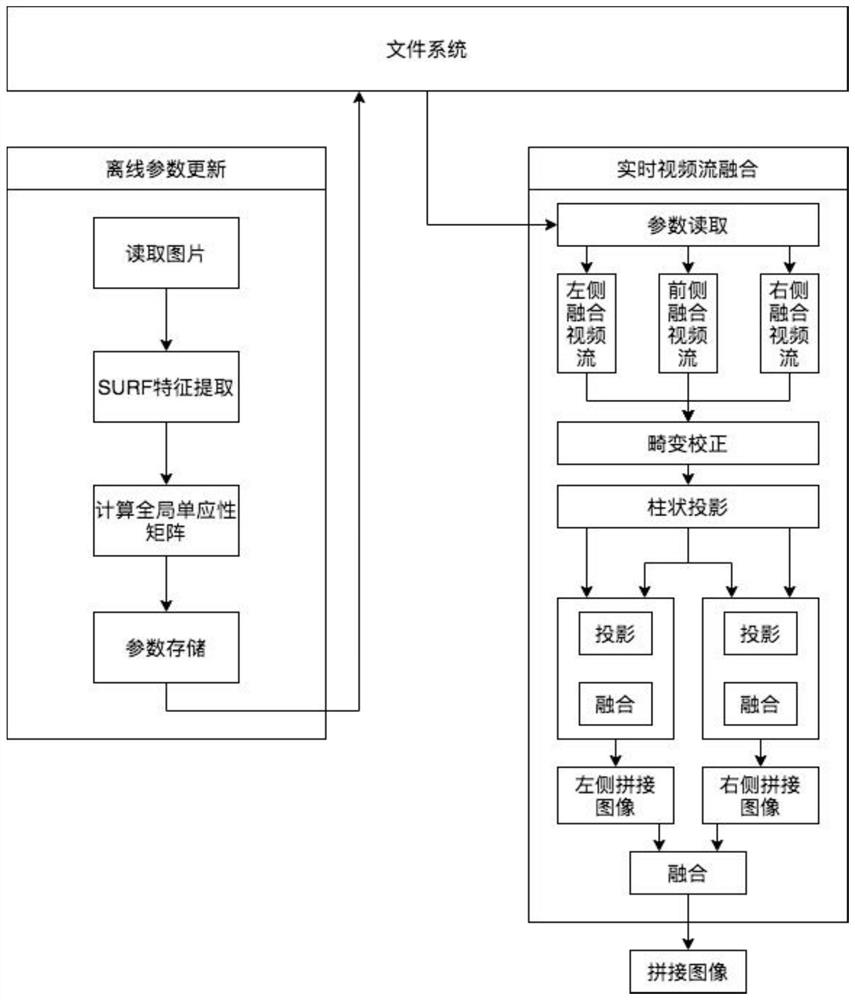

[0040] As shown in Figure 1, the present invention provides a multi-sensor real-time fusion method for the remote takeover scenario of a mobile robot, which specifically comprises the following steps:

[0041] Step 1: The intelligent mobile robot is equipped with three cameras whose positions are fixed relative to one another.

[0042] In the remote takeover scenario, a camera can capture visual information but loses important distance information, and it also performs poorly in harsh environments and bad weather. Lidar can capture accurate distance information, but its resolution is low. The present invention therefore combines a lidar with three monocular cameras to compensate for the shortcomings of any single sensor. One of the cameras faces forward, and the other two ca...
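The excerpt breaks off before describing how the lidar and camera data are combined. A standard first step in camera-lidar fusion is to project each lidar point into a camera image with the pinhole model, so that image pixels gain the distance information a camera alone lacks. The sketch below assumes zero translation between lidar and cameras, mounting rotation about the vertical axis only, and made-up intrinsics. The camera names `front`, `left`, `right`, the ±60° yaw of the side cameras, and the helpers `Camera` and `fuse` are all hypothetical, since the source is cut off before giving the other two cameras' orientation.

```python
"""Minimal sketch: attach lidar depth to pixels of three rigidly mounted cameras.

All calibration values (yaw angles, focal lengths, image size) are hypothetical
placeholders; translation between lidar and cameras is assumed to be zero.
"""
import math
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

Point = Tuple[float, float, float]   # lidar frame: x forward, y left, z up
Pixel = Tuple[float, float, float]   # (u, v, depth along the optical axis)


@dataclass(frozen=True)
class Camera:
    name: str
    yaw_deg: float   # hypothetical mounting yaw about the lidar z axis (left is positive)
    fx: float        # focal lengths in pixels (hypothetical)
    fy: float
    cx: float        # principal point in pixels (hypothetical)
    cy: float
    width: int
    height: int

    def to_optical(self, p: Point) -> Point:
        """Rotate a lidar point into this camera's optical frame (z forward, x right, y down)."""
        x, y, z = p
        yaw = math.radians(self.yaw_deg)
        # Undo the mounting yaw so the camera's viewing direction becomes +x.
        xr = math.cos(yaw) * x + math.sin(yaw) * y
        yr = -math.sin(yaw) * x + math.cos(yaw) * y
        return (-yr, -z, xr)

    def project(self, p: Point) -> Optional[Pixel]:
        """Pinhole projection; returns None if the point is behind or outside the image."""
        xc, yc, zc = self.to_optical(p)
        if zc <= 0.0:
            return None
        u = self.fx * xc / zc + self.cx
        v = self.fy * yc / zc + self.cy
        if 0.0 <= u < self.width and 0.0 <= v < self.height:
            return (u, v, zc)
        return None


def fuse(points: List[Point], cameras: List[Camera]) -> Dict[str, List[Pixel]]:
    """Collect, per camera, the projected lidar points that land inside its image."""
    hits: Dict[str, List[Pixel]] = {cam.name: [] for cam in cameras}
    for p in points:
        for cam in cameras:
            pix = cam.project(p)
            if pix is not None:
                hits[cam.name].append(pix)
    return hits


# Hypothetical rig: one forward camera plus two side cameras at +/-60 degrees.
RIG = [
    Camera("front", 0.0, 500.0, 500.0, 320.0, 240.0, 640, 480),
    Camera("left", 60.0, 500.0, 500.0, 320.0, 240.0, 640, 480),
    Camera("right", -60.0, 500.0, 500.0, 320.0, 240.0, 640, 480),
]

if __name__ == "__main__":
    print(fuse([(10.0, 0.0, 0.0), (0.0, 5.0, 0.0)], RIG))
```

Because the three cameras are fixed relative to one another, each camera's rotation (and, in a real rig, its translation) only needs to be calibrated once; with the placeholder values above, a point 10 m straight ahead lands at the centre of the `front` image, while a point 5 m to the left is picked up only by the `left` camera.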
