Indoor environment 3D semantic map construction method based on point cloud deep learning

An indoor-environment semantic map technology, applied in image analysis, image enhancement, neural architectures, etc. It can solve problems such as the absence of semantic perception ability or a complex and indirect way of acquiring semantics, poor adaptability to dynamic scenes, and non-topological map structures for navigation and obstacle avoidance.


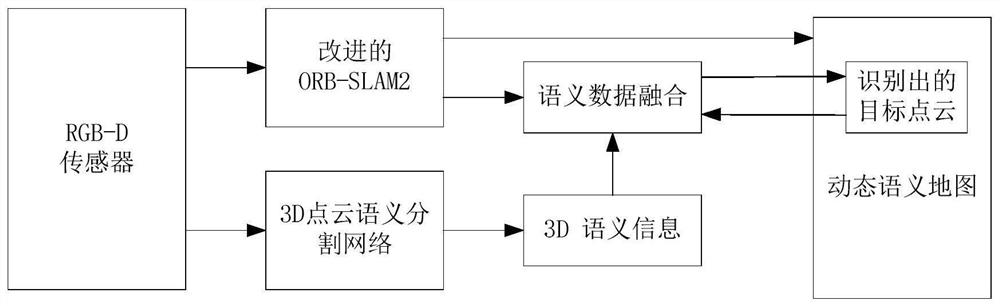

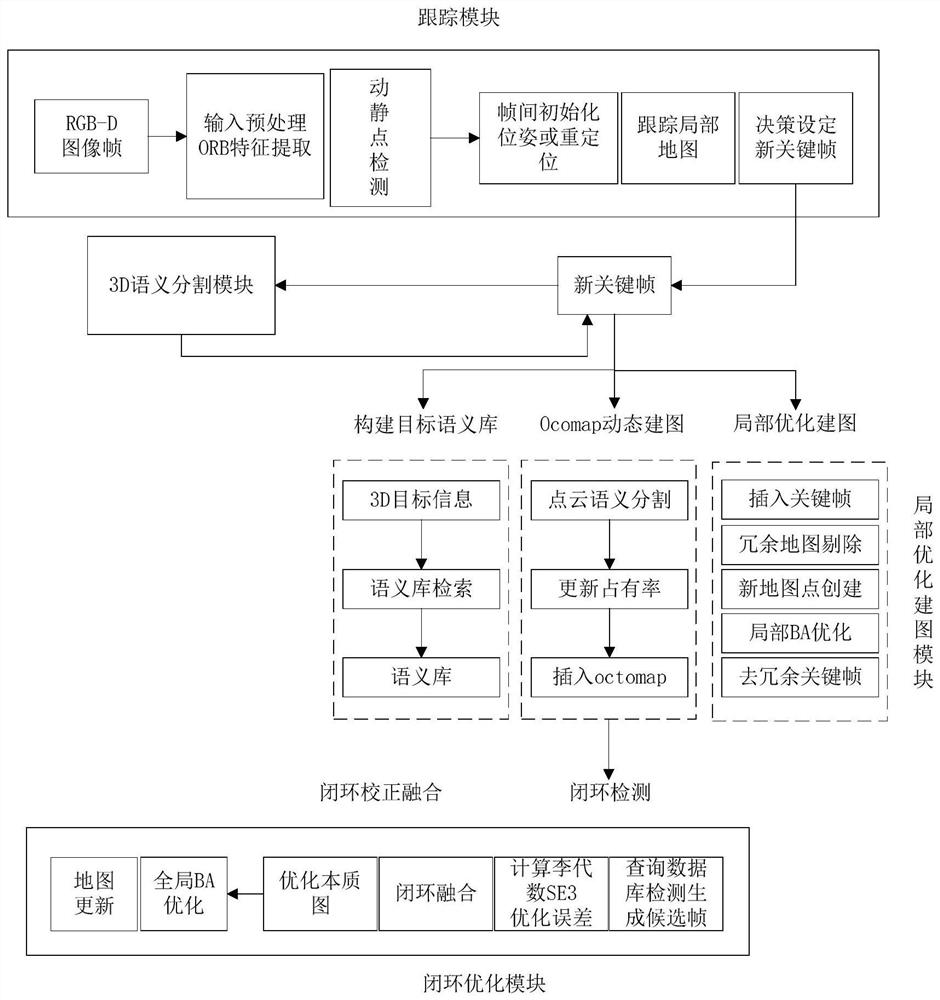


Embodiment Construction

[0055] The present invention will be described in detail below in conjunction with the embodiments and accompanying drawings, but the present invention is not limited thereto.

[0056] The hardware environment required to run the whole method is a Kinect 2.0 depth sensor, an i7-8700K CPU, and a server with a GTX 1080 Ti GPU. The software environment is the Ubuntu 16.04 Linux operating system, the ROS robot development environment, the ORB-SLAM2 open-source framework, the OpenCV open-source vision library, and deep learning components such as CUDA, cuDNN, and TensorFlow for 3D object detection. Required third-party dependencies also include the DBoW2 visual dictionary library, the Pangolin map display library, and the g2o graph optimization library.
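As an illustration only, the software environment listed above could be checked before running the system with a short script such as the following sketch. It is not part of the described method. The probe names are assumptions: `cv2` and `tensorflow` are the usual Python import names for OpenCV and TensorFlow, and `roscore` and `nvcc` are the usual command-line entry points for ROS 1 and the CUDA toolkit. The helper functions `check_environment` and `missing_components` are hypothetical.

```python
import importlib.util
import shutil

# Assumed probes for some of the components named in the text.
# These names are illustrative and do not come from the patent.
PYTHON_MODULES = {"OpenCV": "cv2", "TensorFlow": "tensorflow"}
EXECUTABLES = {"ROS": "roscore", "CUDA": "nvcc"}


def check_environment(modules=PYTHON_MODULES, executables=EXECUTABLES):
    """Return {component: bool} telling whether each probe was found."""
    status = {}
    for name, module in modules.items():
        # find_spec returns None when a top-level module is not importable.
        status[name] = importlib.util.find_spec(module) is not None
    for name, exe in executables.items():
        # shutil.which returns None when the executable is not on PATH.
        status[name] = shutil.which(exe) is not None
    return status


def missing_components(status):
    """Return the sorted names of components that were not found."""
    return sorted(name for name, ok in status.items() if not ok)


if __name__ == "__main__":
    status = check_environment()
    for name, ok in sorted(status.items()):
        print(f"{name}: {'found' if ok else 'missing'}")
```

Libraries that are linked at build time, such as DBoW2, Pangolin, and g2o, are not covered by this sketch, since they are C++ dependencies rather than Python modules or standalone executables.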

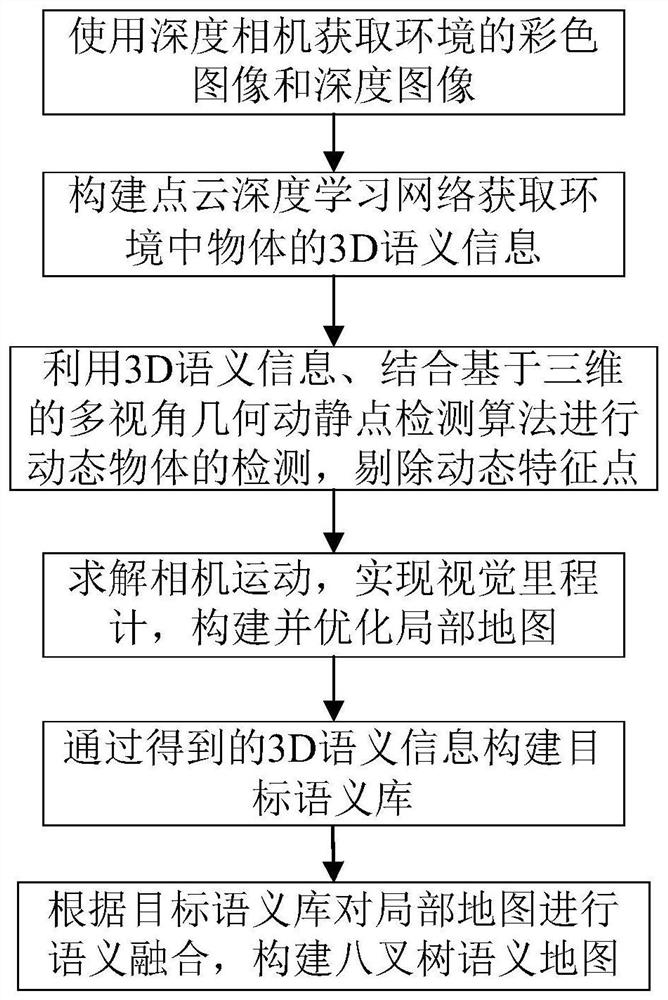

[0057] As shown in Figure 1, the method for building a 3D semantic map of an indoor environment based on point cloud deep learning is mainly implemented through the following steps:

[0058] (1) Use a depth camera ...
