Image segmentation model training method and device, image segmentation method and device, equipment and medium

An image segmentation and model training technology applied in the field of image processing. It addresses problems such as difficult training, unsatisfactory results, and approaches that are too simple, and it improves output resolution, segmentation accuracy, and the retention of detail information.

Examples

Embodiment 1

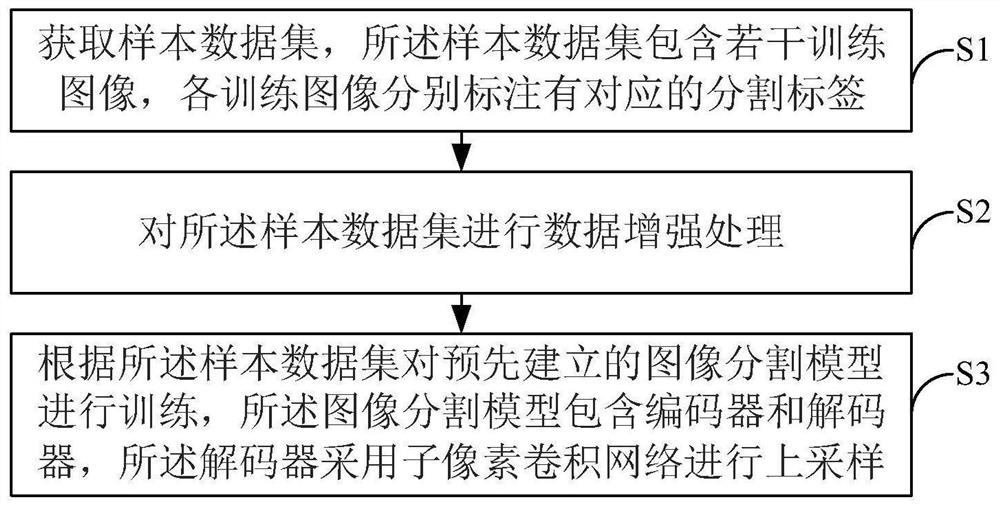

[0059] Based on the implementation environment shown in Figure 1, this embodiment provides an image segmentation model training method. As shown in Figure 2, the method includes the following steps:

[0060] S11. Acquire a sample data set that includes several training images, each labeled with a corresponding segmentation label.

[0061] For example, when the trained image segmentation model is mainly used for portrait segmentation, the sample data set is EG1800, a well-known data set in the field of portrait segmentation. Its images are manually labeled at the pixel level and come mainly from selfies taken with mobile phone cameras. The 1800 images are divided into two parts: 1600 for training and 200 for testing.
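Step S11 and the 1600/200 split can be sketched in Python. This is a minimal illustration under stated assumptions, not the method of this embodiment: the `Sample` container, the `split_dataset` helper, the file-name pattern, and the seeded random shuffle are all hypothetical, and if a data set ships with a fixed train/test partition, that partition should be used instead.

```python
import random
from dataclasses import dataclass


@dataclass
class Sample:
    """One labeled example: an image and its pixel-level segmentation mask.

    Hypothetical container; the paths stand in for real image and mask files.
    """
    image_path: str
    mask_path: str


def split_dataset(samples, num_train, seed=0):
    """Shuffle samples reproducibly and split them into (train, test) lists."""
    if not 0 <= num_train <= len(samples):
        raise ValueError("num_train must be between 0 and len(samples)")
    shuffled = list(samples)  # copy so the caller's list keeps its order
    random.Random(seed).shuffle(shuffled)  # fixed seed -> same split every run
    return shuffled[:num_train], shuffled[num_train:]


# 1800 placeholder samples (hypothetical file names), split 1600 / 200.
samples = [Sample(f"images/{i:04d}.png", f"masks/{i:04d}.png") for i in range(1800)]
train_set, test_set = split_dataset(samples, num_train=1600)
print(len(train_set), len(test_set))  # 1600 200
```

Fixing the seed keeps the test images identical across training runs, so results from different models remain comparable.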

[0062] The sample data set in this embodiment is not limited to EG1800; other existing image data sets can also be used as needed, or the sample data set can be obtained by pixel-level labeli...

Embodiment 2

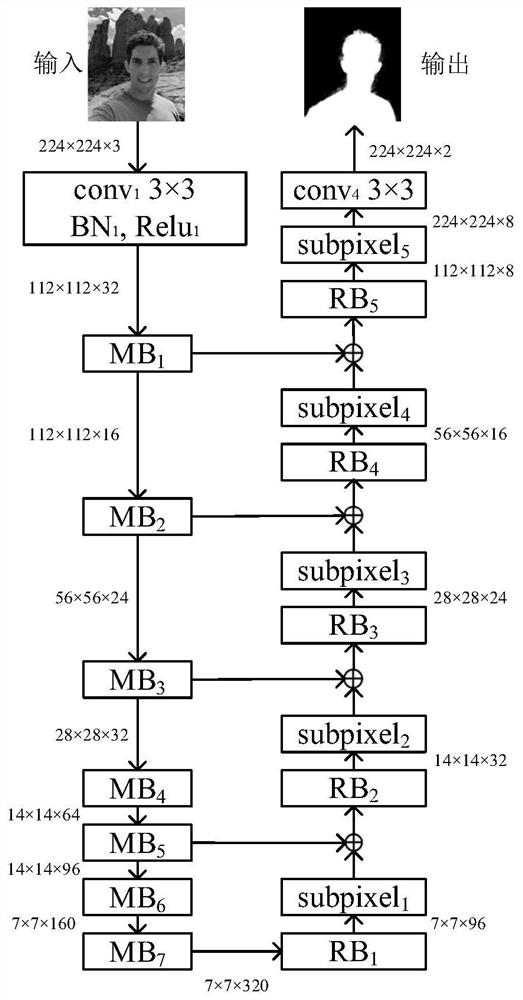

[0077] With the widespread use of mobile terminals, more and more applications require image segmentation on mobile terminals. Mainstream segmentation networks such as U-Net (a classic network in medical image segmentation that uses an encoder-decoder structure with skip connections), the DeepLab series (a series of models proposed for semantic segmentation, mainly using deep convolutional networks, probabilistic graphical models, and atrous (hole) convolution), and Mask-RCNN (a network model that extracts masks from candidate regions proposed by a convolutional network) focus on accuracy rather than efficiency, so they are not suitable for fast segmentation on mobile terminals. The emergence of MobileNets (a lightweight network suited to mobile terminals that mainly uses depthwise separable convolution to improve efficiency) provided an efficient neural network model for mobile vision applications, which is very important for machine learning. Successful applications on mobile ...
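The efficiency gain of depthwise separable convolution can be checked by counting weights. The sketch compares a standard k × k convolution with a k × k depthwise convolution (one filter per input channel) followed by a 1 × 1 pointwise convolution. The layer shape (3 × 3 kernel, 32 input channels, 64 output channels) is a hypothetical example, and bias terms are ignored.

```python
def standard_conv_params(k, c_in, c_out):
    """Weights in a k x k standard convolution (bias terms ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k, c_in, c_out):
    """Weights in a depthwise separable convolution (bias terms ignored).

    Depthwise step: one k x k filter per input channel.
    Pointwise step: a 1 x 1 convolution mixing c_in channels into c_out.
    """
    depthwise = k * k * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise


k, c_in, c_out = 3, 32, 64  # hypothetical layer shape
std = standard_conv_params(k, c_in, c_out)
sep = depthwise_separable_params(k, c_in, c_out)
print(std, sep, round(sep / std, 4))  # 18432 2336 0.1267
```

The ratio works out to 1/c_out + 1/k², so with 3 × 3 kernels the separable layer approaches 9 times fewer weights as c_out grows (about 7.9 times for this shape); the same ratio applies to multiply-add operations per output position.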

Embodiment 3

[0089] This embodiment provides an image segmentation method. As shown in Figure 10, the method includes the following steps:

[0090] S21. Acquire an image to be segmented.

[0091] S22. Process the image to be segmented with an image segmentation model trained by the method described in Embodiment 1 or Embodiment 2 to obtain a target segmentation result for the image.
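Step S22 can be sketched for the portrait case, assuming (this is not stated in the source) that the trained model outputs a per-pixel foreground probability map with the same height and width as the input. The `segment` function, the 0.5 threshold, and the brightness-based `dummy_model` are hypothetical stand-ins used only to make the example runnable.

```python
import numpy as np


def segment(image, model, threshold=0.5):
    """Run a (hypothetical) segmentation model and binarize its output.

    `model` is assumed to be any callable that maps an H x W x 3 image to an
    H x W array of per-pixel foreground probabilities in [0, 1].
    """
    probs = np.asarray(model(image), dtype=np.float32)
    if probs.shape != image.shape[:2]:
        raise ValueError("model output must match the image height and width")
    return (probs >= threshold).astype(np.uint8)  # 1 = foreground, 0 = background


def dummy_model(image):
    """Hypothetical stand-in model: brighter pixels get higher foreground scores."""
    return image.mean(axis=2) / 255.0


image = np.zeros((4, 4, 3), dtype=np.uint8)
image[1:3, 1:3] = 255  # bright 2 x 2 square in the middle
mask = segment(image, dummy_model)
print(int(mask.sum()))  # 4
```

In practice the image would also be resized and normalized to match the model's training input, and the mask resized back to the original resolution; those steps depend on the model and are omitted here.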

[0092] In this embodiment, performing image segmentation with the image segmentation model trained in Embodiment 1 or Embodiment 2 yields a segmentation result with finer boundary information.

[0093] It should be noted that, for the sake of simple description, the foregoing embodiments are expressed as a series of action combinations, but those skilled in the art should know that the present invention is not limited by the described action sequence, because, according to the present invention, certain steps may be performed...
