Pixel-level target positioning method based on laser and monocular vision fusion

A target positioning technology based on monocular vision, applied in the field of measuring devices and instruments that use optical means. It addresses the computing resource consumption of existing approaches, the trade-off among object positioning accuracy, effective positioning distance and cost, the limited computing power of terminal computing equipment, and the resulting difficulty of positioning objects. The method improves autonomous operation ability, ensures real-time positioning, and achieves high positioning accuracy.


Embodiment Construction

[0030] In order to make the purpose, technical solution and advantages of the present invention clearer, the present invention will be further described in detail below in conjunction with the accompanying drawings.

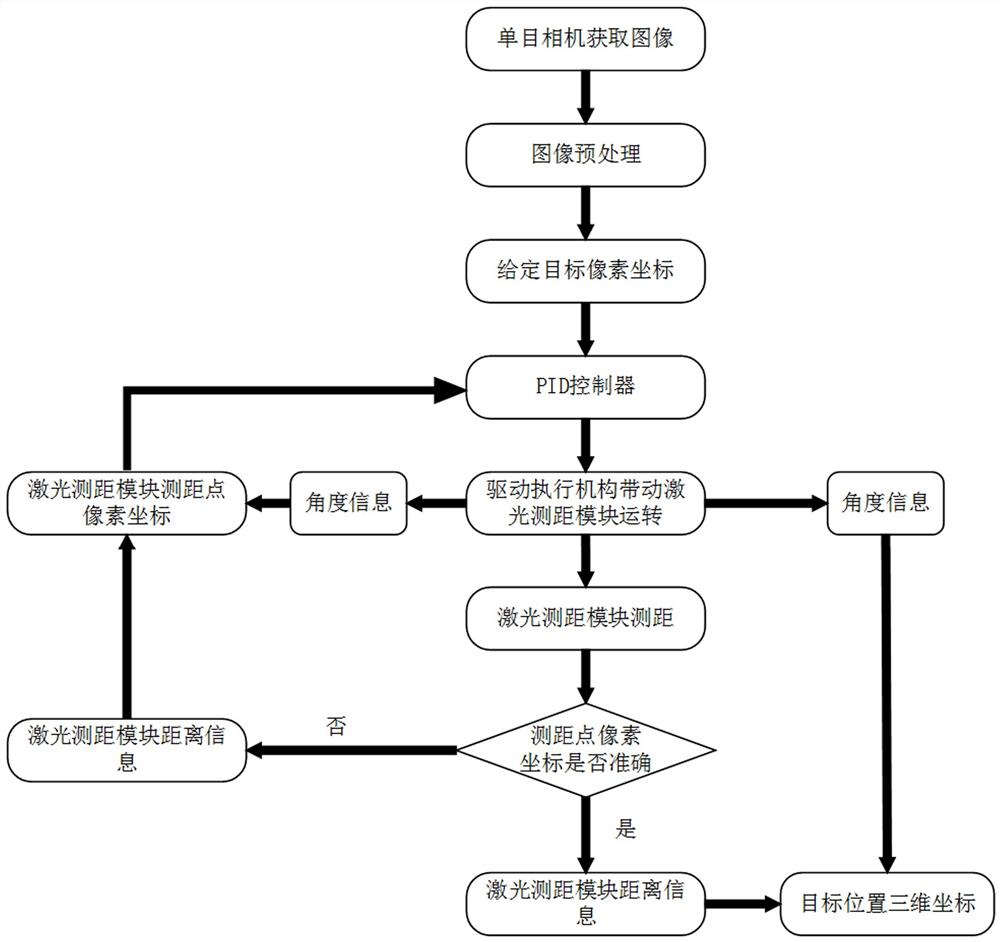

[0031] As shown in Figures 1-2, the pixel-level target positioning method based on laser and monocular vision fusion of the present invention includes the following steps:

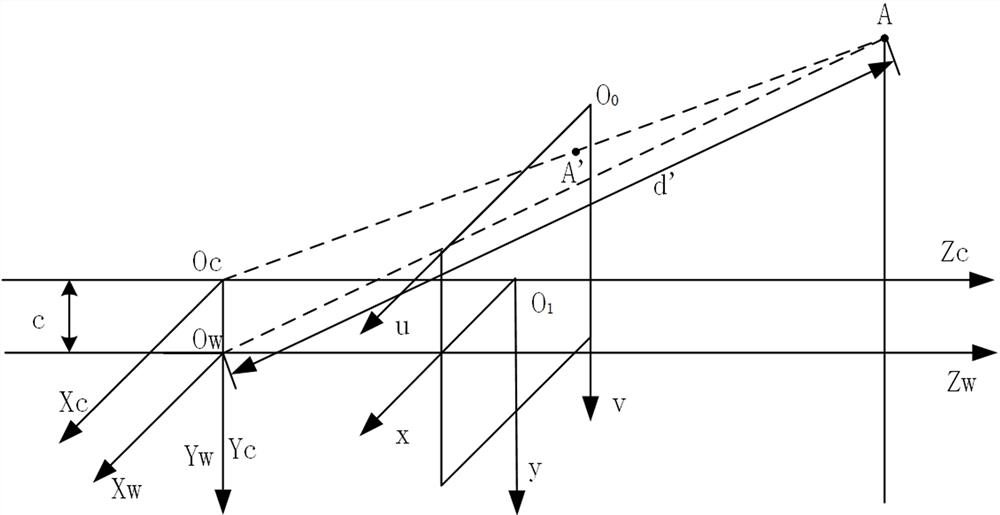

[0032] S1. The camera is installed so that its optical axis is parallel to the ground. The laser ranging module is installed so that the line connecting the optical center of the laser ranging module and the optical center of the camera is perpendicular to the ground, with the vertical distance between the laser ranging module and the monocular camera kept as small as possible. Here, $O_W X_W Y_W Z_W$, the coordinate system of the laser ranging module, is the world coordinate system of this system, and $O_C X_C Y_C Z_C$ is the ca...
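The mounting geometry in S1 can be illustrated with a minimal sketch. It is not the patented procedure, whose remaining steps are not shown here. It assumes an ideal pinhole camera, a laser beam parallel to the camera's optical axis, and laser and camera frames whose axes are aligned and differ only by a vertical offset. The names `Intrinsics`, `laser_spot_pixel`, `offset_y` and all numeric values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Intrinsics:
    """Hypothetical pinhole intrinsics, in pixels."""
    fx: float
    fy: float
    cx: float
    cy: float


def laser_spot_pixel(distance: float, offset_y: float, k: Intrinsics) -> tuple[float, float]:
    """Project the laser spot into the image under the stated assumptions.

    distance: range measured along the laser beam (metres), assumed to lie
              on the Z axis of the laser (world) frame.
    offset_y: position of the camera optical center along the shared Y axis,
              relative to the laser optical center (metres).
    """
    if distance <= 0:
        raise ValueError("distance must be positive")

    # Laser spot in the laser (world) frame: (0, 0, distance).
    # Frames are assumed axis-aligned, so the change of frame is a pure
    # translation along Y.
    x_c, y_c, z_c = 0.0, -offset_y, distance

    # Standard pinhole projection.
    u = k.cx + k.fx * x_c / z_c
    v = k.cy + k.fy * y_c / z_c
    return u, v


if __name__ == "__main__":
    k = Intrinsics(fx=800.0, fy=800.0, cx=320.0, cy=240.0)
    for d in (1.0, 4.0, 20.0):
        print(d, laser_spot_pixel(d, offset_y=0.05, k=k))
```

Under these assumptions the spot's vertical displacement from the principal point is `fy * offset_y / distance`, so it shrinks as the offset shrinks. This is one plausible reading of why S1 asks for the spacing between the two devices to be as small as possible.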
