Signal processing method based on deep neural network

A signal processing technique based on deep neural networks, applied to video compression, which combines optical flow information and depth information for frame prediction.

Embodiment Construction

[0032] Specific embodiments of the present invention are further described below with reference to the drawings and examples. The following examples serve only to illustrate the technical solution of the present invention more clearly and do not limit its scope of protection.

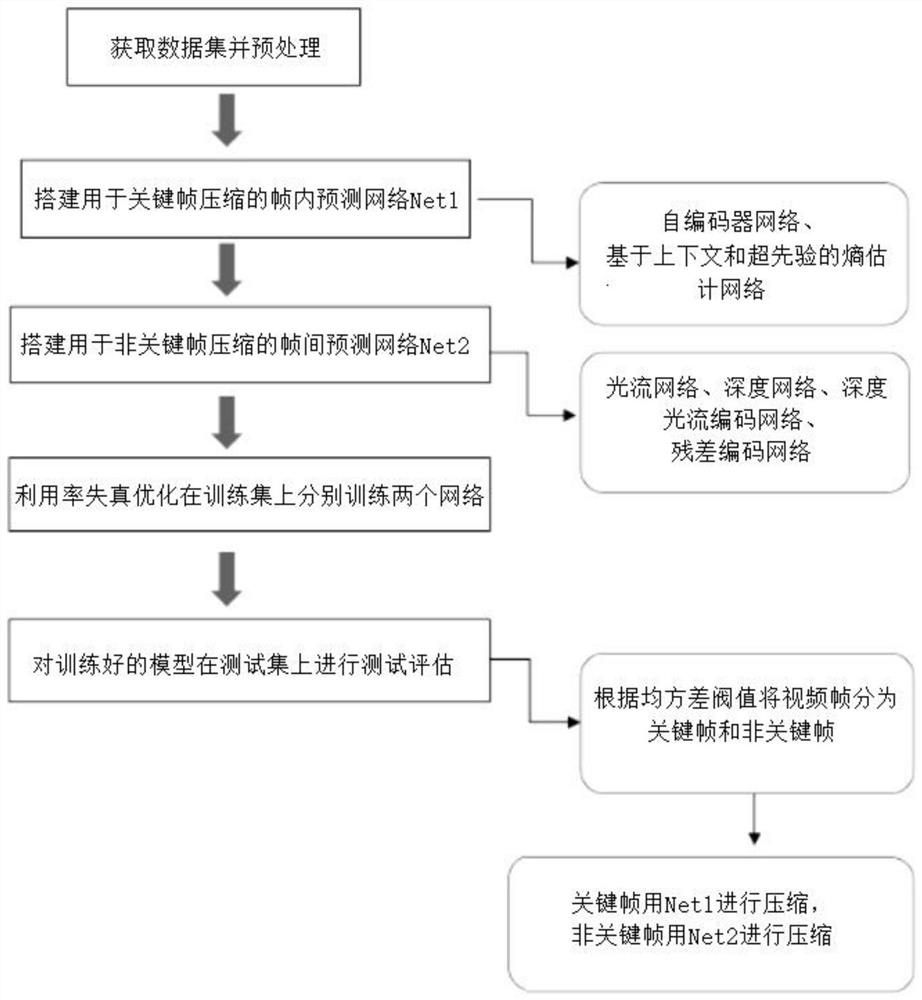

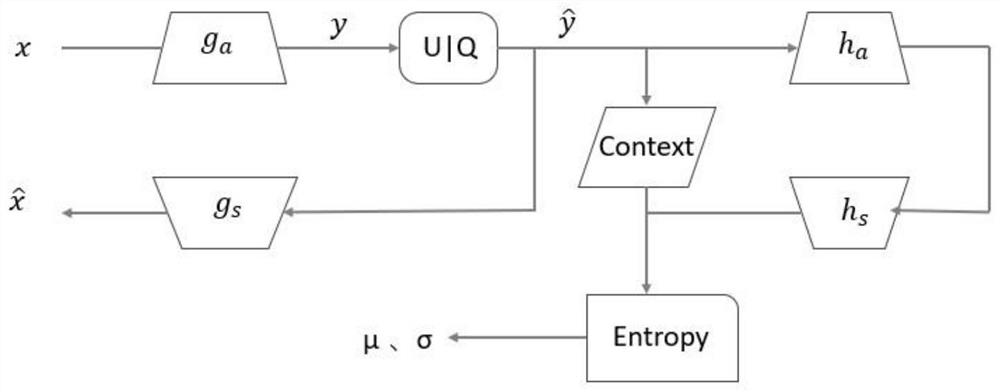

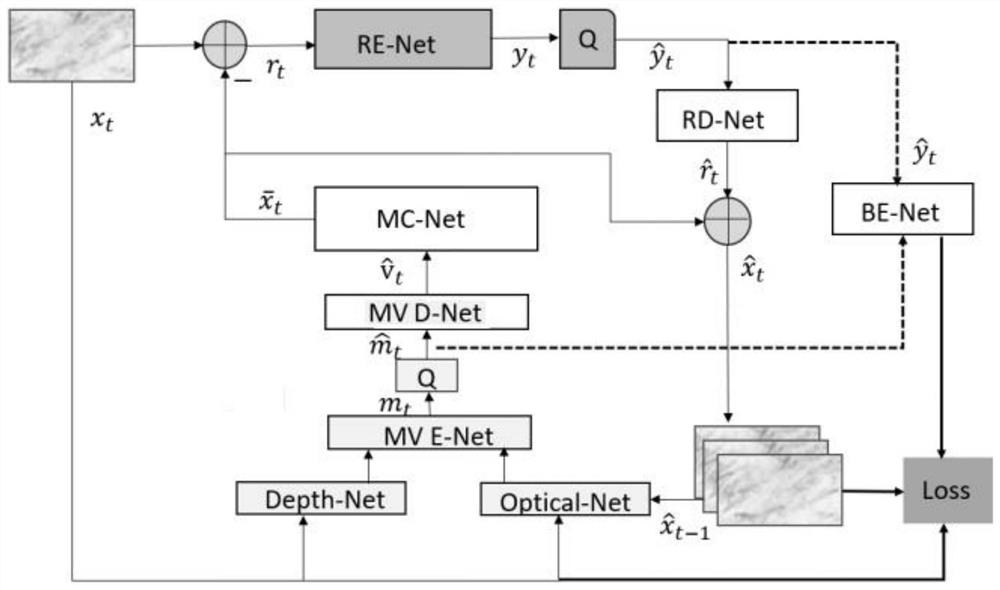

[0033] As shown in Figures 1 to 3, the technical solution of the present invention is implemented as follows:

[0034] 1. Set up the development environment: Python 3.6, PyTorch 1.4, CUDA 9.0, and cuDNN 7.0.
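The environment in step 1 can be checked before training starts. The following is a minimal sketch, not part of the patent text: the helper names `parse_version`, `collect_versions`, and `mismatches` are hypothetical, and the cuDNN decoding assumes the integer encoding used by cuDNN releases before 9.x. If PyTorch is not installed, only the Python version is reported.

```python
import re
import sys

# Versions named in step 1, treated here as expected (major, minor) pairs.
EXPECTED = {"python": (3, 6), "torch": (1, 4), "cuda": (9, 0), "cudnn": (7, 0)}


def parse_version(text):
    """Return (major, minor) from a string such as '1.4.0' or '9.0'."""
    match = re.match(r"(\d+)\.(\d+)", text)
    if match is None:
        raise ValueError("unrecognized version string: %r" % text)
    return int(match.group(1)), int(match.group(2))


def collect_versions():
    """Gather the versions that can be detected in the current interpreter."""
    found = {"python": tuple(sys.version_info[:2])}
    try:
        import torch
    except ImportError:
        return found  # PyTorch not installed; only Python is reported
    found["torch"] = parse_version(torch.__version__)
    if torch.version.cuda is not None:  # None on CPU-only builds
        found["cuda"] = parse_version(torch.version.cuda)
    cudnn = torch.backends.cudnn.version()  # e.g. 7605 for cuDNN 7.6.5, or None
    if cudnn is not None:
        # Assumption: pre-9.x encoding, major*1000 + minor*100 + patch.
        found["cudnn"] = (cudnn // 1000, (cudnn % 1000) // 100)
    return found


def mismatches(found, expected=EXPECTED):
    """Return {name: (found, expected)} for each differing or missing component."""
    return {
        name: (found.get(name), want)
        for name, want in expected.items()
        if found.get(name) != want
    }


if __name__ == "__main__":
    for name, (have, want) in sorted(mismatches(collect_versions()).items()):
        print("%s: found %s, expected %s" % (name, have, want))
```

Running the script prints one line per component that differs from the versions listed in step 1 and prints nothing when everything matches.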

[0035] 2. Download and preprocess the training data set. The training set is Vimeo-90K, which is about 80 GB in size and consists of 89,800 video clips downloaded from vimeo.com, covering a wide range of scenes and actions. The data set is mainly used for four video processing tasks: temporal frame interpolation, video denoising, video deblocking, and video super-resolution.
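The preprocessing in step 2 is not detailed in the text, so the following is only a sketch of one plausible first stage: indexing the clips on disk. It assumes the publicly distributed septuplet layout of Vimeo-90K (a `sequences/<folder>/<clip>/im1.png` to `im7.png` tree plus a `sep_trainlist.txt` file with one clip id per line). The patent does not state which variant of the data set is used, and the function names are hypothetical.

```python
import os


def read_clip_list(list_file):
    """Read one clip id per line (e.g. '00001/0001'), skipping blank lines."""
    with open(list_file) as handle:
        return [line.strip() for line in handle if line.strip()]


def clip_frame_paths(root, clip_id, frames_per_clip=7):
    """Build the ordered frame paths im1.png..imN.png for one clip."""
    clip_dir = os.path.join(root, "sequences", *clip_id.split("/"))
    return [
        os.path.join(clip_dir, "im%d.png" % index)
        for index in range(1, frames_per_clip + 1)
    ]


def index_dataset(root, list_name="sep_trainlist.txt", frames_per_clip=7):
    """Return one list of frame paths per clip, keeping only complete clips."""
    clips = []
    for clip_id in read_clip_list(os.path.join(root, list_name)):
        frames = clip_frame_paths(root, clip_id, frames_per_clip)
        if all(os.path.isfile(path) for path in frames):
            clips.append(frames)  # drop clips with missing frames
    return clips
```

Calling `index_dataset` on the extracted data set directory would return a list of 7-frame path lists. Consecutive frames from each list can then be paired as reference and target frames for a frame prediction model.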

[0036] 3. Establish a video compression projec...
