Image description text generation method based on generative adversarial network

A technique for generating image description text, applied in fields such as biological neural network models, neural learning methods, and instruments. It addresses problems such as inaccurate word choice, low evaluation scores, and insignificant improvement.

Embodiment

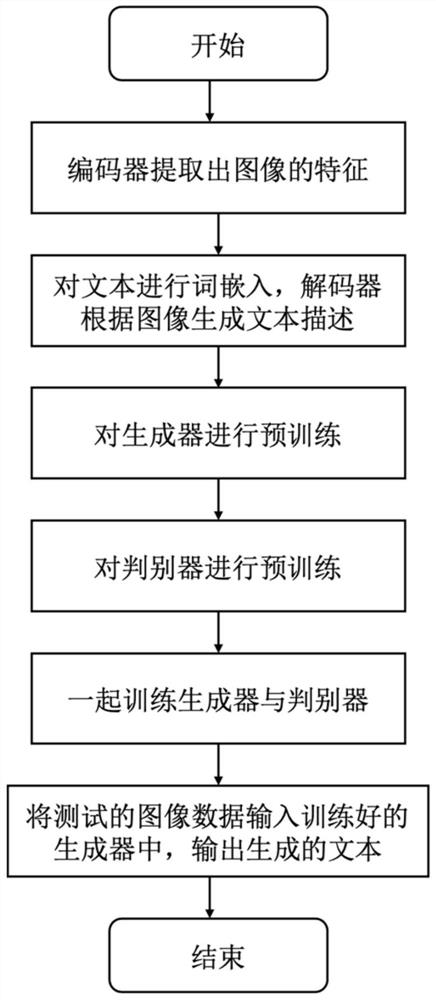

[0045] This method is implemented mainly in PyTorch. As shown in Figure 1, the present invention provides a method for generating image description text based on a generative adversarial network, comprising the following steps:

[0046] 1) Use an object detection model as the encoder to extract image features. The encoder is the object detection model Faster R-CNN. Passing the image data through the Faster R-CNN model yields a set of region features, a set of bounding boxes, and a Softmax probability distribution over categories for each region.
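The three encoder outputs named in step 1) can be pictured as one record per detected region. The following is a minimal sketch in plain Python, not the patent's PyTorch implementation: the names `RegionOutput`, `softmax`, and `package_regions` are hypothetical, and the raw per-region class scores (logits) are assumed to come from a detector that is not shown here.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class RegionOutput:
    """Hypothetical container for what the encoder returns per region."""
    feature: List[float]                     # region feature vector
    box: Tuple[float, float, float, float]   # bounding box (x1, y1, x2, y2)
    class_probs: List[float]                 # Softmax distribution over categories


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable Softmax: subtract the max before exponentiating."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def package_regions(features, boxes, logits) -> List[RegionOutput]:
    """Bundle parallel lists of features, boxes, and class logits per region."""
    if not (len(features) == len(boxes) == len(logits)):
        raise ValueError("features, boxes, and logits must have equal length")
    return [
        RegionOutput(list(f), tuple(b), softmax(l))
        for f, b, l in zip(features, boxes, logits)
    ]
```

Each `class_probs` list sums to 1, so downstream steps can read both the most likely category and the detector's confidence in it from the same record.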

[0047] The Faster R-CNN model is built on ResNet-101, which is pre-trained for classification on the ImageNet dataset. Faster R-CNN is trained on the Visual Genome dataset and, when classifying objects, uses 1600 category labels plus 1 background label, for a total of 1601 categories. For the non-maximum suppression algorithm applied to candidate regions, the region overlap ratio (Intersect...
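Non-maximum suppression over candidate regions depends on the overlap ratio between pairs of boxes. The following is a minimal sketch of greedy non-maximum suppression in plain Python, assuming boxes in `(x1, y1, x2, y2)` form. The function names `iou` and `nms` are hypothetical. The overlap threshold used by the patent is cut off in the text above, so `iou_threshold` is left as a required argument rather than given a default.

```python
from typing import List, Sequence

Box = Sequence[float]  # assumed layout: (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Overlap ratio: intersection area divided by union area."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: Sequence[Box], scores: Sequence[float],
        iou_threshold: float) -> List[int]:
    """Greedy NMS: visit boxes by descending score and keep a box only if
    its overlap with every already-kept box is at most the threshold."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep  # indices into `boxes`, highest score first
```

For example, with an illustrative threshold of 0.5 (not the patent's value), `nms([(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)], [0.9, 0.8, 0.7], 0.5)` drops the second box, which overlaps the first by about 0.68, and keeps the other two.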
