CNN-BiGRU neural network fusion-based sign language recognition method

A sign language recognition method based on CNN-BiGRU neural network fusion, in the technical field of neural networks and recognition methods. It addresses problems such as difficult training, the neglect of future sequence information, and the inability to extract features, thereby improving recognition accuracy, reducing network parameters, and lowering equipment cost.
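The abstract credits the method with reducing network parameters but does not say what it is compared against. One plausible reading is a comparison with an LSTM of the same size, since a GRU has three gated transforms where an LSTM has four. The sketch below counts weights under the single-bias-per-gate convention; some frameworks (for example PyTorch) keep two bias vectors per gate, which changes the totals slightly but not the 3:4 ratio. The LSTM baseline and the sizes d = 29 and h = 64 are illustrative assumptions, not values from the patent.

```python
# Assumption: the "fewer parameters" claim is read as GRU vs. LSTM.
# d (input size) and h (hidden size) below are illustrative only.


def gru_params(input_dim: int, hidden: int) -> int:
    """Weights of a single-direction GRU: 3 gates (update, reset, candidate),
    each with an input matrix, a recurrent matrix and one bias vector."""
    return 3 * (input_dim * hidden + hidden * hidden + hidden)


def lstm_params(input_dim: int, hidden: int) -> int:
    """Same count for an LSTM, which has 4 gates (input, forget, cell, output)."""
    return 4 * (input_dim * hidden + hidden * hidden + hidden)


def bidirectional(params_one_direction: int) -> int:
    """A bidirectional layer runs two independent recurrent cells."""
    return 2 * params_one_direction


if __name__ == "__main__":
    d, h = 29, 64
    bigru = bidirectional(gru_params(d, h))
    bilstm = bidirectional(lstm_params(d, h))
    print(bigru, bilstm, bigru / bilstm)  # prints: 36096 48128 0.75
```

Whatever the sizes, the bidirectional GRU needs three quarters of the recurrent weights of an equally sized bidirectional LSTM.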

Embodiment Construction

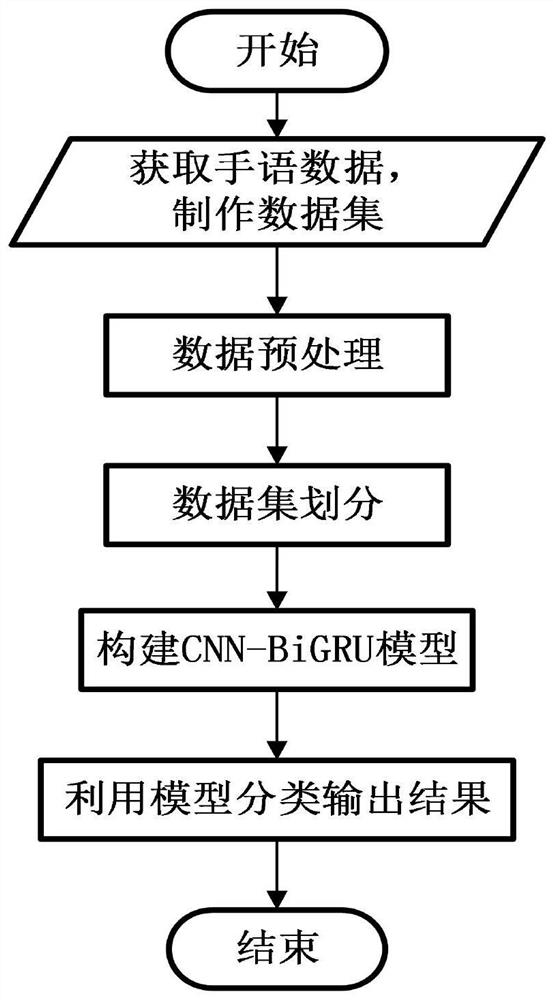

[0046] In this embodiment, the sign language recognition method based on the CNN-BiGRU neural network fusion algorithm, as shown in Figure 1, includes the following steps:
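Figure 1 and the later steps are not reproduced here, so the following is only a minimal NumPy sketch of what a CNN-BiGRU classifier generally looks like, not the patent's disclosed network. It assumes a 1-D convolution over time (with ReLU), followed by a bidirectional GRU whose final forward and backward states are concatenated, followed by a softmax layer. The kernel size, channel counts, hidden size, number of classes, and all function names are hypothetical. The backward GRU reads the sequence from last frame to first, which is how a bidirectional model takes "future" frames into account.

```python
import numpy as np

rng = np.random.default_rng(0)


def conv1d_relu(x, w, b):
    """Valid 1-D convolution over time. x: (T, C_in), w: (K, C_in, C_out)."""
    k = w.shape[0]
    t_out = x.shape[0] - k + 1
    out = np.empty((t_out, w.shape[2]))
    for t in range(t_out):
        # Contract the (K, C_in) window with the (K, C_in, C_out) kernel.
        out[t] = np.tensordot(x[t:t + k], w, axes=([0, 1], [0, 1])) + b
    return np.maximum(out, 0.0)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def gru_last_state(x, p):
    """Run a GRU over x (T, D) and return the final hidden state (H,)."""
    h = np.zeros(p["Uz"].shape[0])
    for x_t in x:
        z = sigmoid(p["Wz"] @ x_t + p["Uz"] @ h + p["bz"])  # update gate
        r = sigmoid(p["Wr"] @ x_t + p["Ur"] @ h + p["br"])  # reset gate
        h_cand = np.tanh(p["Wh"] @ x_t + p["Uh"] @ (r * h) + p["bh"])
        h = (1.0 - z) * h + z * h_cand
    return h


def init_gru(d, h):
    p = {}
    for g in "zrh":
        p["W" + g] = rng.normal(0.0, 0.1, (h, d))
        p["U" + g] = rng.normal(0.0, 0.1, (h, h))
        p["b" + g] = np.zeros(h)
    return p


def init_params(d_in, c_out, k, hidden, n_classes):
    """Hypothetical layer sizes; the patent's values are not given here."""
    return {
        "conv_w": rng.normal(0.0, 0.1, (k, d_in, c_out)),
        "conv_b": np.zeros(c_out),
        "gru_fwd": init_gru(c_out, hidden),
        "gru_bwd": init_gru(c_out, hidden),
        "out_w": rng.normal(0.0, 0.1, (n_classes, 2 * hidden)),
        "out_b": np.zeros(n_classes),
    }


def cnn_bigru_forward(x, params):
    """x: (T, D) sequence of per-frame features -> class probabilities."""
    feats = conv1d_relu(x, params["conv_w"], params["conv_b"])
    h_fwd = gru_last_state(feats, params["gru_fwd"])        # reads past -> future
    h_bwd = gru_last_state(feats[::-1], params["gru_bwd"])  # reads future -> past
    h = np.concatenate([h_fwd, h_bwd])
    logits = params["out_w"] @ h + params["out_b"]
    e = np.exp(logits - logits.max())  # numerically stable softmax
    return e / e.sum()


if __name__ == "__main__":
    T, D = 40, 29  # illustrative: 40 frames of 29 features each
    params = init_params(D, c_out=32, k=3, hidden=64, n_classes=10)
    probs = cnn_bigru_forward(rng.normal(size=(T, D)), params)
    print(probs.shape, round(float(probs.sum()), 6))  # prints: (10,) 1.0
```

Only the forward pass is shown; training (loss, gradients, optimizer) would normally be delegated to a deep learning framework.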

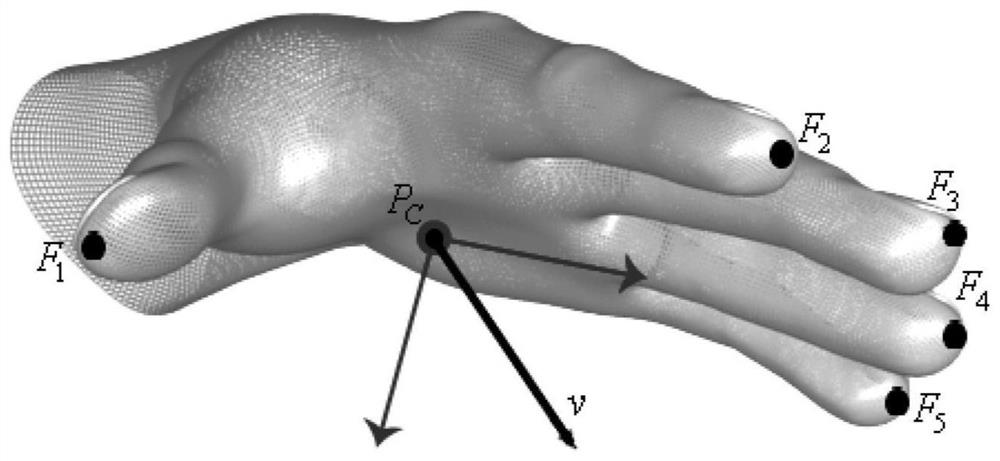

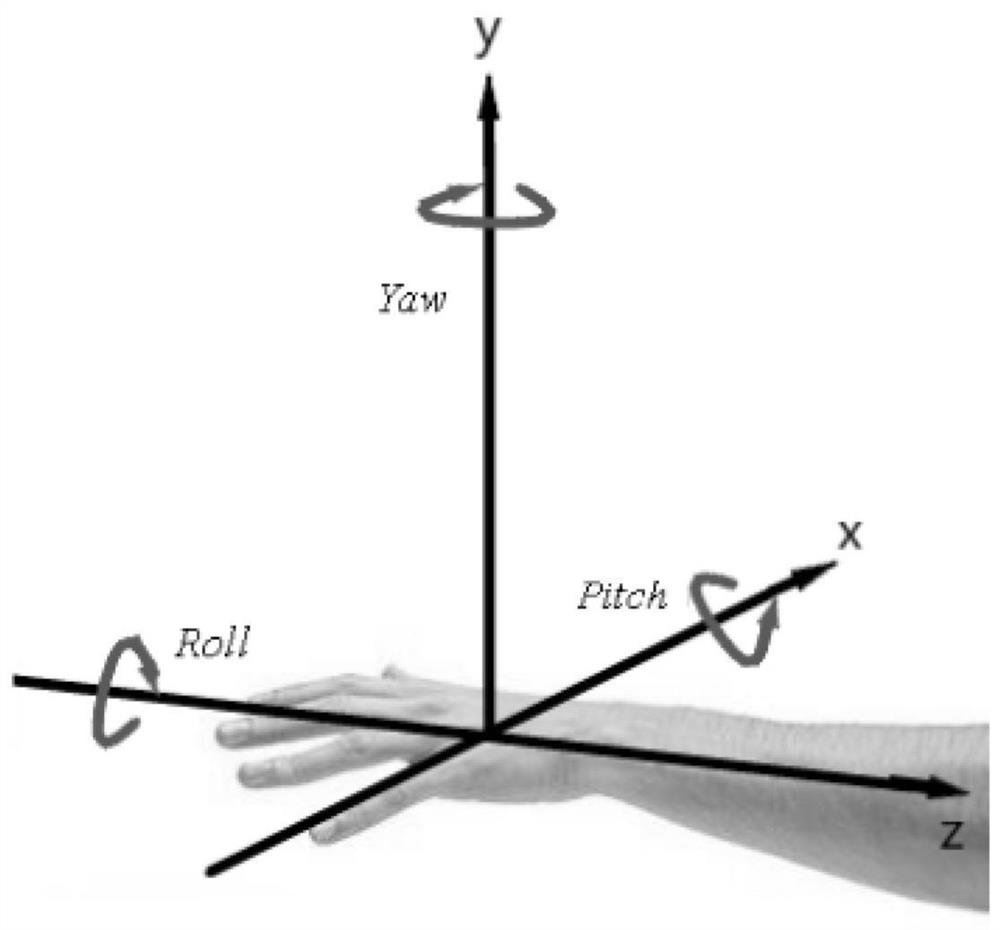

[0047] Step 1: Use the Leap Motion depth camera to obtain the thumb position coordinates $F_1$, index finger position coordinates $F_2$, middle finger position coordinates $F_3$, ring finger position coordinates $F_4$, little finger position coordinates $F_5$, palm center position $P_C$, stabilized palm position $P_S$, palm velocity $v$, palm pitch angle Pitch, palm yaw angle Yaw, palm roll angle Roll, hand sphere radius $r$, and palm width $P_W$. These quantities form a variety of sign language data, and each sign language sample has a corresponding category label. The sign language data set is composed of these sign language samples and their category labels.
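To make Step 1 concrete, the sketch below flattens one frame of the listed quantities into a fixed-length feature vector and pairs each recorded gesture (a sequence of frames) with its category label. It assumes every position and the palm velocity $v$ are 3-D vectors, which gives 5×3 + 3×3 + 5 = 29 values per frame. The `HandFrame` class and `build_dataset` function are hypothetical and do not call the Leap Motion SDK; the field order is an arbitrary choice.

```python
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class HandFrame:
    """One frame reduced to the quantities listed in Step 1 (hypothetical type)."""
    fingertips: List[Vec3]  # F1..F5: thumb, index, middle, ring, little
    palm_center: Vec3       # P_C
    palm_stable: Vec3       # P_S
    palm_velocity: Vec3     # v (assumed to be a 3-D vector)
    pitch: float
    yaw: float
    roll: float
    sphere_radius: float    # r
    palm_width: float       # P_W

    def to_vector(self) -> List[float]:
        """Flatten to 29 values: 15 fingertip coords, 9 palm coords, 5 scalars."""
        if len(self.fingertips) != 5:
            raise ValueError("expected five fingertip positions F1..F5")
        vec: List[float] = []
        for p in self.fingertips:
            vec.extend(p)
        vec.extend(self.palm_center)
        vec.extend(self.palm_stable)
        vec.extend(self.palm_velocity)
        vec.extend([self.pitch, self.yaw, self.roll,
                    self.sphere_radius, self.palm_width])
        return vec


def build_dataset(samples):
    """samples: iterable of (list_of_HandFrame, label) -> (X, y) lists."""
    X, y = [], []
    for frames, label in samples:
        X.append([f.to_vector() for f in frames])
        y.append(label)
    return X, y


if __name__ == "__main__":
    frame = HandFrame(
        fingertips=[(float(i), 0.0, 0.0) for i in range(5)],
        palm_center=(0.0, 150.0, 0.0),
        palm_stable=(0.0, 150.0, 0.0),
        palm_velocity=(0.0, 0.0, 0.0),
        pitch=0.1, yaw=0.0, roll=-0.2,
        sphere_radius=60.0, palm_width=80.0,
    )
    X, y = build_dataset([([frame, frame], "hello")])
    print(len(X), len(X[0]), len(X[0][0]), y)  # prints: 1 2 29 ['hello']
```

Each element of `X` is then a (frames × 29) sequence that a sequence model such as a CNN-BiGRU can consume.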

[0048] In the specific implementation, the sign language data set is acquired as follows: 10 acquisition subjects, 1...
