Time sequence classification method, system, medium and device based on multi-representation learning

A time series classification technology, applied in the field of time series data mining. It addresses three problems of existing approaches: they ignore the potential contribution of deep learning to representation learning, they lack an adaptive understanding and representation of diverse time series features, and they fail to improve classification accuracy. The stated effects are improved classification accuracy and interpretable classification results.

Examples

Embodiment 1

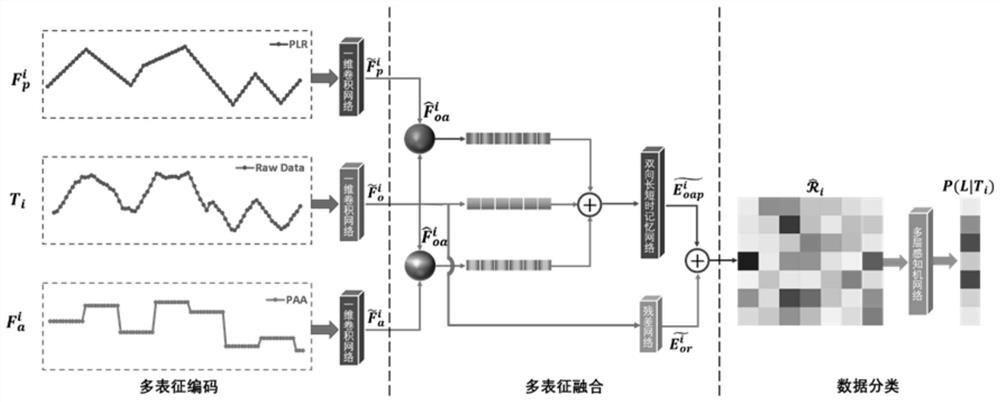

[0086] A time series classification method based on multi-representation learning. As shown in Figure 1, time series classification is realized by constructing a Multi-representation Learning Networks (MLN) model. The MLN model first uses a variety of representation strategies to understand the time series features comprehensively; second, it fuses the multiple representations using a residual network and a bidirectional long short-term memory network to achieve representation enhancement; finally, it uses a multi-layer perceptron network to classify the time series and, based on an attention mechanism, provides an interpretable basis for the classification results. The specific steps are as follows:

[0087] (1) Multi-feature encoding for a given time series based on different time series representation strategies;

[0088] (2) Using the residual network and the bidirectional long-short-term memory network to achieve representation fusion a...
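The attention-based interpretability mentioned in [0086] can be illustrated with a generic attention-pooling sketch. This is not the patent's formulation, which is not given in this excerpt: the dot-product scoring, the `query` vector (standing in for a learned parameter) and the function names are all assumptions. The point it shows is that softmax weights over time steps sum to one, so they can be read as the relative importance of each step to the pooled representation passed to a classifier.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(features, query):
    """Pool per-step feature vectors into one vector using attention weights.

    features: list of per-time-step vectors (all the same length).
    query:    hypothetical learned vector; dot-product scoring is an assumption.
    Returns (context_vector, weights); the weights are the interpretable part.
    """
    scores = [sum(f * q for f, q in zip(row, query)) for row in features]
    weights = softmax(scores)
    dim = len(features[0])
    context = [sum(w * row[c] for w, row in zip(weights, features))
               for c in range(dim)]
    return context, weights
```

In a full model the `context` vector would be fed to the multi-layer perceptron, and the `weights` would be inspected to see which time steps influenced the decision most.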

Embodiment 2

[0092] A time series classification method based on multi-representation learning according to Embodiment 1, the difference is:

[0093] As shown in Figure 1, in step (1), the specific implementation steps of multi-feature encoding include:

[0094] A. Perform feature encoding on the i-th time series T_i of the time series data set based on the Piecewise Linear Representation (PLR) strategy to obtain the first encoding sequence, where the time series data set includes N time series and 1≤i≤N;

[0095] B. Perform feature encoding on the time series T_i based on the Piecewise Aggregate Approximation (PAA) strategy to obtain the second encoding sequence;

[0096] C. Using a one-dimensional temporal convolution operation, perform data representation on the first encoding sequence obtained in step A, the second encoding sequence obtained in step B, and the time series T_i to obtain the basic representation sequences.
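The two encoding strategies named in steps A and B can be sketched as follows. This is a minimal illustration under stated assumptions, not the patent's exact encoding: segments are fixed-width and near-equal in length, PLR is approximated by a least-squares line per segment (adaptive segmentation is a common alternative), and the function names and the `n_segments` parameter are hypothetical.

```python
def segment_bounds(n, n_segments):
    """Split range(n) into n_segments near-equal, non-empty (start, end) spans."""
    if not 1 <= n_segments <= n:
        raise ValueError("n_segments must be between 1 and the series length")
    cuts = [k * n // n_segments for k in range(n_segments + 1)]
    return list(zip(cuts[:-1], cuts[1:]))


def paa(series, n_segments):
    """Piecewise Aggregate Approximation: the mean value of each segment."""
    return [sum(series[a:b]) / (b - a)
            for a, b in segment_bounds(len(series), n_segments)]


def fit_line(ys):
    """Least-squares (slope, intercept) for ys against local x = 0, 1, 2, ..."""
    m = len(ys)
    if m == 1:
        return 0.0, float(ys[0])
    x_mean = (m - 1) / 2
    y_mean = sum(ys) / m
    sxx = sum((x - x_mean) ** 2 for x in range(m))
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(ys))
    slope = sxy / sxx
    return slope, y_mean - slope * x_mean


def plr(series, n_segments):
    """Simplified Piecewise Linear Representation: one fitted line per segment."""
    return [fit_line(series[a:b])
            for a, b in segment_bounds(len(series), n_segments)]
```

For the series `[0, 1, 2, 3, 10, 8, 6, 4]` with two segments, `paa` keeps only the level of each half, while `plr` also keeps the trend (a rising line followed by a falling one), which is why the two encodings carry complementary information.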

[0097] The specific implementation process of step C is:

[0098] The first enc...
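As a rough illustration of the one-dimensional convolution named in step C, the sketch below slides a small kernel along a sequence and stacks the outputs of several kernels into per-time-step feature vectors. Zero padding that preserves the sequence length, odd kernel widths and hand-picked kernel values are all assumptions; in a trained model the kernels would be learned, and the patent's actual layer configuration is not shown in this excerpt.

```python
def conv1d_same(series, kernel):
    """Slide `kernel` over `series` with zero padding so the length is preserved.

    Uses the cross-correlation convention common in deep learning
    (the kernel is not flipped). The kernel width must be odd.
    """
    width = len(kernel)
    if width % 2 == 0:
        raise ValueError("kernel width must be odd for symmetric padding")
    pad = width // 2
    padded = [0.0] * pad + [float(v) for v in series] + [0.0] * pad
    return [sum(k * padded[t + j] for j, k in enumerate(kernel))
            for t in range(len(series))]


def characterize(series, kernels):
    """Apply several kernels; return one feature vector per time step."""
    channels = [conv1d_same(series, k) for k in kernels]
    return [list(step) for step in zip(*channels)]
```

Applying the same function to the raw series and to each encoded sequence would give one representation per input, each shaped as time steps by channels.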

Embodiment 3

[0104] A time series classification method based on multi-representation learning according to Embodiment 1, the difference is:

[0105] In step (2), the specific implementation steps of characterization fusion and enhancement include:

[0106] D. Perform element-wise multiplication on the basic representation sequences obtained in step C to fuse the representations;

[0107] E. Input the fused representation sequence obtained in step D into the residual network and the bidirectional long short-term memory network separately to capture the temporal characteristics in depth; then merge the representation sequences produced by the residual network and the bidirectional long short-term memory network to achieve representation fusion and enhancement.
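The data flow of steps D and E can be sketched as below. Only the element-wise product reflects the text directly. The two branches are deliberately simple stand-ins, not the patent's networks: `residual_branch` shows the skip-connection pattern (output = input + f(input)) with ReLU as f, and `bidirectional_branch` replaces the bidirectional LSTM with a forward and a backward exponential running state. Merging by concatenation, the `decay` parameter and all function names are assumptions.

```python
def elementwise_product(*sequences):
    """Step D: multiply same-shaped (time x channels) sequences position by position."""
    steps, channels = len(sequences[0]), len(sequences[0][0])
    for seq in sequences:
        if len(seq) != steps or any(len(row) != channels for row in seq):
            raise ValueError("all sequences must share the same shape")
    fused = [[1.0] * channels for _ in range(steps)]
    for seq in sequences:
        for t, row in enumerate(seq):
            for c, value in enumerate(row):
                fused[t][c] *= value
    return fused


def residual_branch(seq):
    """Stand-in residual block: input plus a transformed copy (ReLU here)."""
    return [[v + max(v, 0.0) for v in row] for row in seq]


def bidirectional_branch(seq, decay=0.5):
    """Stand-in for a BiLSTM: running state scanned forward and backward."""
    def scan(rows):
        state, out = [0.0] * len(rows[0]), []
        for row in rows:
            state = [decay * s + (1 - decay) * v for s, v in zip(state, row)]
            out.append(state)
        return out
    forward = scan(seq)
    backward = scan(seq[::-1])[::-1]
    return [f + b for f, b in zip(forward, backward)]  # concatenate per step


def fuse_and_enhance(*basic_representations):
    """Step D followed by step E, merging the two branches by concatenation."""
    fused = elementwise_product(*basic_representations)
    merged = zip(residual_branch(fused), bidirectional_branch(fused))
    return [res + bi for res, bi in merged]
```

With C channels per time step, the merged output here has 3C features per step: C from the residual stand-in and 2C from the two scan directions.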

[0108] In step D, the element-wise multiplication operation is shown in formula (IV) and formula (V):

[0109]

[0110]

[0111] In formula (IV) and formula (V),...
