Dynamic memory allocation method and device based on GPU (Graphics Processing Unit) and memory linked list

A dynamic memory allocation and memory linked-list technology, applied in the fields of memory address allocation/relocation, resource allocation, and multiprogramming devices. It addresses problems such as a large amount of computation, high computational complexity, and the inability to allocate memory dynamically. The effect of small memory

Examples

Embodiment 1

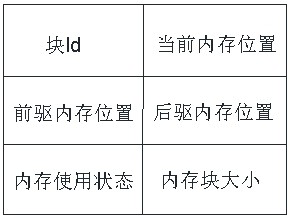

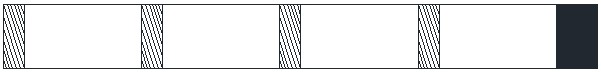

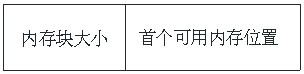

[0056] The present invention provides a preferred embodiment: a GPU-based dynamic memory allocation method. It can be used for dynamic memory allocation in OpenGL, a parallel computing architecture for GPU systems, in combination with mainstream graphics cards to realize general-purpose parallel computing on the GPU. The method has low computational complexity and requires little computation, so it better meets system requirements.

[0057] Preferably, the dynamic memory allocation method in this embodiment mainly covers three memory operations: initial memory allocation (allocate), memory release (free), and memory reallocation (reallocate). The invention is described in detail for each operation in turn. As shown in Figure 4, the initial memory allocation process includes:

[0058] Step S11, generate ...
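The excerpt names the three operations but truncates the steps from S11 onward, so the following is only a generic illustration of allocate, free, and reallocate over a memory linked list, not the patented procedure. It is a host-side Python sketch that assumes a first-fit policy, a hypothetical minimum allocation unit (`unit`), and hypothetical names (`Block`, `LinkedListAllocator`). Integer offsets stand in for positions inside GPU buffer memory.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Block:
    """One node of the hypothetical memory linked list."""
    offset: int
    size: int
    free: bool = True
    next: Optional["Block"] = None


class LinkedListAllocator:
    """First-fit allocator; sizes are rounded up to a multiple of `unit`."""

    def __init__(self, total_size: int, unit: int = 16) -> None:
        self.unit = unit
        self.head = Block(0, total_size - total_size % unit)

    def _round(self, size: int) -> int:
        # Ceiling to a multiple of unit; a zero-byte request still gets one unit.
        return -(-max(size, 1) // self.unit) * self.unit

    def _split(self, node: Block, size: int) -> None:
        # Turn any space beyond `size` into a new free node after `node`.
        if node.size > size:
            node.next = Block(node.offset + size, node.size - size, True, node.next)
            node.size = size

    def _coalesce(self) -> None:
        # Merge every run of adjacent free nodes into a single node.
        node = self.head
        while node and node.next:
            if node.free and node.next.free:
                node.size += node.next.size
                node.next = node.next.next
            else:
                node = node.next

    def _find(self, offset: int) -> Block:
        node = self.head
        while node and node.offset != offset:
            node = node.next
        if node is None or node.free:
            raise ValueError(f"offset {offset} is not an allocated block")
        return node

    def allocate(self, size: int) -> Optional[int]:
        size = self._round(size)
        node = self.head
        while node:
            if node.free and node.size >= size:  # first fit
                self._split(node, size)
                node.free = False
                return node.offset
            node = node.next
        return None  # no free block is large enough

    def free(self, offset: int) -> None:
        self._find(offset).free = True
        self._coalesce()

    def reallocate(self, offset: int, size: int) -> Optional[int]:
        node, size = self._find(offset), self._round(size)
        if size <= node.size:  # shrink in place
            self._split(node, size)
        elif node.next and node.next.free and node.size + node.next.size >= size:
            node.size += node.next.size  # grow into the free successor
            node.next = node.next.next
            self._split(node, size)
        else:  # move: allocate elsewhere, then release the old block
            new_offset = self.allocate(size)
            if new_offset is not None:
                self.free(offset)  # a real system would copy the contents first
            return new_offset
        self._coalesce()
        return offset

    def blocks(self) -> List[Tuple[int, int, bool]]:
        out, node = [], self.head
        while node:
            out.append((node.offset, node.size, node.free))
            node = node.next
        return out
```

First fit with eager coalescing keeps every operation to a single walk of the list, linear in the number of blocks; the patent's own bookkeeping for these operations may differ.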

Embodiment 2

[0135] Preferably, based on the OpenGL-based dynamic memory allocation method provided by the present invention, the invention also provides an OpenGL-based dynamic memory allocation device comprising a memory, a processor, and a computer program stored in the memory and executable on the processor. The computer program is a dynamic memory allocation program; when the processor executes it, the following steps of the initial memory allocation process are implemented:

[0136] Initialization step: the system initializes and generates multiple storage cache objects. One of them serves as the storage allocation lock and is recorded as the first storage cache object; the remaining ones are recorded as second storage cache objects, which together constitute all the memory blocks available for allocation in the system;
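Paragraph [0136] reserves one storage cache object as an allocation lock but, in this excerpt, does not say how the lock is taken. The Python sketch below assumes a simple spin lock: word 0 of the first object holds 0 (unlocked) or 1 (locked) and is claimed with compare-and-swap, while the remaining objects form the pool of allocatable memory. On a GPU, a natural counterpart would be an atomic such as GLSL `atomicCompSwap` on a shader storage buffer; here a `threading.Lock` merely emulates that atomicity. `StoragePool`, `atomic_comp_swap`, and the word layout are hypothetical.

```python
import threading
import time
from typing import List

# Emulates the atomicity that a GPU atomic compare-and-swap provides in hardware.
_ATOMIC = threading.Lock()


def atomic_comp_swap(words: List[int], index: int, compare: int, data: int) -> int:
    """Store `data` at words[index] only if it equals `compare`; return the old value."""
    with _ATOMIC:
        old = words[index]
        if old == compare:
            words[index] = data
        return old


class StoragePool:
    """Hypothetical model of the storage cache objects created at initialization."""

    def __init__(self, count: int, words_per_object: int) -> None:
        if count < 2:
            raise ValueError("need one lock object and at least one memory object")
        objects = [[0] * words_per_object for _ in range(count)]
        self.lock_object = objects[0]      # first storage cache object: the lock
        self.memory_objects = objects[1:]  # second storage cache objects: memory

    def try_lock(self) -> bool:
        # Word 0 of the lock object: 0 = unlocked, 1 = locked.
        return atomic_comp_swap(self.lock_object, 0, 0, 1) == 0

    def lock(self) -> None:
        while not self.try_lock():
            time.sleep(0)  # yield while another thread holds the allocation lock

    def unlock(self) -> None:
        atomic_comp_swap(self.lock_object, 0, 1, 0)
```

A caller would wrap every update of allocator metadata (such as the linked-list nodes) between `lock()` and `unlock()`, so that concurrent allocation requests cannot corrupt the shared structure.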

[0137] Element setting step: Obtain the memory size and the minimum memory un...

Embodiment 3

[0162] Preferably, based on the OpenGL-based dynamic memory allocation method provided by the present invention, the invention also provides a storage medium: a computer-readable storage medium storing a computer program. The computer program is a dynamic memory allocation program, and when it is executed by a processor, the steps of the initial memory allocation process are implemented. These steps specifically include:

[0163] Initialization step: the system initializes and generates multiple storage cache objects. One of them serves as the storage allocation lock and is recorded as the first storage cache object; the remaining ones are recorded as second storage cache objects, which together constitute all the memory blocks available for allocation in the system;

[0164] Element setting step: Obtain the memory size and the minim...
