Low-resource language OCR (Optical Character Recognition) method fusing language information

An OCR technique for low-resource languages that fuses language information. It applies to the fields of instruments, computing, and character and pattern recognition. It addresses the scarcity of training data, and aims to fit the characteristics of the data set well, cover it comprehensively, and improve recognition performance.


Embodiment 1

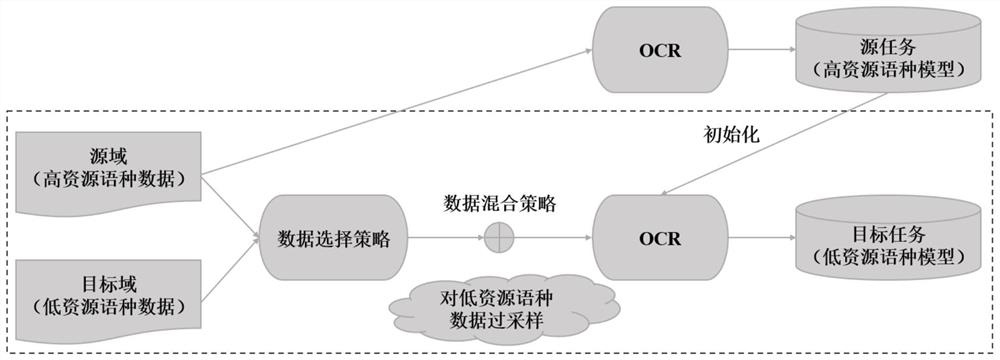

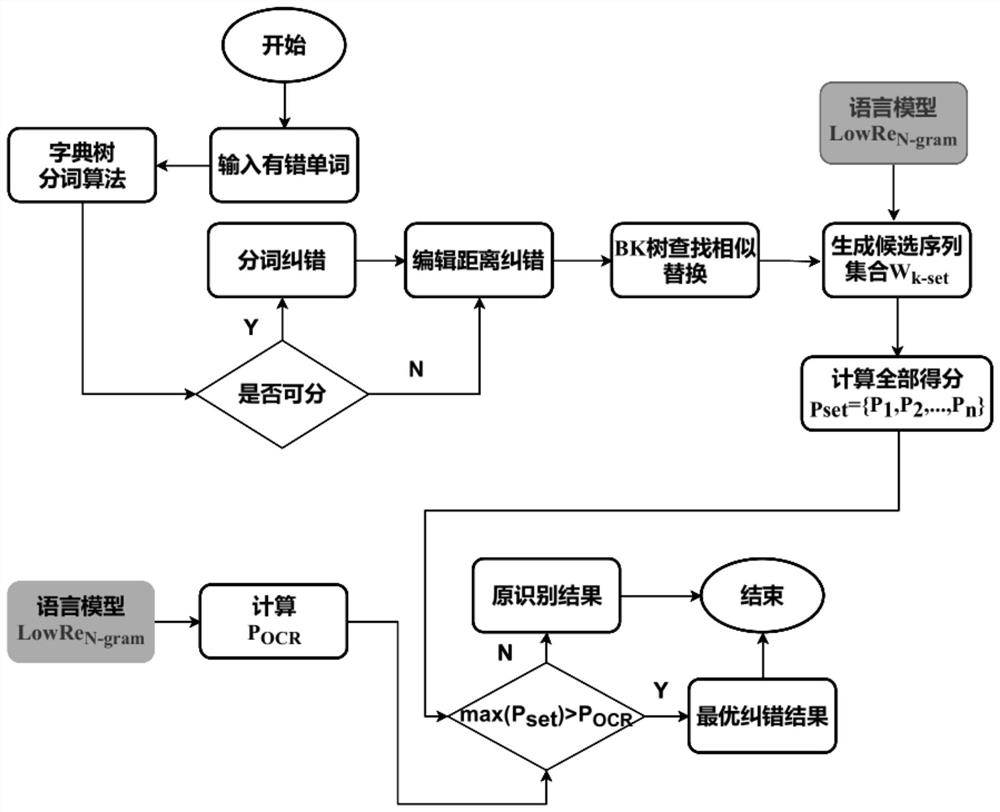

[0025] The low-resource language OCR method fusing language information according to the present invention includes the following. Open-source text in the low-resource language is obtained and rendered into images, and the OCR training data for the low-resource language is augmented based on image and text characteristics. A high-resource language that is highly similar to the low-resource language is selected based on inter-language similarity, and a hybrid fine-tuning transfer strategy is applied to transfer the OCR model of the high-resource language to an OCR model for the low-resource language. Recognition is then performed with this OCR model, and the score of each recognition result is used as the basis for judging whether the result contains errors. Vocabulary detection is carried out on low-scoring sentences to locate and identify the misrecognized words, and multi-strategy fusion is used to generate candidate corrections based on the vocabulary and edit distance; finally, each correction s...
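The error-detection and candidate-generation step described above can be sketched roughly as follows. This is a minimal illustration, not the patented implementation: the function names, the `threshold` of 0.8, the `max_dist` of 2, and whitespace tokenization are all assumptions made for the example, and the "multi-strategy fusion" is reduced here to a single strategy (vocabulary lookup ranked by Levenshtein edit distance).

```python
from typing import Dict, List, Set


def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance computed with a rolling one-row dynamic program."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def find_suspect_words(sentence: str, vocab: Set[str]) -> List[str]:
    """Vocabulary detection: flag tokens that are not in the vocabulary.

    Assumes whitespace-separated tokens (hypothetical simplification).
    """
    return [w for w in sentence.split() if w not in vocab]


def candidates(word: str, vocab: Set[str], max_dist: int = 2) -> List[str]:
    """Vocabulary entries within max_dist edits of word, closest first."""
    scored = sorted((edit_distance(word, v), v) for v in vocab)
    return [v for d, v in scored if d <= max_dist]


def propose_corrections(sentence: str, score: float, vocab: Set[str],
                        threshold: float = 0.8,
                        max_dist: int = 2) -> Dict[str, List[str]]:
    """Only low-scoring recognition results are checked for errors."""
    if score >= threshold:
        return {}
    return {w: candidates(w, vocab, max_dist)
            for w in find_suspect_words(sentence, vocab)}


if __name__ == "__main__":
    vocab = {"the", "low", "language", "model"}
    # A recognition result with a low score and one misrecognized word.
    print(propose_corrections("the languaqe model", 0.5, vocab))
```

Gating on the recognition score first keeps the vocabulary check away from confident outputs, which matches the order of steps in the paragraph; how the candidates are subsequently ranked or selected is not covered by this sketch.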
