FPGA-oriented deep convolutional neural network accelerator and design method

This FPGA-oriented deep convolutional neural network (DCNN) accelerator and its design method address problems such as the limited calculation speed of network models and the difficulty of deploying them on FPGAs for acceleration. The approach reduces the amount of calculation, lowers the demand for storage space and computing resources, and improves calculation speed.


Embodiment Construction

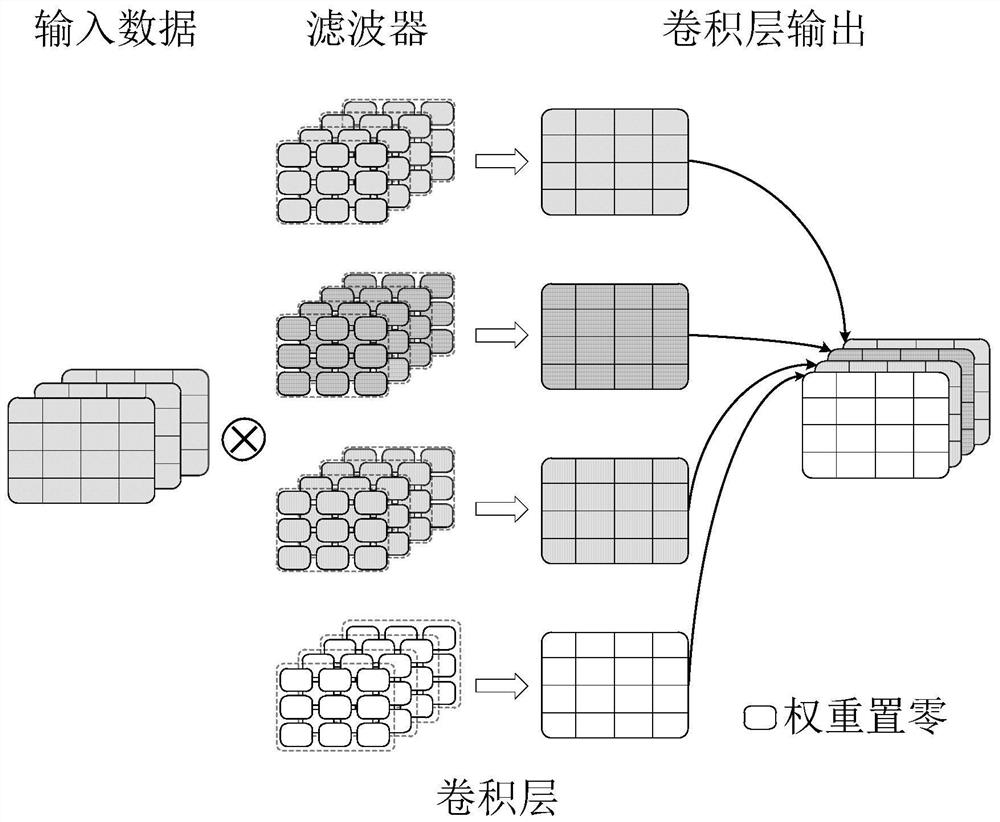

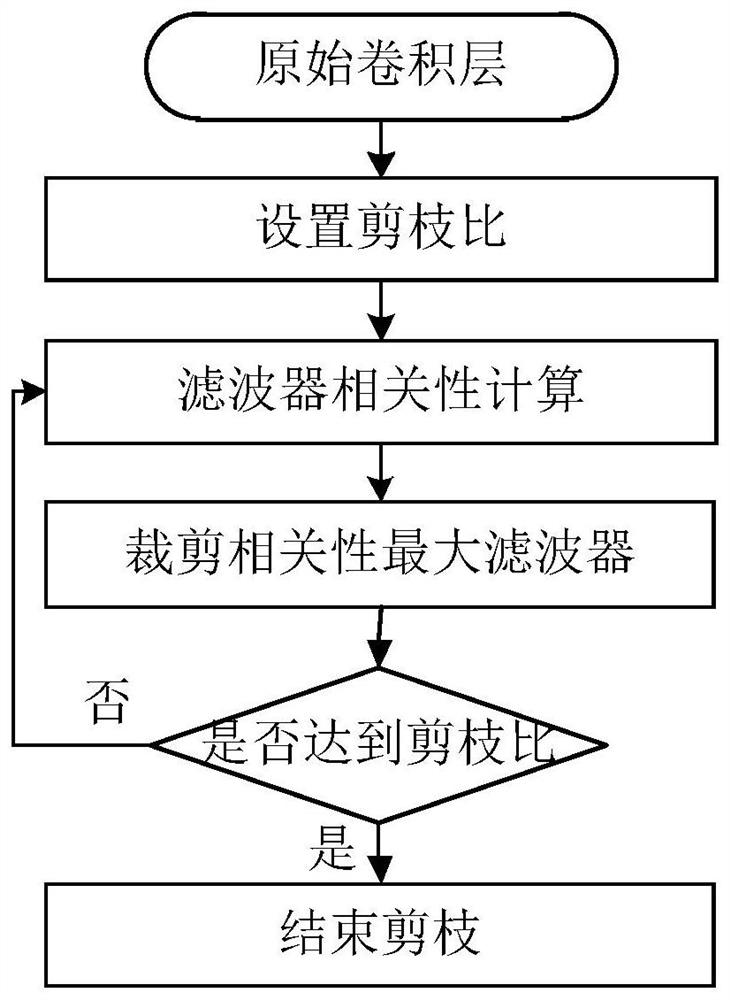

[0060] Referring to Figures 1 to 12, the present invention proposes an FPGA-oriented DCNN accelerator design method comprising a DCNN model pruning unit, a DCNN model quantization unit, and a hardware structure design unit. The DCNN model pruning unit in turn comprises a model pre-training unit, a filter correlation calculation unit, a filter soft pruning unit, a filter hard pruning unit, a pruning sensitivity calculation unit, and a model retraining unit. These units are connected to one another, and together they produce the final FPGA-based DCNN accelerator.
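To make the roles of the filter correlation, soft pruning, and hard pruning units more concrete, here is a minimal sketch in plain Python. It is not the patent's method: the excerpt does not say how correlation is measured or how filters are selected, so the Pearson correlation, the L1-norm tie-break, and all function names (`pearson`, `select_redundant_filters`, `soft_prune`, `hard_prune`) are assumptions made for illustration. It also assumes the common reading that soft pruning zeroes a filter but keeps its slot (so retraining could still update it), while hard pruning removes the filter outright.

```python
import math


def pearson(a, b):
    """Pearson correlation of two flattened filters (assumed similarity measure)."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0  # a constant filter carries no correlation information
    return cov / math.sqrt(var_a * var_b)


def l1_norm(f):
    return sum(abs(x) for x in f)


def select_redundant_filters(filters, prune_ratio):
    """Greedily pick filters to prune: repeatedly find the most correlated
    remaining pair and mark the member with the smaller L1 norm (hypothetical rule)."""
    num_to_prune = int(len(filters) * prune_ratio)
    remaining = list(range(len(filters)))
    pruned = []
    for _ in range(num_to_prune):
        if len(remaining) < 2:
            break
        best_pair, best_corr = None, -1.0
        for a in range(len(remaining)):
            for b in range(a + 1, len(remaining)):
                i, j = remaining[a], remaining[b]
                c = abs(pearson(filters[i], filters[j]))
                if c > best_corr:
                    best_pair, best_corr = (i, j), c
        i, j = best_pair
        victim = i if l1_norm(filters[i]) <= l1_norm(filters[j]) else j
        pruned.append(victim)
        remaining.remove(victim)
    return sorted(pruned)


def soft_prune(filters, pruned):
    """Zero the selected filters but keep the layer shape unchanged."""
    return [[0.0] * len(f) if i in pruned else list(f)
            for i, f in enumerate(filters)]


def hard_prune(filters, pruned):
    """Physically remove the selected filters, shrinking the layer."""
    return [list(f) for i, f in enumerate(filters) if i not in pruned]


if __name__ == "__main__":
    # Four toy filters, each flattened to 4 weights; filter 1 is 2x filter 0.
    layer = [[1.0, 2.0, 3.0, 4.0], [2.0, 4.0, 6.0, 8.0],
             [1.0, -1.0, 1.0, -1.0], [0.5, 0.1, -0.3, 0.2]]
    idx = select_redundant_filters(layer, prune_ratio=0.25)
    print("pruned indices:", idx)
    print("after soft pruning:", soft_prune(layer, idx))
    print("after hard pruning:", hard_prune(layer, idx))
```

In this toy layer, filters 0 and 1 are perfectly correlated, so one of them is redundant; the sketch drops the one with the smaller L1 norm. Only the hard-pruned layer actually reduces the storage and computation that an FPGA implementation would need.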

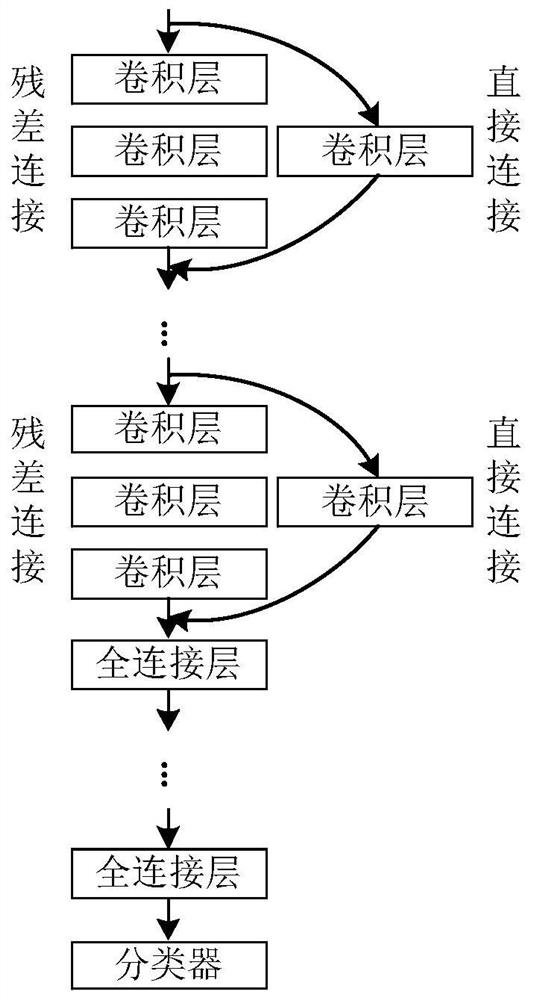

[0061] The model pre-training unit is connected to the filter correlation calculation unit, the pruning sensitivity calculation unit, the filter soft pruning unit, and the filter hard pruning unit. This unit trains a complex DCNN model structure for the target task. As shown in Figure 1, the structure includes residual connections and direct connections; residual connections appear in some multi-branch models, and direct c...
