Hardware accelerator capable of configuring sparse attention mechanism

A hardware accelerator technology for attention mechanisms, applied to digital computer components, instruments, computers, etc. It addresses the lack of hardware acceleration capability and maintains accuracy while achieving low chip area and high throughput.

Embodiment Construction

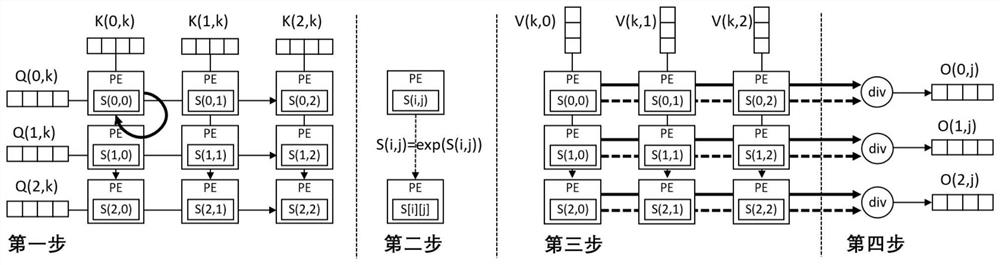

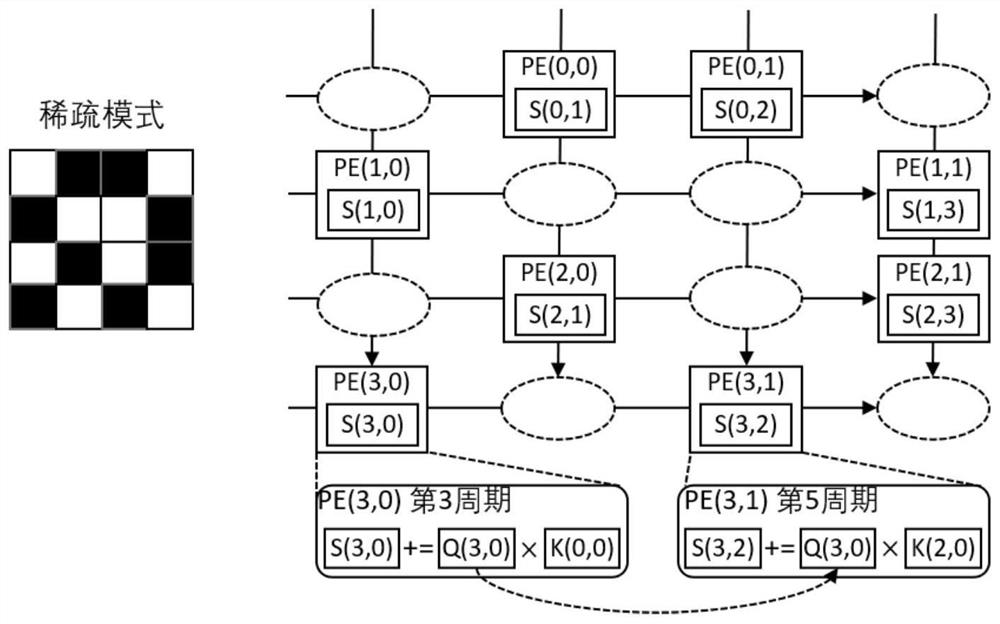

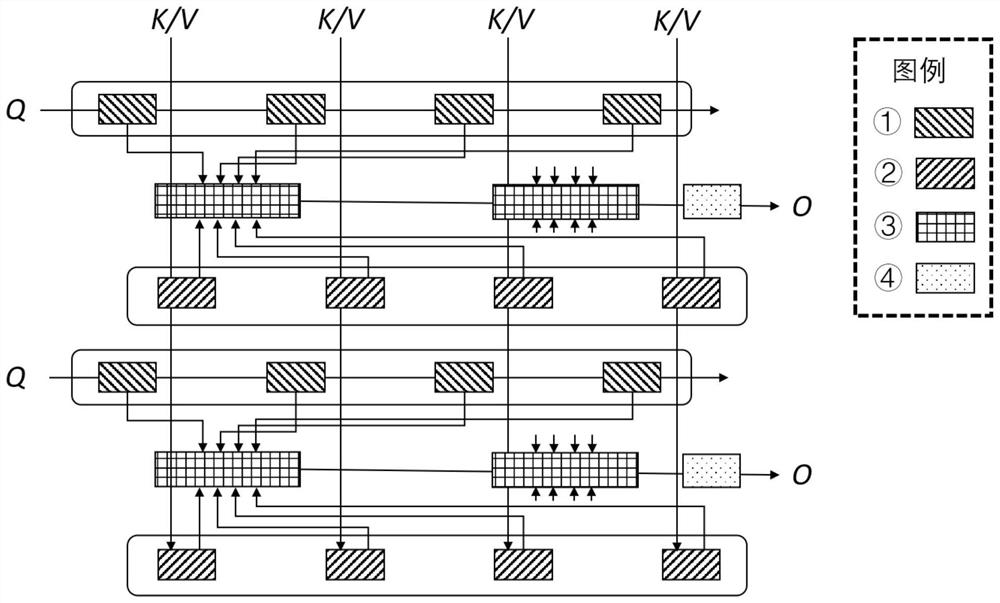

[0040] The present invention is further described below by way of embodiments in conjunction with the accompanying drawings; the embodiments do not limit the scope of the invention in any way. The present invention provides a hardware accelerator with a configurable sparse attention mechanism, comprising a sampled dense matrix multiplication module, a mask segmentation and packaging module, and a configurable sparse matrix multiplication module. In natural language processing and computer vision tasks, when inference is performed with an artificial neural network that contains an attention mechanism, such as a Transformer structure, the three input matrices Q, K and V can be sent to the hardware accelerator provided by the present invention and the accelerator's output matrix received, thereby increasing computation speed.
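The three modules named above correspond loosely to the usual decomposition of masked (sparse) attention, and a small software model can make that correspondence concrete. The sketch below is a minimal illustration under several assumptions that the text does not confirm. It assumes a chain of SDDMM (scores computed only at unmasked positions), a row-wise softmax, and then SpMM with V. It uses CSR as a hypothetical stand-in for whatever format the mask segmentation and packaging module actually produces. It applies the standard 1/sqrt(d) scaling of scaled dot-product attention. All function names (`pack_mask`, `sddmm`, `row_softmax`, `spmm`, `sparse_attention`) are illustrative and are not taken from the patent. The model captures the arithmetic only, not the hardware dataflow, segmentation, or configurability.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]
CSR = Tuple[List[int], List[int]]  # (row_ptr, col_idx); hypothetical packed-mask format


def pack_mask(mask: List[List[bool]]) -> CSR:
    """Assumed stand-in for mask packaging: compress a boolean mask into CSR indices."""
    row_ptr, col_idx = [0], []
    for mask_row in mask:
        col_idx.extend(j for j, keep in enumerate(mask_row) if keep)
        row_ptr.append(len(col_idx))
    return row_ptr, col_idx


def sddmm(q: Matrix, k: Matrix, packed: CSR) -> List[float]:
    """Sampled dense-dense matmul: scaled dot products q_i . k_j only at kept (i, j)."""
    row_ptr, col_idx = packed
    scale = 1.0 / math.sqrt(len(q[0]))  # assumed scaled dot-product attention
    scores = []
    for i in range(len(row_ptr) - 1):
        for p in range(row_ptr[i], row_ptr[i + 1]):
            j = col_idx[p]
            scores.append(scale * sum(a * b for a, b in zip(q[i], k[j])))
    return scores


def row_softmax(packed: CSR, scores: List[float]) -> List[float]:
    """Numerically stable softmax over the kept entries of each row."""
    row_ptr, _ = packed
    probs = list(scores)
    for i in range(len(row_ptr) - 1):
        start, end = row_ptr[i], row_ptr[i + 1]
        if start == end:
            continue  # fully masked row: no entries, so its output row stays zero
        m = max(probs[start:end])
        exps = [math.exp(s - m) for s in probs[start:end]]
        total = sum(exps)
        probs[start:end] = [e / total for e in exps]
    return probs


def spmm(packed: CSR, probs: List[float], v: Matrix) -> Matrix:
    """Sparse (CSR) x dense matmul: out[i] = sum over kept j of P[i][j] * v[j]."""
    row_ptr, col_idx = packed
    out = [[0.0] * len(v[0]) for _ in range(len(row_ptr) - 1)]
    for i in range(len(row_ptr) - 1):
        for p in range(row_ptr[i], row_ptr[i + 1]):
            weight, v_row = probs[p], v[col_idx[p]]
            for c, x in enumerate(v_row):
                out[i][c] += weight * x
    return out


def sparse_attention(q: Matrix, k: Matrix, v: Matrix, mask: List[List[bool]]) -> Matrix:
    """Masked attention computed only over the positions the mask keeps."""
    packed = pack_mask(mask)
    scores = sddmm(q, k, packed)
    return spmm(packed, row_softmax(packed, scores), v)


if __name__ == "__main__":
    # Sliding-window mask: each query attends to itself and its left neighbour.
    n = 4
    window = [[0 <= i - j <= 1 for j in range(n)] for i in range(n)]
    q = k = [[float(i), 1.0] for i in range(n)]
    v = [[float(i), float(-i)] for i in range(n)]
    print(sparse_attention(q, k, v, window))
```

Keeping the packed index arrays separate from the score values means the same `row_ptr`/`col_idx` can drive both the sampled multiplication and the sparse multiplication. This is one plausible reason a dedicated packaging stage could sit between them, though the patent text shown here does not say how its modules share data.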

[0041] In natural language processing, the Q and K matrices are the encoding matrices of the words in the text, and V is the encoding ma...