Backbone two-way image semantic segmentation method for scene understanding of mobile robots in complex environments

This method combines mobile robotics with semantic segmentation and belongs to the field of image processing. It addresses the inability to find a solution, time-consuming and labor-intensive training, and high equipment requirements. Its stated effects are a simple structure, improved accuracy, and improved depth.

Examples

Embodiment 1

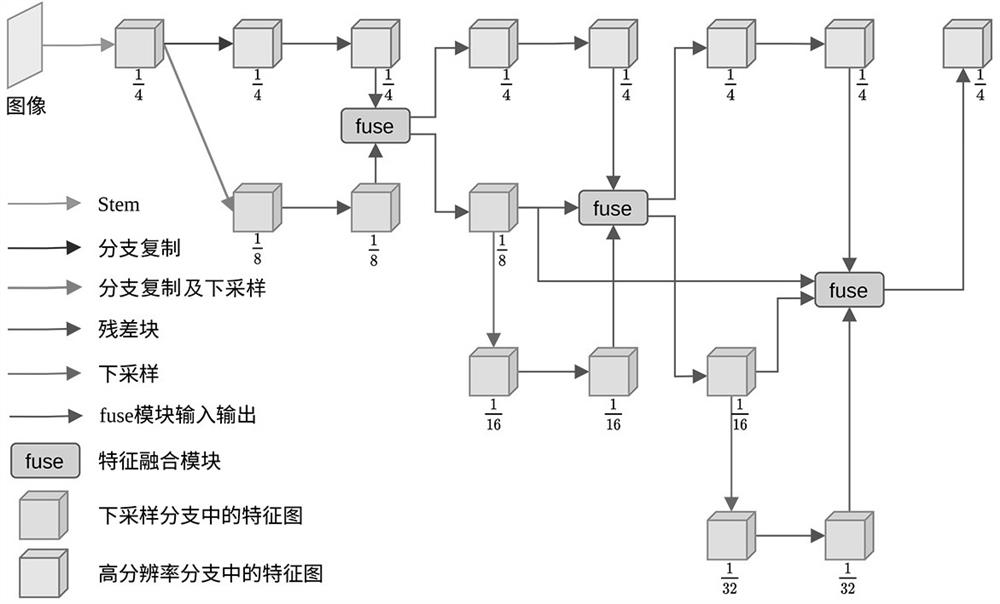

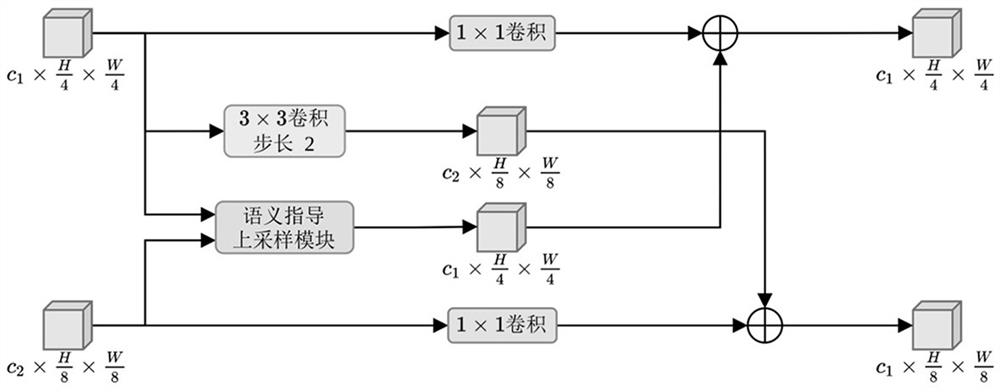

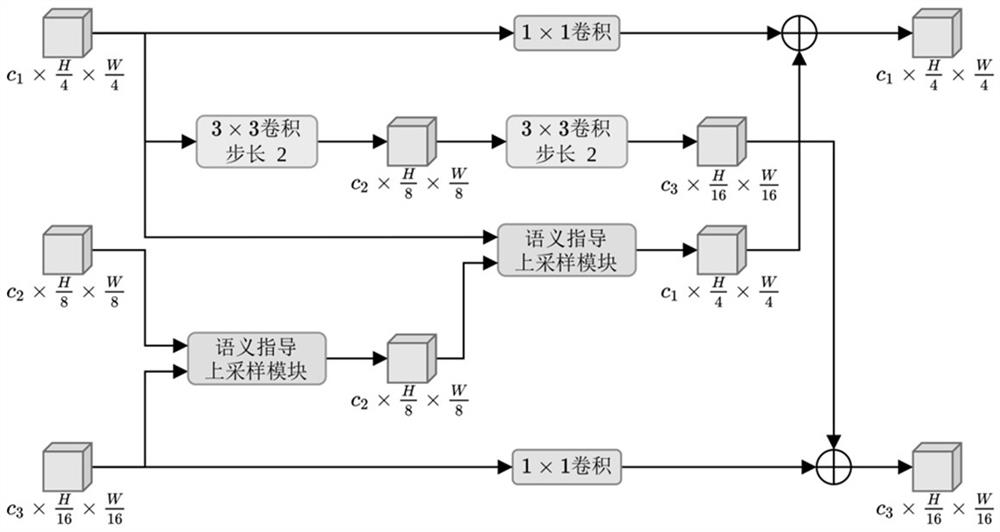

[0051] A backbone two-way image semantic segmentation method for scene understanding of mobile robots in complex environments, comprising the following steps:

[0052] S1: Input the image to be segmented into the encoder of the image semantic segmentation model (the network architecture of the encoder is shown in Figure 1). The initial module of the encoder, which is a stem module, extracts an initial feature map from the image to be segmented. The spatial size of the initial feature map is 1/2 that of the image to be segmented. The initial feature map is then fed into both the high-resolution branch and the downsampling branch for processing.
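
The size relation in S1 can be illustrated with a minimal pure-Python sketch. The patent excerpt does not describe the layers inside the stem module, so this sketch assumes a single stride-2 3×3 convolution with padding 1 followed by ReLU, on a single-channel image. The names `conv_out_size`, `conv2d`, `stem`, and `encoder_entry` are hypothetical and do not come from the patent.

```python
from typing import Dict, List

Matrix = List[List[float]]


def conv_out_size(size: int, kernel: int = 3, stride: int = 2, padding: int = 1) -> int:
    """Standard convolution output-size formula: floor((n + 2p - k) / s) + 1."""
    return (size + 2 * padding - kernel) // stride + 1


def conv2d(image: Matrix, kernel: Matrix, stride: int = 2, padding: int = 1) -> Matrix:
    """Single-channel 2D cross-correlation (as used in CNNs) with zero padding."""
    h, w = len(image), len(image[0])
    k = len(kernel)
    out_h = conv_out_size(h, k, stride, padding)
    out_w = conv_out_size(w, k, stride, padding)
    out = [[0.0] * out_w for _ in range(out_h)]
    for i in range(out_h):
        for j in range(out_w):
            acc = 0.0
            for di in range(k):
                for dj in range(k):
                    r = i * stride + di - padding
                    c = j * stride + dj - padding
                    if 0 <= r < h and 0 <= c < w:  # positions outside the image count as zero
                        acc += image[r][c] * kernel[di][dj]
            out[i][j] = acc
    return out


def relu(x: Matrix) -> Matrix:
    return [[max(0.0, v) for v in row] for row in x]


def stem(image: Matrix, kernel: Matrix) -> Matrix:
    """Hypothetical stem: one stride-2 3x3 convolution + ReLU, halving height and width."""
    return relu(conv2d(image, kernel, stride=2, padding=1))


def encoder_entry(image: Matrix, kernel: Matrix) -> Dict[str, Matrix]:
    """Route the same initial feature map to both branches (branch bodies omitted)."""
    initial = stem(image, kernel)
    return {"high_resolution": initial, "downsampling": initial}
```

With these assumed settings, a 1024×2048 Cityscapes frame gives `conv_out_size(1024) == 512` and `conv_out_size(2048) == 1024`, i.e. an initial feature map at 1/2 resolution. A real stem would operate on multi-channel tensors and may contain more layers; only the halving behavior is shown here.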

[0053] S2: In the high-resolution branch, pass the initial feature map through a residual network (a ResNet18 network) for feature extraction to obtain a first-level high-resolution feature map with the same spatial size as t...
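
The residual feature extraction in S2 can be sketched with ResNet-style basic blocks, the building unit of ResNet18, computing `relu(F(x) + x)`. This is a simplified, single-channel sketch under stated assumptions: batch normalization is omitted, every convolution uses stride 1 and padding 1 so the spatial size is preserved, and two blocks are stacked (as in one ResNet18 stage). How the patent actually configures its ResNet18 branch is not given in the excerpt; `basic_block` and `high_resolution_branch` are hypothetical names.

```python
from typing import List

Matrix = List[List[float]]


def conv3x3_same(x: Matrix, kernel: Matrix) -> Matrix:
    """Single-channel 3x3 convolution, stride 1, zero padding 1: output size equals input size."""
    h, w = len(x), len(x[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for di in range(3):
                for dj in range(3):
                    r, c = i + di - 1, j + dj - 1
                    if 0 <= r < h and 0 <= c < w:
                        acc += x[r][c] * kernel[di][dj]
            out[i][j] = acc
    return out


def relu(x: Matrix) -> Matrix:
    return [[max(0.0, v) for v in row] for row in x]


def add(a: Matrix, b: Matrix) -> Matrix:
    return [[u + v for u, v in zip(ra, rb)] for ra, rb in zip(a, b)]


def basic_block(x: Matrix, k1: Matrix, k2: Matrix) -> Matrix:
    """Basic residual block with identity shortcut: relu(F(x) + x), F(x) = conv(relu(conv(x)))."""
    residual = conv3x3_same(relu(conv3x3_same(x, k1)), k2)
    return relu(add(residual, x))


def high_resolution_branch(x: Matrix, kernels: List[Matrix], num_blocks: int = 2) -> Matrix:
    """Stack stride-1 basic blocks; each block uses two kernels and keeps the spatial size."""
    for b in range(num_blocks):
        x = basic_block(x, kernels[2 * b], kernels[2 * b + 1])
    return x
```

The identity shortcut lets a block fall back to passing its input through unchanged: with all-zero kernels and a non-negative input, `basic_block` returns the input as-is, which is what makes deep residual stacks easy to train.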

Embodiment 2

[0075] This embodiment provides a method for training the image semantic segmentation model described in Embodiment 1. The steps of the method are as follows:

[0076] A: Obtain a sample image set containing a plurality of sample images. Each sample image contains sample segmentation regions and the sample category information corresponding to each region. The sample image set is randomly divided into a training set, a validation set, and a test set. The sample images are drawn from at least one of three image datasets: ImageNet, Cityscapes, and ADE20K.
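
The random division in step A can be sketched as a seeded shuffle followed by slicing into three disjoint subsets. The patent does not state the split ratios, so the 80/10/10 defaults below are assumptions, and `split_dataset` is a hypothetical helper.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_dataset(
    samples: Sequence[T],
    train_ratio: float = 0.8,  # assumed ratio, not from the patent
    val_ratio: float = 0.1,    # assumed ratio, not from the patent
    seed: int = 0,
) -> Tuple[List[T], List[T], List[T]]:
    """Randomly partition samples into disjoint training, validation, and test sets.

    The test set receives whatever remains after the training and validation shares.
    """
    if not (train_ratio > 0 and val_ratio >= 0 and train_ratio + val_ratio <= 1):
        raise ValueError("ratios must be non-negative and sum to at most 1")
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)  # local RNG: reproducible, leaves global state alone
    n_train = int(len(shuffled) * train_ratio)
    n_val = int(len(shuffled) * val_ratio)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test
```

For example, splitting 100 sample identifiers with the assumed defaults yields 80 training, 10 validation, and 10 test samples. Fixing the seed keeps the split reproducible across training runs.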

[0077] B: Input the sample images in the training set into the pre-built image semantic segmentation model for detection to obtain their semantic segmentation results. A semantic segmentation result includes the feature regions of the sample image obtained through semantic recognition and the corresponding categ...
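
One common way to turn a model's output into "feature regions with corresponding categories", as step B describes, is a per-pixel argmax over class scores followed by grouping pixels by predicted class. The sketch below assumes the model outputs a score map `scores[c][i][j]` per class; `label_map_from_scores`, `regions_by_category`, and the class names in the example are hypothetical, and the patent's exact output format is not given in the excerpt.

```python
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

Pixel = Tuple[int, int]


def label_map_from_scores(scores: Sequence[Sequence[Sequence[float]]]) -> List[List[int]]:
    """scores[c][i][j] is the score of class c at pixel (i, j); pick the best class per pixel."""
    num_classes = len(scores)
    h, w = len(scores[0]), len(scores[0][0])
    return [
        [max(range(num_classes), key=lambda c: scores[c][i][j]) for j in range(w)]
        for i in range(h)
    ]


def regions_by_category(label_map: List[List[int]], class_names: Sequence[str]) -> Dict[str, List[Pixel]]:
    """Group pixel coordinates by predicted category, giving one region per category."""
    regions: Dict[str, List[Pixel]] = defaultdict(list)
    for i, row in enumerate(label_map):
        for j, c in enumerate(row):
            regions[class_names[c]].append((i, j))
    return dict(regions)
```

For a 2×2 image with two hypothetical classes `["road", "person"]`, scores favoring "road" on the diagonal produce the label map `[[0, 1], [1, 0]]` and the regions `{"road": [(0, 0), (1, 1)], "person": [(0, 1), (1, 0)]}`. A region here is simply the set of pixels sharing a category; splitting it into connected components would be a separate, optional step.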
