Array calculation accelerator architecture based on binary neural network

A binarized neural network technology applied in the field of array computing accelerator architecture. It addresses problems such as slow speed, high computing power consumption, and low reconfigurability, with the effects of reducing area, meeting computing requirements, and enhancing versatility.

Detailed Description of Embodiments

[0026] The technical solution of the present invention is described in detail below with reference to the accompanying drawings.

[0027] Currently, to perform matrix multiplication, each processing unit in the array usually consists of a multiplier, an adder, and a register, which perform multiplication, accumulation, and partial-sum storage, respectively. However, the multiplier occupies a large chip area, and each multiplication introduces delay and consumes considerable power. These problems limit the performance of the computing array to a certain extent.
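To illustrate why binarization can remove the multiplier, the sketch below contrasts a conventional multiply-accumulate processing unit with the XNOR/popcount formulation commonly used for binarized networks. This is a generic illustration, not the specific circuit claimed here: it assumes values in {-1, +1} are encoded as bits (+1 as 1, -1 as 0), and the function names `mac_pe`, `pack_signs`, and `xnor_popcount_pe` are hypothetical.

```python
def mac_pe(a_row, b_col):
    """Conventional processing unit: multiplier + adder + partial-sum register."""
    partial_sum = 0                      # register holding the partial sum
    for a, b in zip(a_row, b_col):
        product = a * b                  # multiplier
        partial_sum += product           # adder, result written back to the register
    return partial_sum


def pack_signs(values):
    """Hypothetical encoding: +1 -> bit 1, -1 -> bit 0; element k goes to bit k."""
    word = 0
    for k, v in enumerate(values):
        if v == 1:
            word |= 1 << k
        elif v != -1:
            raise ValueError("binarized values must be +1 or -1")
    return word


def xnor_popcount_pe(a_word, b_word, n):
    """Binarized processing unit: XNOR replaces multiply, popcount replaces the adder chain.

    The product of two +/-1 values is +1 exactly when their bits agree, so
    dot = (#agree) - (#disagree) = 2 * popcount(XNOR(a, b)) - n.
    """
    mask = (1 << n) - 1
    agree = ~(a_word ^ b_word) & mask    # XNOR restricted to the n valid bits
    matches = bin(agree).count("1")      # popcount
    return 2 * matches - n
```

For example, with `a = [1, 1, -1, 1, -1]` and `b = [1, -1, -1, 1, 1]`, both `mac_pe(a, b)` and `xnor_popcount_pe(pack_signs(a), pack_signs(b), 5)` yield the same dot product, but the second needs only bitwise logic and a bit count.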

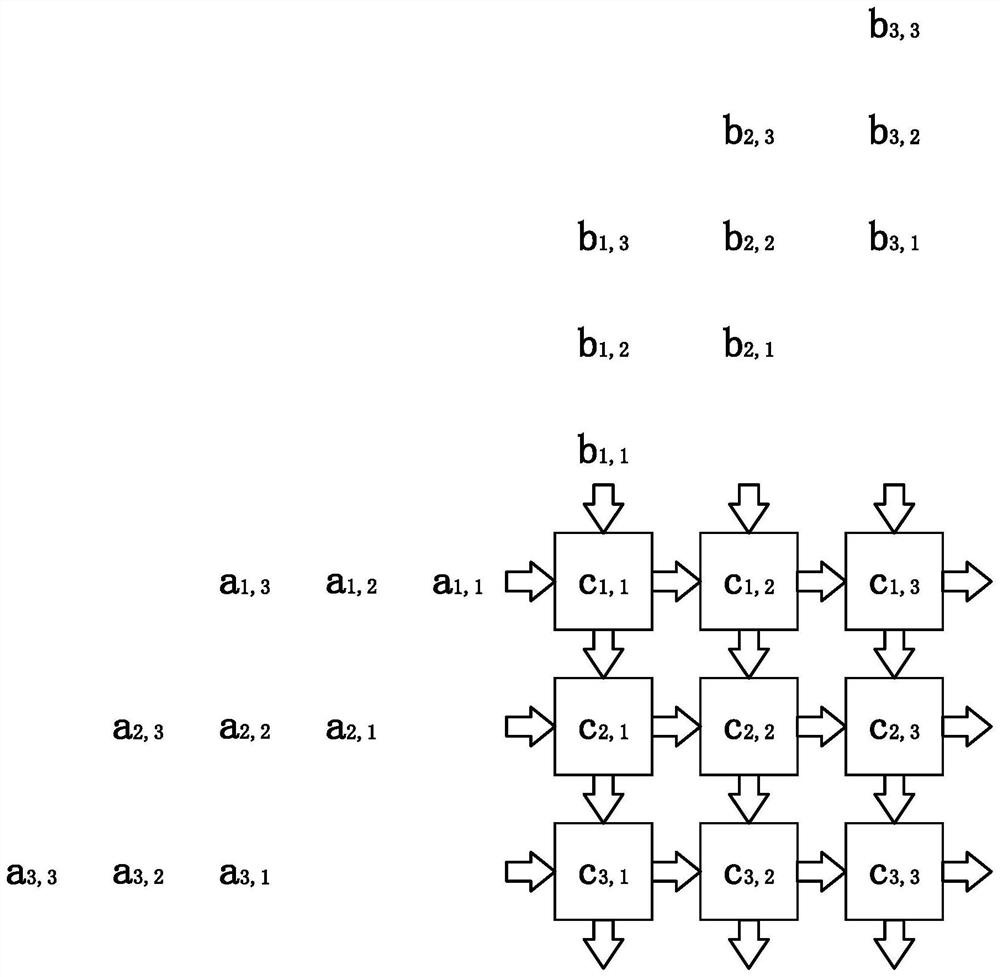

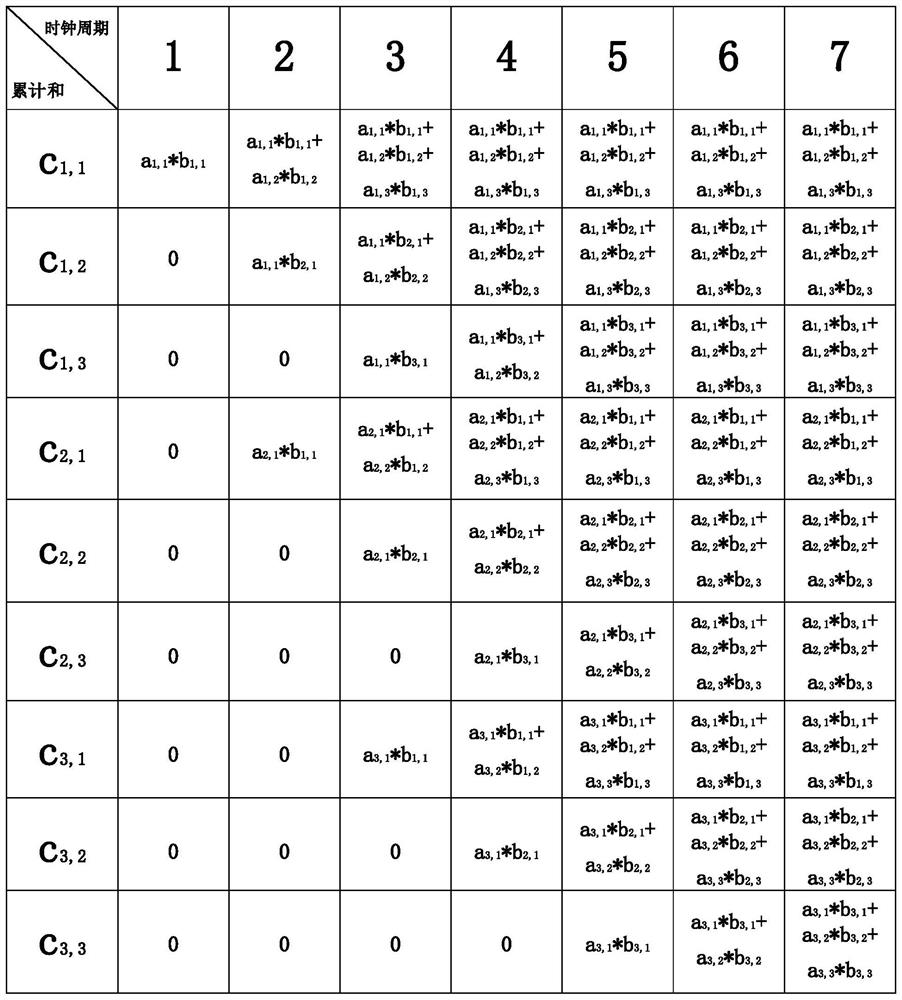

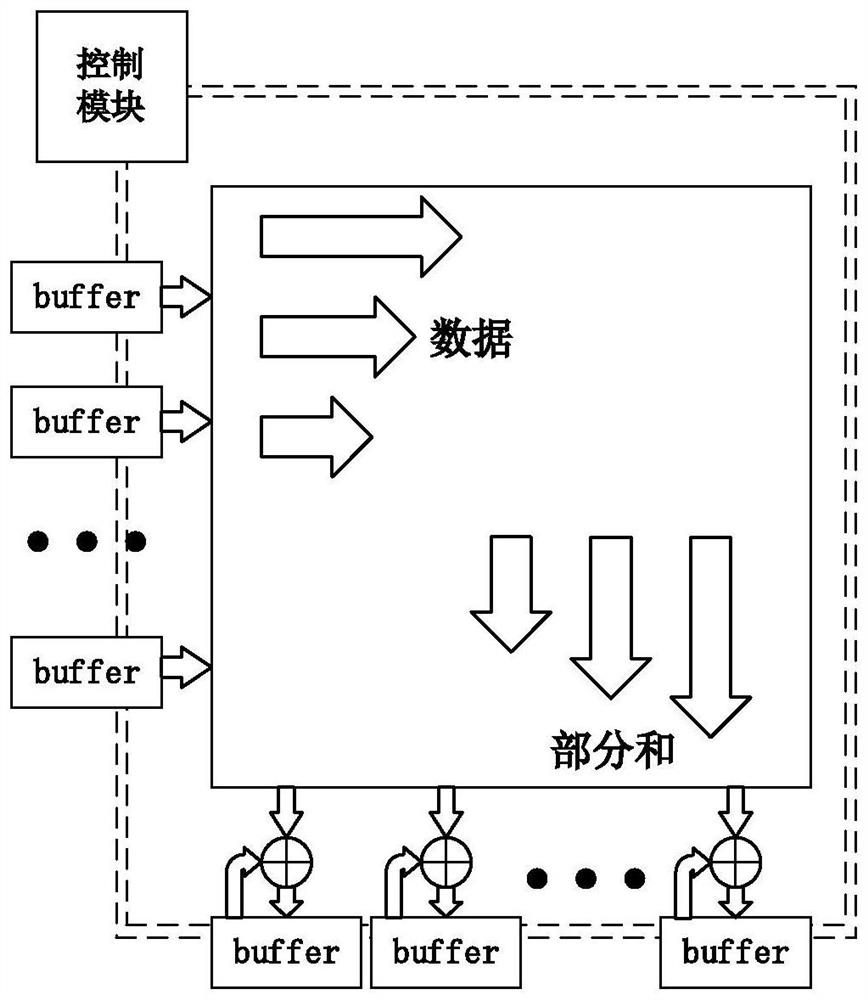

[0028] The computation performed by an FC (fully connected) layer in a neural network is essentially matrix multiplication. For this computation, the computing core operates by block matrix multiplication. The general process is: for a matrix A with i rows an...
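The specific blocking scheme is truncated in the source, so the following is only a generic sketch of block matrix multiplication under assumed parameters: a square tile size `tile` (hypothetical) and the hypothetical function names `matmul_naive` and `matmul_blocked`. Each output block accumulates partial sums over successive blocks of the inner dimension, which is the role the partial-sum register plays in an array processing unit.

```python
def matmul_naive(A, B):
    """Reference matrix product C = A x B."""
    n, k, m = len(A), len(B), len(B[0])
    return [[sum(A[r][t] * B[t][c] for t in range(k)) for c in range(m)] for r in range(n)]


def matmul_blocked(A, B, tile=2):
    """Block matrix multiplication with a hypothetical square tile size.

    The output C is produced block by block; each (row-block, column-block)
    of C accumulates partial sums from consecutive blocks of the inner
    dimension, so only one tile of A and one tile of B are used at a time.
    """
    n, k, m = len(A), len(B), len(B[0])
    if any(len(row) != k for row in A):
        raise ValueError("inner dimensions of A and B must match")
    C = [[0] * m for _ in range(n)]
    for r0 in range(0, n, tile):                  # block of output rows
        for c0 in range(0, m, tile):              # block of output columns
            for k0 in range(0, k, tile):          # block of the inner dimension
                for r in range(r0, min(r0 + tile, n)):
                    for c in range(c0, min(c0 + tile, m)):
                        acc = C[r][c]             # load the stored partial sum
                        for t in range(k0, min(k0 + tile, k)):
                            acc += A[r][t] * B[t][c]
                        C[r][c] = acc             # store the updated partial sum
    return C
```

Because the blocks only reorder the same additions, any tile size gives the same result as the reference product; tiles that do not divide the matrix dimensions are handled by the `min(...)` bounds.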
