Image processing system and method applied to multi-path camera of large automobile

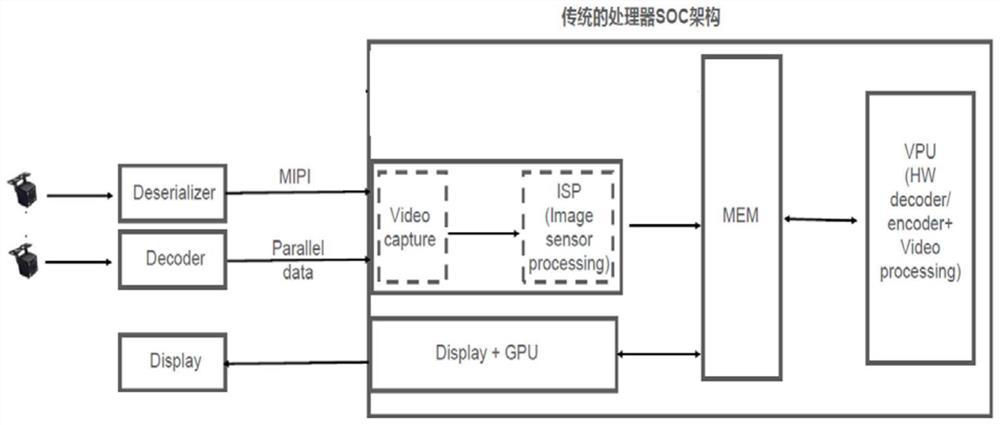

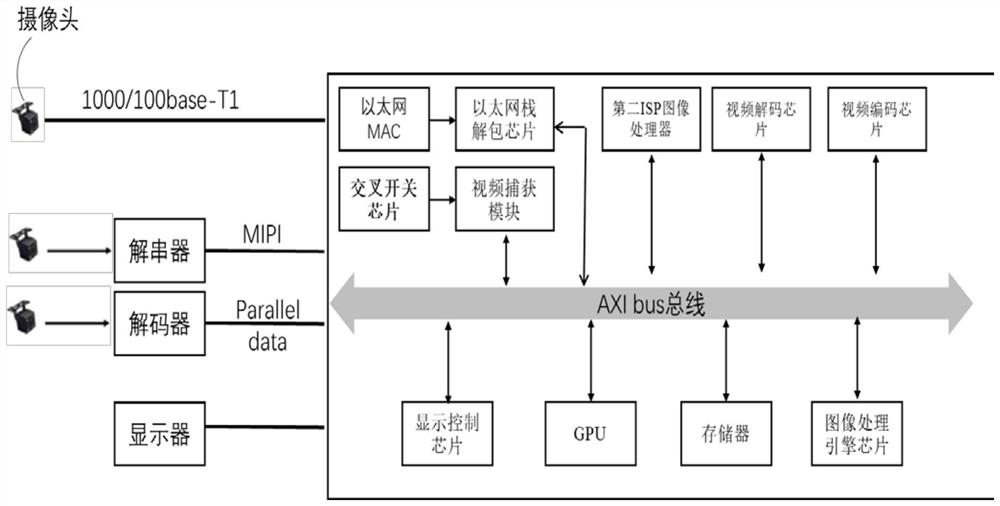

An image processing technology involving an image processor, applied in image data processing, closed-circuit television systems, image communication and related fields. It addresses problems such as image blurring and the inability of four-camera solutions to form a surround-view system, thereby meeting application requirements.

Embodiment Construction

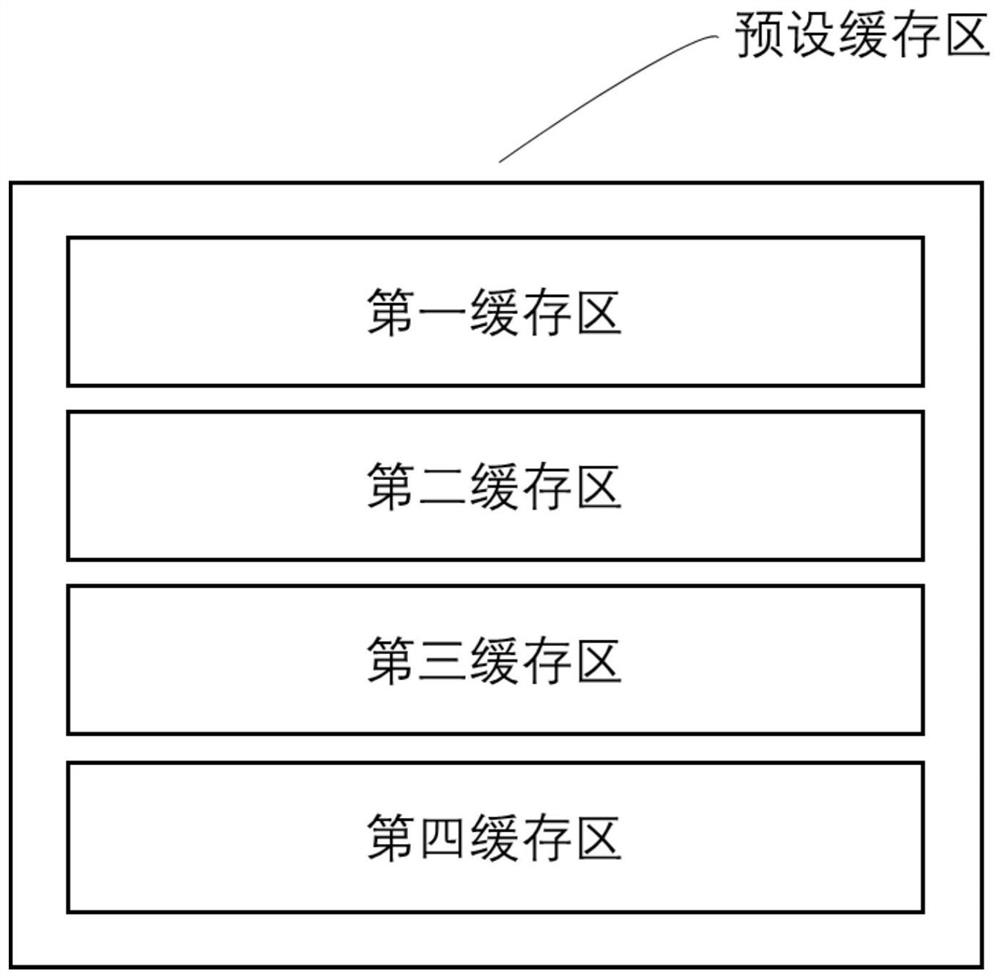

[0043] For a clearer understanding of the technical features, purposes and effects of the present invention, specific embodiments will now be described with reference to the accompanying drawings, in which the same reference numerals denote the same parts. For brevity, each drawing shows only the parts relevant to the present invention, schematically, and does not represent the actual structure of a product. In addition, to keep the drawings simple and easy to understand, where several components share the same structure or function, some drawings show only one of them schematically or label only one of them.

[0044] The control system, functional modules and application programs (APPs) are well known to those skilled in the art and can take any appropriate form. This may be hardware or software, a plurality of discrete functional modules, or multiple functional units integrated into one piece of hardware. ...
