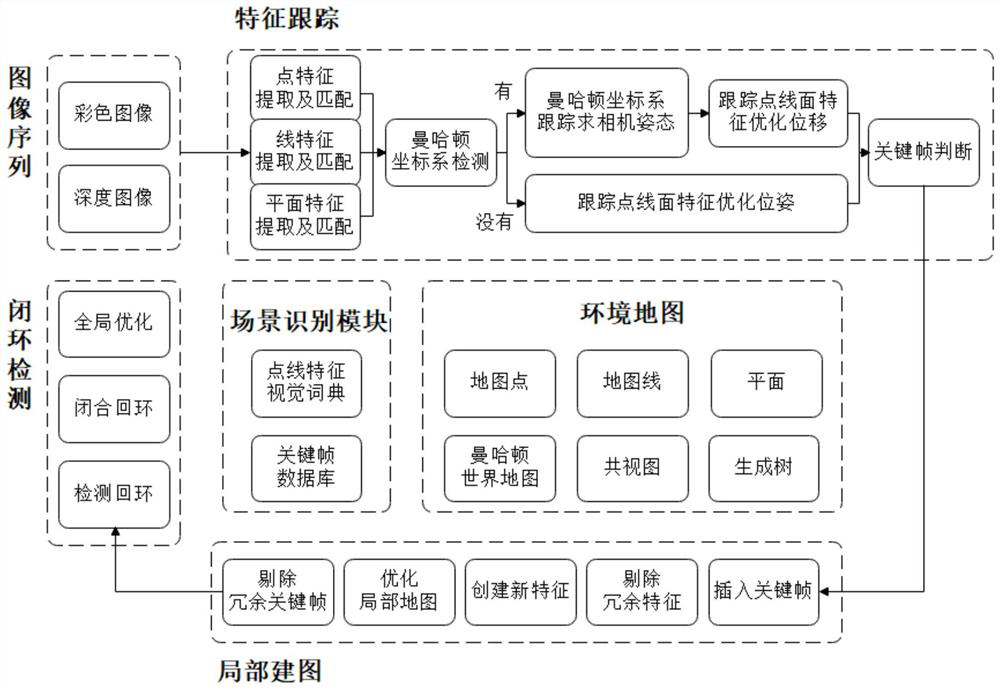

Structured scene vision SLAM (Simultaneous Localization and Mapping) method based on point-line-surface features

Technical keywords: surface feature, structured. Applied in the field of structured scene vision, the technology can solve problems such as lack of texture, low pose estimation accuracy, and poor robustness. Effect: it reduces the cumulative drift error between frames and improves tracking performance and accuracy.

Examples

Embodiment 2

[0117] A structured scene vision SLAM method based on point, line and surface features, including the following steps:

[0118] S1: input a color image, extract point features and line features from it, and perform feature matching. In this embodiment, the EDLine algorithm is used to extract line features. The LSD algorithm can also be used, in which case the split line segments it extracts are likewise merged using endpoint distance, angle, and descriptor information as the screening criteria;
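The merging of split LSD line segments in S1 can be sketched as follows. This is a minimal illustration, assuming each segment is stored as two pixel endpoints plus a binary descriptor; the class and function names, the threshold values, the greedy pairwise strategy, and the rule for which descriptor the merged segment keeps are all hypothetical and are not taken from the method itself.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float]


@dataclass
class Segment:
    p: Point     # first endpoint (x, y) in pixels
    q: Point     # second endpoint (x, y) in pixels
    desc: bytes  # binary line descriptor (hypothetical stand-in, e.g. an LBD-style bit string)


def orientation(s: Segment) -> float:
    """Undirected orientation of a segment, in [0, pi)."""
    return math.atan2(s.q[1] - s.p[1], s.q[0] - s.p[0]) % math.pi


def angle_diff(a: float, b: float) -> float:
    """Smallest difference between two undirected orientations, in [0, pi/2]."""
    d = abs(a - b) % math.pi
    return min(d, math.pi - d)


def endpoint_gap(a: Segment, b: Segment) -> float:
    """Smallest distance between any endpoint of a and any endpoint of b."""
    return min(math.dist(u, v) for u in (a.p, a.q) for v in (b.p, b.q))


def hamming(a: bytes, b: bytes) -> int:
    """Number of differing bits between two binary descriptors."""
    return sum(bin(x ^ y).count("1") for x, y in zip(a, b))


def can_merge(a: Segment, b: Segment, max_angle: float = math.radians(3.0),
              max_gap: float = 10.0, max_hamming: int = 30) -> bool:
    # The three screening criteria named in S1; the thresholds are assumed values.
    return (angle_diff(orientation(a), orientation(b)) <= max_angle
            and endpoint_gap(a, b) <= max_gap
            and hamming(a.desc, b.desc) <= max_hamming)


def merge_pair(a: Segment, b: Segment) -> Segment:
    """Replace two fragments by the segment between their two farthest endpoints."""
    pts = [a.p, a.q, b.p, b.q]
    u, v = max(((u, v) for i, u in enumerate(pts) for v in pts[i + 1:]),
               key=lambda uv: math.dist(*uv))
    # Keep the descriptor of the longer fragment (an assumption, not from the source).
    longer = a if math.dist(a.p, a.q) >= math.dist(b.p, b.q) else b
    return Segment(u, v, longer.desc)


def merge_segments(segs: List[Segment], **thresholds) -> List[Segment]:
    """Greedily merge fragment pairs until no pair passes the screening criteria."""
    segs = list(segs)
    changed = True
    while changed:
        changed = False
        for i in range(len(segs)):
            for j in range(i + 1, len(segs)):
                if can_merge(segs[i], segs[j], **thresholds):
                    segs[i] = merge_pair(segs[i], segs[j])
                    del segs[j]
                    changed = True
                    break
            if changed:
                break
    return segs
```

Endpoint distance and angle alone do not reject two parallel fragments lying side by side; a perpendicular-offset check is a common addition, but the embodiment lists only the three criteria used here.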

[0119] S2: input the depth image, convert it into a point cloud sequence structure, extract the planes in the image, and match the extracted image planes with the map planes. In this embodiment, plane matching uses the angle between the plane normals and the difference between the distances from the origin of the world coordinate system to the planes as the criteria for judging...
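The first half of S2 (depth image to point cloud, then plane extraction) can be illustrated with a short sketch. It assumes a pinhole camera with intrinsics fx, fy, cx, cy and a depth scale factor, none of which are given in the source. The source also does not name its plane-extraction algorithm, so this sketch swaps in a single-plane RANSAC fit; it is not necessarily the extractor used by this method, and all function names are hypothetical.

```python
import math
import random
from typing import List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def depth_to_points(depth: Sequence[Sequence[float]], fx: float, fy: float,
                    cx: float, cy: float, scale: float = 1.0) -> List[List[Optional[Vec3]]]:
    """Back-project a depth image into an organized grid of camera-frame 3-D points.

    Invalid (zero) depths become None so the grid keeps the image's row/column layout.
    `scale` converts raw depth units to metres (an assumed parameter)."""
    cloud = []
    for v, row in enumerate(depth):
        out = []
        for u, raw in enumerate(row):
            z = raw / scale
            out.append(((u - cx) * z / fx, (v - cy) * z / fy, z) if z > 0 else None)
        cloud.append(out)
    return cloud


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def plane_from_points(p1: Vec3, p2: Vec3, p3: Vec3):
    """Plane n . x + d = 0 with unit normal n, or None if the points are collinear."""
    n = cross(sub(p2, p1), sub(p3, p1))
    norm = math.sqrt(dot(n, n))
    if norm < 1e-9:
        return None
    n = (n[0] / norm, n[1] / norm, n[2] / norm)
    return n, -dot(n, p1)


def ransac_plane(points: List[Vec3], iters: int = 200, tol: float = 0.01, seed: int = 0):
    """Return the plane with the most inliers (|n . p + d| <= tol) and those inliers."""
    if len(points) < 3:
        return None, []
    rng = random.Random(seed)
    best, best_inliers = None, []
    for _ in range(iters):
        plane = plane_from_points(*rng.sample(points, 3))
        if plane is None:
            continue  # degenerate (collinear) sample
        n, d = plane
        inliers = [p for p in points if abs(dot(n, p) + d) <= tol]
        if len(inliers) > len(best_inliers):
            best, best_inliers = plane, inliers
    return best, best_inliers
```

To extract several planes, one can remove the inliers of the best plane and run the fit again; the single-plane loop is kept short for clarity.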

Embodiment 3

[0124] A structured scene vision SLAM method based on point, line and surface features, including the following steps:

[0125] S1: input a color image, extract point features and line features from it, and perform feature matching. In this embodiment, the LSD algorithm is used to extract line features, and the split line segments extracted by the LSD algorithm are likewise merged using endpoint distance, angle, and descriptor information as the screening criteria;
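S1 also matches point features, but the embodiment does not say how. One common choice for binary point descriptors (such as ORB) is brute-force Hamming matching with a nearest-neighbour ratio test and a mutual cross-check. The sketch below assumes that approach; the function name and the threshold values are hypothetical.

```python
from typing import List, Sequence, Tuple


def hamming(a: bytes, b: bytes) -> int:
    """Number of differing bits between two equal-length binary descriptors."""
    return sum(bin(x ^ y).count("1") for x, y in zip(a, b))


def match_descriptors(query: Sequence[bytes], train: Sequence[bytes], ratio: float = 0.8,
                      max_dist: int = 64, cross_check: bool = True) -> List[Tuple[int, int, int]]:
    """Return (query_index, train_index, distance) for accepted matches."""
    matches = []
    for qi, qd in enumerate(query):
        ranked = sorted((hamming(qd, td), ti) for ti, td in enumerate(train))
        if not ranked:
            continue
        best_d, ti = ranked[0]
        if best_d > max_dist:
            continue  # too far to be the same feature
        if len(ranked) > 1 and best_d >= ratio * ranked[1][0]:
            continue  # ambiguous: the second-best candidate is nearly as close
        if cross_check:
            # Keep the match only if query qi is also the nearest neighbour of train[ti].
            back = min(range(len(query)), key=lambda k: (hamming(query[k], train[ti]), k))
            if back != qi:
                continue
        matches.append((qi, ti, best_d))
    return matches
```

The ratio test discards matches whose best and second-best distances are close, and the cross-check discards one-directional matches; both reduce outliers before pose estimation at the cost of fewer matches.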

[0126] S2: input the depth image, convert it into a point cloud sequence structure, extract the planes in the image, and match the extracted image planes with the map planes. In this embodiment, the angle between the plane normals and the difference between the distances from the origin of the world coordinate system to the planes are used as the criteria for judging plane matching, and the angle between the normals and whether there is a collision area between the two...
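The plane-matching test in S2 can be sketched as follows, assuming each plane is written as n . x + d = 0 with a unit normal n, so that |d| is the distance from the origin to the plane. An observed plane is first moved into the world frame with the current pose estimate: if x_w = R x_c + t, then n_w = R n_c and d_w = d_c - n_w . t. The source's description of the "collision area" check is truncated, so here it is approximated, purely as an assumption, by an overlap test between axis-aligned bounding boxes of each plane's points. The names, thresholds, and tie-breaking score are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Tuple[Vec3, Vec3, Vec3]


@dataclass
class Plane:
    n: Vec3           # unit normal; plane is n . x + d = 0
    d: float          # signed offset; |d| is the origin-to-plane distance
    pts: List[Vec3]   # sample of the plane's points, used only for the overlap test


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def mat_vec(R: Mat3, v: Vec3) -> Vec3:
    return tuple(dot(row, v) for row in R)


def plane_to_world(p: Plane, R: Mat3, t: Vec3) -> Plane:
    """Transform a camera-frame plane into the world frame, given x_w = R x_c + t."""
    n_w = mat_vec(R, p.n)
    d_w = p.d - dot(n_w, t)
    pts_w = [tuple(a + b for a, b in zip(mat_vec(R, q), t)) for q in p.pts]
    return Plane(n_w, d_w, pts_w)


def boxes_overlap(a: List[Vec3], b: List[Vec3], margin: float) -> bool:
    """Hypothetical stand-in for the 'collision area' check: padded AABB overlap."""
    alo = [min(q[i] for q in a) for i in range(3)]
    ahi = [max(q[i] for q in a) for i in range(3)]
    blo = [min(q[i] for q in b) for i in range(3)]
    bhi = [max(q[i] for q in b) for i in range(3)]
    return all(alo[i] <= bhi[i] + margin and blo[i] <= ahi[i] + margin for i in range(3))


def normal_angle(n1: Vec3, n2: Vec3) -> float:
    return math.acos(max(-1.0, min(1.0, dot(n1, n2))))


def is_plane_match(obs: Plane, mp: Plane, max_angle: float = math.radians(10.0),
                   max_dist: float = 0.1, margin: float = 0.05) -> bool:
    # Comparing signed d assumes normals are oriented consistently (e.g. toward the camera).
    return (normal_angle(obs.n, mp.n) <= max_angle
            and abs(obs.d - mp.d) <= max_dist
            and boxes_overlap(obs.pts, mp.pts, margin))


def match_plane(obs_w: Plane, map_planes: List[Plane], **thresholds) -> Optional[int]:
    """Index of the best-matching map plane, or None if no map plane passes."""
    best, best_score = None, math.inf
    for i, mp in enumerate(map_planes):
        if is_plane_match(obs_w, mp, **thresholds):
            # Tie-break score mixing radians and metres (an assumed heuristic).
            score = normal_angle(obs_w.n, mp.n) + abs(obs_w.d - mp.d)
            if score < best_score:
                best, best_score = i, score
    return best
```

An observed plane that passes no test would typically be inserted into the map as a new plane.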

