Method for encoding and decoding video information, a motion compensated video encoder and a corresponding decoder

A video information and motion compensation technology, applied in the field of encoding and decoding video information. It addresses three problems: transmitting a separate motion vector is inefficient, the operation usually produces some degradation, and the amount of data required to represent a video sequence is large. The technology aims to reduce the complexity of the encoding process, to estimate the cost of each macroblock-segmentation/prediction-method pair, and to minimize that cost.

Embodiment Construction

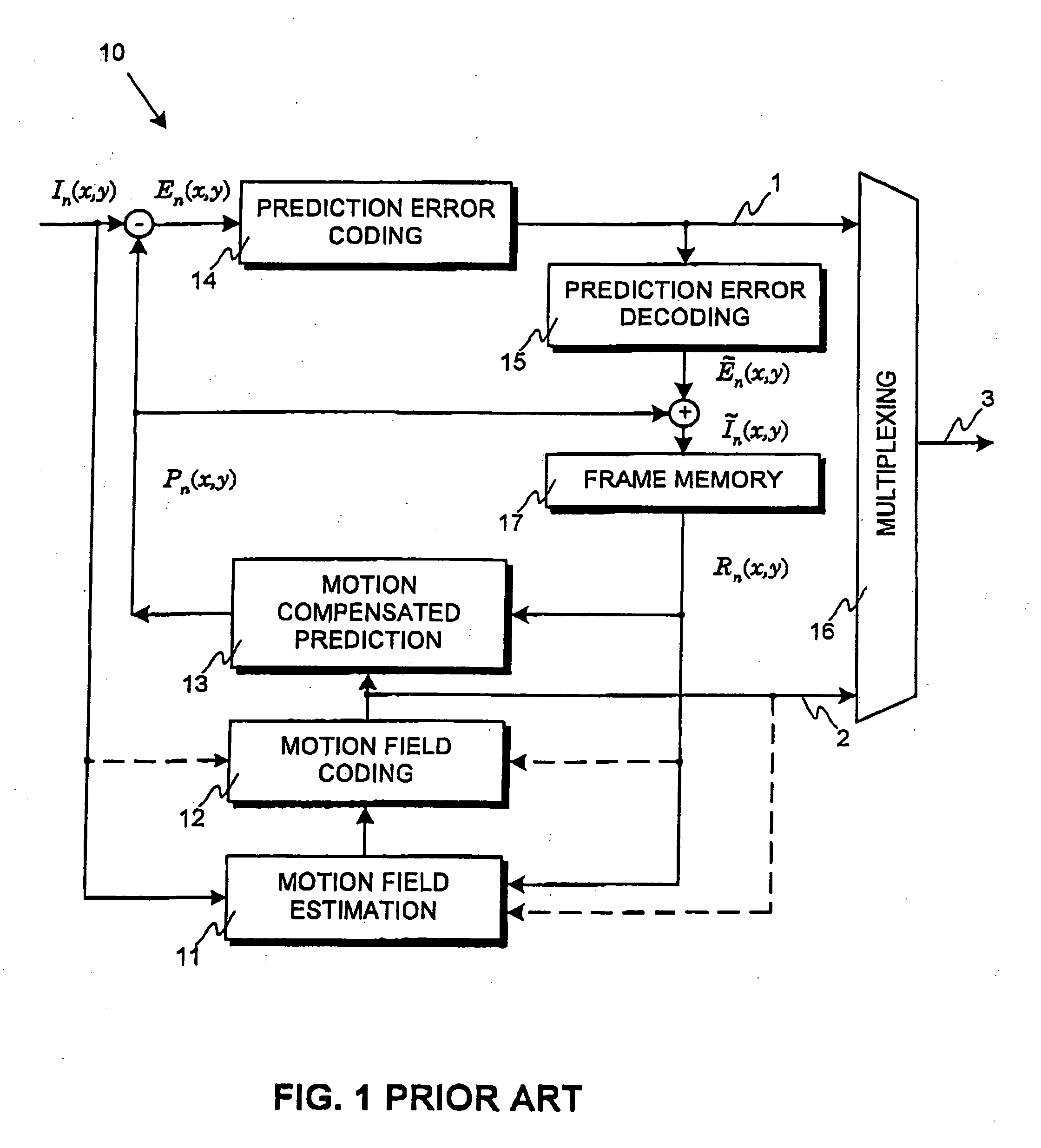

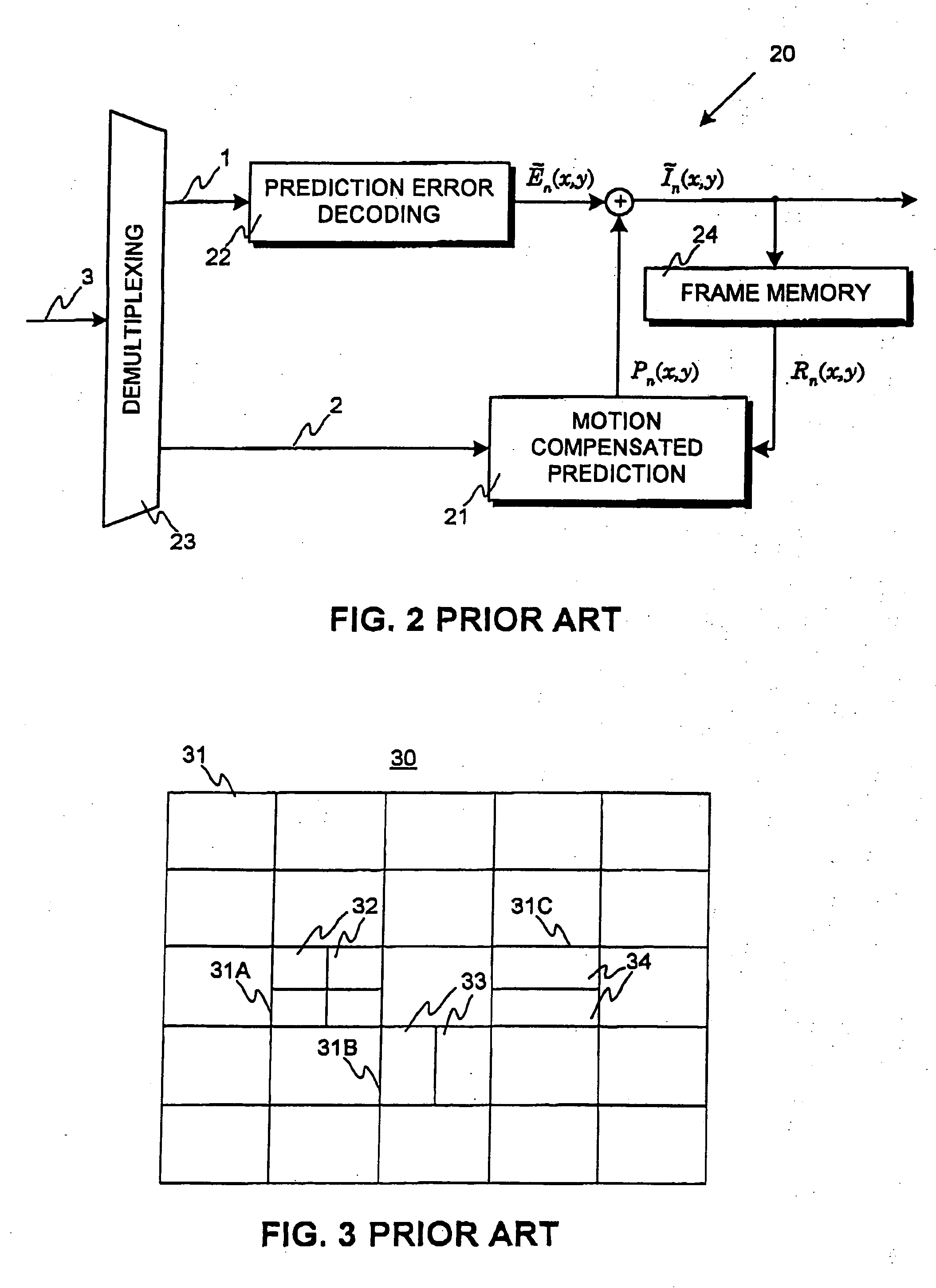

[0071] FIGS. 1-3 are discussed in detail in the description of prior-art motion compensated video encoding and decoding.

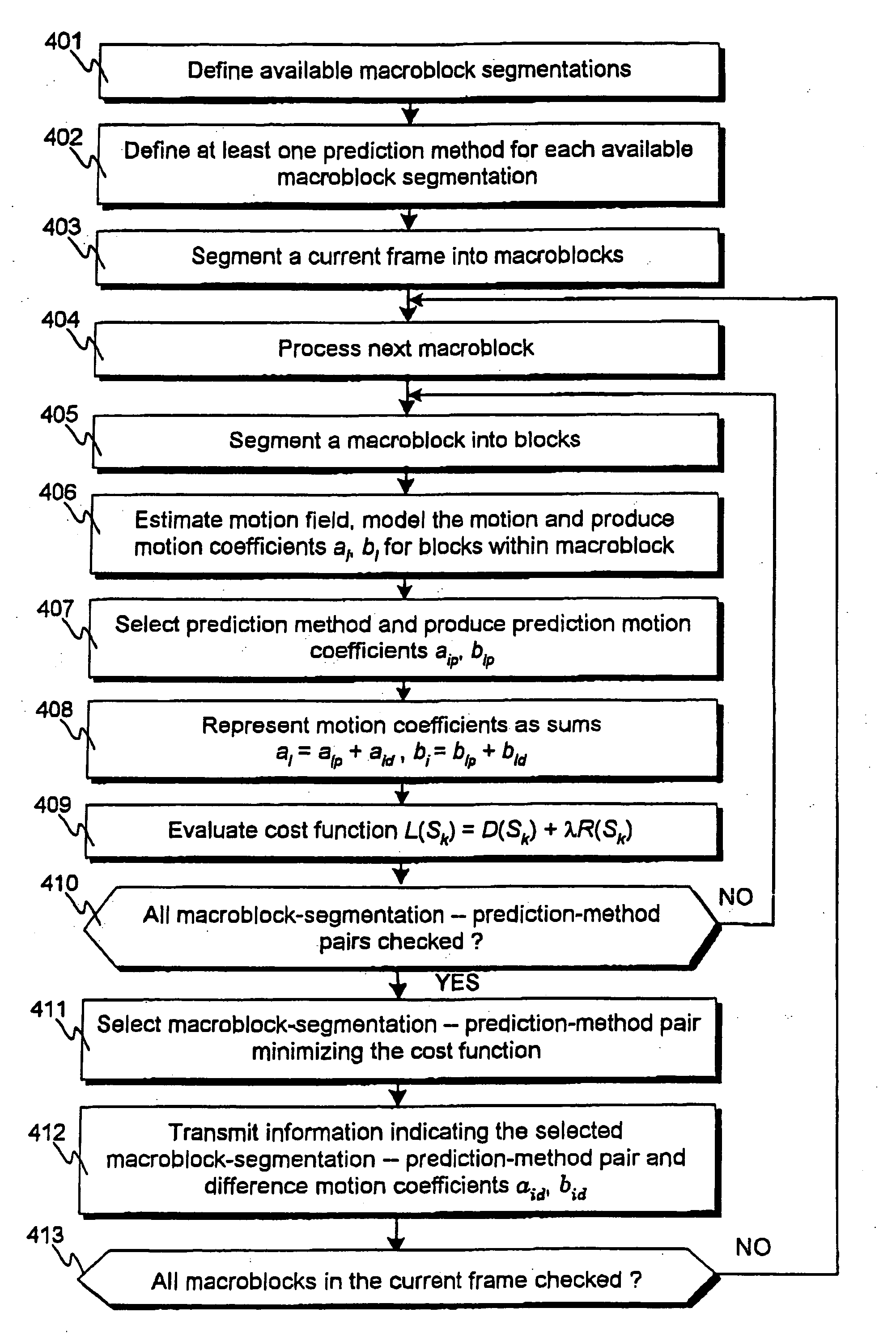

[0072] FIG. 4 presents a flowchart of a method for encoding video information according to the invention. Only features related to motion encoding are presented in FIG. 4; it does not show, for example, the formation or coding of the prediction error frame. Typically these features are included in encoding methods according to the invention and may be implemented in any appropriate manner.

[0073] In step 401 the available macroblock segmentations are defined. The available macroblock segmentations can comprise, for example, the macroblock segmentations presented in FIG. 3. In step 402 at least one prediction method for predicting motion coefficients is defined for each available macroblock segmentation, resulting in a certain number of available macroblock-segmentation/prediction-method pairs. Typically, for certain macroblock segment...
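The enumeration in steps 401 and 402, together with the cost-based choice of a pair mentioned in the summary, can be sketched in Python. This is a minimal sketch under stated assumptions, not the patent's implementation. The segmentation labels, the prediction-method names `median` and `directional`, the distortion and rate figures, and the Lagrangian cost D + λR are all hypothetical. The excerpt says only that a cost is estimated for each pair and then minimized.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Pair:
    """One macroblock-segmentation/prediction-method pair."""
    segmentation: str  # hypothetical label, e.g. "16x8"
    prediction: str    # hypothetical name of a motion-coefficient predictor


def define_pairs(methods_per_segmentation: Dict[str, List[str]]) -> List[Pair]:
    """Steps 401-402: each available segmentation must have at least one
    prediction method, and every (segmentation, method) combination is a pair."""
    pairs: List[Pair] = []
    for segmentation, methods in methods_per_segmentation.items():
        if not methods:
            raise ValueError(f"{segmentation!r} has no prediction method")
        pairs.extend(Pair(segmentation, m) for m in methods)
    return pairs


def select_pair(pairs: List[Pair], distortion: Dict[Pair, float],
                rate: Dict[Pair, float], lam: float) -> Pair:
    """Return the lowest-cost pair, ASSUMING cost = distortion + lam * rate."""
    return min(pairs, key=lambda p: distortion[p] + lam * rate[p])


# Hypothetical configuration and cost figures, not taken from the patent.
available = {
    "16x16": ["median"],
    "16x8": ["median", "directional"],
    "8x8": ["median"],
}
pairs = define_pairs(available)

distortion = {
    Pair("16x16", "median"): 1200.0,
    Pair("16x8", "median"): 900.0,
    Pair("16x8", "directional"): 900.0,
    Pair("8x8", "median"): 700.0,
}
rate = {
    Pair("16x16", "median"): 10.0,
    Pair("16x8", "median"): 24.0,
    Pair("16x8", "directional"): 18.0,
    Pair("8x8", "median"): 40.0,
}

# Costs with lam = 20: 1400, 1380, 1260, 1500.
best = select_pair(pairs, distortion, rate, lam=20.0)
print(best)  # -> Pair(segmentation='16x8', prediction='directional')
```

Keeping cost estimation separate from pair enumeration means another cost estimate could replace the assumed D + λR form without changing the step 401-402 logic.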
