Image processing device, method and program

Embodiment Construction

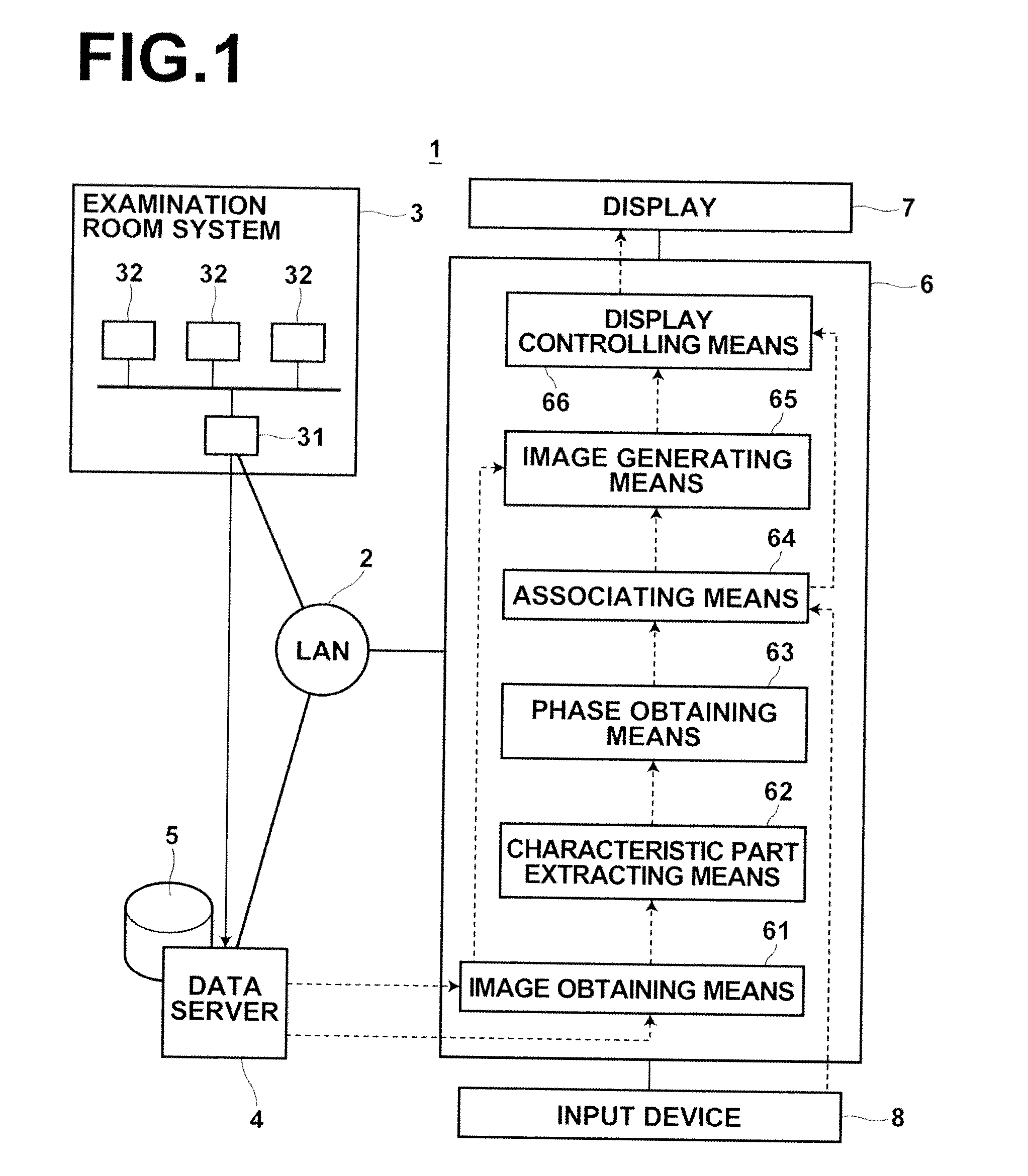

[0027]Hereinafter, embodiments of an image processing device, an image processing program and an image processing method of the present invention will be described in detail with reference to the drawings.

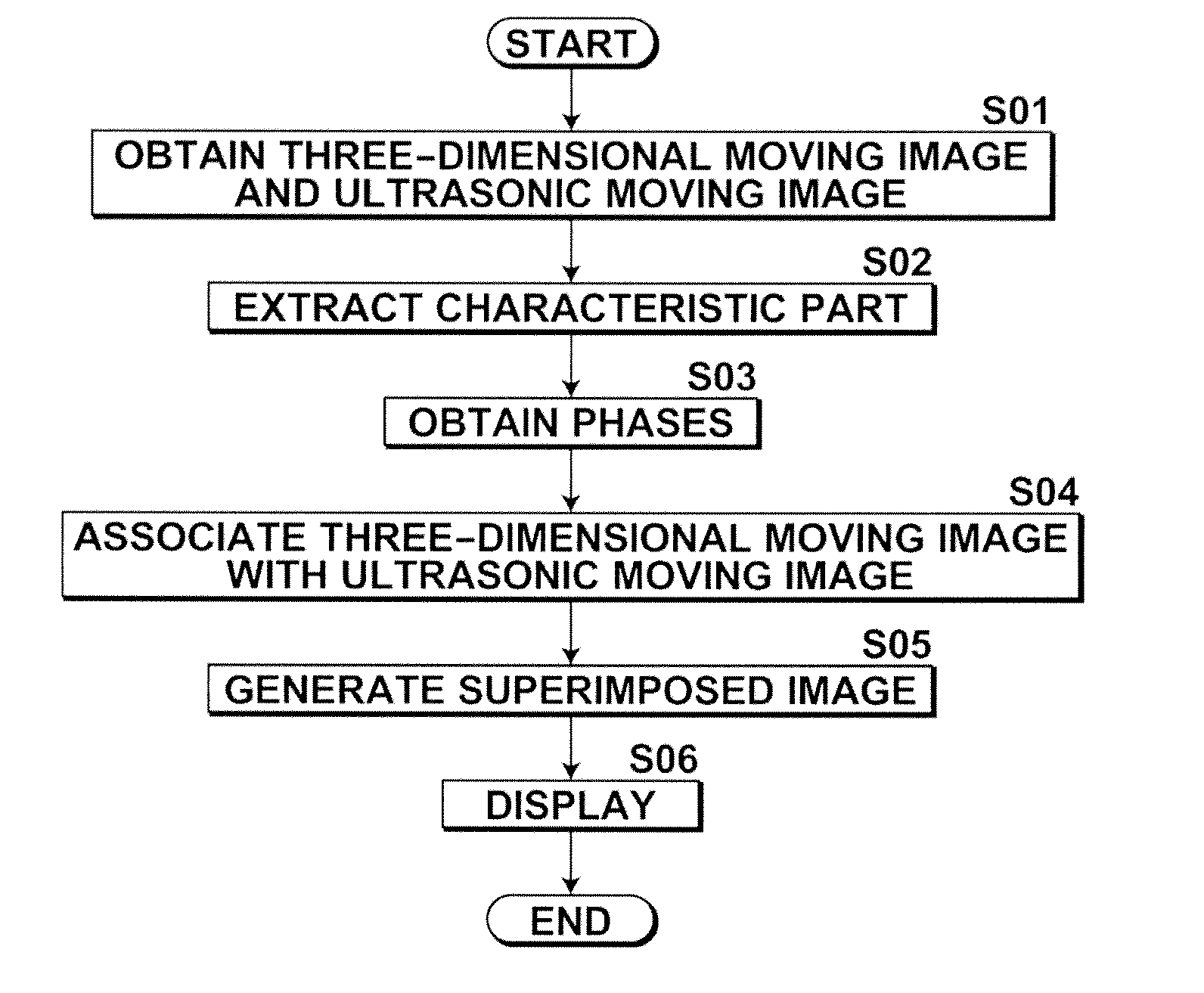

[0028]FIG. 1 illustrates the schematic configuration of a hospital system 1 incorporating an image processing device 6 according to one embodiment of the invention. The hospital system 1 includes an examination room system 3, a data server 4 and a diagnosis workstation (WS) 6 serving as the image processing device, all connected to one another via a local area network (LAN) 2.

[0029]The examination room system 3 includes various modalities 32 for imaging a subject, and an examination room workstation (WS) 31 used for checking and controlling the images output from the individual modalities. The modalities 32 in this example include a CT (Computed Tomography) apparatus and an MRI (Magnetic Resonance Imaging) apparatus, which can obtain a shape image representing shape information of the heart, and also incl...
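The component layout described in [0028] and [0029] can be summarized as a small data model. The following is a hypothetical Python sketch, not part of the patent: the class and function names (`Component`, `ExaminationRoomSystem`, `HospitalSystem`, `build_hospital_system`) are illustrative assumptions, and only the reference numerals and the CT/MRI modalities come from the text. It shows that the examination room system 3, data server 4 and diagnosis WS 6 are peers on LAN 2, while workstation 31 and modalities 32 sit inside the examination room system 3.

```python
from dataclasses import dataclass, field
from typing import List


# Hypothetical data model of FIG. 1; names are illustrative, not from the patent.
@dataclass
class Component:
    """A device in FIG. 1, identified by its reference numeral."""
    numeral: int
    name: str


@dataclass
class ExaminationRoomSystem:
    """Examination room system 3: workstation 31 plus the imaging modalities 32."""
    numeral: int
    workstation: Component
    modalities: List[str]


@dataclass
class HospitalSystem:
    """Hospital system 1: every member is attached to the same LAN 2."""
    lan_numeral: int
    members: List[object] = field(default_factory=list)

    def numerals(self) -> List[int]:
        # Reference numerals of everything attached directly to the LAN, in order.
        return [m.numeral for m in self.members]

    def connected(self, a: int, b: int) -> bool:
        # Two members attached to the same LAN can exchange data with each other.
        present = set(self.numerals())
        return a in present and b in present


def build_hospital_system() -> HospitalSystem:
    exam_room = ExaminationRoomSystem(
        numeral=3,
        workstation=Component(31, "examination room workstation"),
        modalities=["CT", "MRI"],  # the two modalities named in [0029]; others are elided
    )
    data_server = Component(4, "data server")
    diagnosis_ws = Component(6, "diagnosis workstation (image processing device)")
    return HospitalSystem(lan_numeral=2, members=[exam_room, data_server, diagnosis_ws])


if __name__ == "__main__":
    system = build_hospital_system()
    print(system.numerals())       # [3, 4, 6]
    print(system.connected(3, 6))  # True
```

In this sketch, `connected(3, 31)` returns `False` because workstation 31 is reached through the examination room system 3 rather than being a direct LAN member; the patent text itself does not specify this level of detail.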
