Humanoid-head robot device with human-computer interaction function and behavior control method thereof

A human-computer interaction and robotics technology, applied in toys, instruments, automatic toys, etc. It addresses the problems of limited perception function and the lack of an artificial emotion model and human-computer interaction function, and it achieves a compact structure and avoids variable definition conflicts.

Examples

Specific Embodiment 1

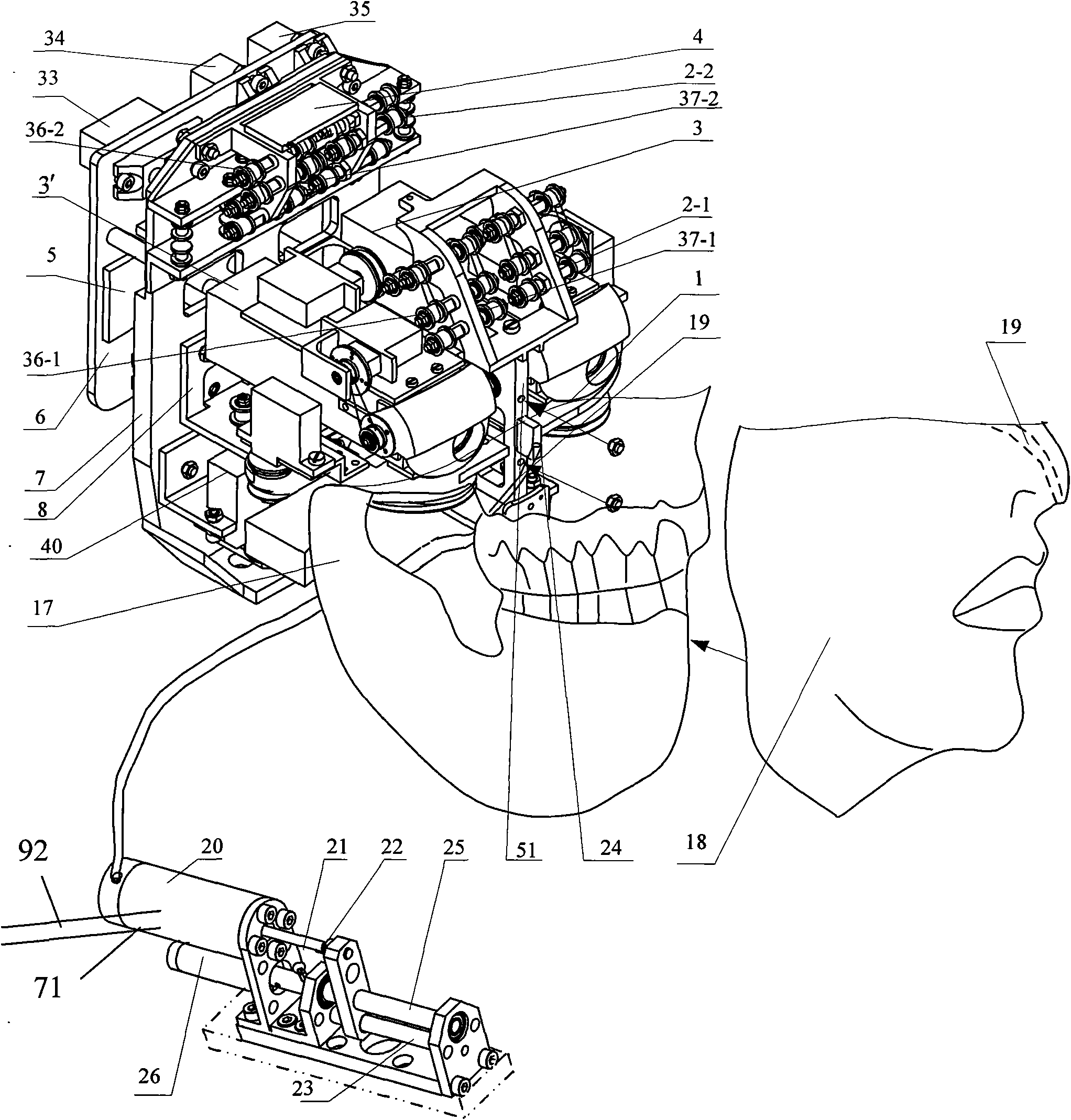

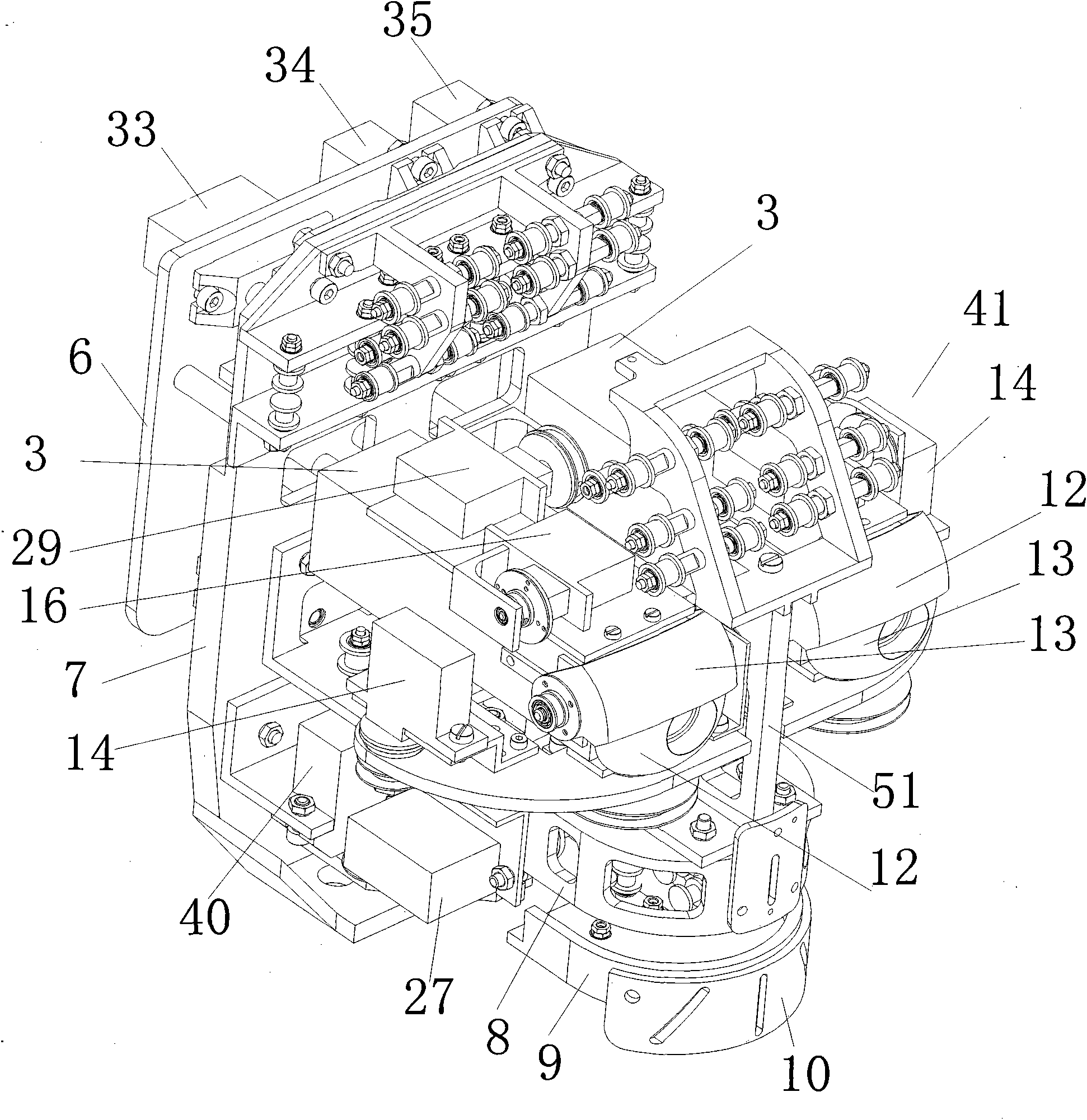

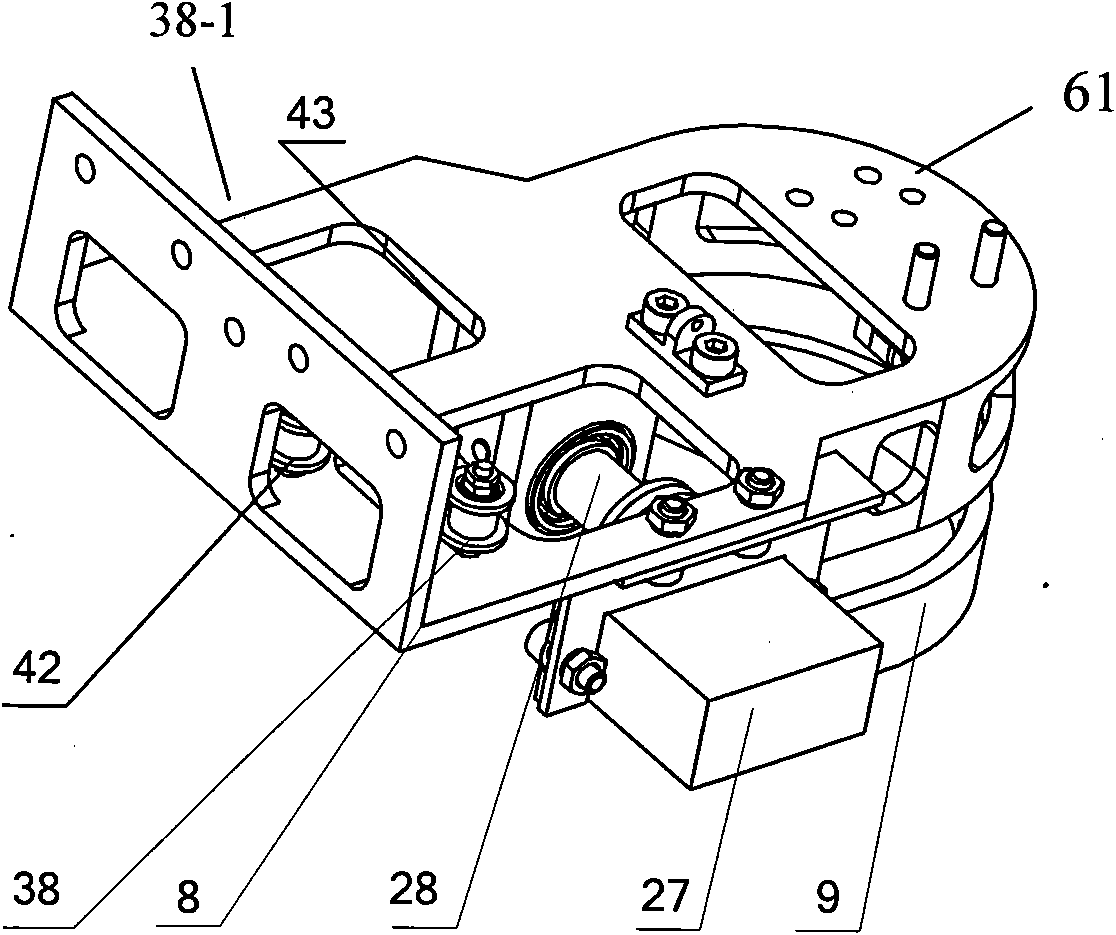

[0014] Specific Embodiment 1: As shown in Figures 1a, 1b, 2a, 2b, 3, 4a, 4b and 6, the humanoid-head robot device with human-computer interaction function described in this embodiment is composed of a humanoid-head robot body, a robot behavior control system, and a sensor perception system. The humanoid-head robot body includes an eye movement unit 1, an upper and lower jaw movement unit 61, an artificial lung device 71, a facial expression and mouth shape drive mechanism 81, a front support plate 7, a rear support plate 6, a stand 51, a face shell 17 and facial elastic skin 18. The eye movement unit 1 is composed of two eyeballs 12, an eyeball transmission mechanism, two eyeball servo motors 14, two eyelids 13, an eyelid transmission mechanism, two eyelid servo motors 16 and a servo motor 29. The upper and lower jaw movement unit 61 consists of the upper jaw 8, the lower jaw 9, the motor 27 and the rotating shaft 2...

Specific Embodiment 2

[0022] Specific Embodiment 2: The sensor perception system of this embodiment further includes a tactile sensor, which is arranged in the middle of the forehead. The other components and connection relationships are the same as in Embodiment 1.

Specific Embodiment 3

[0023] Specific Embodiment 3: The sensor perception system of this embodiment further includes two temperature sensors, arranged on the left and right sides of the forehead respectively. The other components and connection relationships are the same as in Embodiment 1 or 2.
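
The way Embodiments 2 and 3 extend the sensor perception system can be pictured as a small configuration sketch. This is only an illustration, not the patent's control software: the names `Sensor`, `BASE_SENSORS` and `sensors_for` are hypothetical, and because the sensors of Embodiment 1 are not listed in this excerpt, the base set is left empty as a placeholder.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sensor:
    kind: str      # "tactile" or "temperature"
    location: str  # mounting position on the robot head


# Assumption: Embodiment 1's own sensors are not given in this excerpt,
# so the base set is an empty placeholder.
BASE_SENSORS = ()

# Embodiment 2 adds one tactile sensor in the middle of the forehead.
TACTILE = (Sensor("tactile", "forehead-middle"),)

# Embodiment 3 adds two temperature sensors, left and right of the forehead.
TEMPERATURE = (
    Sensor("temperature", "forehead-left"),
    Sensor("temperature", "forehead-right"),
)


def sensors_for(embodiment, base=1):
    """Return the sensors of an embodiment.

    Embodiment 3 may build on Embodiment 1 or 2, selected by `base`.
    """
    if embodiment == 1:
        return BASE_SENSORS
    if embodiment == 2:
        return BASE_SENSORS + TACTILE
    if embodiment == 3:
        if base not in (1, 2):
            raise ValueError("Embodiment 3 builds on Embodiment 1 or 2")
        return sensors_for(base) + TEMPERATURE
    raise ValueError(f"unknown embodiment: {embodiment}")


if __name__ == "__main__":
    for s in sensors_for(3, base=2):
        print(s.kind, s.location)
```

Calling `sensors_for(3, base=2)` yields the tactile sensor plus both temperature sensors, while `sensors_for(3)` yields only the temperature sensors on top of the (unspecified) Embodiment 1 set.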

