A shared resource scheduling method and system for distributed parallel processing

The technology concerns shared resources and parallel processing, and is applied in fields such as multiprogramming arrangements and program initiation/switching. It addresses access contention among multiple processing nodes, with the stated effects of resolving that contention, improving processing efficiency, and avoiding head-of-line blocking.

Embodiment Construction

[0020] Specific embodiments of the present invention are described in detail below with reference to the accompanying drawings.

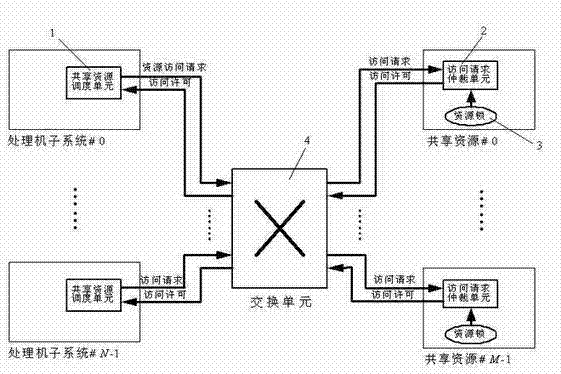

[0021] The shared resource scheduling method for a distributed parallel system proposed by the present invention is a distributed processing mechanism, as shown in Figure 1. The mechanism is implemented by a shared resource scheduling unit distributed in each processing node, a resource lock distributed in each shared resource, and an access request arbitration unit. These distributed processing units can be software modules, hardware modules with the same function, or hardware modules with firmware. They communicate by sending messages to one another through the switching unit; there are two message types, the resource access request signal and the resource access permission signal.
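The message flow in paragraph [0021] can be illustrated with a minimal in-process sketch. It is not the patented implementation. All class and method names are hypothetical. It assumes the arbitration unit sits with each shared resource and grants in FIFO order. Because this excerpt does not describe how a lock is released, release is modeled as a direct call rather than a third message type.

```python
from collections import deque
from dataclasses import dataclass, field
from enum import Enum, auto
from typing import Deque, Dict, Optional, Set


class SignalType(Enum):
    ACCESS_REQUEST = auto()     # processing node -> shared resource
    ACCESS_PERMISSION = auto()  # shared resource -> processing node


@dataclass(frozen=True)
class Message:
    signal: SignalType
    node_id: int
    resource_id: int


@dataclass
class SharedResource:
    """Shared resource with its own lock and arbitration queue (FIFO is an assumption)."""
    resource_id: int
    holder: Optional[int] = None                         # resource lock: current owner
    waiting: Deque[int] = field(default_factory=deque)   # pending requesters

    def on_request(self, msg: Message) -> Optional[Message]:
        """Grant immediately if the lock is free, otherwise queue the requester."""
        if self.holder is None:
            self.holder = msg.node_id
            return Message(SignalType.ACCESS_PERMISSION, msg.node_id, self.resource_id)
        self.waiting.append(msg.node_id)
        return None

    def on_release(self, node_id: int) -> Optional[Message]:
        """Free the lock and pass it to the next queued requester, if any."""
        if self.holder != node_id:
            raise ValueError("only the lock holder may release")
        self.holder = self.waiting.popleft() if self.waiting else None
        if self.holder is None:
            return None
        return Message(SignalType.ACCESS_PERMISSION, self.holder, self.resource_id)


class SwitchingUnit:
    """In-process stand-in for the switching unit that routes both signal types."""

    def __init__(self) -> None:
        self.resources: Dict[int, SharedResource] = {}
        self.nodes: Dict[int, "SchedulingUnit"] = {}

    def send(self, msg: Message) -> None:
        if msg.signal is SignalType.ACCESS_REQUEST:
            reply = self.resources[msg.resource_id].on_request(msg)
            if reply is not None:
                self.send(reply)
        else:
            self.nodes[msg.node_id].on_permission(msg)

    def release(self, node_id: int, resource_id: int) -> None:
        # Hypothetical release path; not described in the source excerpt.
        reply = self.resources[resource_id].on_release(node_id)
        if reply is not None:
            self.send(reply)


class SchedulingUnit:
    """Per-node shared resource scheduling unit."""

    def __init__(self, node_id: int, switch: SwitchingUnit) -> None:
        self.node_id = node_id
        self.switch = switch
        self.granted: Set[int] = set()   # resources this node may currently access
        switch.nodes[node_id] = self

    def request(self, resource_id: int) -> None:
        self.switch.send(Message(SignalType.ACCESS_REQUEST, self.node_id, resource_id))

    def on_permission(self, msg: Message) -> None:
        self.granted.add(msg.resource_id)

    def release(self, resource_id: int) -> None:
        self.granted.discard(resource_id)
        self.switch.release(self.node_id, resource_id)
```

In this sketch each resource queues its own requesters. A node waiting for a busy resource can therefore still be granted a different, free resource. This is one plausible reading of the head-of-line blocking benefit, not a description of the patent's actual arbitration policy.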

[0022] Each processing node of the distributed parallel system, that is, each processor subsystem, runs its own operating system and application programs. In order ...
