234 results about "Virtual queue" patented technology

Virtual queuing is a concept used in inbound call centers. Call centers use an Automatic Call Distributor (ACD) to distribute incoming calls to specific resources (agents) in the center. ACDs hold queued calls in First In, First Out order until agents become available. From the caller’s perspective, without virtual queuing they have only two choices: wait until an agent resource becomes available, or abandon (hang up) and try again later. From the call center’s perspective, a long queue results in many abandoned calls, repeat attempts, and customer dissatisfaction.

Virtual-wait queue for mobile commerce

Inactive | US6845361B1 | Improved customer care | Overcome difficulties | Ticket-issuing apparatus | Interconnection arrangements | Network connection | The Internet

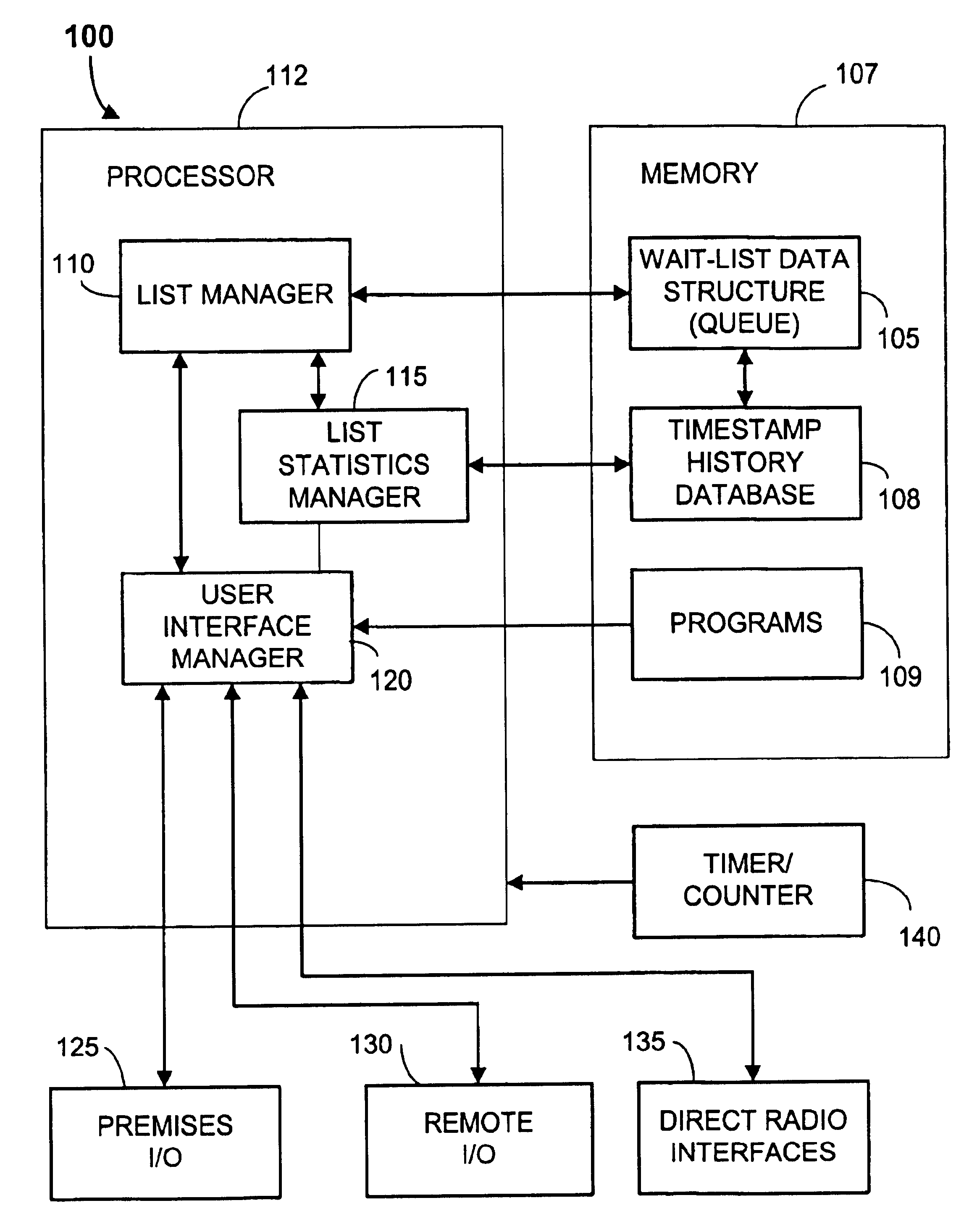

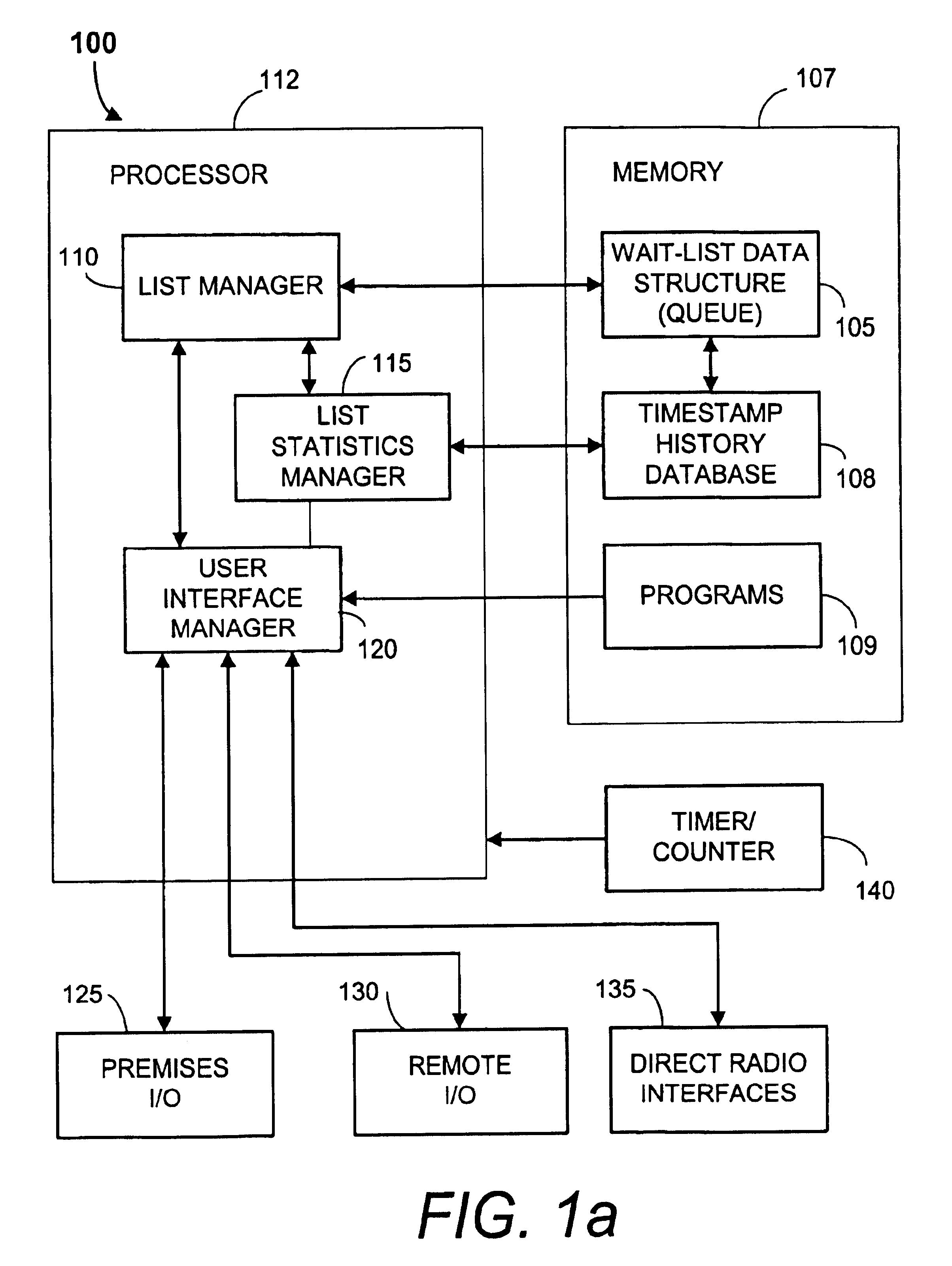

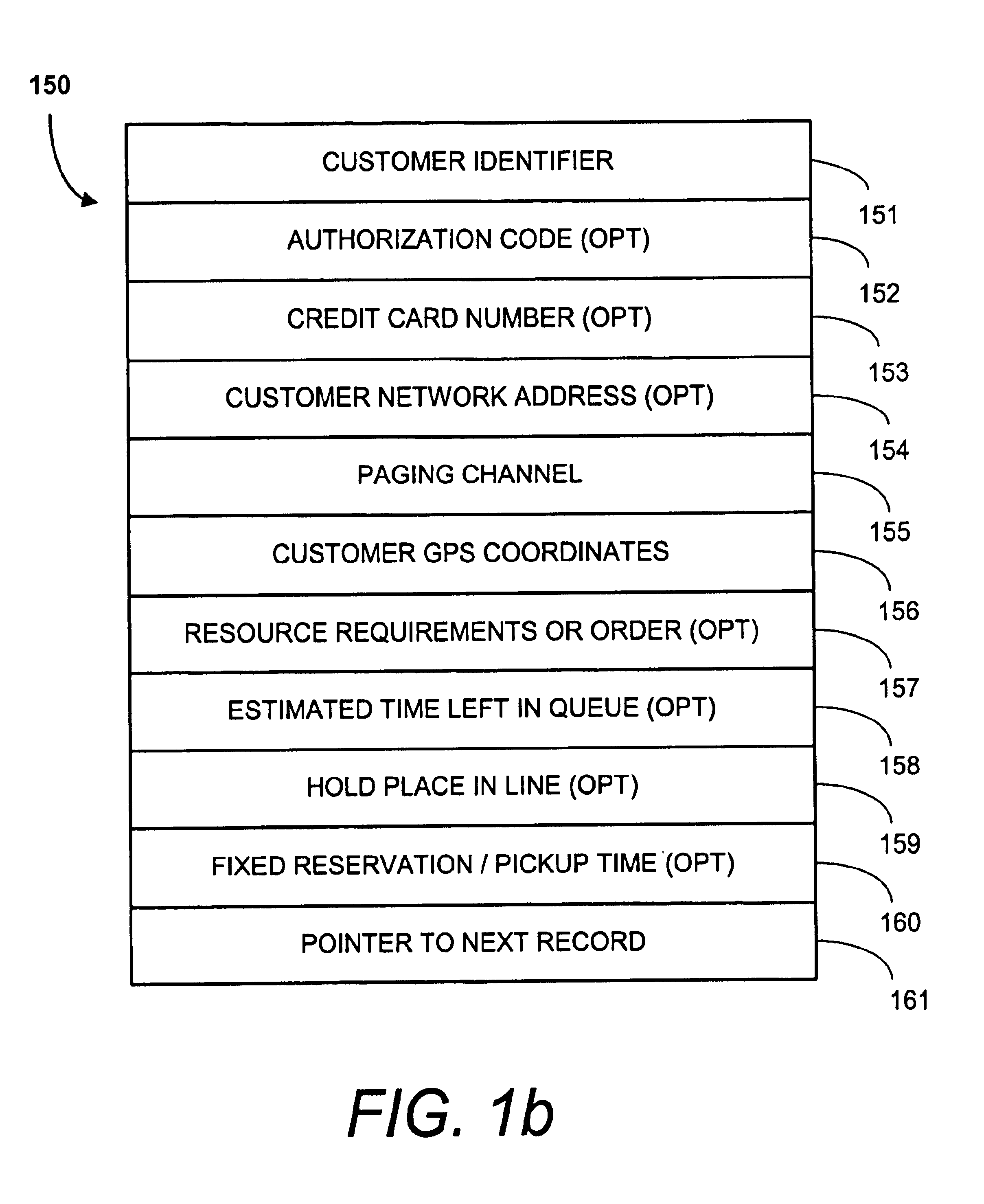

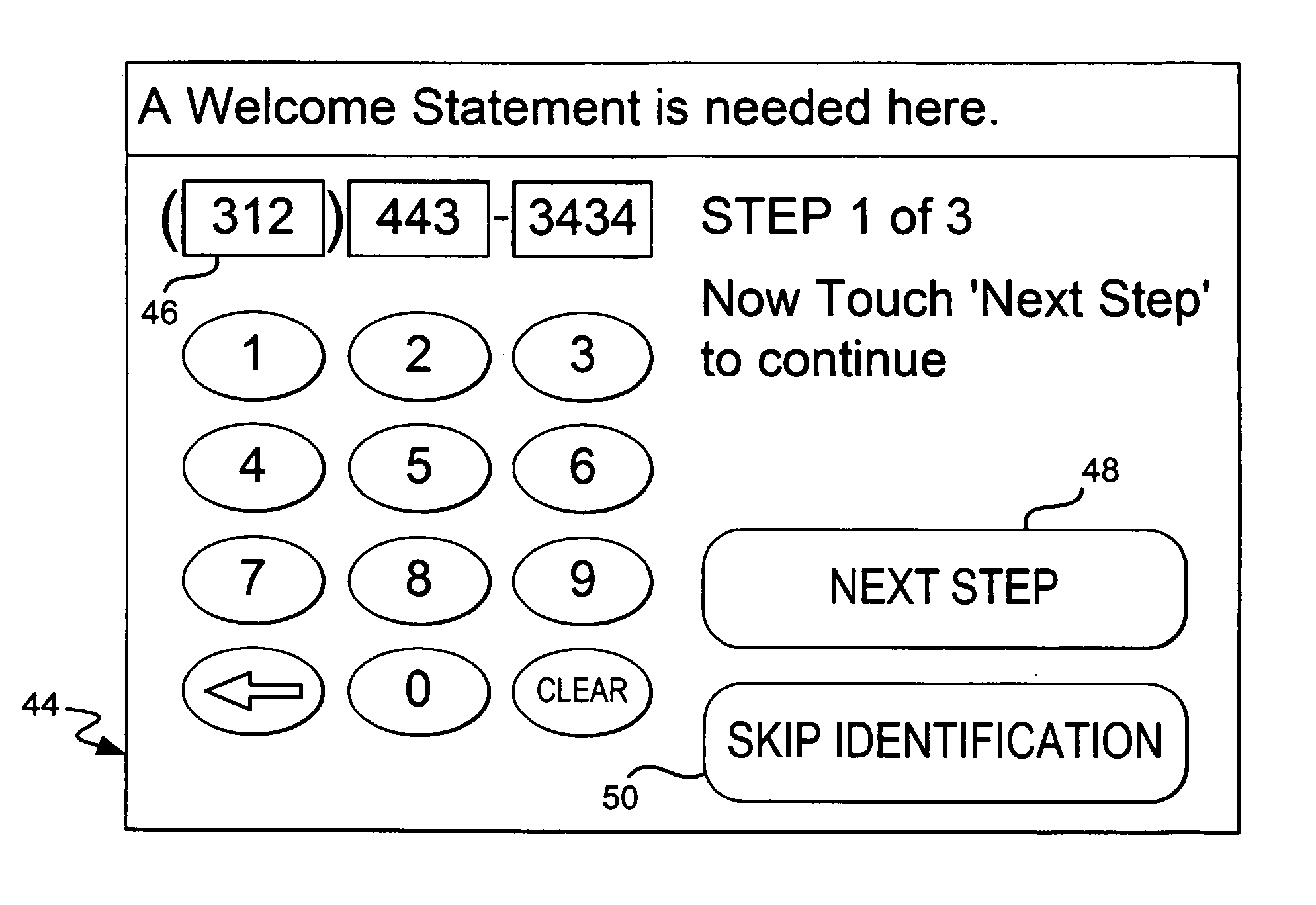

A method and a system are provided for maintaining a virtual-wait queue that controls access by customers to a physical resource such as a restaurant table. The method and system are especially adapted for use by customers operating Internet-enabled wireless devices. The system operates by maintaining a virtual-wait queue data structure capable of storing a plurality of entries. Each entry is representative of a customer. The system accepts an instruction from a premises I/O device indicating to either add an entry to or delete an entry from the data structure. The system also accepts an instruction from a network connection to either add a remote customer to or delete the remote customer from the virtual-wait queue. The virtual-wait queue system indicates to the remote customer the estimated time left in the queue, freeing the customer from the need to wait in line.

Owner:RPX CORP
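
The data structure described in this abstract can be illustrated with a minimal Python sketch. It is not the patented implementation: the class name, the `source` labels, and the fixed `minutes_per_party` used for the wait estimate are all assumptions made for illustration.

```python
from collections import OrderedDict


class VirtualWaitQueue:
    """FIFO of customer entries that accepts add/delete instructions
    from two sources and reports a rough remaining wait."""

    def __init__(self, minutes_per_party=10.0):
        # minutes_per_party is an assumed average service time;
        # the abstract does not say how the estimate is computed.
        self._entries = OrderedDict()  # customer_id -> source, in arrival order
        self.minutes_per_party = minutes_per_party

    def add(self, customer_id, source):
        # source is a hypothetical label: "premises" (I/O device on site)
        # or "network" (remote customer).
        if customer_id in self._entries:
            raise ValueError("customer already queued")
        self._entries[customer_id] = source

    def delete(self, customer_id):
        # Removing an absent customer is treated as a no-op.
        self._entries.pop(customer_id, None)

    def estimated_wait(self, customer_id):
        # Wait grows linearly with the number of parties ahead.
        position = list(self._entries).index(customer_id)
        return position * self.minutes_per_party
```

A remote customer would poll `estimated_wait` instead of standing in line; a real system would likely derive the estimate from observed table turnover rather than a constant.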

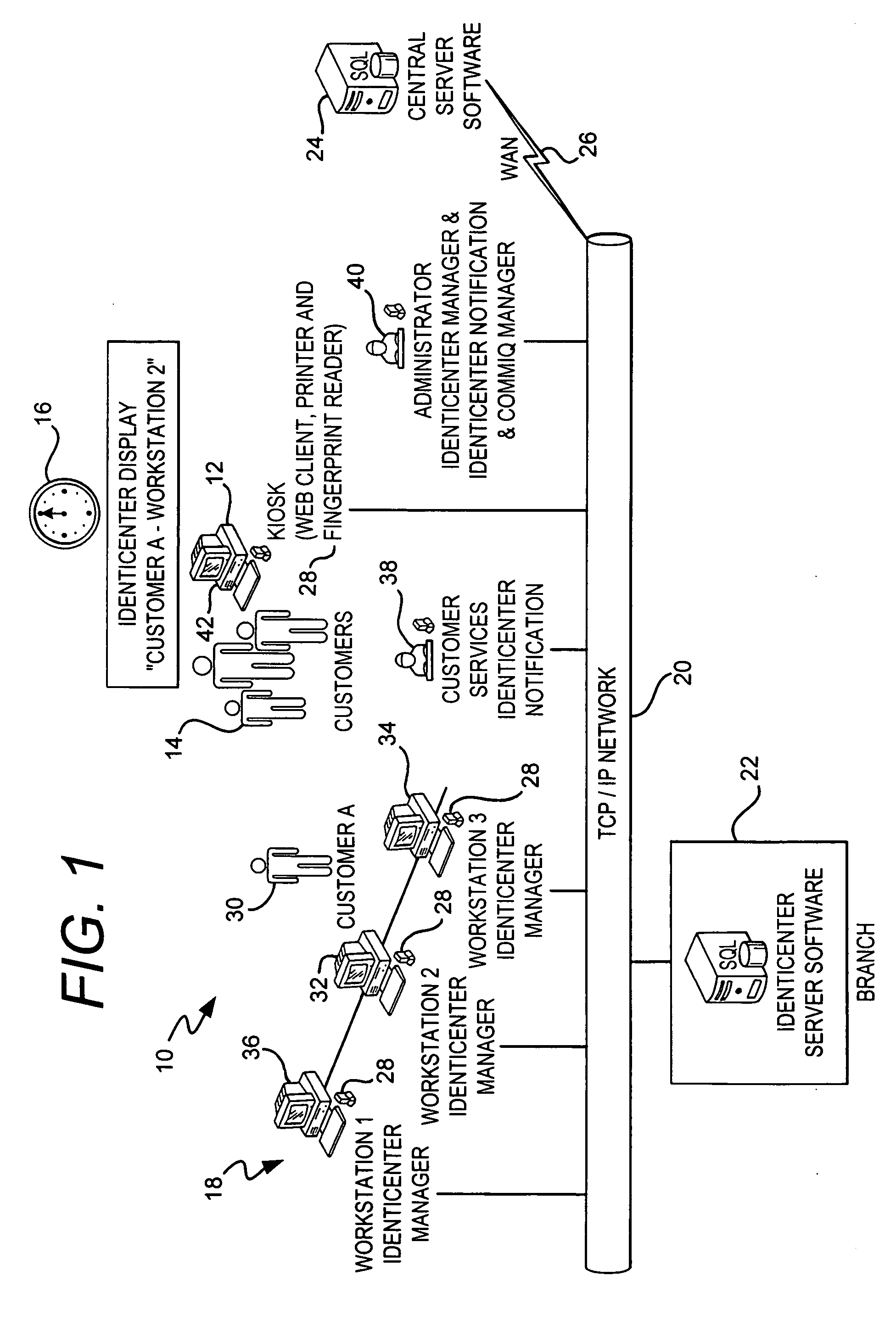

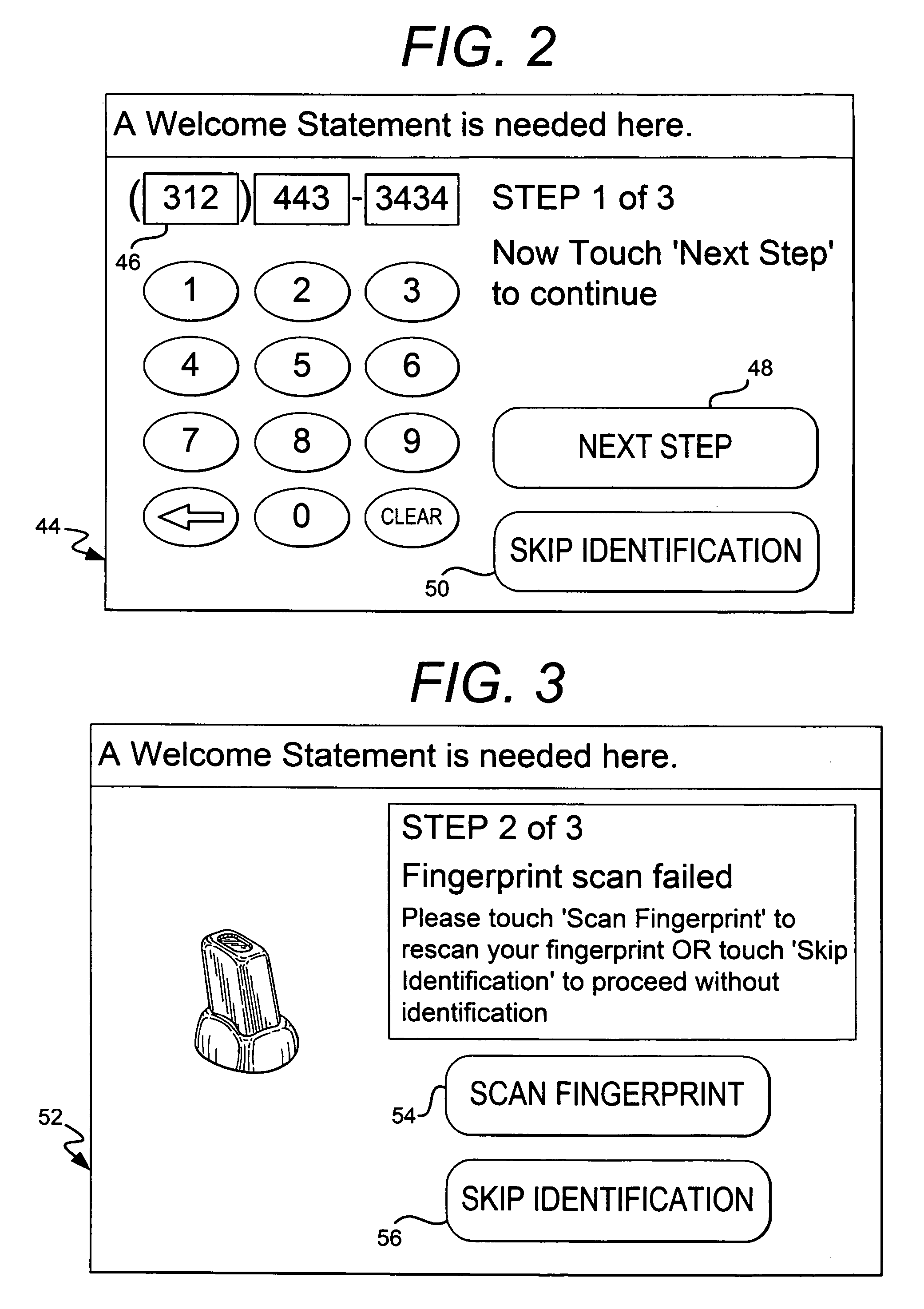

System and method for identifying and managing customers in a financial institution

Inactive | US20060253358A1 | Preventing account fraud | Accurate identification | Finance | Checking apparatus | Customer information | Library science

A system and method for managing a customer in a banking institution. The system includes a customer kiosk with biometric device for identifying the customer. The system places the customer in a virtual queue to see a service provider.

Owner:US BIOMETRICS CORP

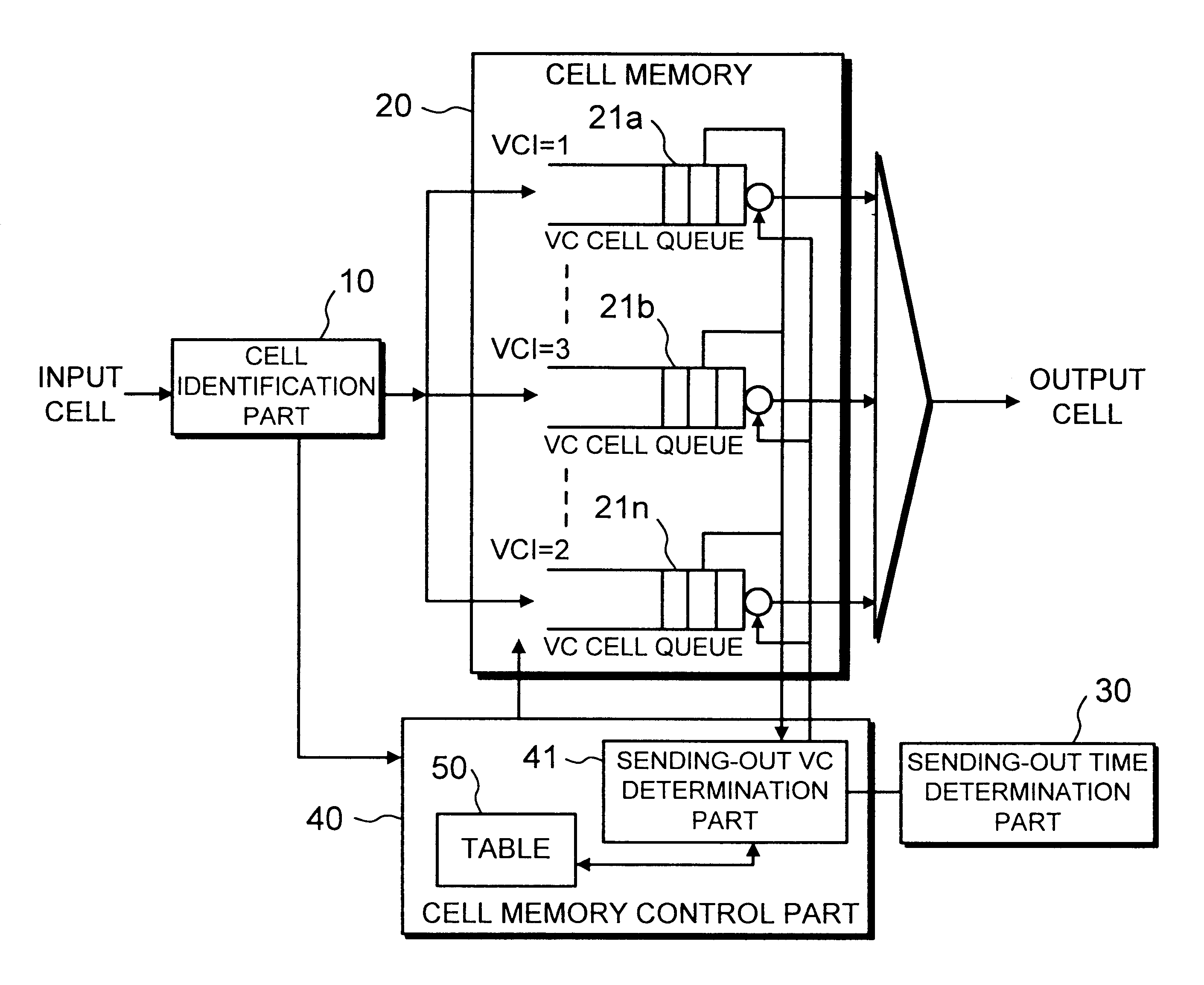

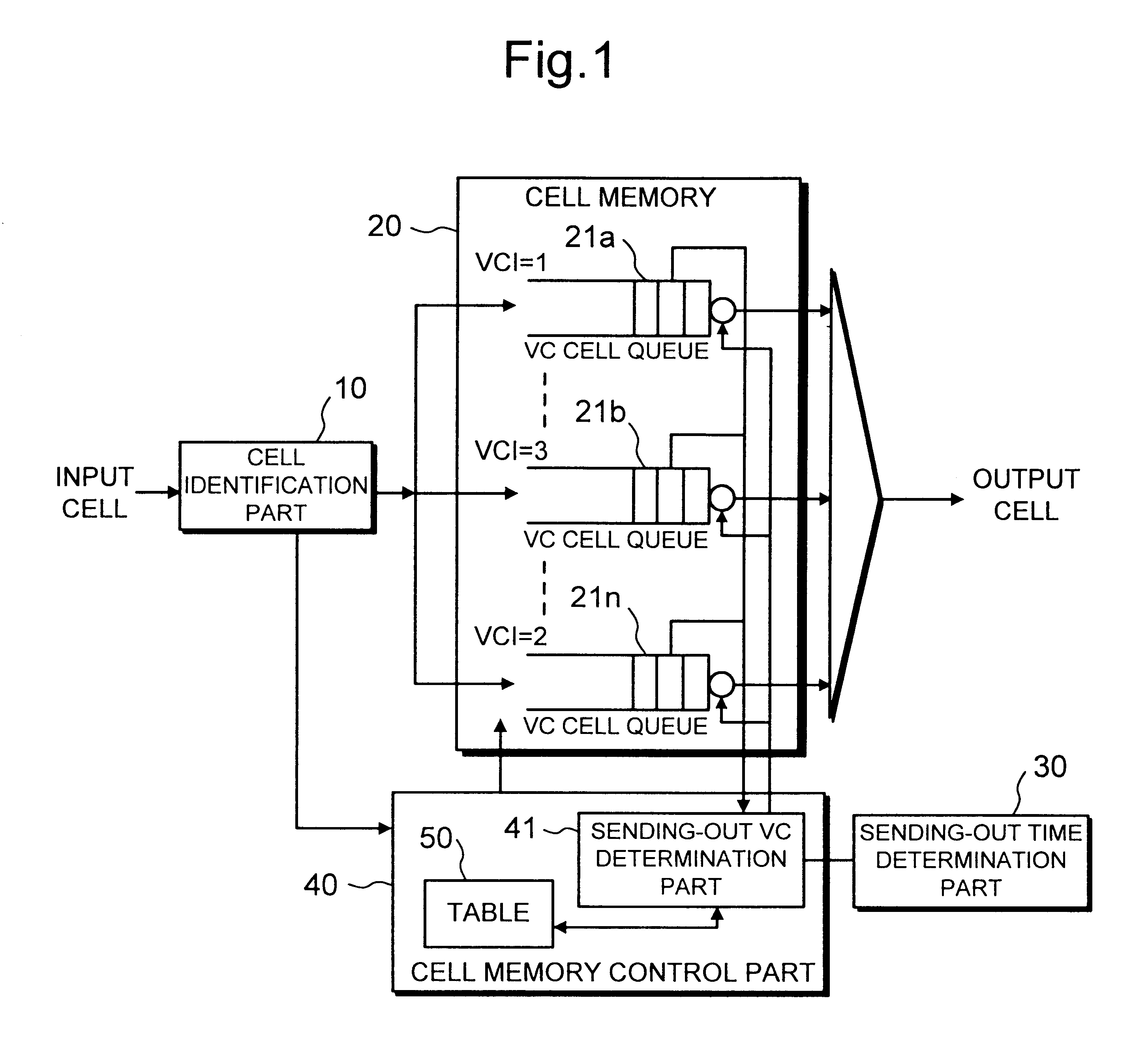

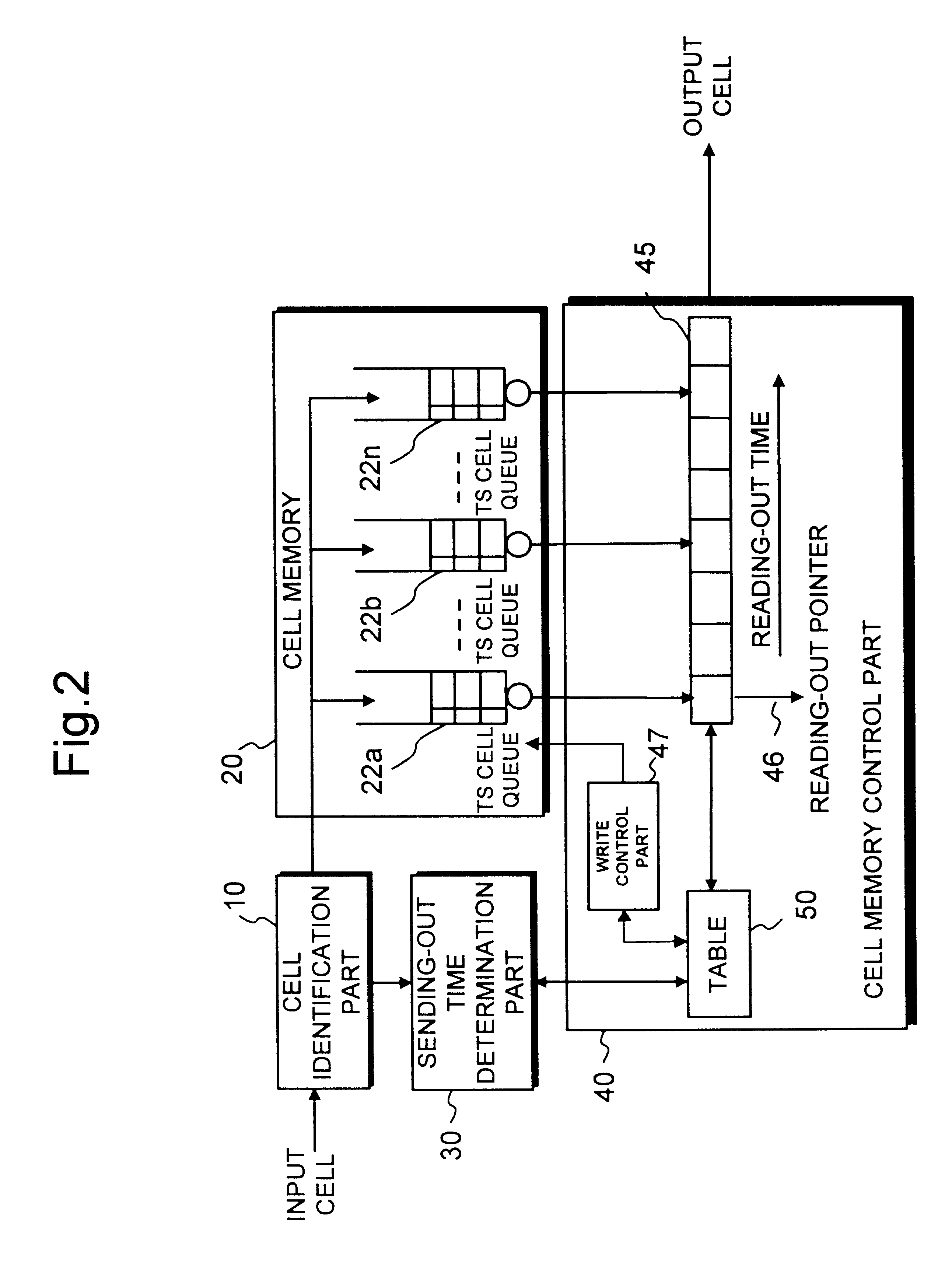

Traffic shaper

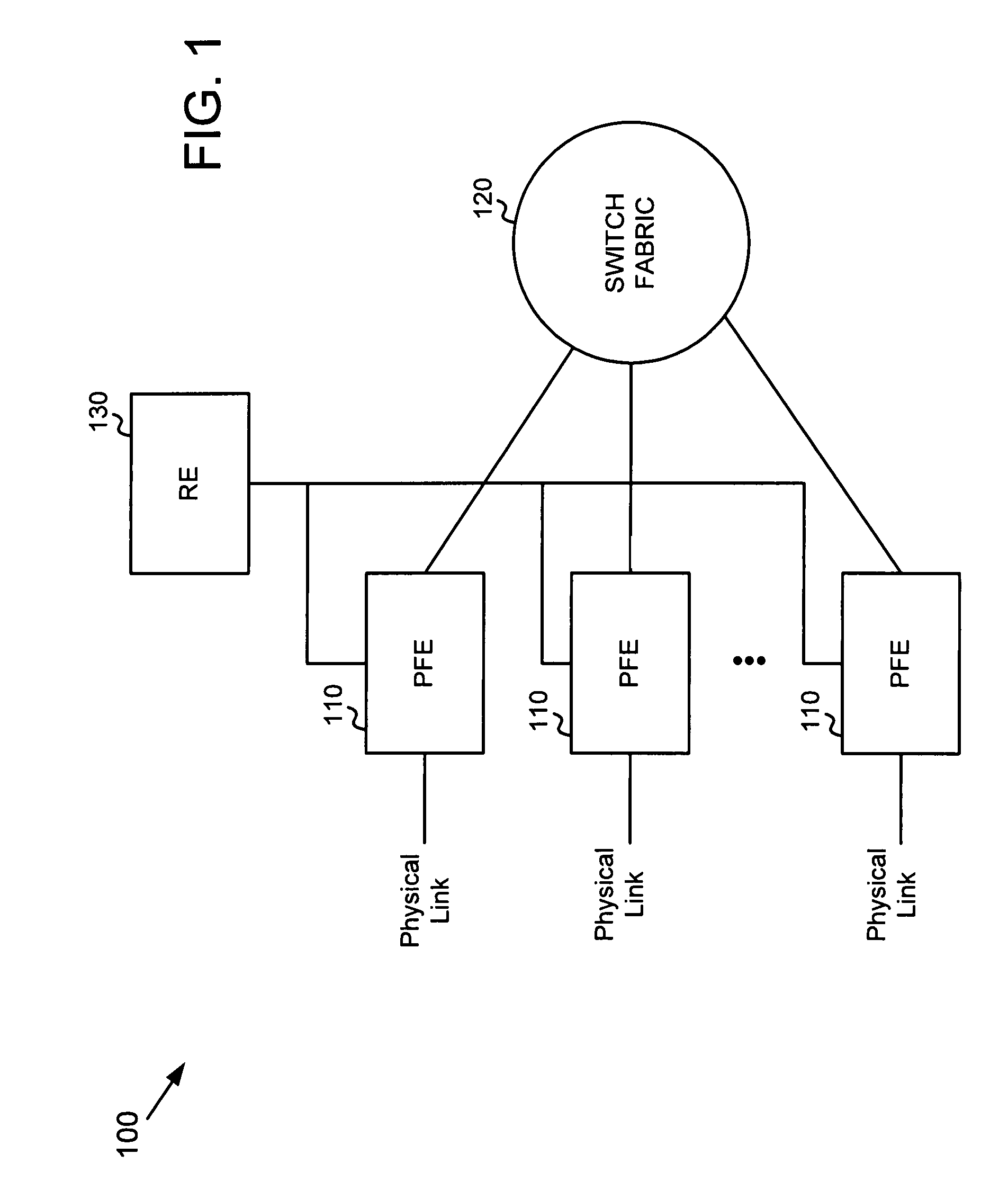

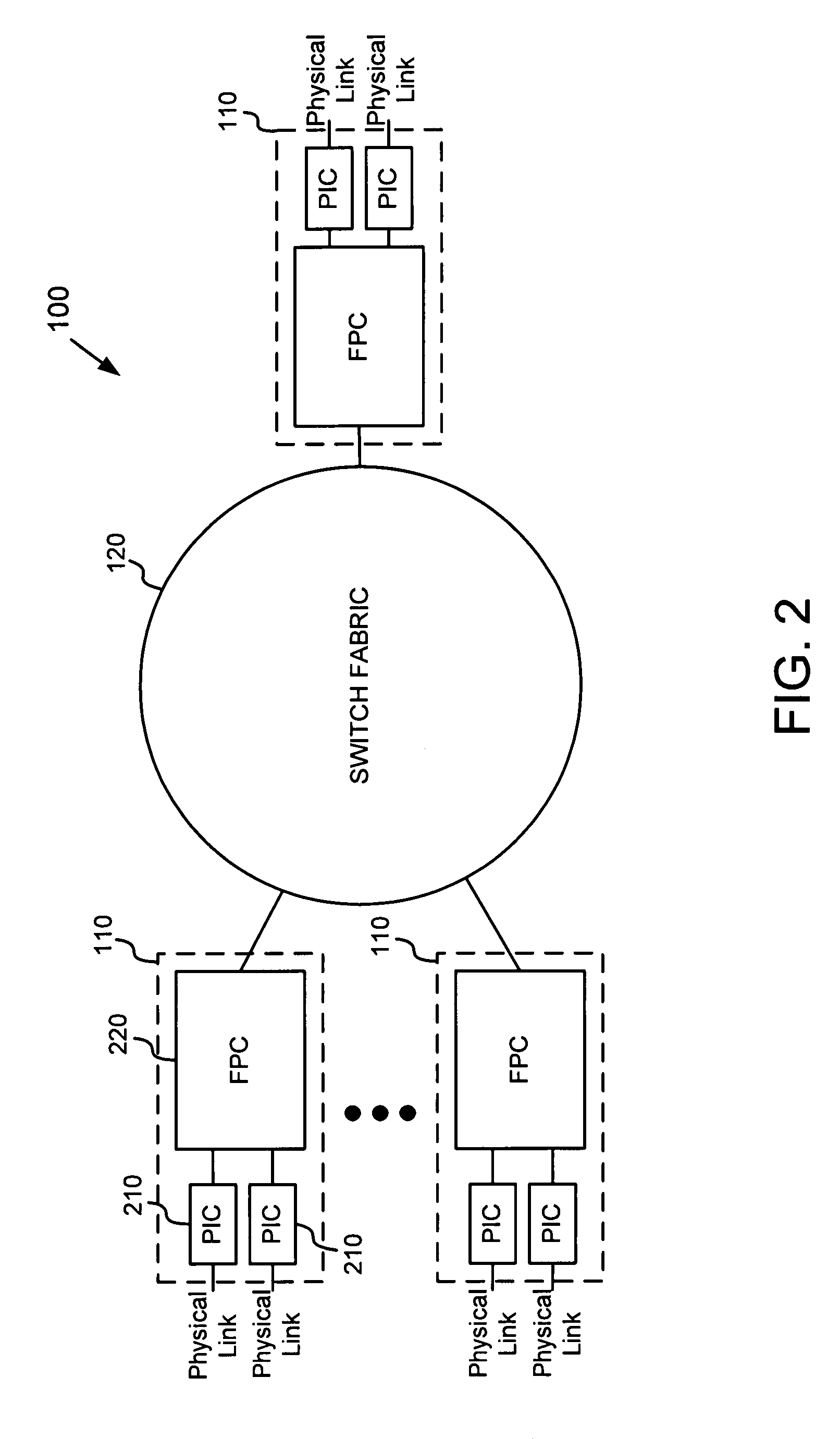

Inactive | US6704321B1 | Decreasing cell delay variation | Error prevention | Frequency-division multiplex details | Cell delay | Distributed computing

A traffic shaper for absorbing the cell delay variation of the cell flow in each virtual connection in an ATM (asynchronous transfer mode) network is realized. The traffic shaper comprises a cell identification part, a cell memory, a sending-out time determination part and a cell memory control part. The cell identification part identifies the virtual connection allocated to an input cell. The cell memory stores input cells in respective virtual queues, each queue corresponding to a virtual connection, in accordance with the identified virtual connection of each input cell. The sending-out time determination part determines a sending-out time for each cell stored in the cell memory on a per-virtual-connection basis. The cell memory control part outputs cells from each virtual queue in accordance with the sending-out time determined for each cell, and performs output competition control by selecting, among cells having the same sending-out time in different virtual queues, the cell to be output in accordance with a predetermined output priority assigned to each virtual connection.

Owner:JUNIPER NETWORKS INC
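
The four parts named in this abstract can be sketched in Python as follows. This is only an illustration under stated assumptions: the abstract does not say how the sending-out time is computed, so the sketch assumes a fixed minimum spacing (`interval`) per virtual connection, and it assumes a lower `priority` number wins a tie. All names are hypothetical.

```python
from collections import deque


class TrafficShaper:
    def __init__(self):
        self.queues = {}     # vc -> deque of (send_time, cell): the virtual queues
        self.interval = {}   # vc -> assumed minimum spacing between cells
        self.priority = {}   # vc -> output priority (lower number wins a tie)
        self.last_send = {}  # vc -> send time given to the previous cell

    def add_connection(self, vc, interval, priority):
        self.queues[vc] = deque()
        self.interval[vc] = interval
        self.priority[vc] = priority
        self.last_send[vc] = None

    def enqueue(self, vc, cell, arrival):
        # Sending-out time determination: never earlier than arrival, and
        # at least `interval` after the previous cell of the same connection.
        prev = self.last_send[vc]
        send_time = arrival if prev is None else max(arrival, prev + self.interval[vc])
        self.last_send[vc] = send_time
        self.queues[vc].append((send_time, cell))

    def dequeue(self, now):
        """Return one cell whose send time has been reached, or None."""
        ready = [vc for vc, q in self.queues.items() if q and q[0][0] <= now]
        if not ready:
            return None
        # Output competition control: earliest send time first,
        # then the connection's priority breaks ties.
        vc = min(ready, key=lambda v: (self.queues[v][0][0], self.priority[v]))
        return self.queues[vc].popleft()[1]
```

Two cells that arrive back to back on one connection are spread `interval` apart on output, which is how the shaper absorbs delay variation in this sketch.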

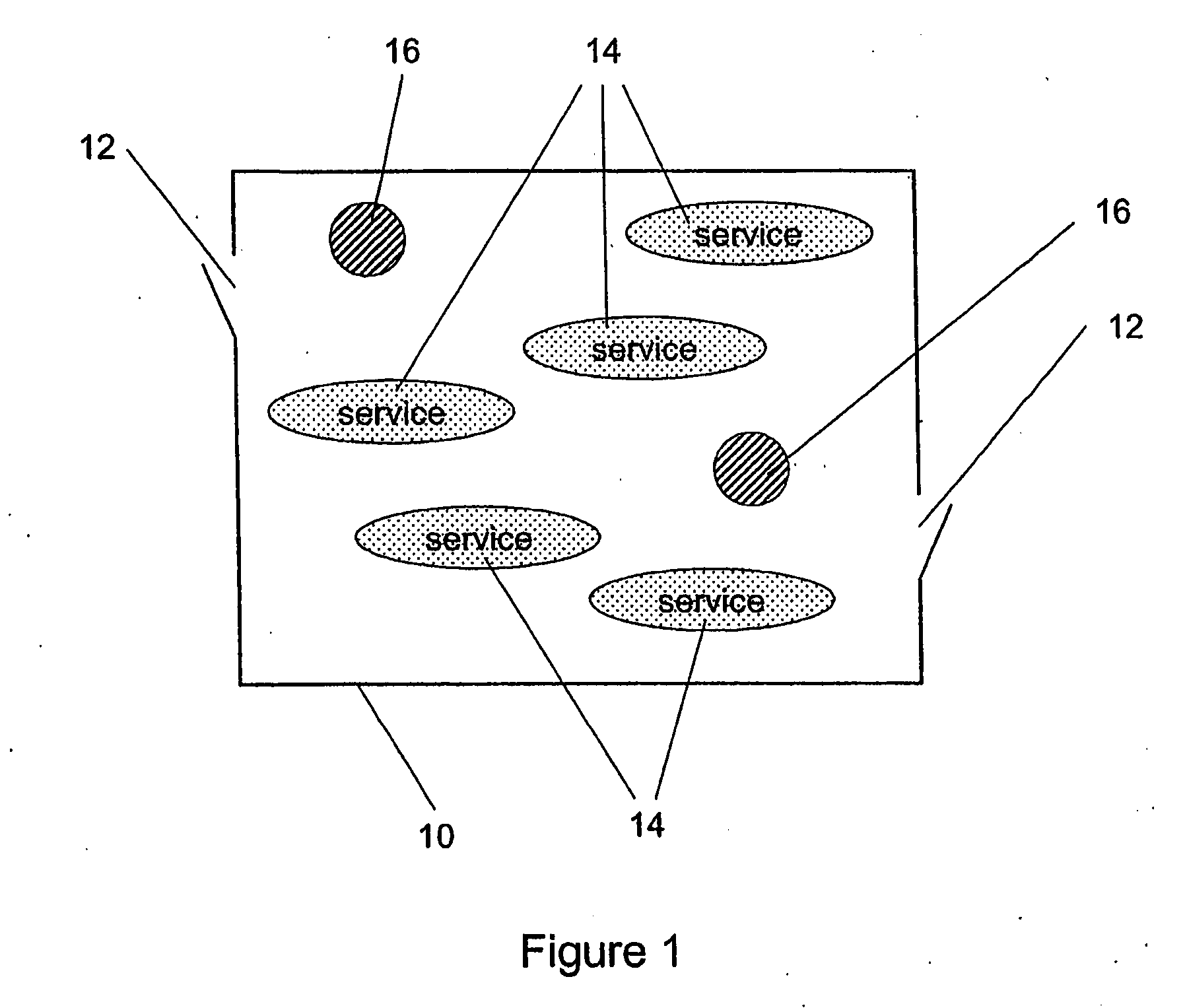

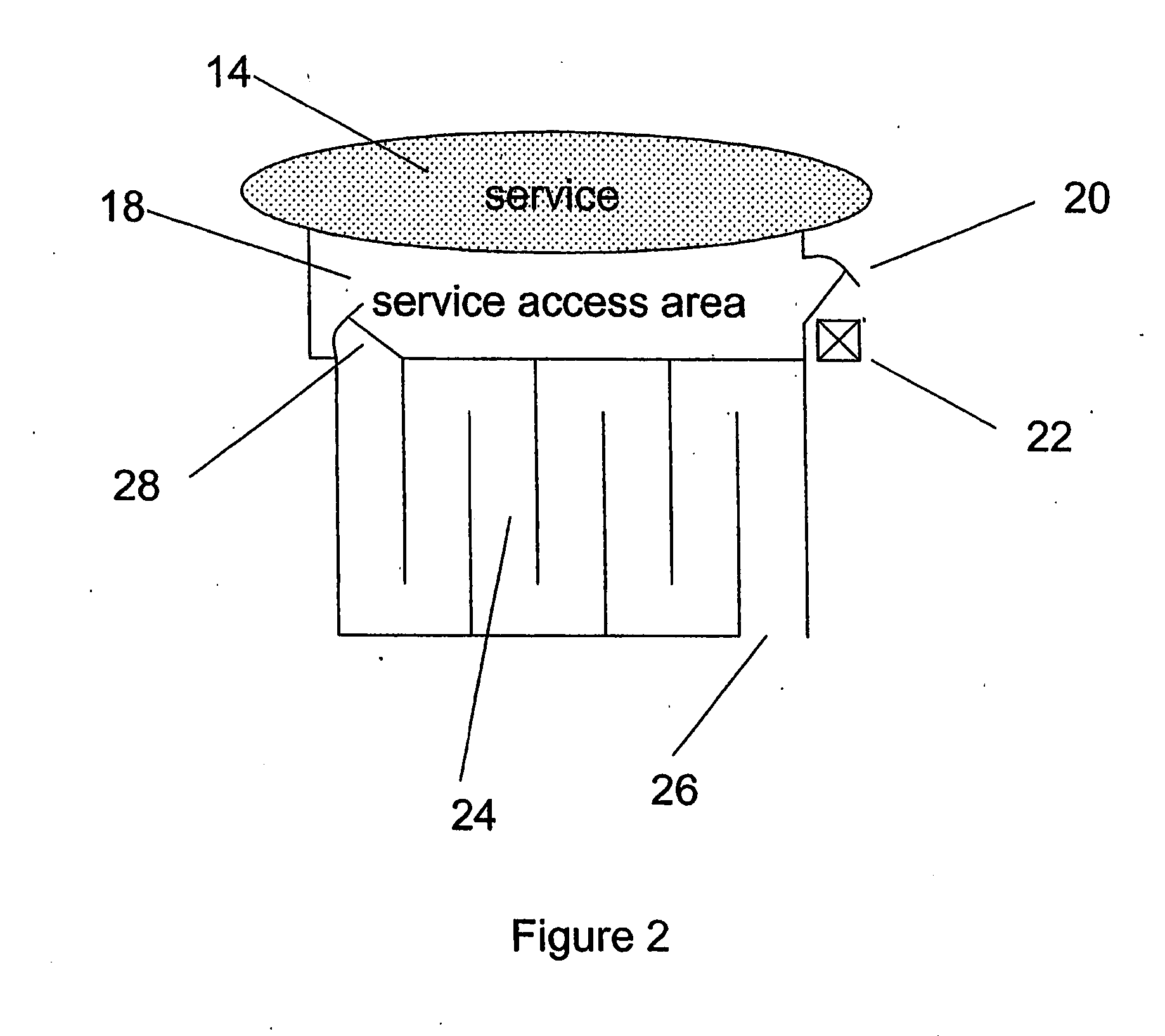

Queue Management System and Method

Inactive | US20070286220A1 | Avoid the need | Checking apparatus | Data switching by path configuration | Queue management system | Computer science

The invention provides a queue management system and method for controlling the movement of a group of one or more people through a virtual queue line for a service. The system comprises registration means (50) for registering the group, the registration means comprising an information carrier (52) bearing a registration code and at least one ID tag (54) including ID details for the member(s) of the group. The registration means associates the registration code with an indication of group size and uniquely with the ID details. The system further comprises interface means (48) for enabling communications to and from the group, and a processor (32, 34) associated with the interface means and responsive to a communication from the group including a communicator address and the registration code for generating a registration record for the group representing the group size, the ID details and the communicator address. The processor is arranged to receive a communication from the group requesting access to the virtual queue and to monitor the place of the group in the queue line and then trigger a summons signal when the group approaches or reaches the head of the queue line. The interface means is responsive to the summons signal for initiating a communication to the communicator address for summoning the group to the service. Access control apparatus (22) at the service reads the at least one ID tag and compares the ID details with the registration record in order to evaluate whether access to the service should be permitted or prevented.

Owner:ACCESSO TECH GRP

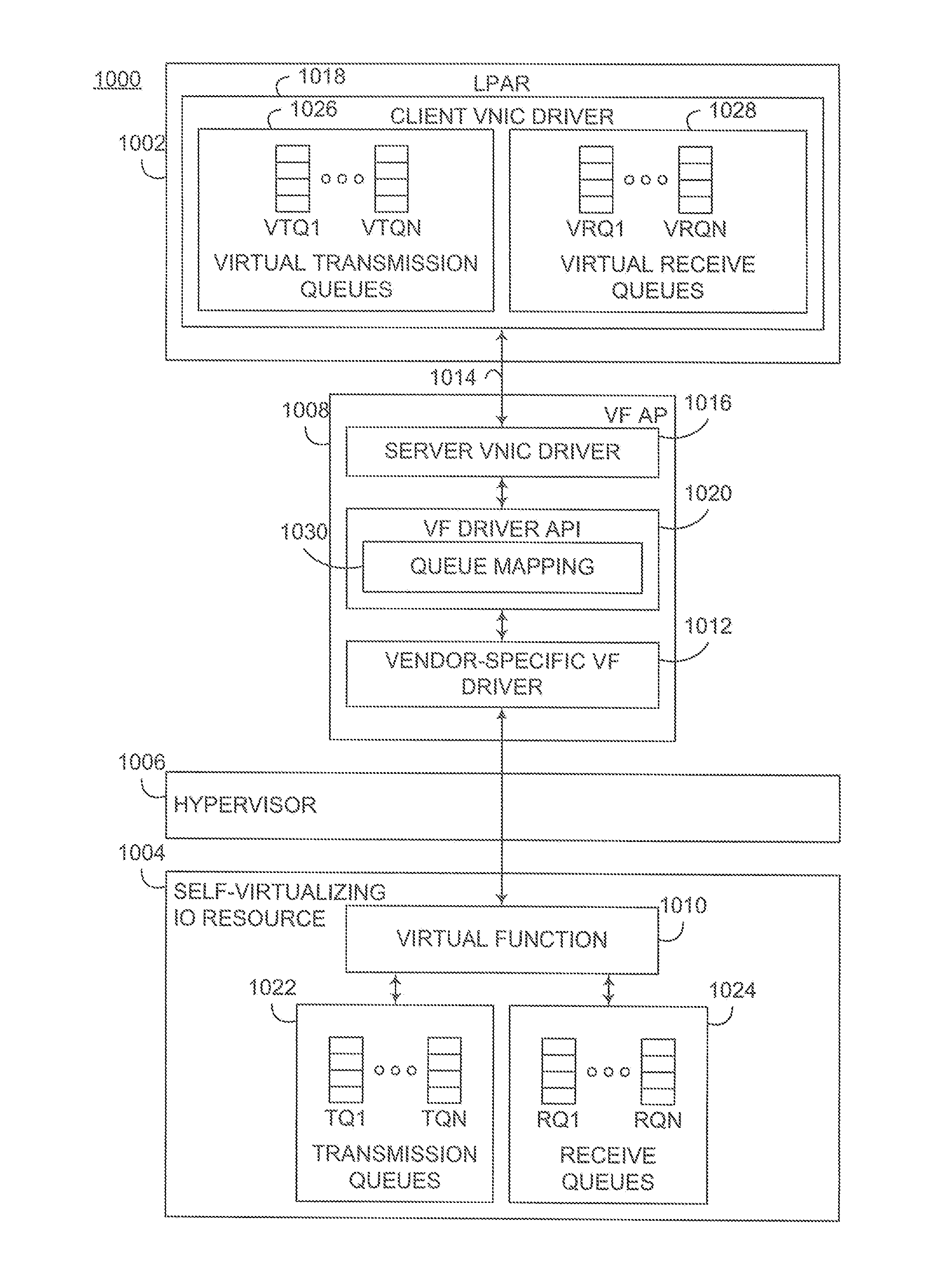

Virtualization of hardware queues in self-virtualizing input/output devices

Active | US20120151472A1 | Effectively abstract away | Software simulation/interpretation/emulation | Memory systems | Computer hardware | Virtualization

Hardware transmit and/or receive queues in a self-virtualizing IO resource are virtualized to effectively abstract away resource-specific details for the self-virtualizing IO resource. By doing so, a logical partition may be permitted to configure and access a desired number of virtual transmit and/or receive queues, and have an adjunct partition that interfaces the logical partition with the self-virtualizing IO resource handle the appropriate mappings between the hardware and virtual queues.

Owner:IBM CORP

Maintaining packet order using hash-based linked-list queues

Inactive | US7764606B1 | Error prevention | Frequency-division multiplex details | Data stream | Parallel processing

Ordering logic ensures that data items being processed by a number of parallel processing units are unloaded from the processing units in the original per-flow order that the data items were loaded into the parallel processing units. The ordering logic includes a pointer memory, a tail vector, and a head vector. Through these three elements, the ordering logic keeps track of a number of “virtual queues” corresponding to the data flows. A round robin arbiter unloads data items from the processing units only when a data item is at the head of its virtual queue.

Owner:JUNIPER NETWORKS INC
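
A rough Python sketch of the ordering logic described in this abstract is shown below. It models the pointer memory, head vector and tail vector as plain lists. The mapping of a flow to a virtual queue (`flow_id % num_queues`, with integer flow IDs) and all method names are assumptions for illustration only.

```python
class OrderingLogic:
    def __init__(self, num_units, num_queues):
        self.next_ptr = [None] * num_units  # pointer memory: unit -> next unit in its queue
        self.head = [None] * num_queues     # head vector: oldest unit of each virtual queue
        self.tail = [None] * num_queues     # tail vector: newest unit of each virtual queue
        self.queue_of = [None] * num_units  # which virtual queue a busy unit belongs to
        self.done = [False] * num_units     # unit has finished processing its item
        self.rr = 0                         # round-robin start position

    def load(self, unit, flow_id):
        # Assumed hash: integer flow IDs folded onto the available queues.
        vq = flow_id % len(self.head)
        self.queue_of[unit] = vq
        self.next_ptr[unit] = None
        self.done[unit] = False
        if self.head[vq] is None:
            self.head[vq] = unit
        else:
            self.next_ptr[self.tail[vq]] = unit
        self.tail[vq] = unit

    def mark_done(self, unit):
        self.done[unit] = True

    def unload(self):
        """Round-robin over units; unload only a finished unit that is at
        the head of its virtual queue. Returns the unit index or None."""
        n = len(self.next_ptr)
        for i in range(n):
            unit = (self.rr + i) % n
            vq = self.queue_of[unit]
            if vq is not None and self.done[unit] and self.head[vq] == unit:
                self.head[vq] = self.next_ptr[unit]
                if self.head[vq] is None:
                    self.tail[vq] = None
                self.queue_of[unit] = None
                self.done[unit] = False
                self.rr = (unit + 1) % n
                return unit
        return None
```

A unit that finishes early simply waits: until every earlier item of the same flow has been unloaded, it is not at the head of its virtual queue and the arbiter skips it.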

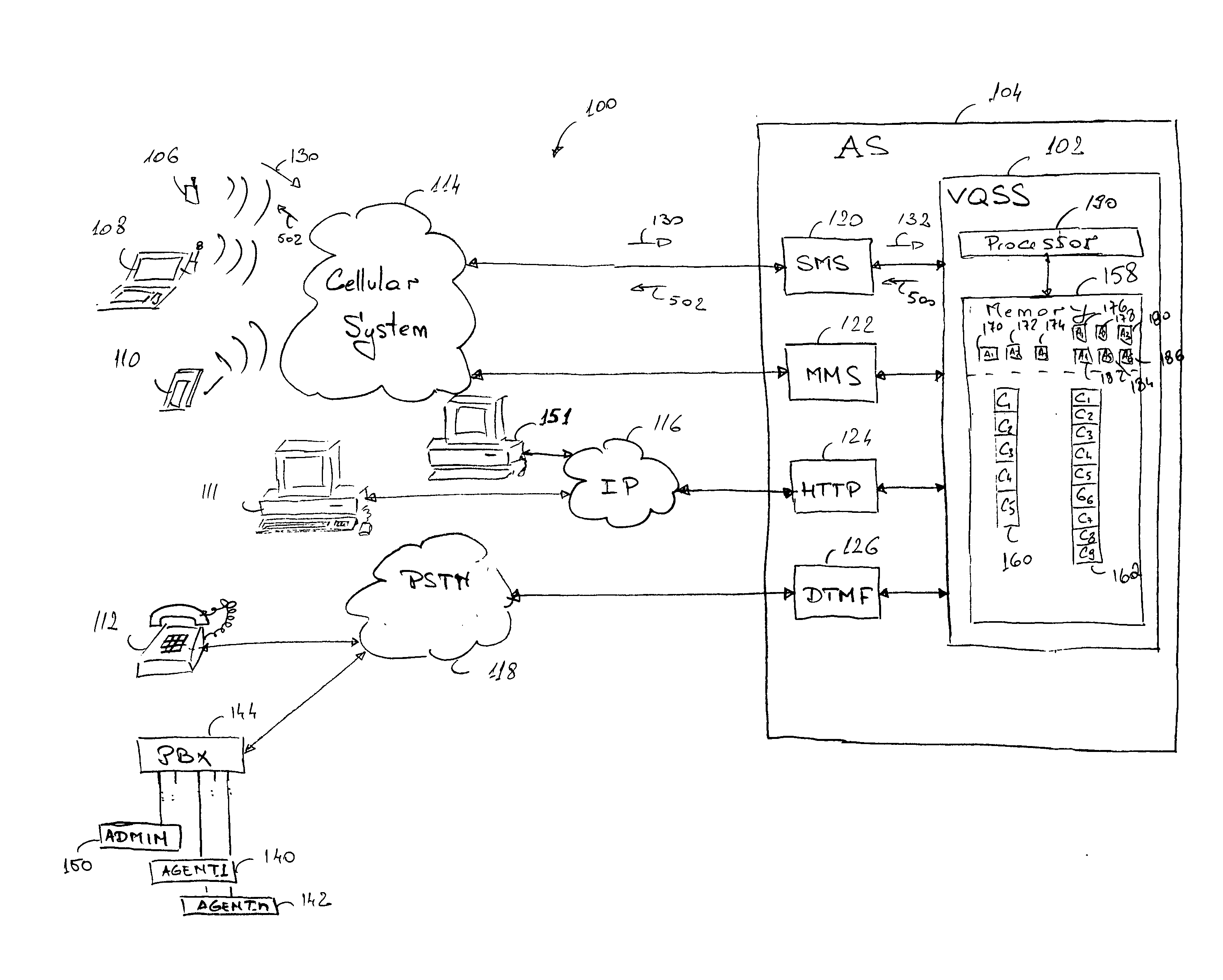

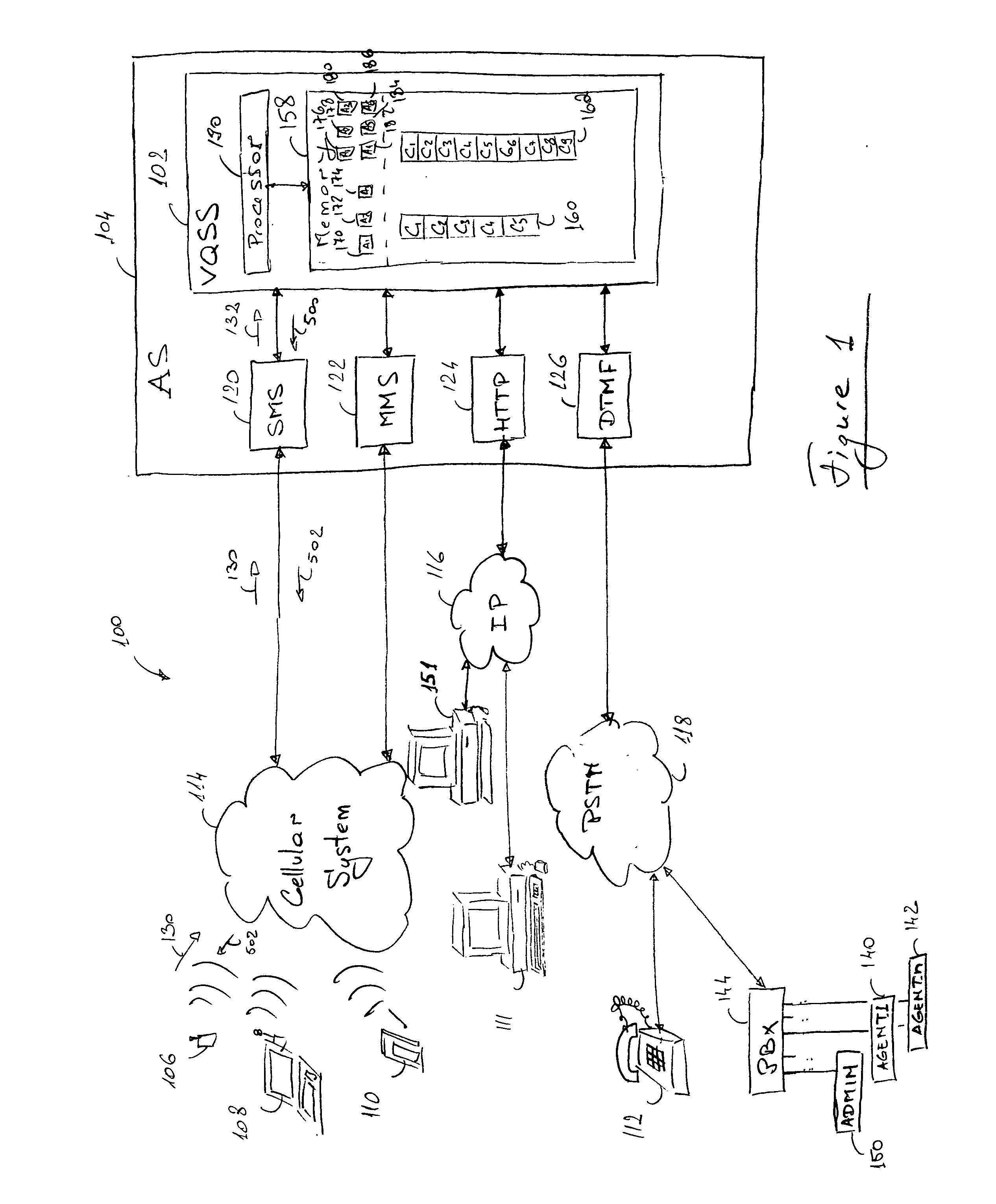

Virtual queuing support system and method

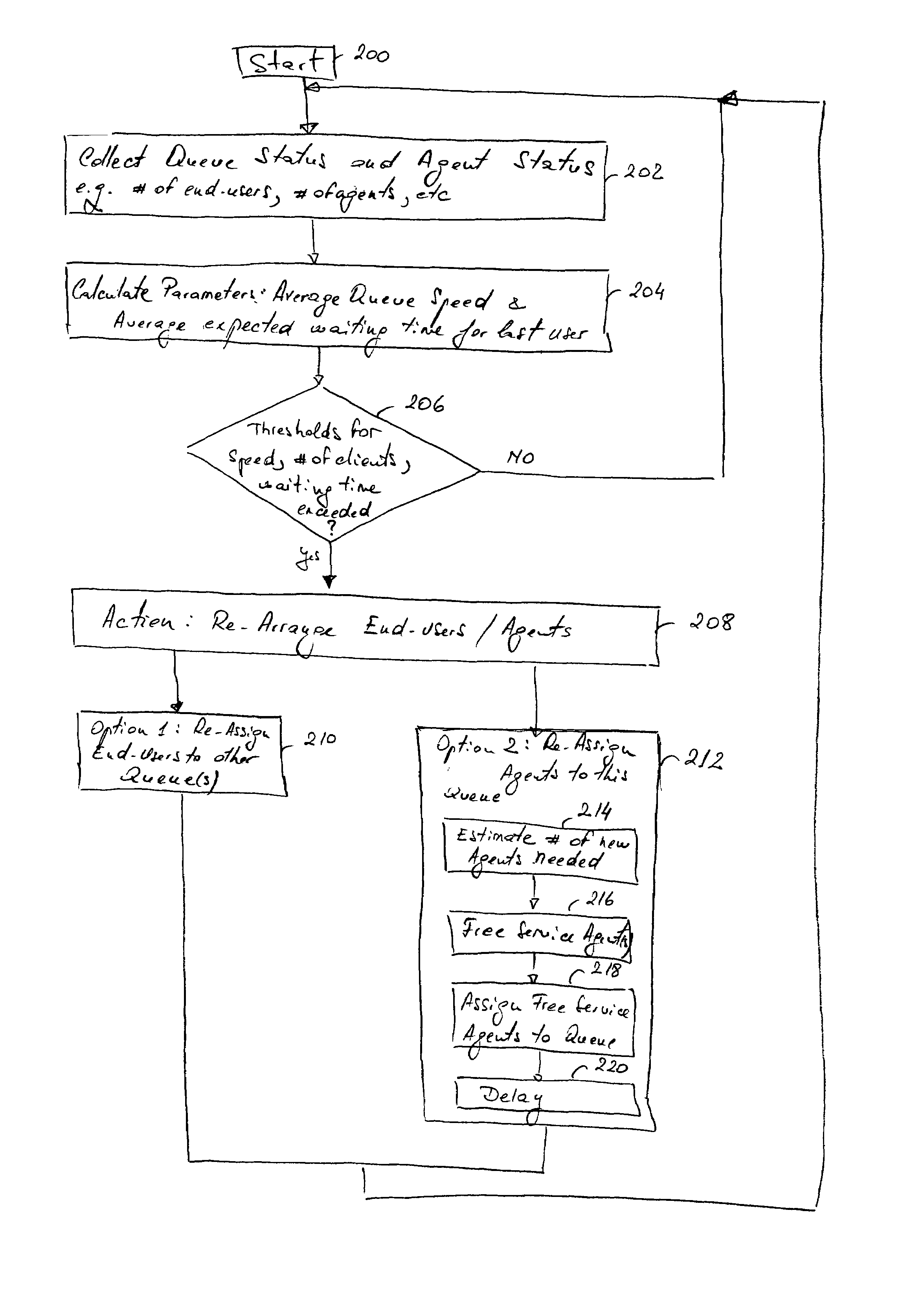

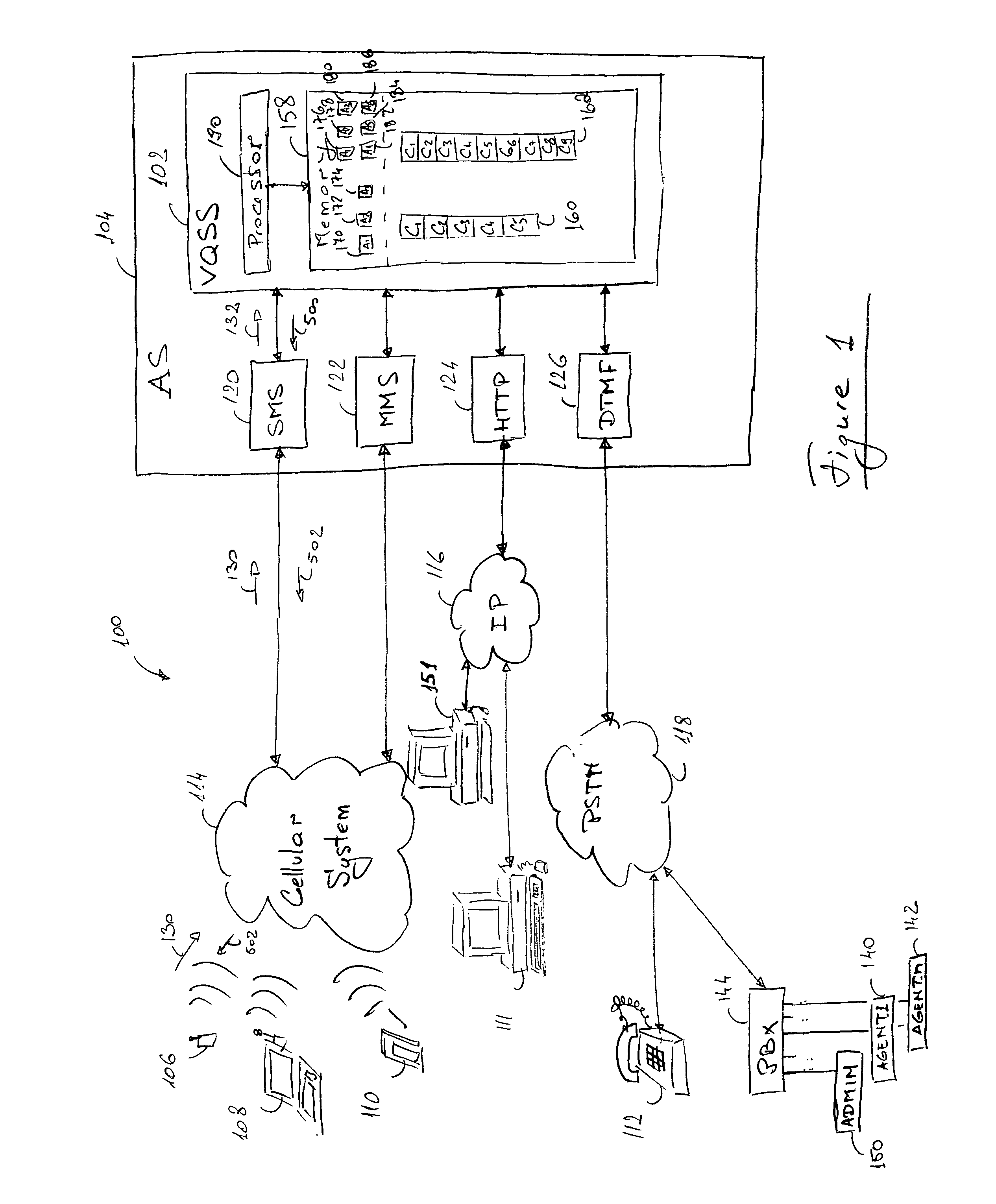

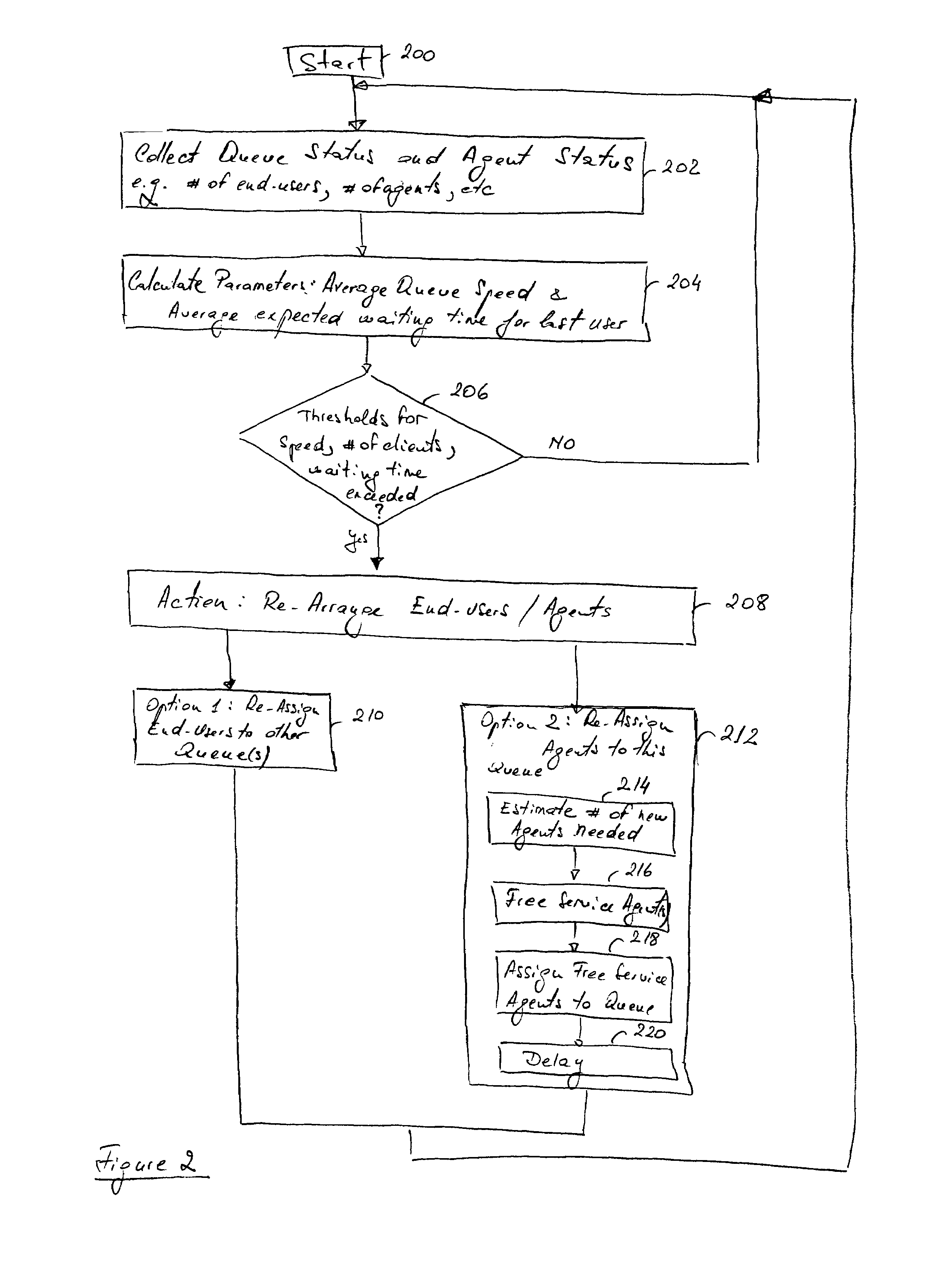

Active | US20050089053A1 | Special service for subscribers | Data switching by path configuration | Supporting system | Client-side

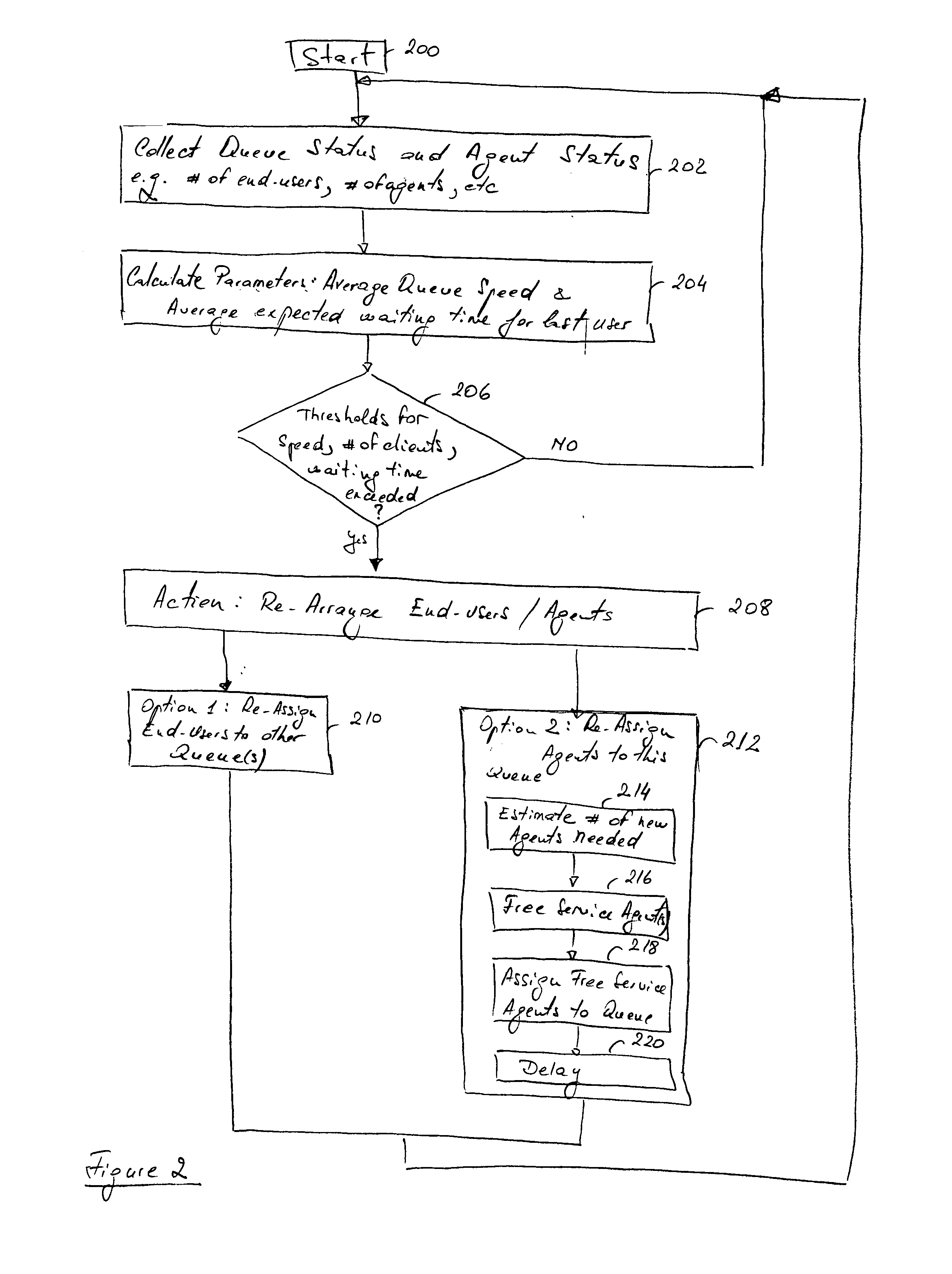

A method and a Virtual Queuing Support System (VQSS) for optimizing end-user service for clients who are waiting for a service request to be answered and who are registered in various virtual queues of the VQSS. End-users register in a virtual queue of the VQSS, which monitors the status of the queues and the status of the service agents. When a parameter such as the number of users in a queue or the expected waiting time exceeds a pre-set threshold, the VQSS reassigns end-users from the problematic queue, and/or reassigns service agents from other queues to the problematic queue. The VQSS comprises a memory storing the virtual queues, and a processor for managing the virtual queues.

Owner:TELEFON AB LM ERICSSON (PUBL)
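
The threshold-triggered reassignment in this abstract could look roughly like the following Python sketch. It covers only the case where the monitored parameter is queue length and where end-users (not agents) are moved. The policy of moving the most recently queued user to the currently shortest queue is an assumption, as are the function and parameter names.

```python
from collections import deque


def rebalance(queues, max_len):
    """Move users out of any queue longer than max_len.

    queues: dict mapping queue name -> deque of user IDs (front at index 0).
    Returns a list of (user, from_queue, to_queue) moves.
    """
    moved = []
    for name, q in queues.items():
        while len(q) > max_len:
            # Assumed policy: send the overflow to the shortest queue.
            target = min(queues, key=lambda n: len(queues[n]))
            if target == name or len(queues[target]) >= max_len:
                break  # nowhere better to send users
            user = q.pop()  # take the user who joined last
            queues[target].append(user)
            moved.append((user, name, target))
    return moved
```

Reassigning service agents toward the overloaded queue, which the abstract also mentions, would follow the same pattern with agent pools in place of user queues.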

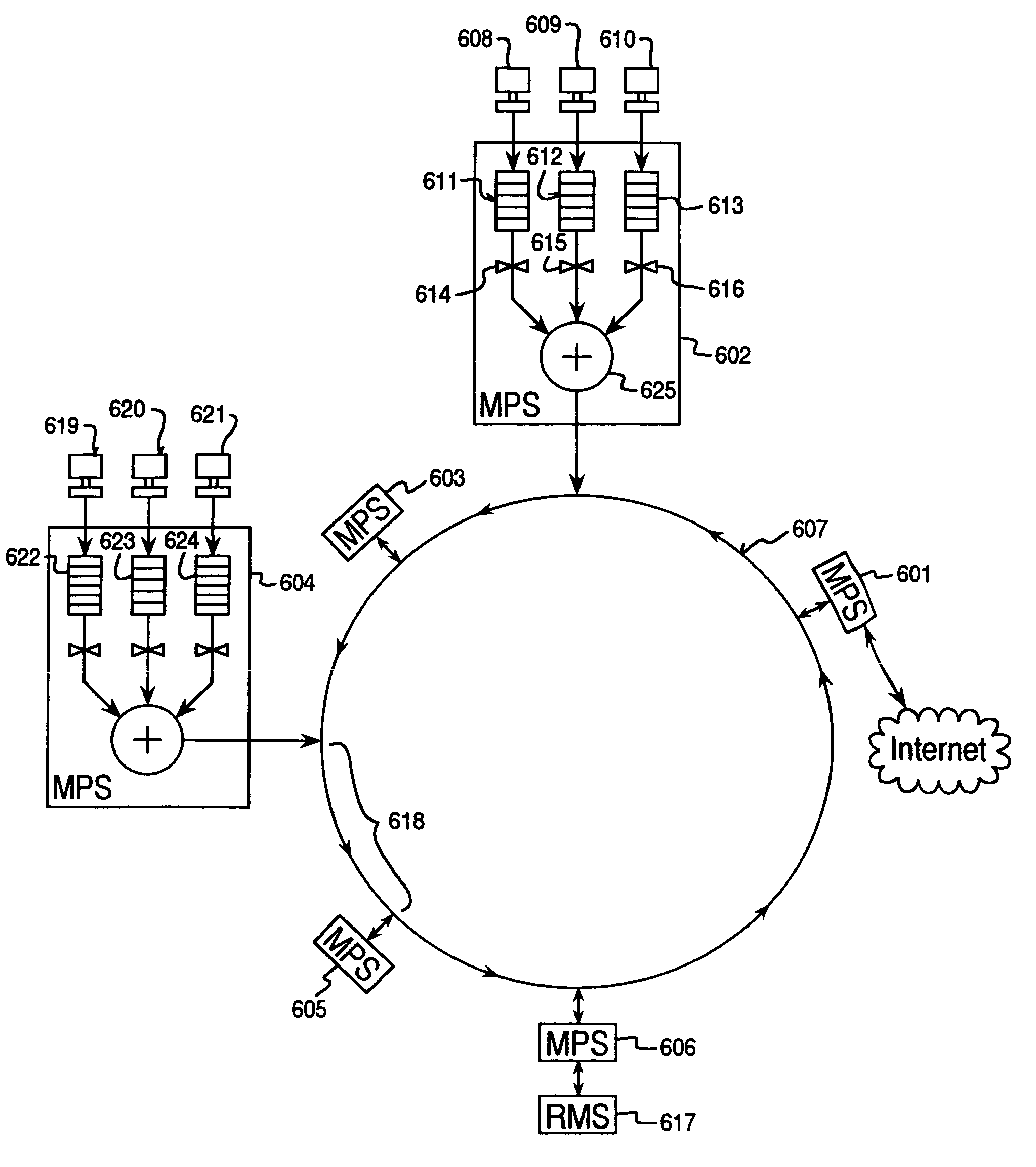

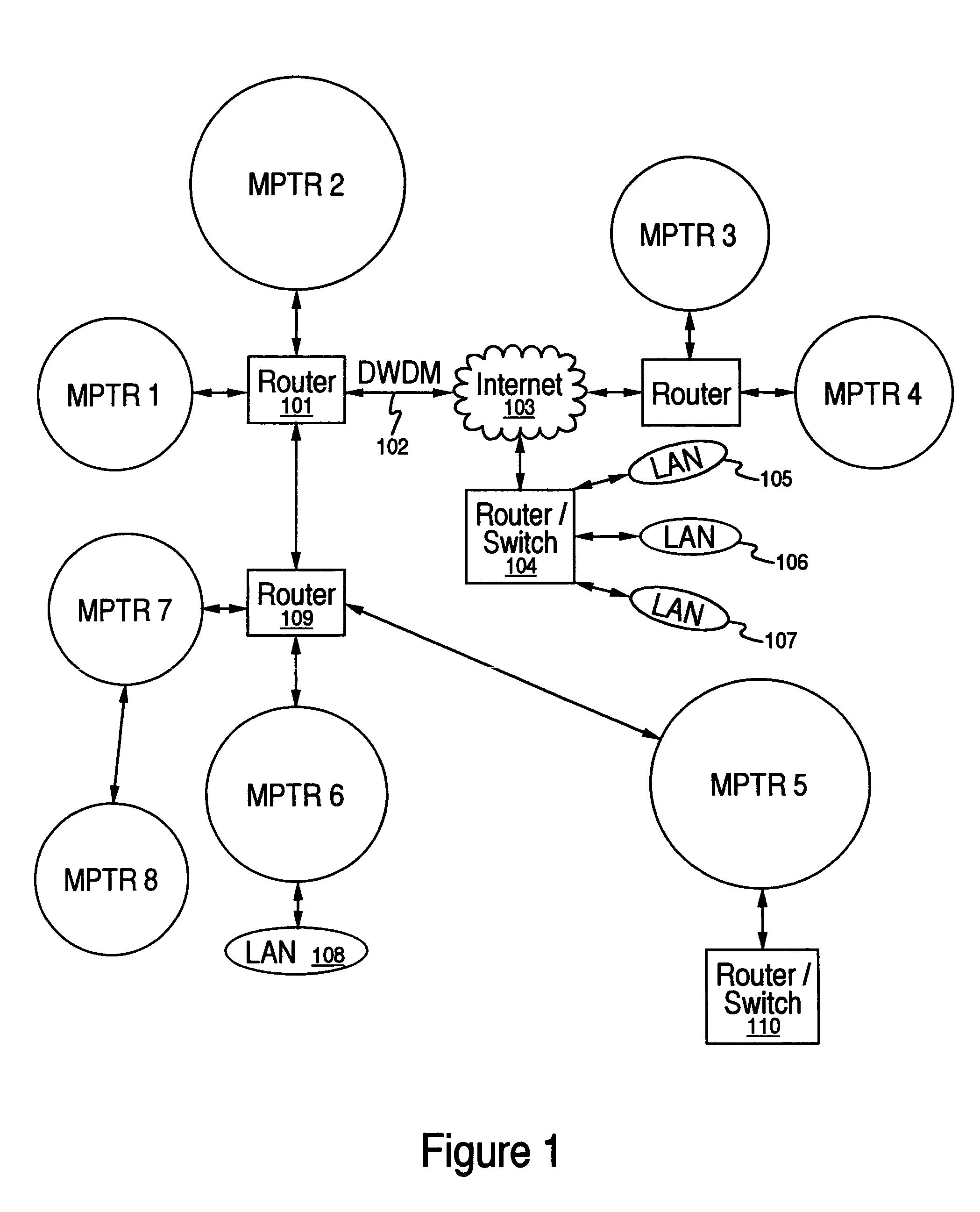

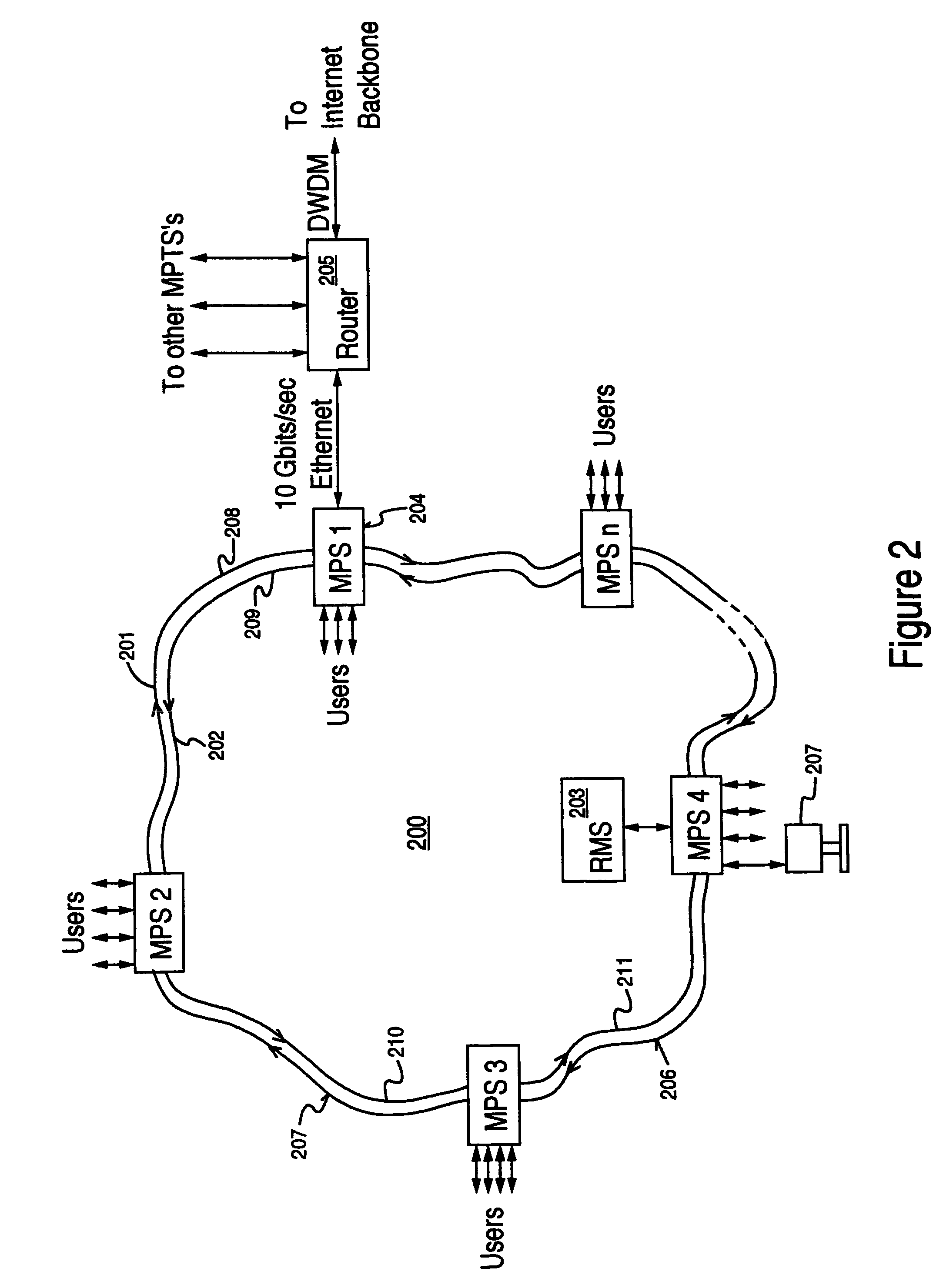

Method and system for weighted fair flow control in an asynchronous metro packet transport ring network

Inactive | US7061861B1 | Large amount of available bandwidth | Excessive buffering delay | Error prevention | Frequency-division multiplex details | Quality of service | Ring network

A method and system for implementing weighted fair flow control on a metropolitan area network. Weighted fair flow control is implemented using a plurality of metro packet switches (MPS), each including a respective plurality of virtual queues and a respective plurality of per flow queues. Each MPS accepts data from a respective plurality of local input flows. Each local input flow has a respective quality of service (QoS) associated therewith. The data of the local input flows are queued using the per flow queues, with each input flow having its respective per flow queue. Each virtual queue maintains a track of the flow rate of its respective local input flow. Data is transmitted from the local input flows of each MPS across a communications channel of the network and the bandwidth of the communications channel is allocated in accordance with the QoS of each local input flow. The QoS is used to determine the rate of transmission of the local input flow from the per flow queue to the communications channel. This implements an efficient weighted bandwidth utilization of the communications channel. Among the plurality of MPS, bandwidth of the communications channel is allocated by throttling the rate at which data is transmitted from an upstream MPS with respect to the rate at which data is transmitted from a downstream MPS, thereby implementing a weighted fair bandwidth utilization of the communications channel.

Owner:ARRIS ENTERPRISES LLC

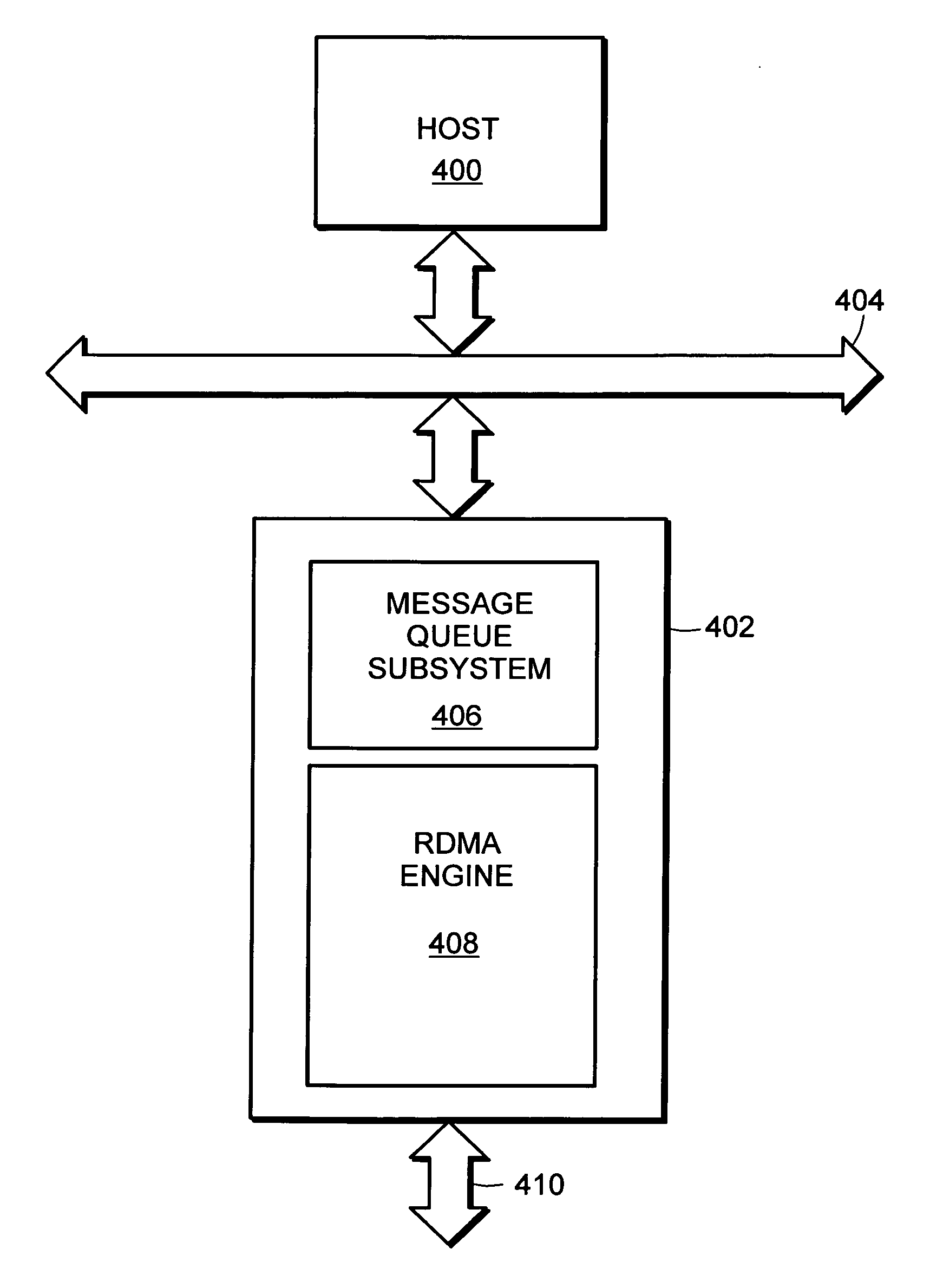

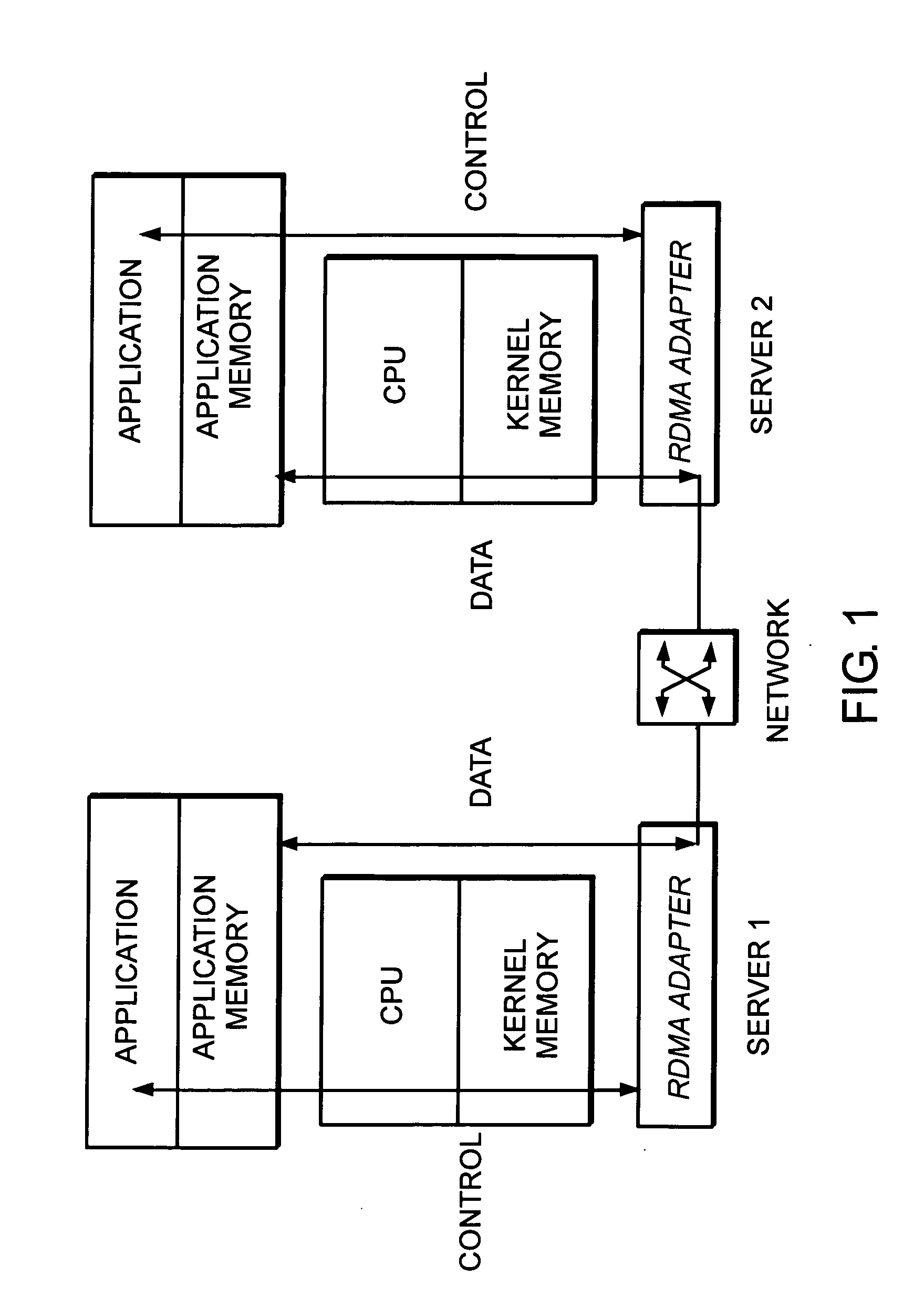

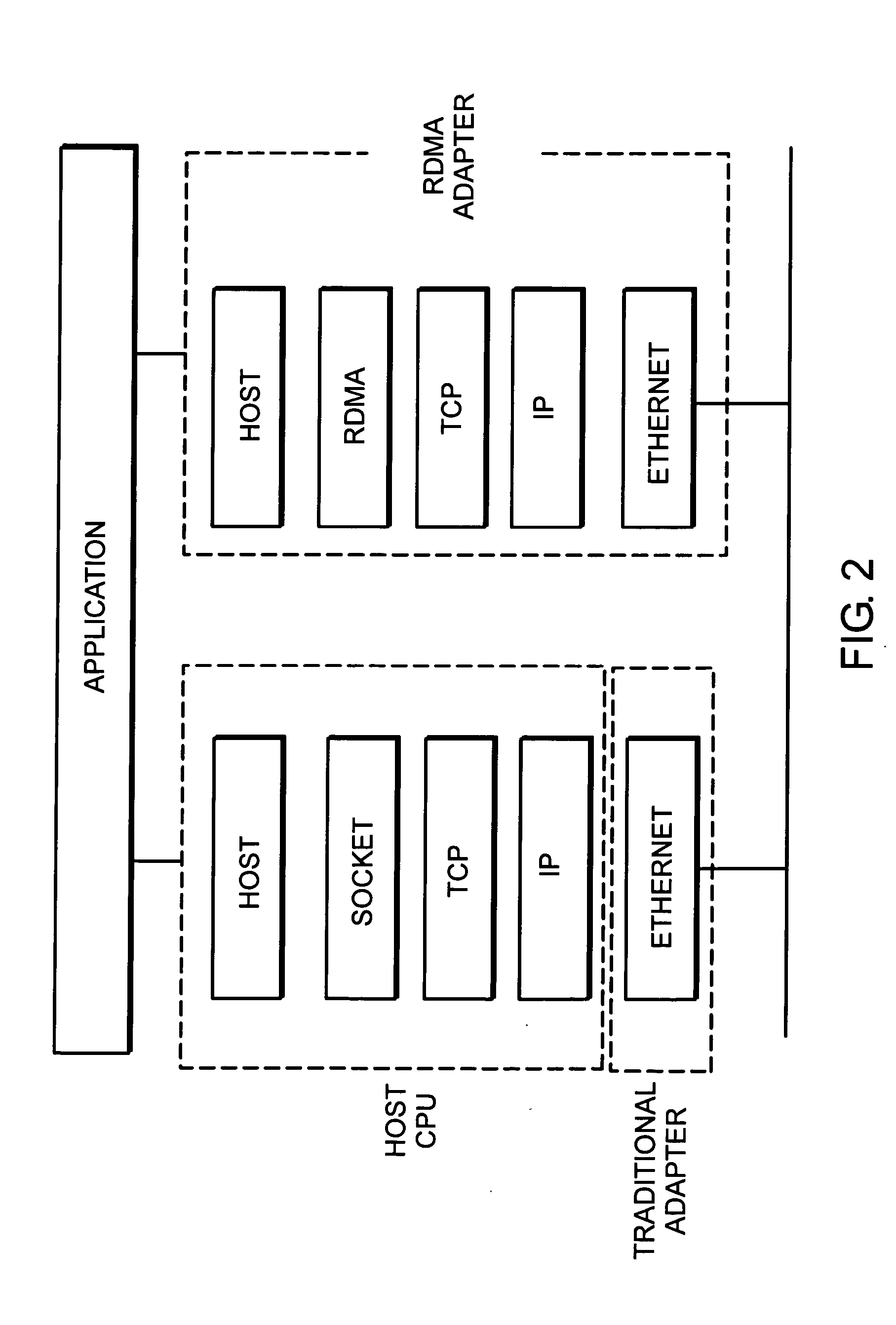

System and method for work request queuing for intelligent adapter

Inactive | US20050220128A1 | Effective support | Data switching by path configuration | Network connections | Message queue | Memory address

A system and method for work request queuing for an intelligent network interface card or adapter. More specifically, the invention provides a method and system that efficiently supports an extremely large number of work request queues. A virtual queue interface is presented to the host, and supported on the “back end” by a real queue shared among many virtual queues. A message queue subsystem for an RDMA-capable network interface includes a memory-mapped virtual queue interface. The queue interface has a large plurality of virtual message queues, with each virtual queue mapped to a specified range of memory address space. The subsystem includes logic to detect work requests on a host interface bus to at least one of the specified address ranges corresponding to one of the virtual queues, and logic to place the work requests into a real queue that is memory based and shared among at least some of the plurality of virtual queues, wherein real queue entries include indications of the virtual queue to which the work request was addressed.

Owner:AMMASSO
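
The mapping from address ranges to virtual queues, backed by one shared real queue, can be sketched in Python as below. The equal-sized address windows (`base`, `stride`) and all names are assumptions for illustration; the abstract only states that each virtual queue maps to a specified address range and that real queue entries record the virtual queue addressed.

```python
from collections import deque


class SharedWorkQueue:
    def __init__(self, base, stride, num_virtual):
        self.base = base                # assumed start of the mapped window
        self.stride = stride            # assumed size of each virtual queue's range
        self.num_virtual = num_virtual
        self.real_queue = deque()       # single real queue shared by all virtual queues

    def host_write(self, address, work_request):
        """Model a host write: decode the virtual queue from the address
        and append a tagged entry to the shared real queue."""
        offset = address - self.base
        if not 0 <= offset < self.stride * self.num_virtual:
            raise ValueError("address outside the virtual queue window")
        vq = offset // self.stride
        self.real_queue.append((vq, work_request))
        return vq

    def next_request(self):
        """Pop the oldest (virtual_queue, work_request) entry, or None."""
        return self.real_queue.popleft() if self.real_queue else None
```

Because the virtual queue index is recovered from the address alone, the number of virtual queues can grow without allocating per-queue storage, which is the point of sharing the real queue.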

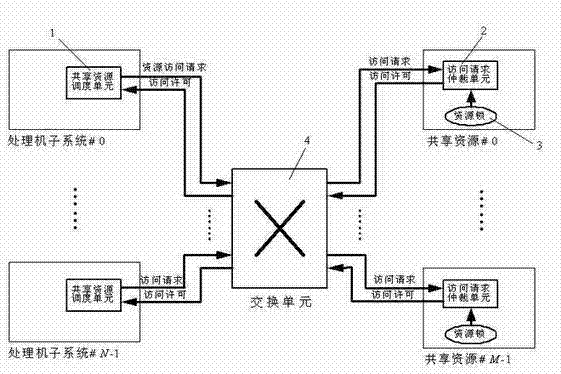

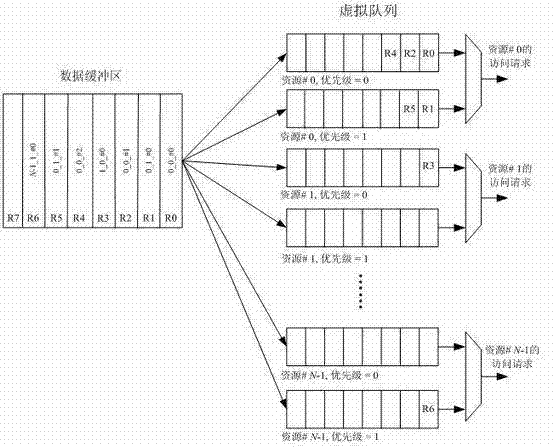

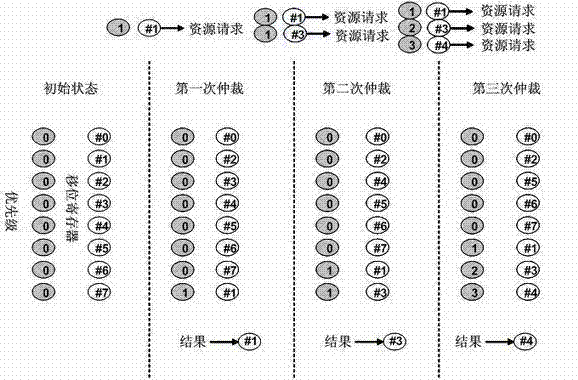

A shared resource scheduling method and system for distributed parallel processing

Inactive | CN102298539A | Solve the access contention problem | Avoid deadlock | Program initiation/switching | Parallel processing | Shared resource

The invention discloses a shared resource scheduling method and system used in distributed parallel processing. The method and system are based on a distributed operation mechanism and are implemented by shared resource scheduling units distributed in each processor subsystem, together with resource locks and resource request arbitration units distributed in each shared resource. These distributed processing units communicate by sending messages (resource access requests/permissions) to each other through the switching unit. The shared resource scheduling unit in the processor subsystem uses virtual queue technology to manage all resource access requests in the data cache; that is, a dedicated queue is opened for each accessible shared resource. Resource locks in shared resources are used to ensure the uniqueness of access to shared resources at any time. Resource locks have two states: lock occupied and lock released. The request arbitration unit in the shared resource uses a priority-based fair polling algorithm to arbitrate resource access requests from different processing nodes. The invention can effectively avoid contention when the processing nodes access a shared resource, can also avoid deadlock of the shared resource and starvation of the processing nodes, and provides efficient mutually exclusive access to the shared resource.

Owner:EAST CHINA NORMAL UNIV

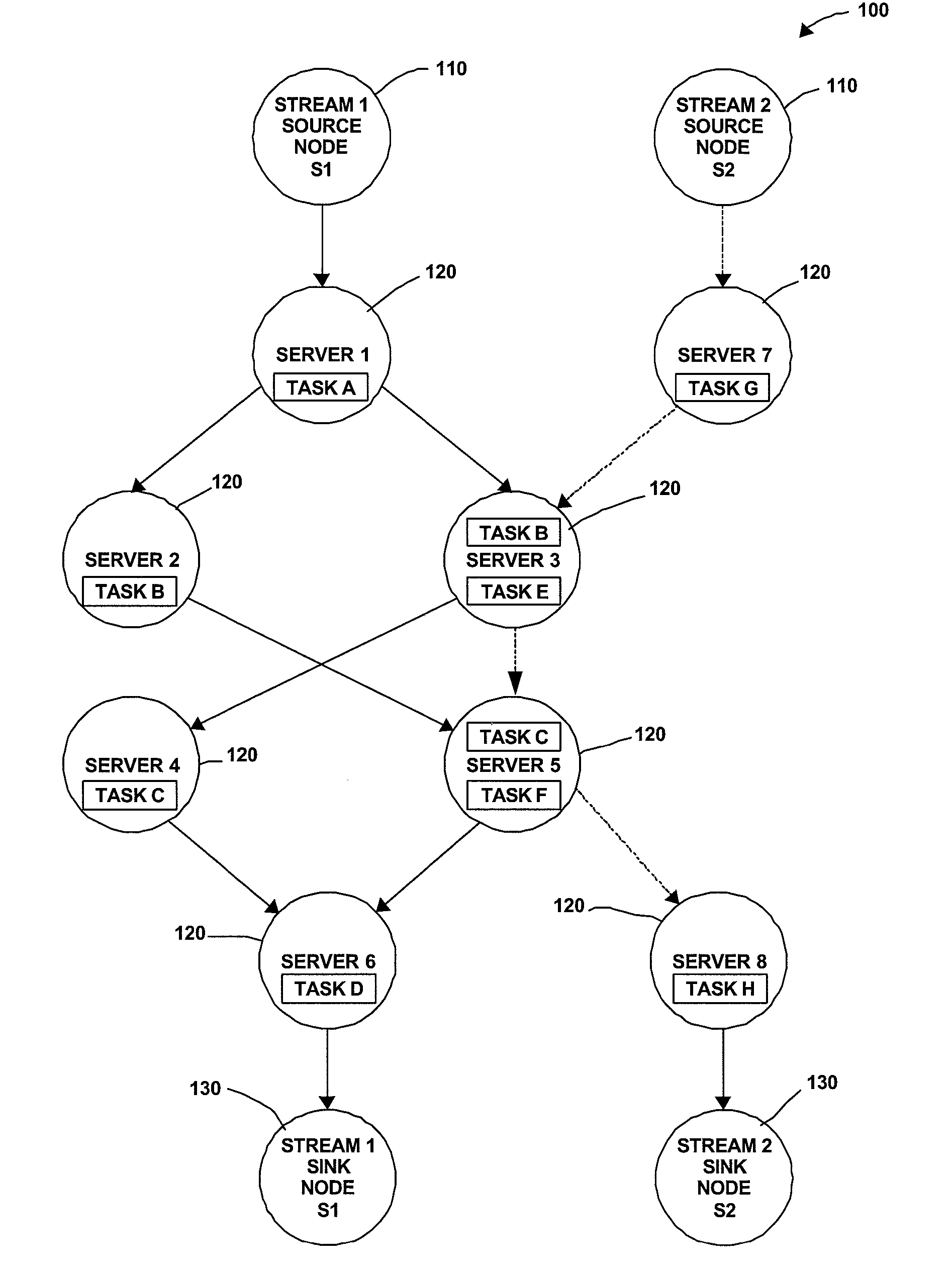

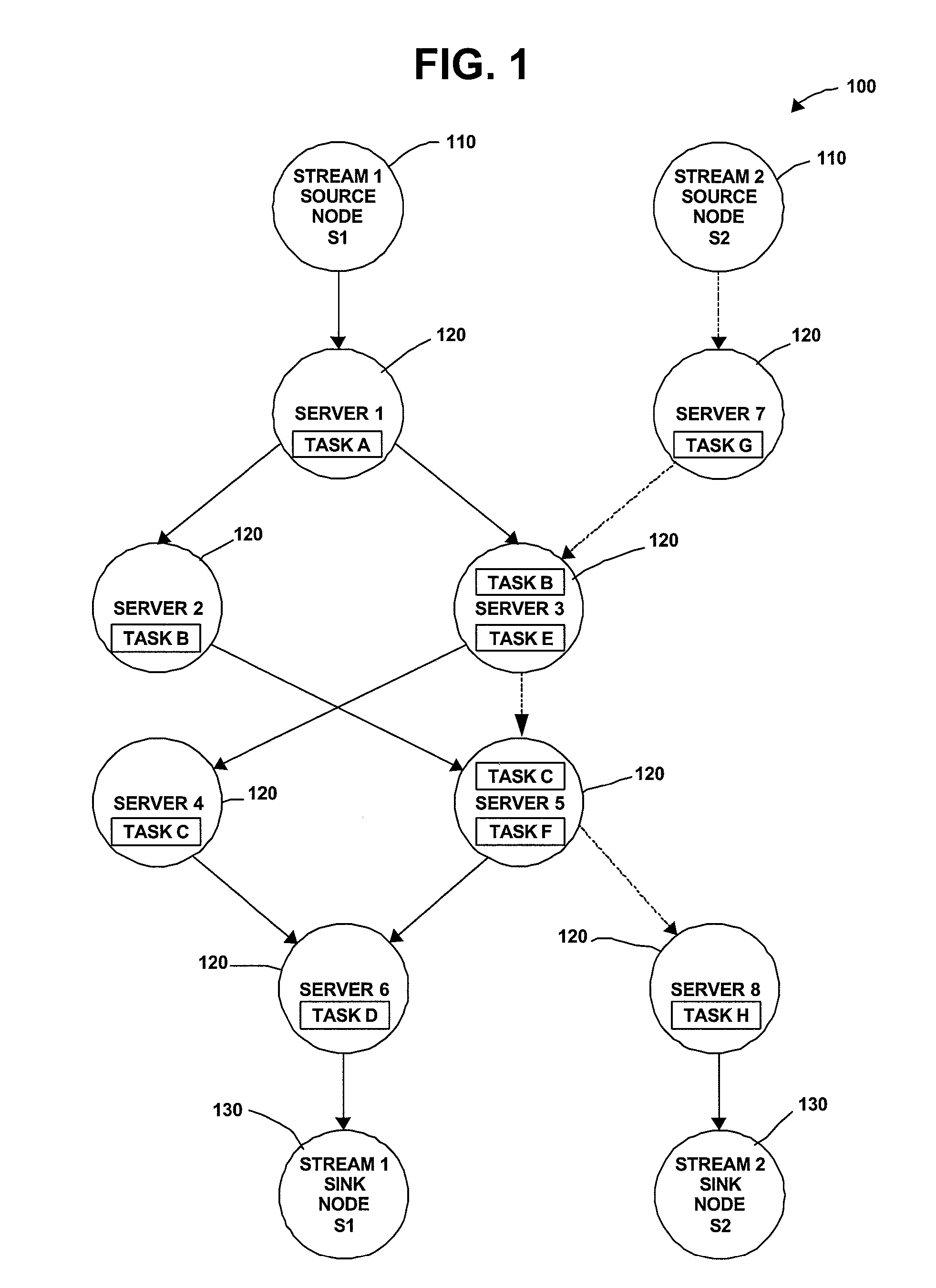

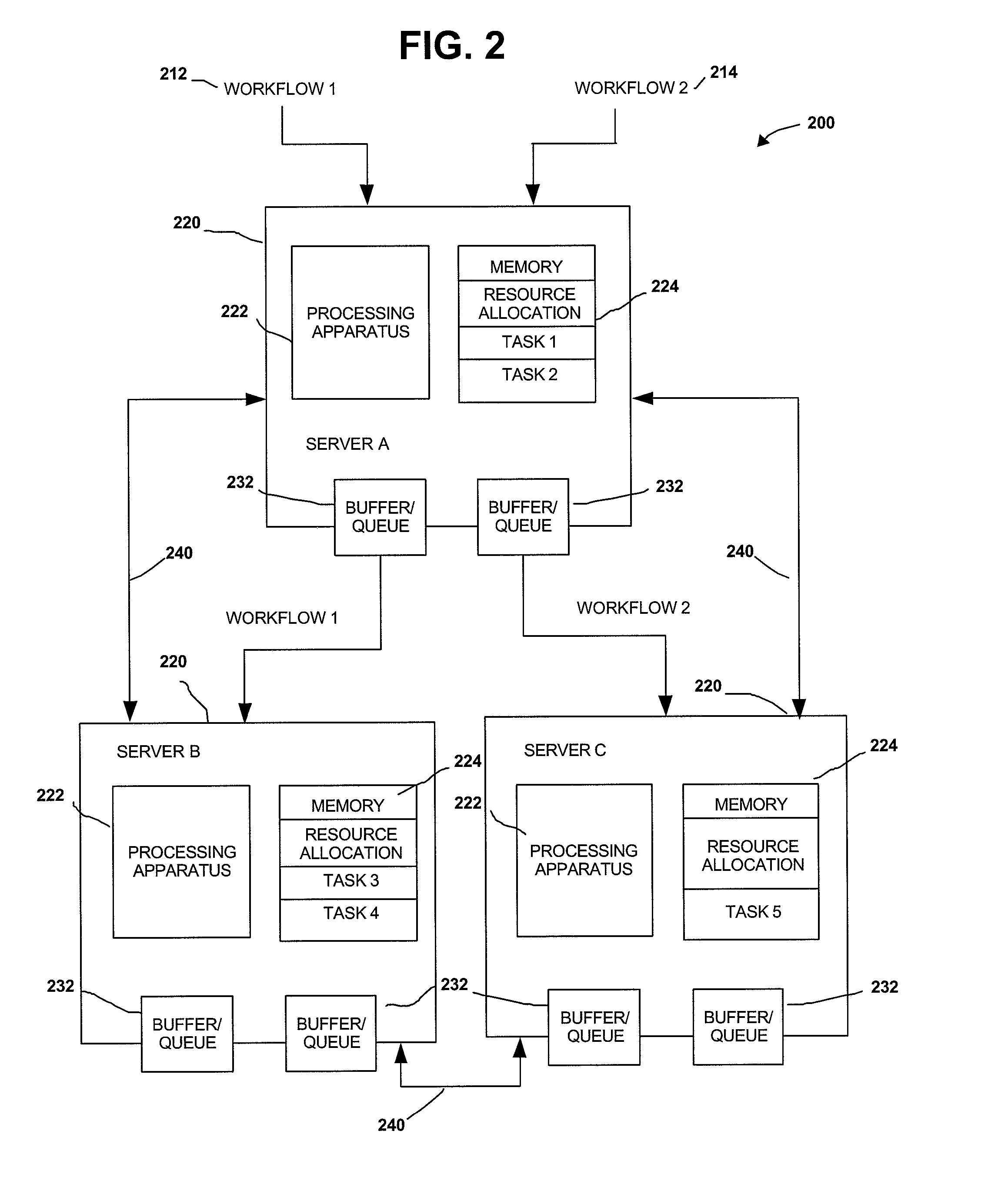

Distributed Joint Admission Control And Dynamic Resource Allocation In Stream Processing Networks

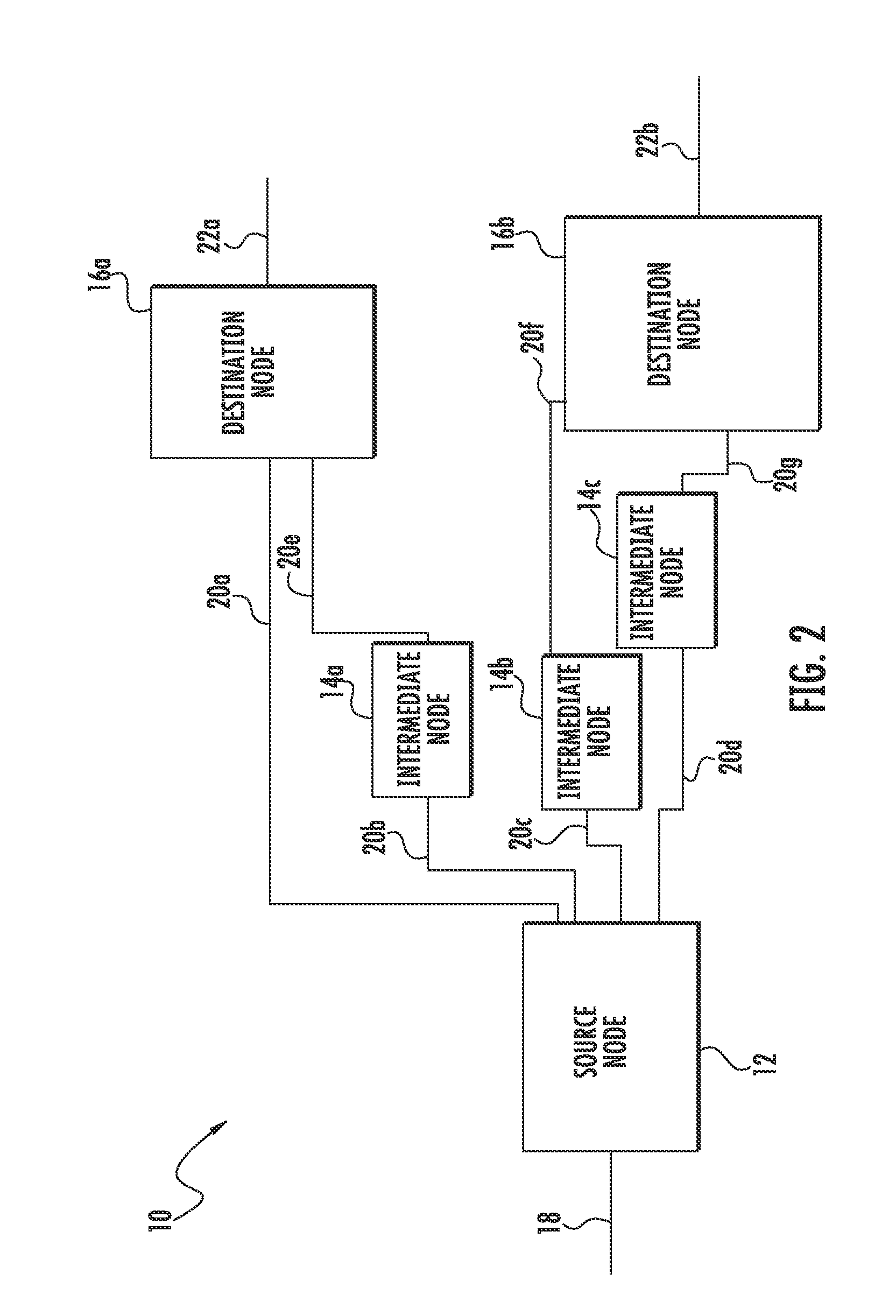

Inactive | US20080304516A1 | Error prevention | Frequency-division multiplex details | Local congestion | Dynamic resource

Methods and apparatus operating in a stream processing network perform load shedding and dynamic resource allocation so as to meet a pre-determined utility criterion. Load shedding is envisioned as an admission control problem encompassing source nodes admitting workflows into the stream processing network. A primal-dual approach is used to decompose the admission control and resource allocation problems. The admission control operates as a push-and-pull process with sources pushing workflows into the stream processing network and sinks pulling processed workflows from the network. A virtual queue is maintained at each node to account for both queue backlogs and credits from sinks. Nodes of the stream processing network maintain shadow prices for each of the workflows and share congestion information with neighbor nodes. At each node, resources are devoted to the workflow with the maximum product of downstream pressure and processing rate, where the downstream pressure is defined as the backlog difference between neighbor nodes. The primal-dual controller iteratively adjusts the admission rates and resource allocation using local congestion feedback. The iterative controlling procedure further uses an interior-point method to improve the speed of convergence towards optimal admission and allocation decisions.

Owner:IBM CORP
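
The per-node allocation rule stated in this abstract (serve the workflow with the maximum product of downstream pressure and processing rate) can be written as a short Python sketch. The function name, the dictionary inputs, and the choice to serve nothing when no workflow has positive pressure are assumptions; the admission control, shadow prices and interior-point steps are not modelled.

```python
def pick_workflow(backlog_here, backlog_downstream, rate):
    """Return the workflow this node should serve next, or None.

    backlog_here / backlog_downstream: workflow -> queued items at this
    node and at its downstream neighbor. rate: workflow -> processing rate.
    """
    best, best_score = None, 0.0
    for wf, local in backlog_here.items():
        # Downstream pressure is the backlog difference between neighbors.
        pressure = local - backlog_downstream.get(wf, 0)
        score = pressure * rate[wf]
        if score > best_score:
            best, best_score = wf, score
    return best
```

For example, with local backlogs `{"a": 10, "b": 6}`, downstream backlogs `{"a": 8, "b": 1}` and rates `{"a": 3.0, "b": 2.0}`, workflow `b` is chosen because 5 × 2.0 exceeds 2 × 3.0.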

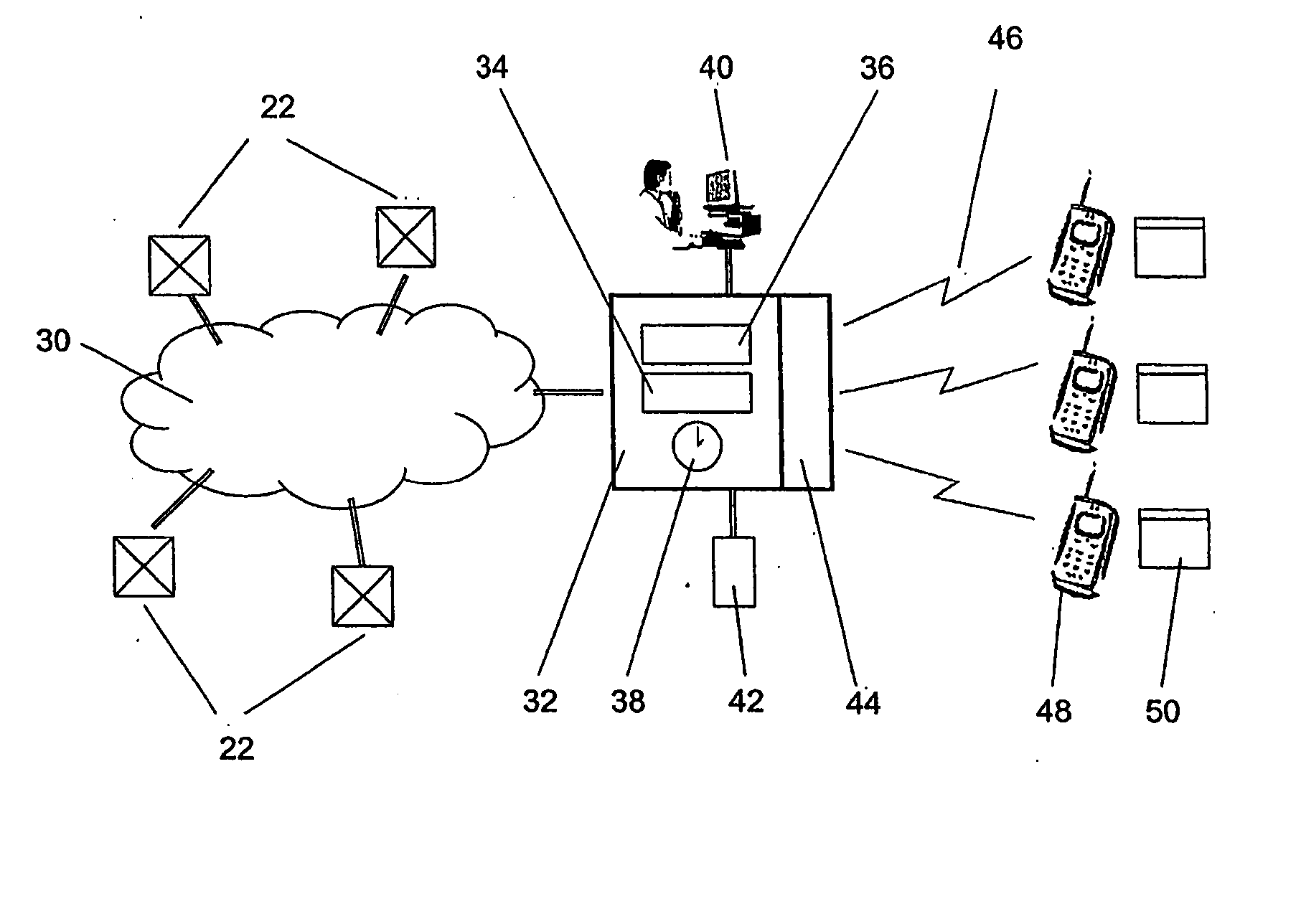

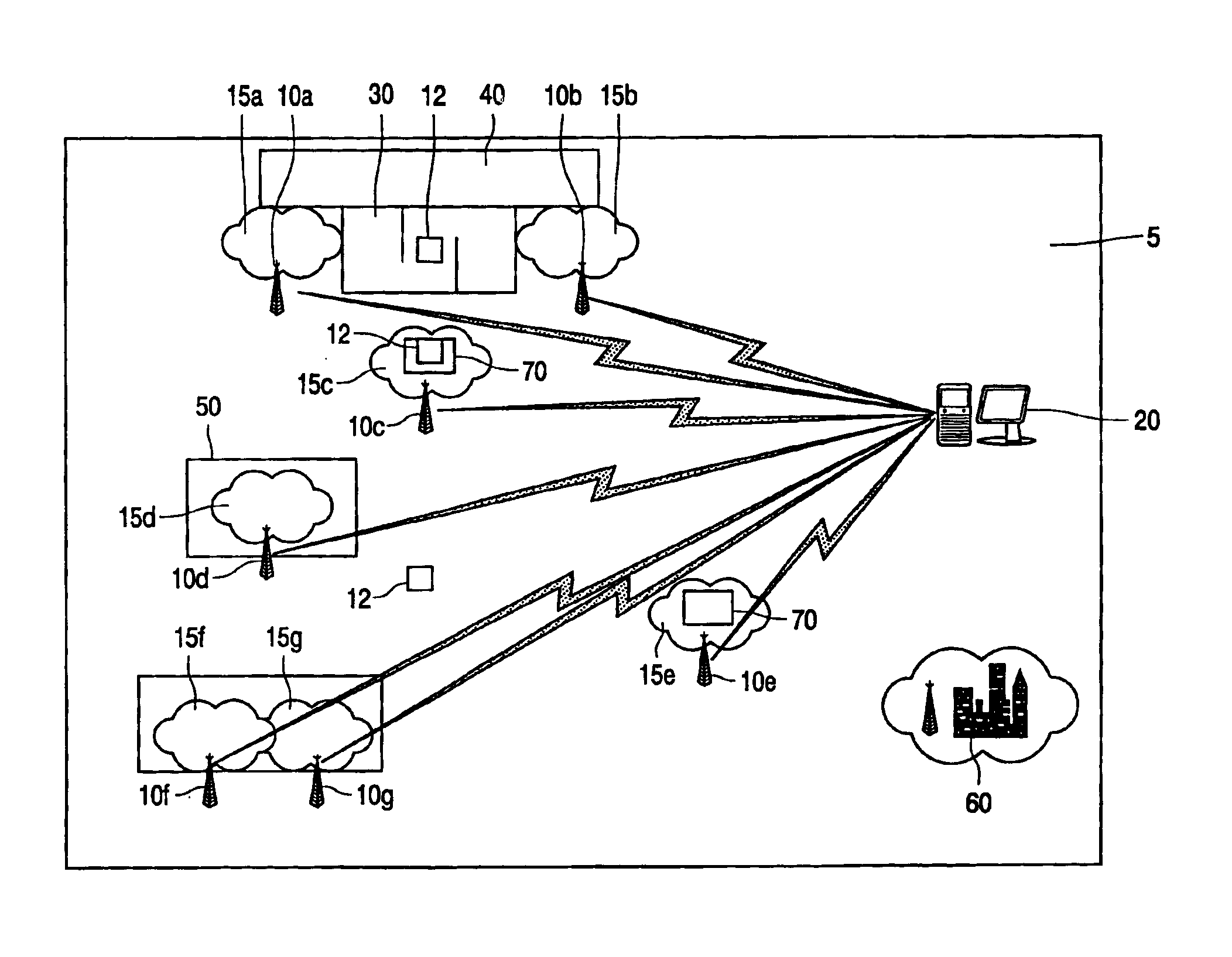

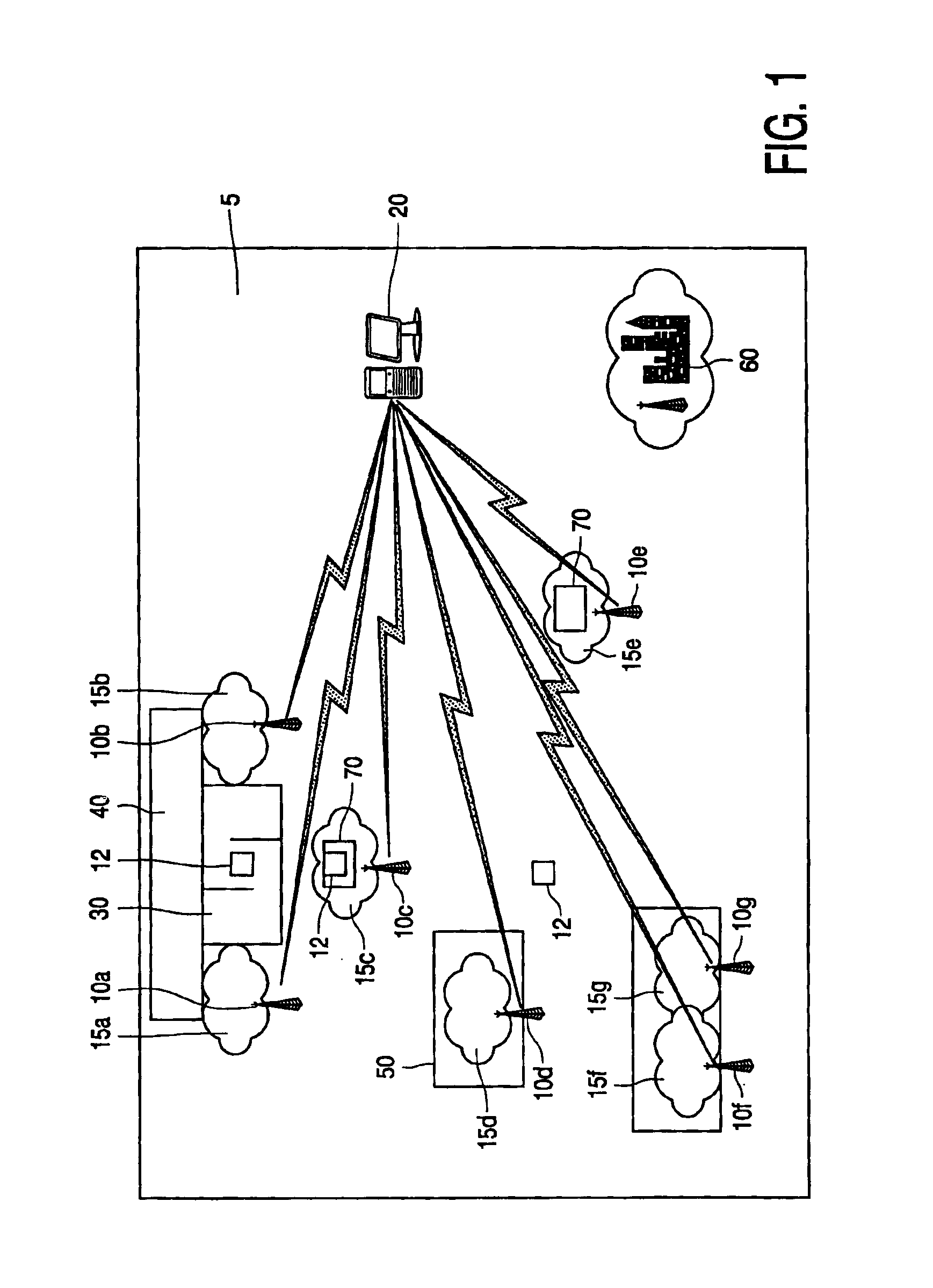

Method and system for electronic route planning and virtual queue handling

Inactive | US6889900B2 | Improve efficiency | Improve return | Forecasting | Checking apparatus | Monitoring system | Route planning

A queue monitoring system is disclosed. A detection system (10) provides at least one coverage zone (15) covering at least a part of a queuing area (30). A handset (12) is issued to a user, the detection system (10) being arranged to detect the handset (12) when it is within the coverage zone (15) and to record the user of the handset as being in the queue. Queue load is subsequently used to provide recommended itineraries to visitors via the handsets (12).

Owner:LO Q

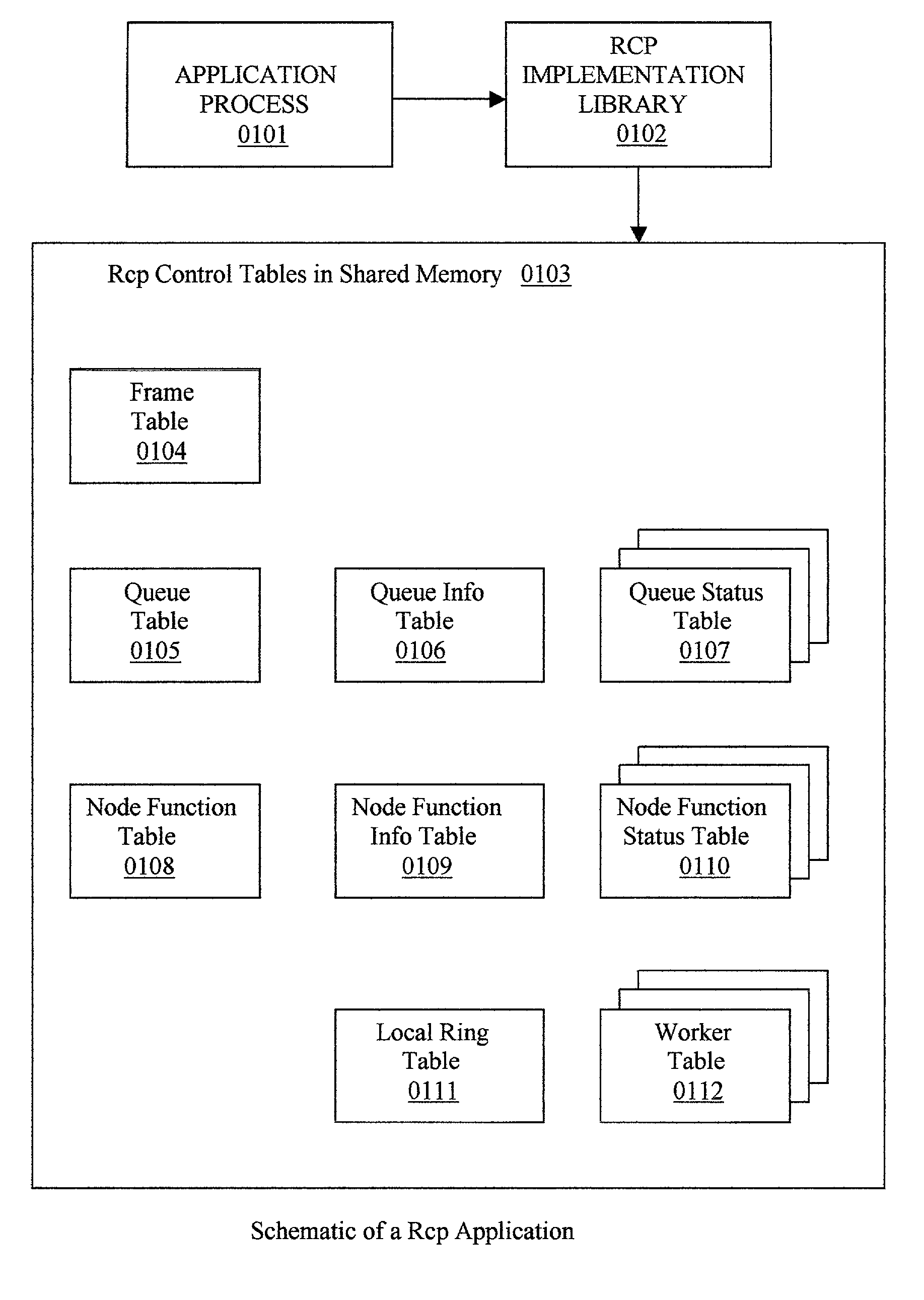

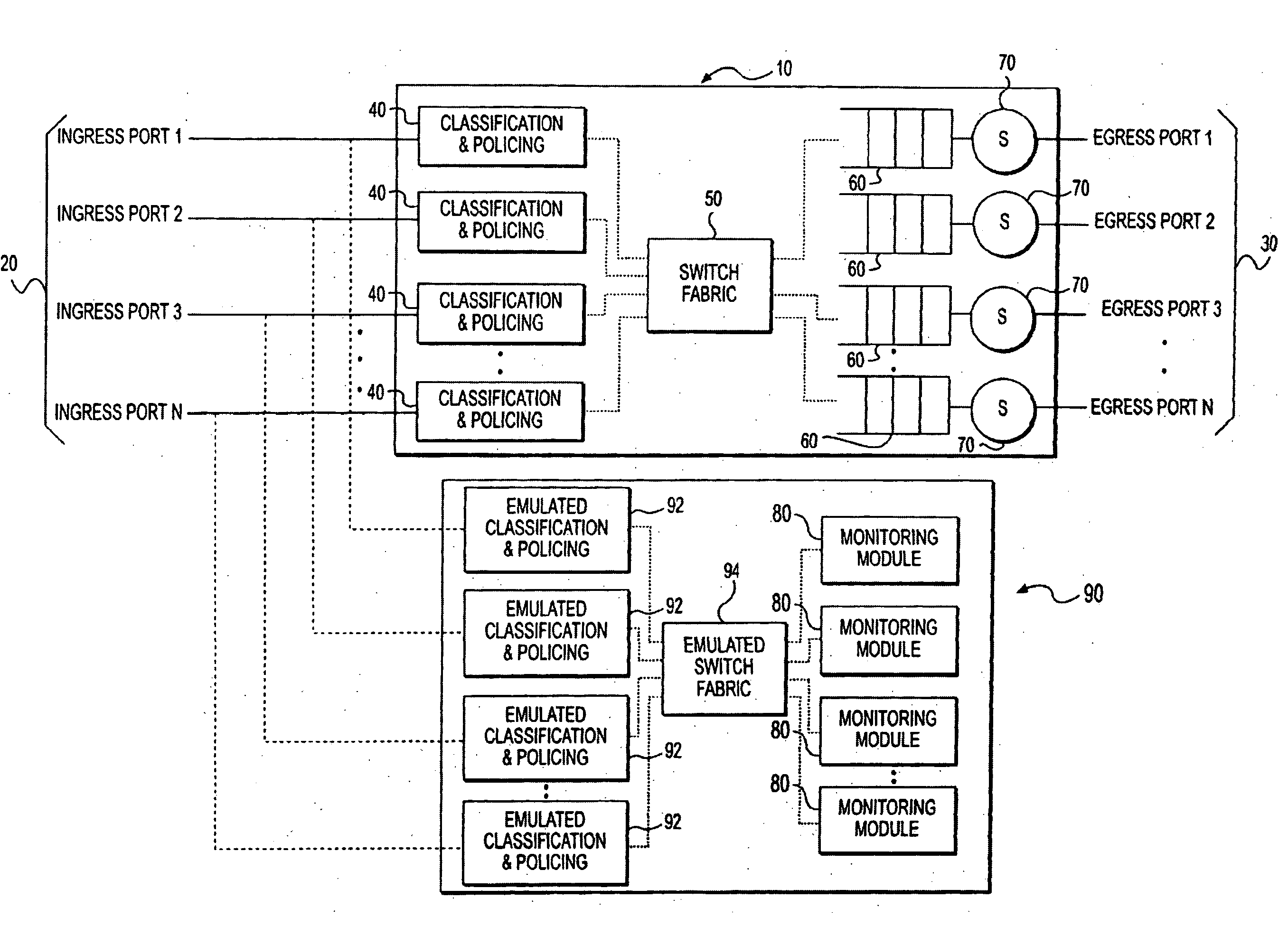

Parallel processing system design and architecture

Inactive | US20030188300A1 | Resource allocation | Specific program execution arrangements | Virtualization | Computer architecture

An architecture and design called Resource control programming (RCP), for automating the development of multithreaded applications for computing machines equipped with multiple symmetrical processors and shared memory. The Rcp runtime (0102) provides a special class of configurable software device called Rcp Gate (0600), for managing the inputs and outputs and user functions with a predefined signature called node functions (0500). Each Rcp gate manages one node function, and each node function can have one or more invocations. The inputs and outputs of the node functions are virtualized by means of virtual queues and the real queues are bound to the node function invocations, during execution. Each Rcp gate computes its efficiency during execution, which determines the efficiency at which the node function invocations are running. The Rcp Gate will schedule more node function invocations or throttle the scheduling of the node functions depending on the efficiency of the Rcp gate. Thus automatic load balancing of the node functions is provided without any prior knowledge of the load of the node functions and without computing the time taken by each of the node functions.

Owner:PATRUDU PILLA G

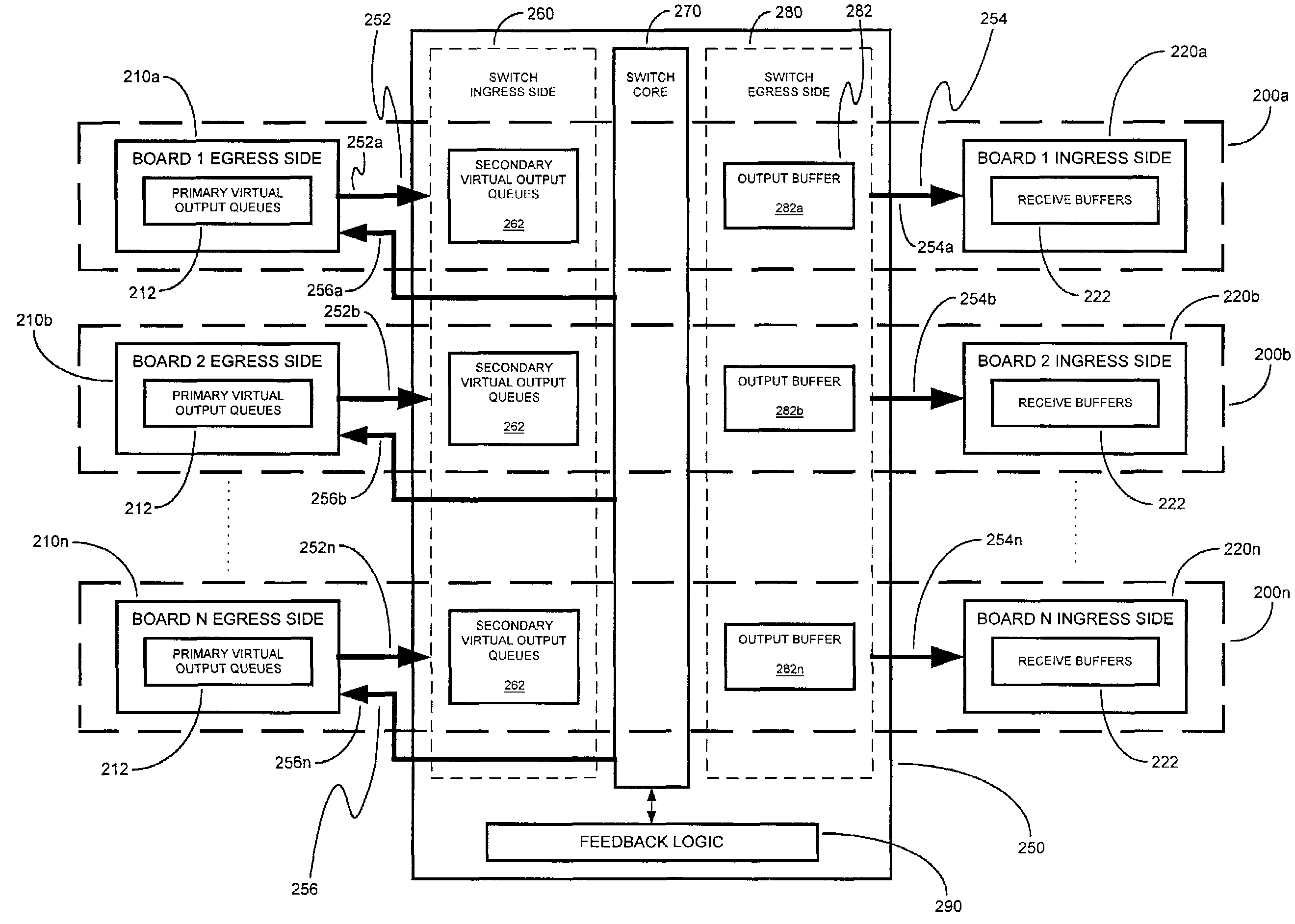

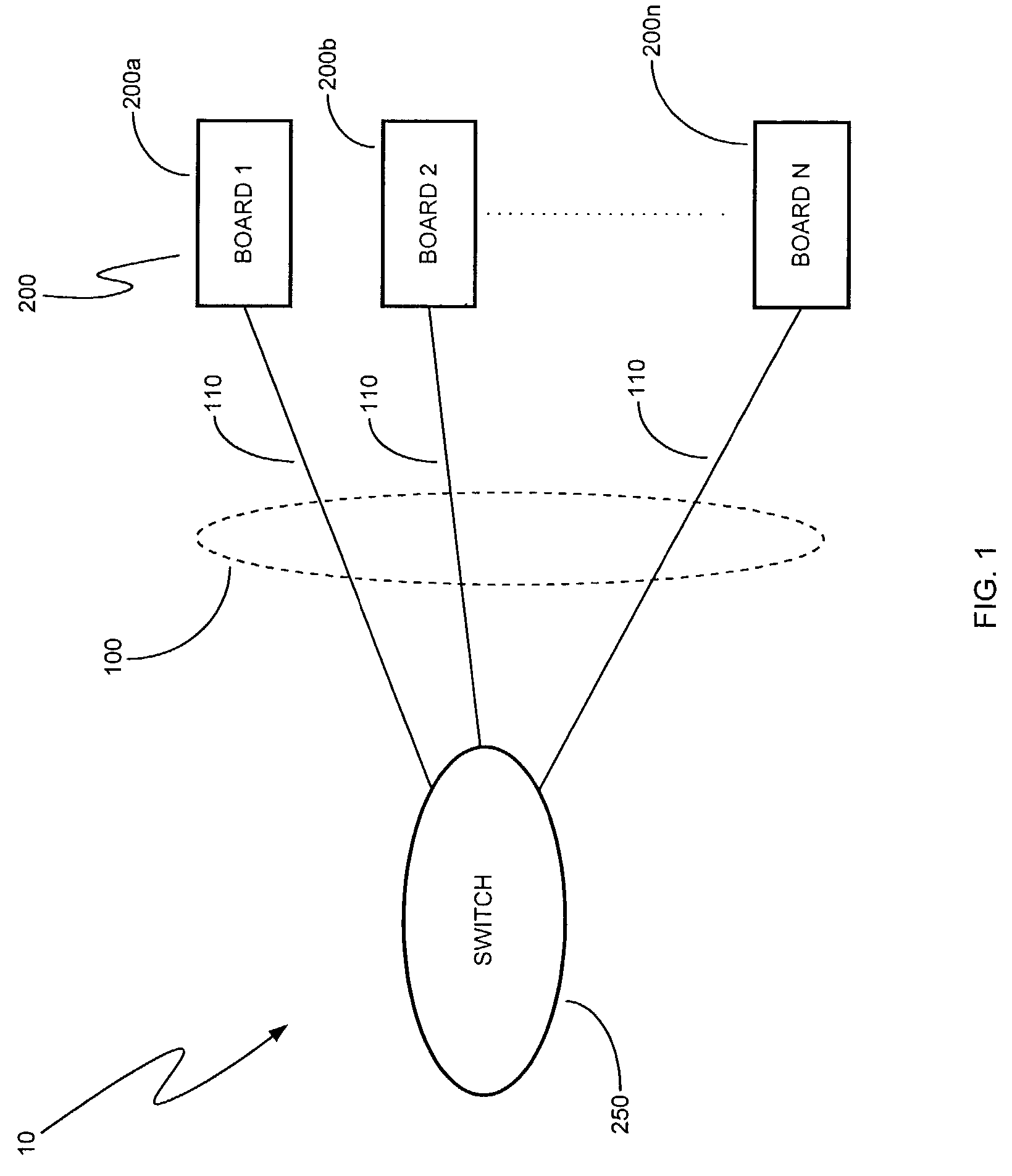

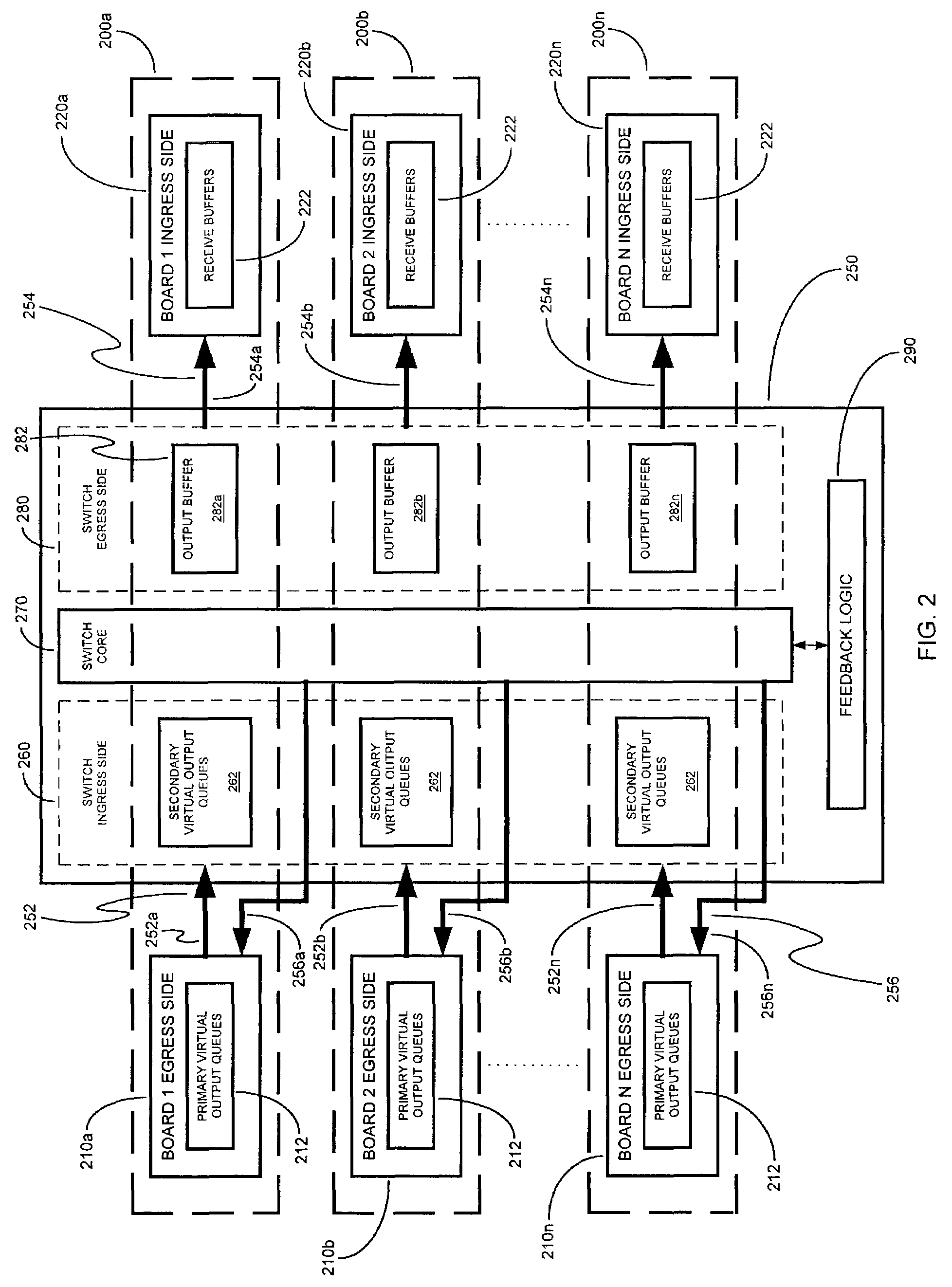

Apparatus and method for virtual output queue feedback

A method of providing virtual output queue feedback to a number of boards coupled with a switch. A number of virtual queues in the switch and/or in the boards are monitored and, in response to one of these queues reaching a threshold occupancy, a feedback signal is provided to one of the boards, the signal directing that board to alter its rate of transmission to another one of the boards. Each board includes a number of virtual output queues, which may be allocated per port and which may be further allocated on a quality of service level basis.

Owner:INTEL CORP

Virtualization of hardware queues in self-virtualizing input/output devices

Active | US8881141B2 | Multiprogramming arrangements | Software simulation/interpretation/emulation | Virtualization | Output device

Hardware transmit and/or receive queues in a self-virtualizing IO resource are virtualized to effectively abstract away resource-specific details for the self-virtualizing IO resource. By doing so, a logical partition may be permitted to configure and access a desired number of virtual transmit and/or receive queues, and have an adjunct partition that interfaces the logical partition with the self-virtualizing IO resource handle the appropriate mappings between the hardware and virtual queues.

Owner:INT BUSINESS MASCH CORP

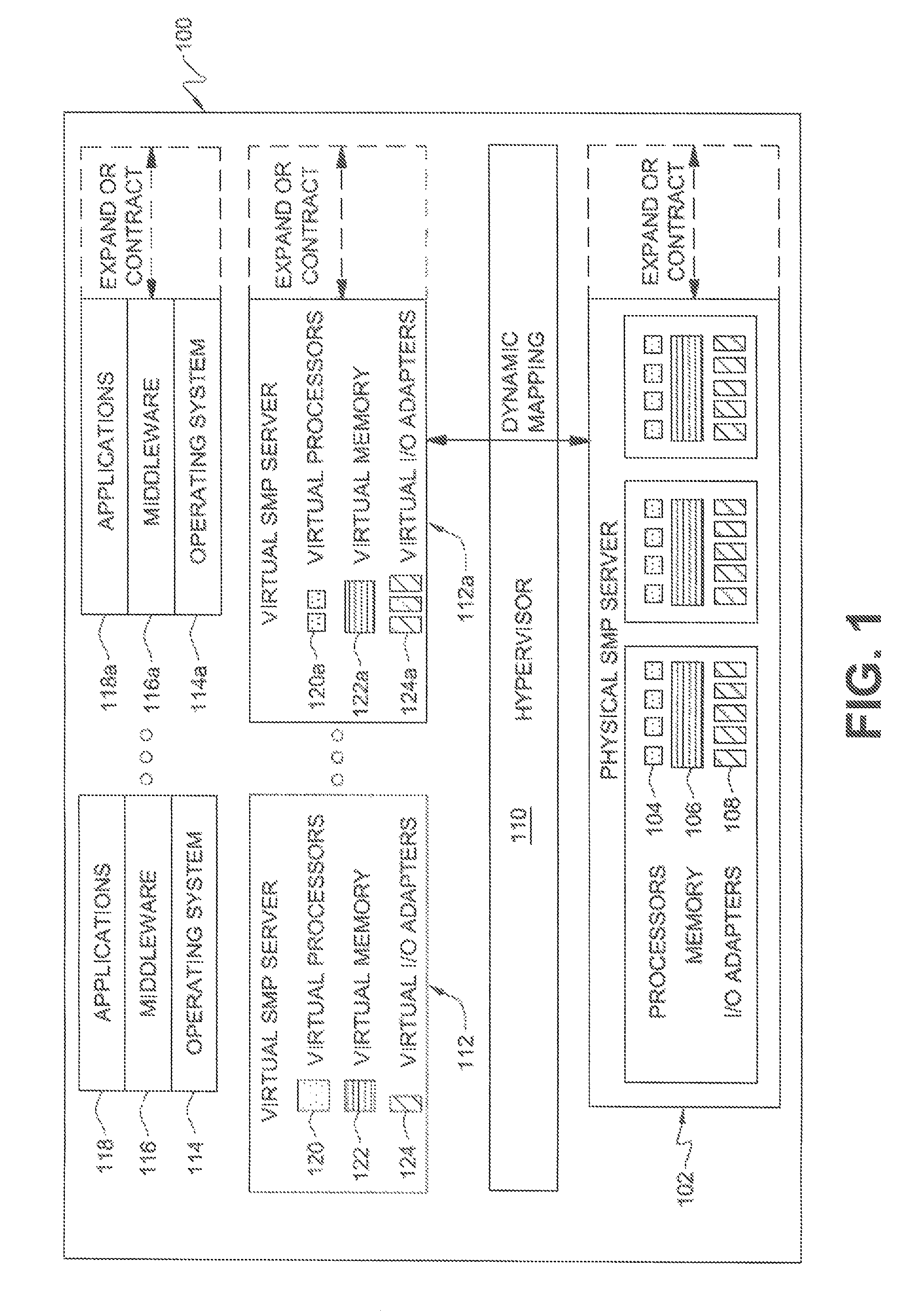

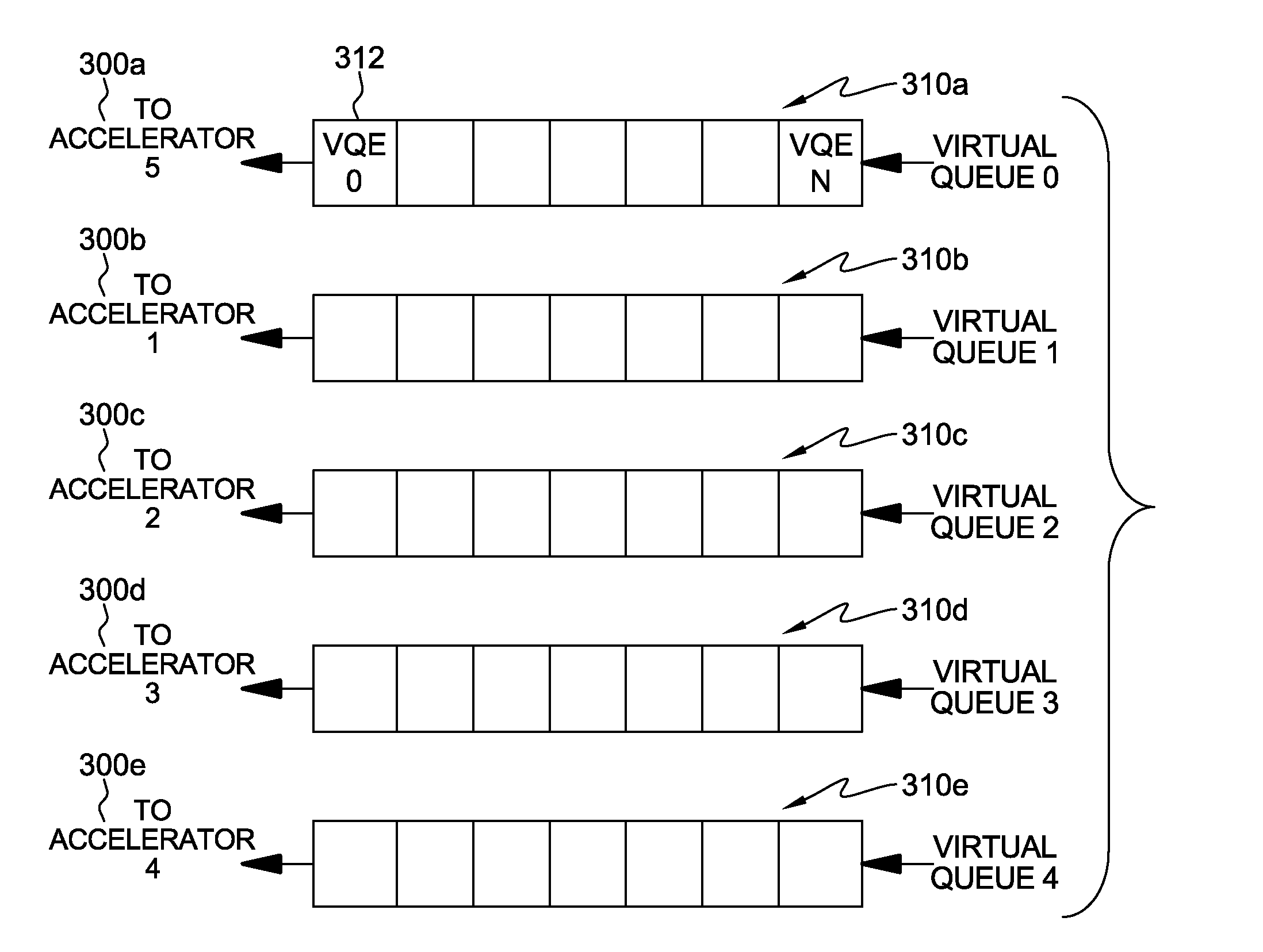

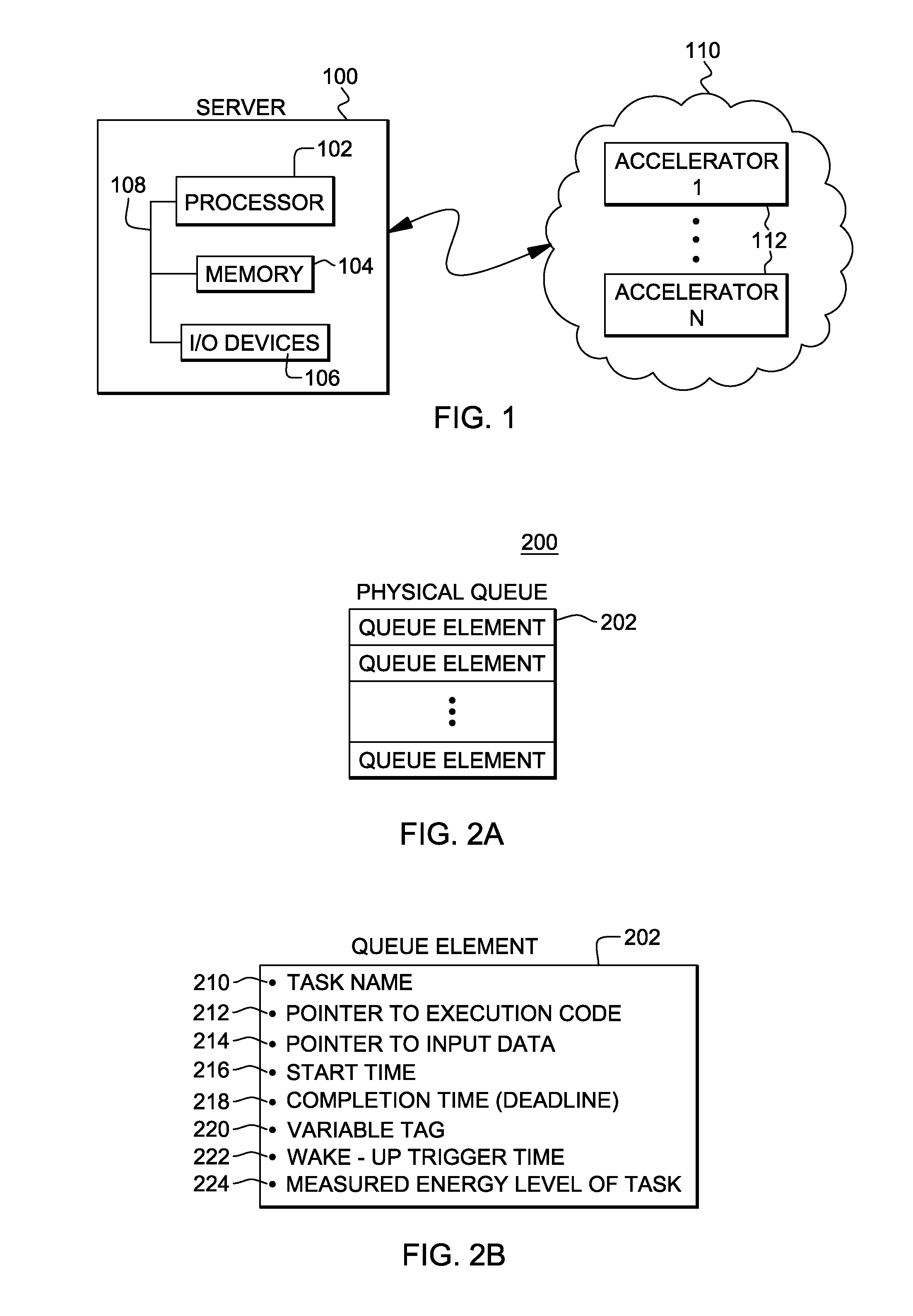

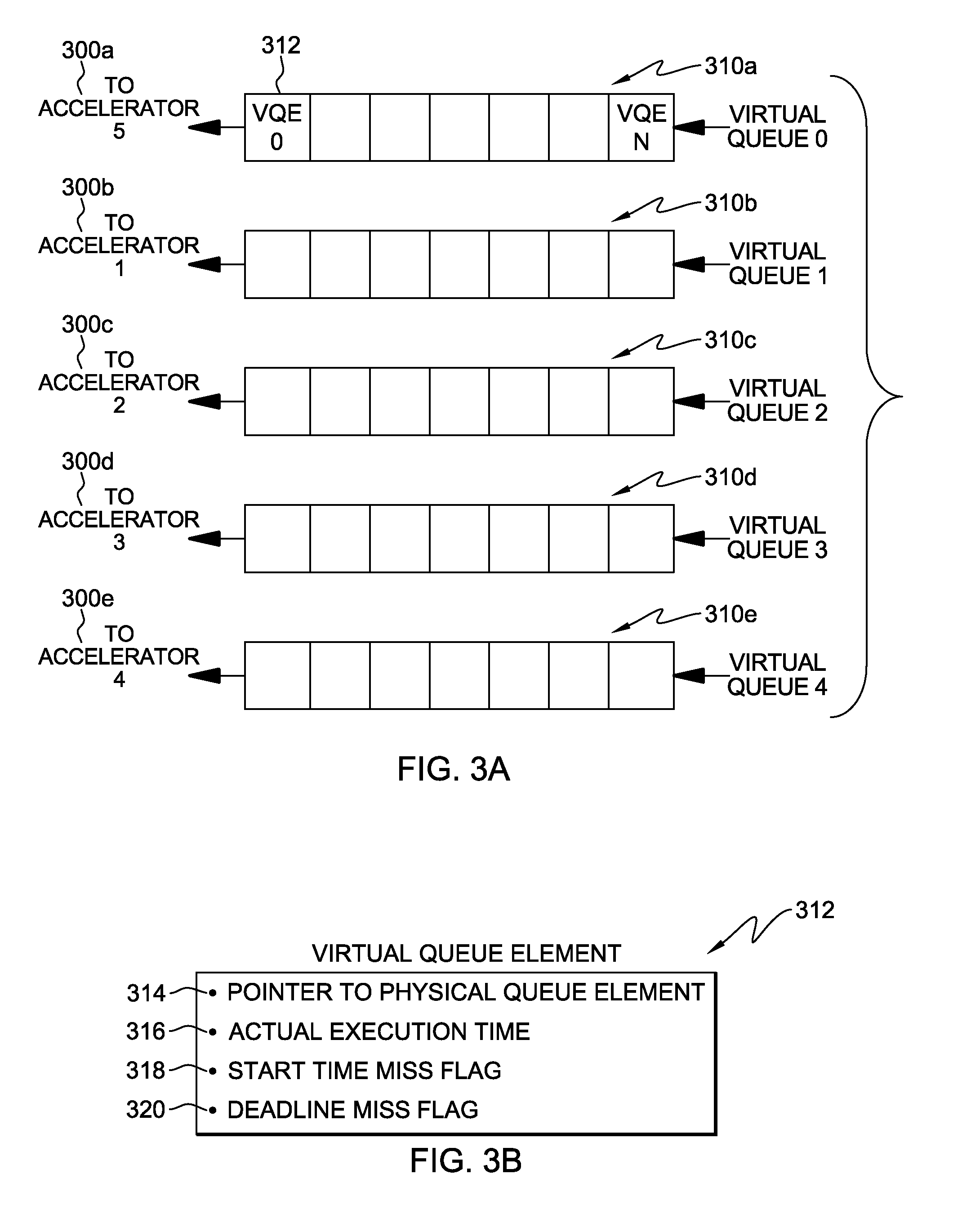

Managing accelerators of a computing environment

Active | US20110131430A1 | Facilitates managing energy consumption | Overcomes shortcoming | Energy efficient ICT | Volume/mass flow measurement | Energy consumption | Distributed computing

Accelerators of a computing environment are managed in order to optimize energy consumption of the accelerators. To facilitate the management, virtual queues are assigned to the accelerators, and a management technique is used to enqueue specific tasks on the queues for execution by the corresponding accelerators. The management technique considers various factors in determining which tasks to be placed on which virtual queues in order to manage energy consumption of the accelerators.

Owner:IBM CORP

Dynamic control of air interface throughput

Inactive | US20120106338A1 | Lower latency | Maximize download speed | Error prevention | Transmission systems | Radio networks | Air interface

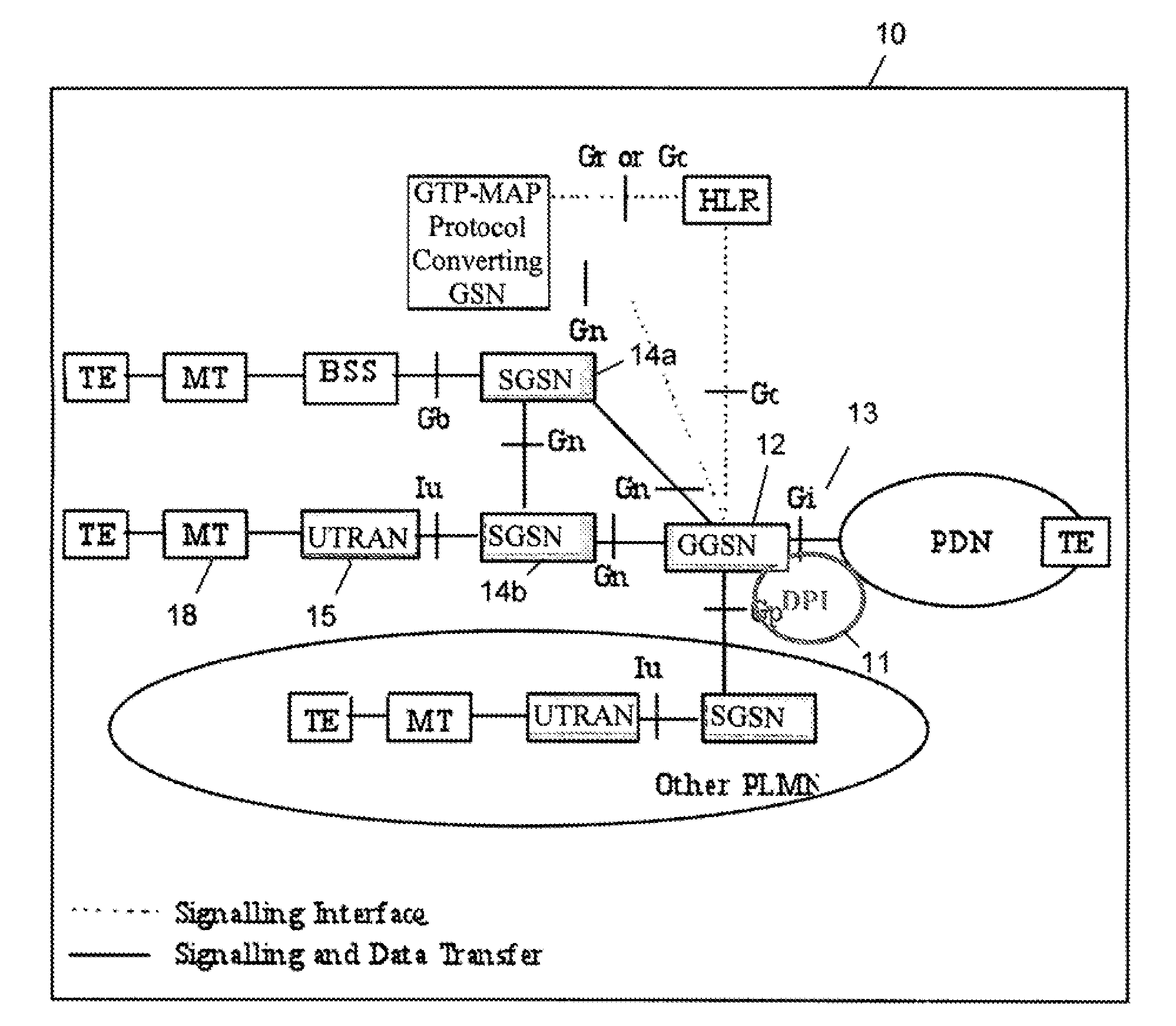

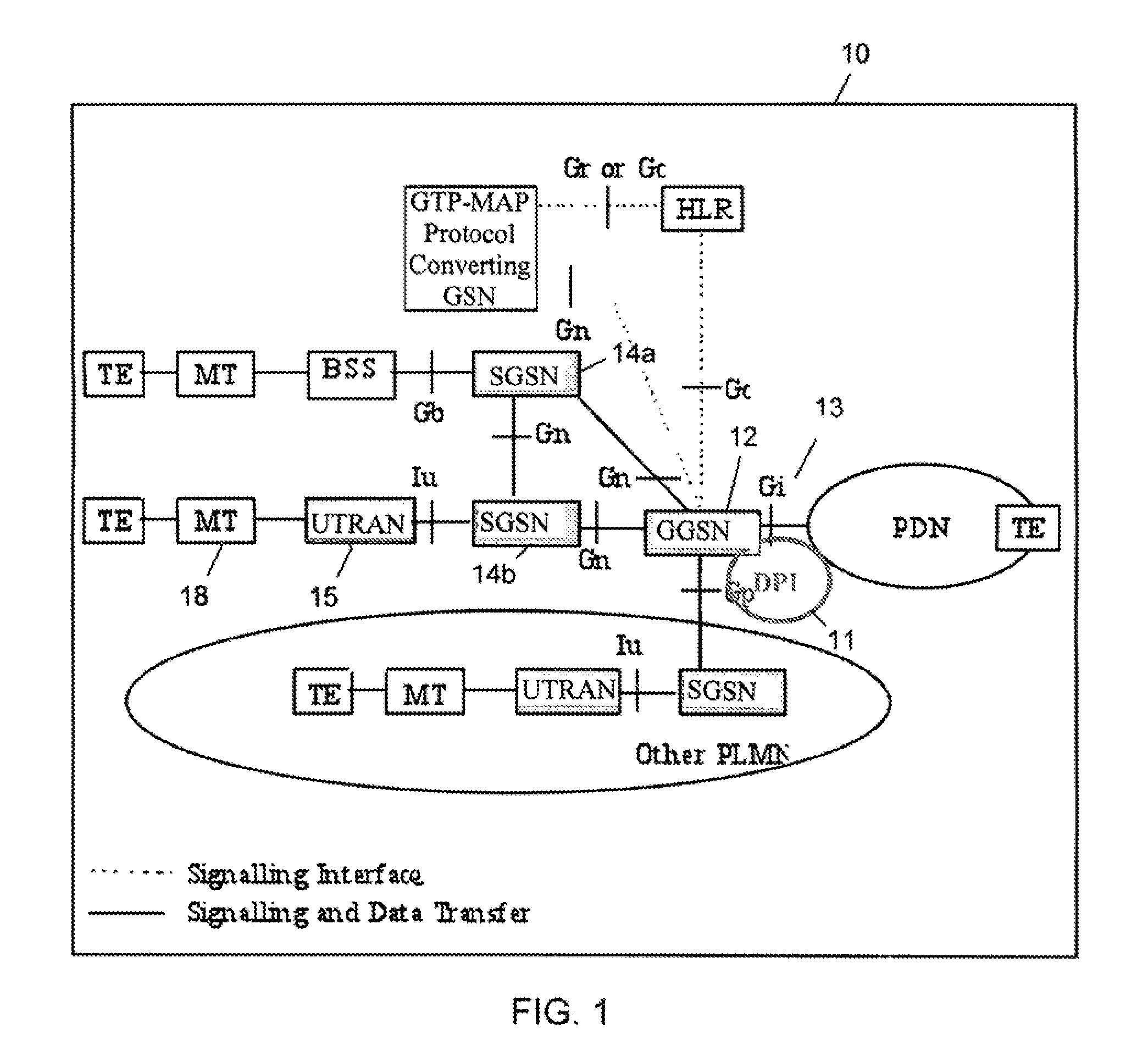

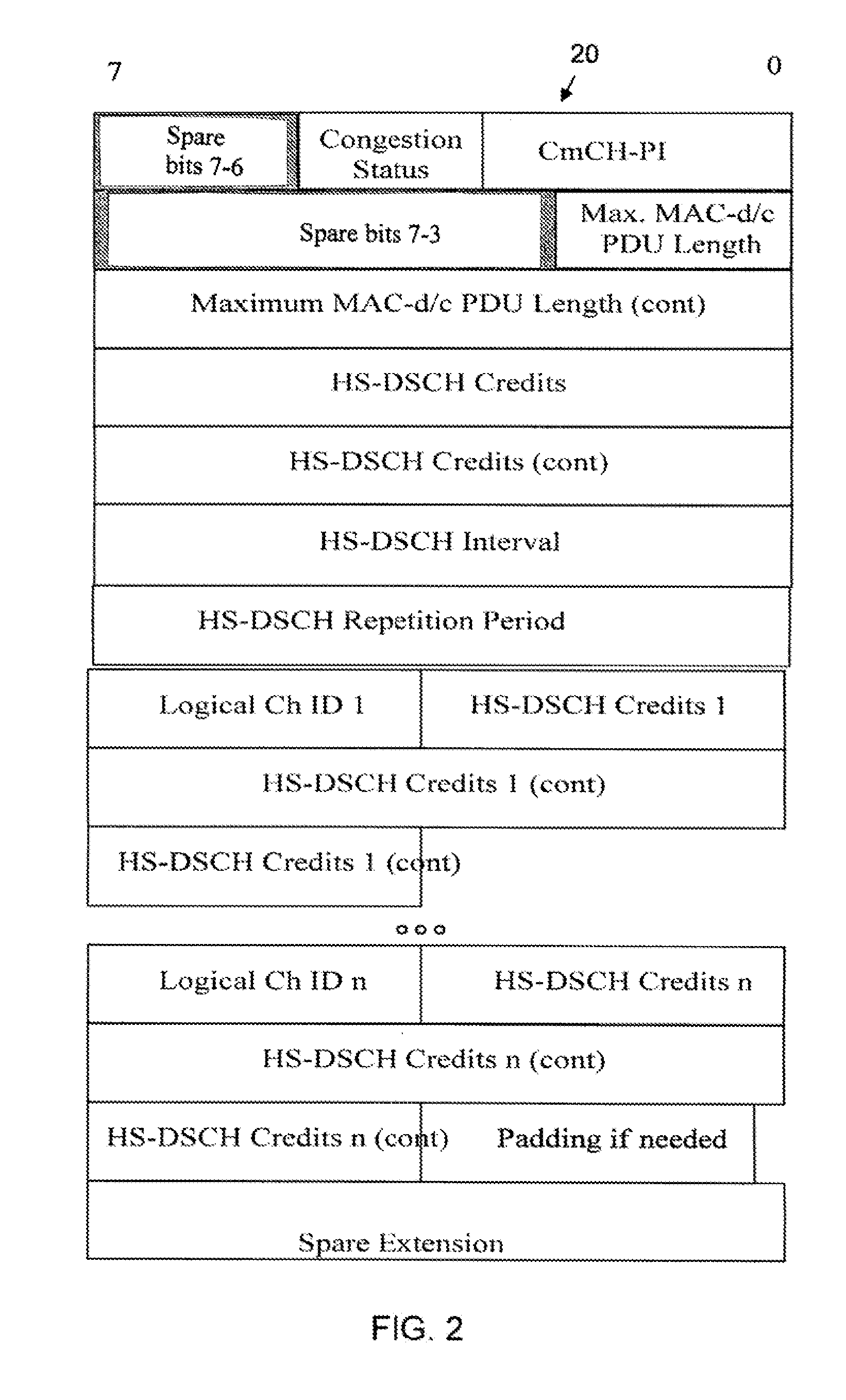

A system, method, and network node for dynamically controlling throughput over an air interface between a mobile terminal and a radio telecommunication system. A Gateway GPRS Service Node (GGSN) receives a plurality of traffic flows for the mobile terminal and uses a Deep Packet Inspection (DPI) module to determine a target delay class for each traffic flow. The GGSN signals the target delay class of each traffic flow to a Radio Network Controller (RNC) utilizing per-packet marking within a single radio access bearer (RAB). The RNC defines a separate virtual queue for each delay class on a per-RAB basis, and instructs a Node B serving the mobile terminal to do the same. The Node B services the queues according to packet transmission delays associated with each queue. A flow control mechanism in the Node B sets a packet queue length for each queue to optimize transmission performance.

Owner:TELEFON AB LM ERICSSON (PUBL)

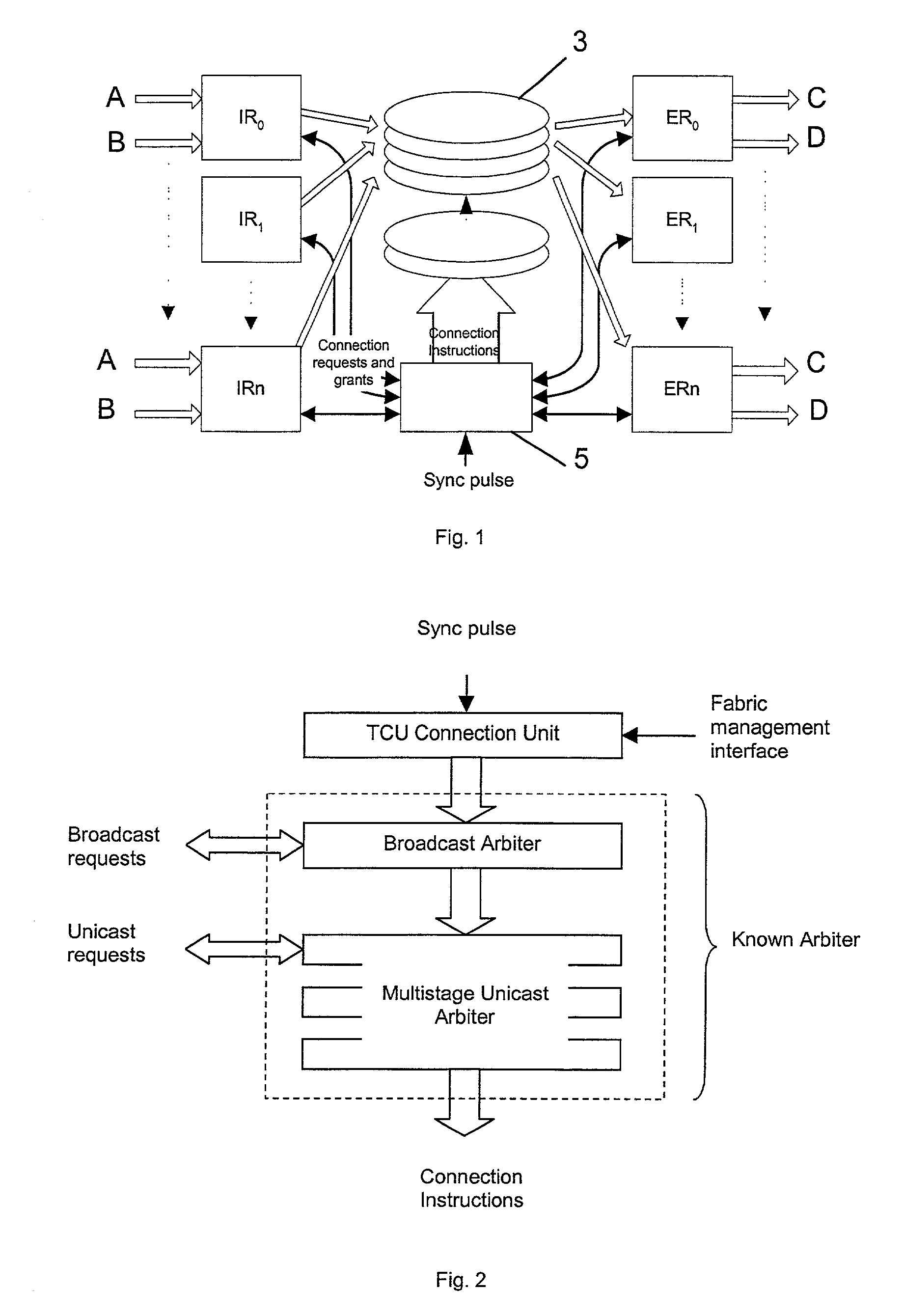

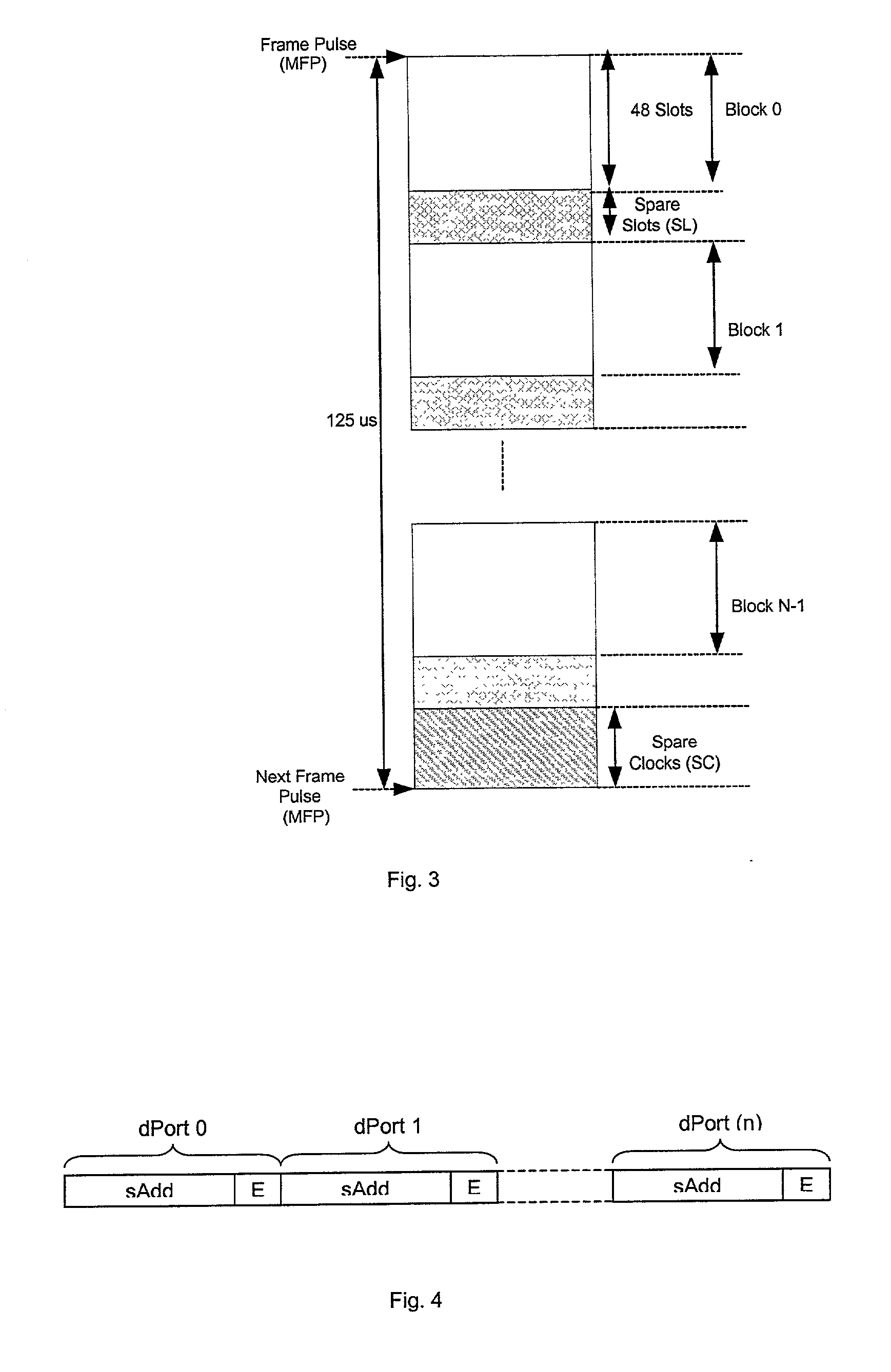

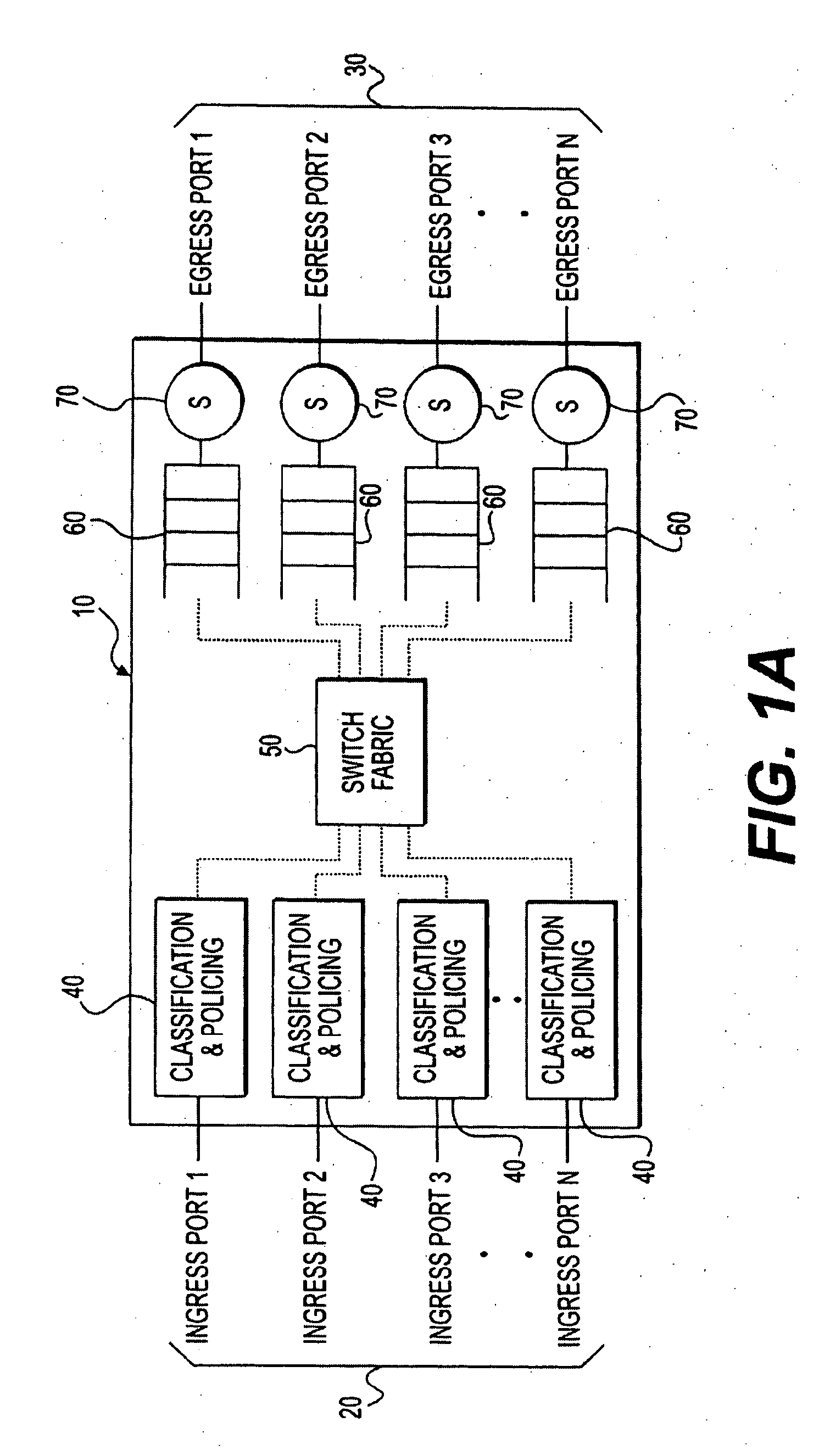

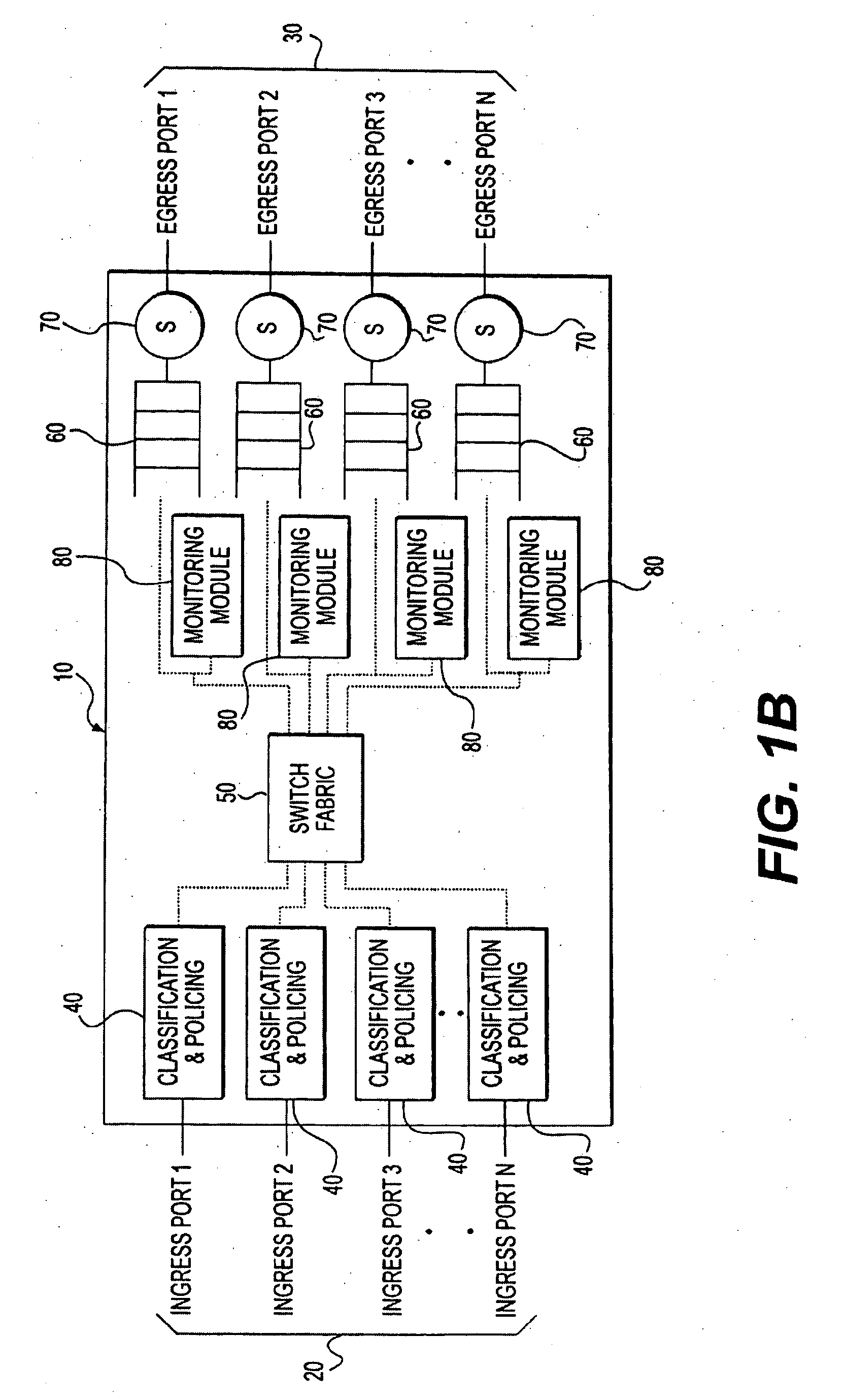

Data switch and a method for controlling the data switch

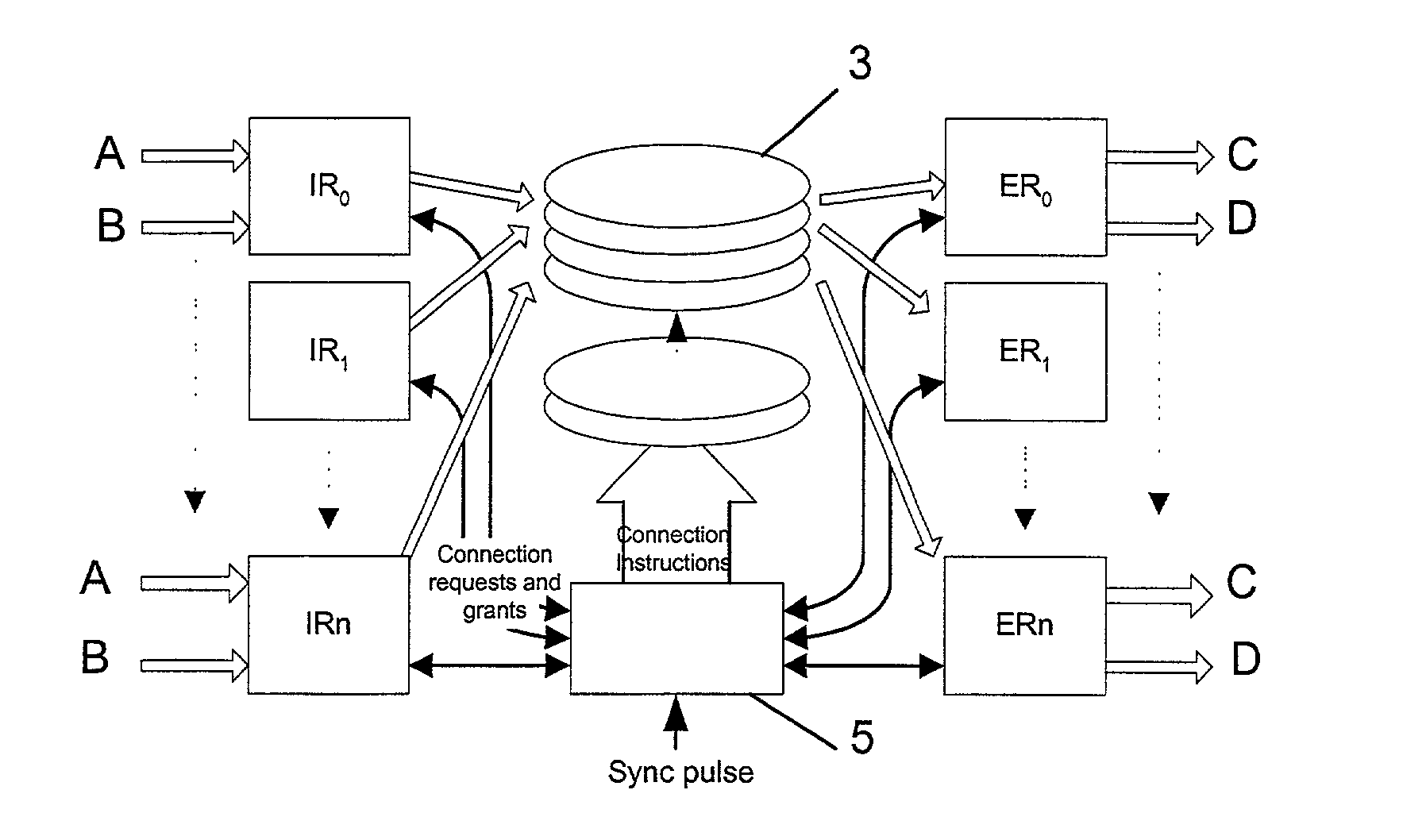

Active | US20020141397A1 | Reduce, or even eliminate, variation in the latency of the throughput | Multiplex system selection arrangements | Time-division multiplex | Control data | Egress router

A data switch is proposed of the type having virtual queue ingress routers interconnected with egress routers by way of a memoryless switching matrix controlled by a control unit which performs an arbitration process to schedule connections across the switch. This scheduling is performed to ensure that data cells which arrive at the ingress routers at unpredictable times are transmitted to the correct egress routers. Each ingress router further includes a queue for time division multiplex traffic, and at times when such traffic exists, the control unit overrides the arbitration process to allow the time division multiplex traffic to be transmitted through the switch.

Owner:MICRON TECH INC

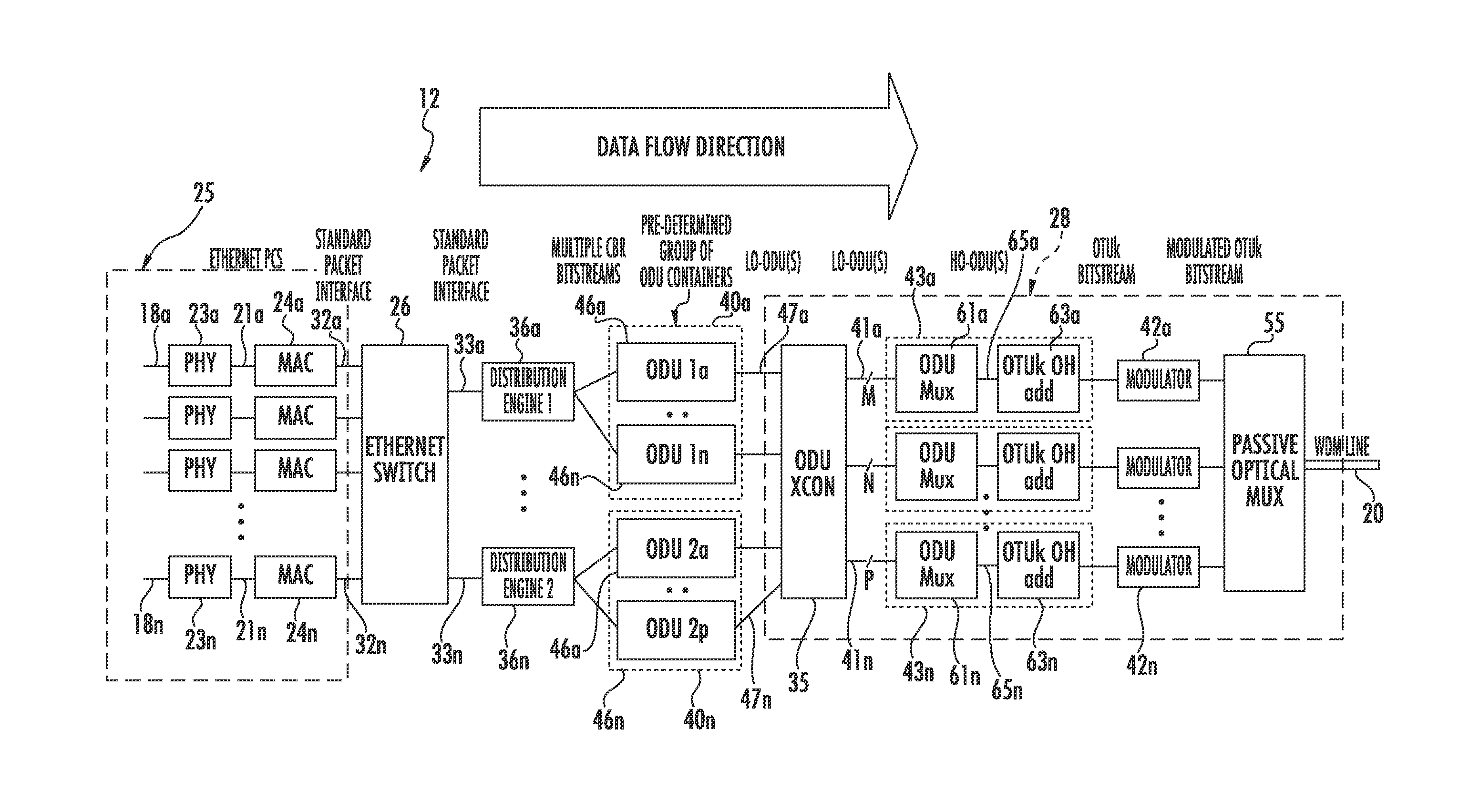

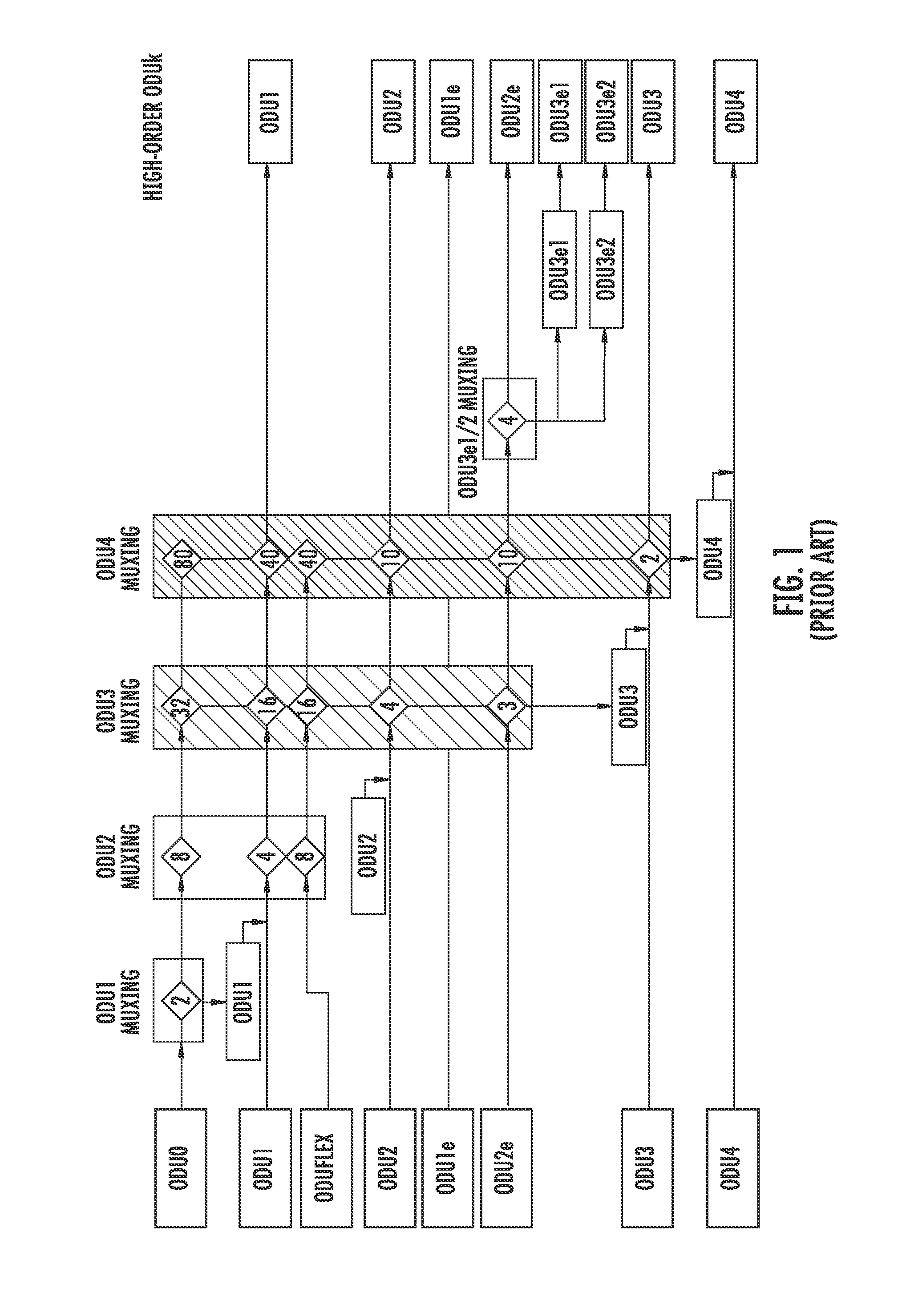

Method and apparatus for mapping traffic using virtual concatenation

A node comprising a packet network interface, an Ethernet switch, an optical port, and a distribution engine. The packet network interface is adapted to receive a packet having a destination address and a first bit and a second bit. The Ethernet switch is adapted to receive and forward the packet into a virtual queue associated with a destination. The optical port has circuitry for transmitting to a plurality of circuits. The distribution engine has one or more processors configured to execute processor executable code to cause the distribution engine to (1) read a first bit and a second bit from the virtual queue, and (2) provide the first bit and the second bit to the at least one optical port for transmission to a first predetermined group of the plurality of circuits.

Owner:INFINERA CORP

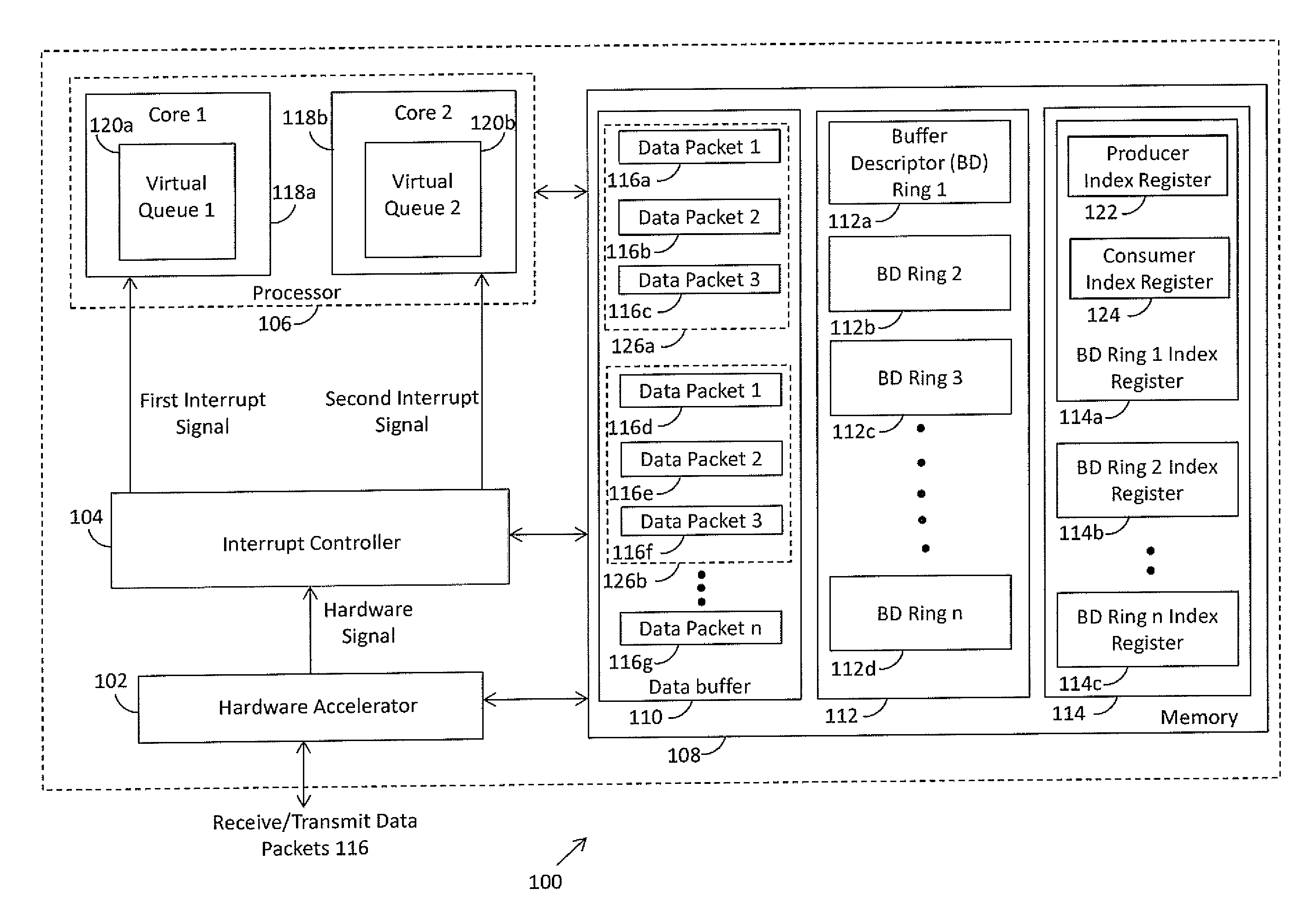

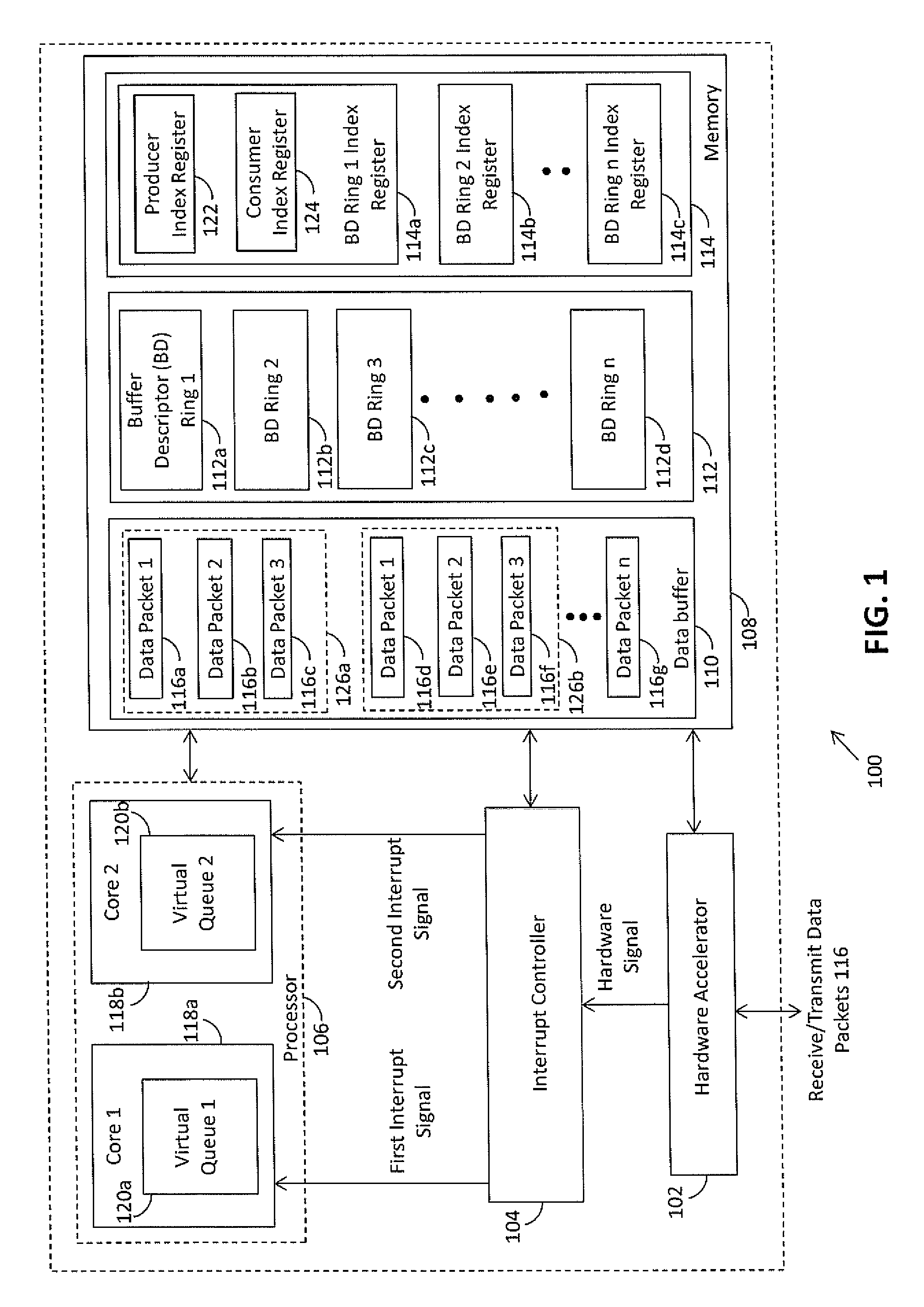

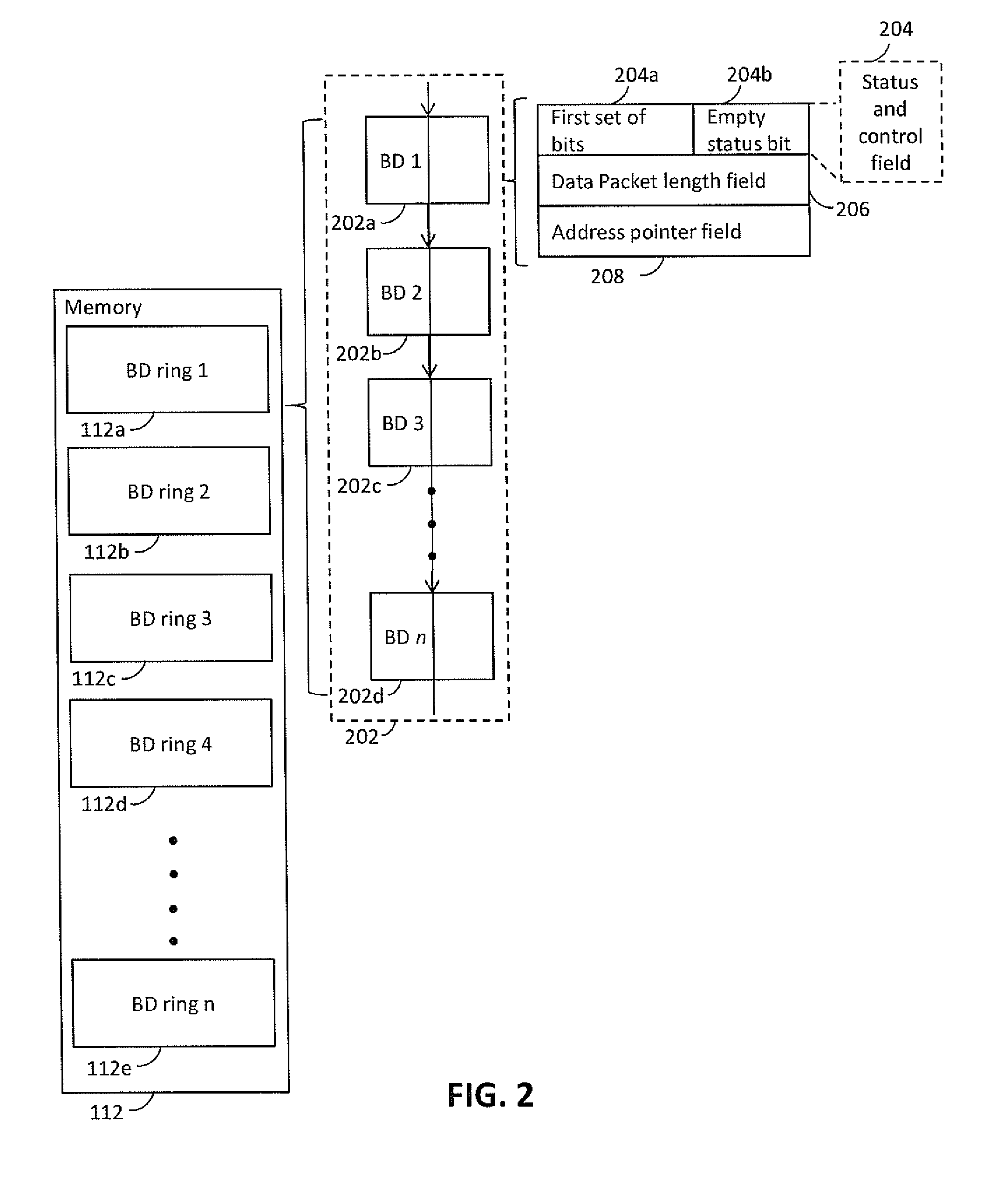

Multi-core processor for managing data packets in communication network

A system for managing data packets has multiple cores, a data buffer, a hardware accelerator, and an interrupt controller. The interrupt controller transmits a first interrupt signal to a first one of the cores based on a first hardware signal received from the hardware accelerator. The first core creates a copy of buffer descriptors (BD) of a buffer descriptor ring that correspond to the data packets in the data buffer in a first virtual queue and indicates to the hardware accelerator that the data packets are processed. If there are additional data packets, the interrupt controller transmits a second interrupt signal to a second core, which performs the same steps as performed by the first core. The first and the second cores simultaneously process the data packets associated with the BDs in the first and second virtual queues, respectively.

Owner:NXP USA INC

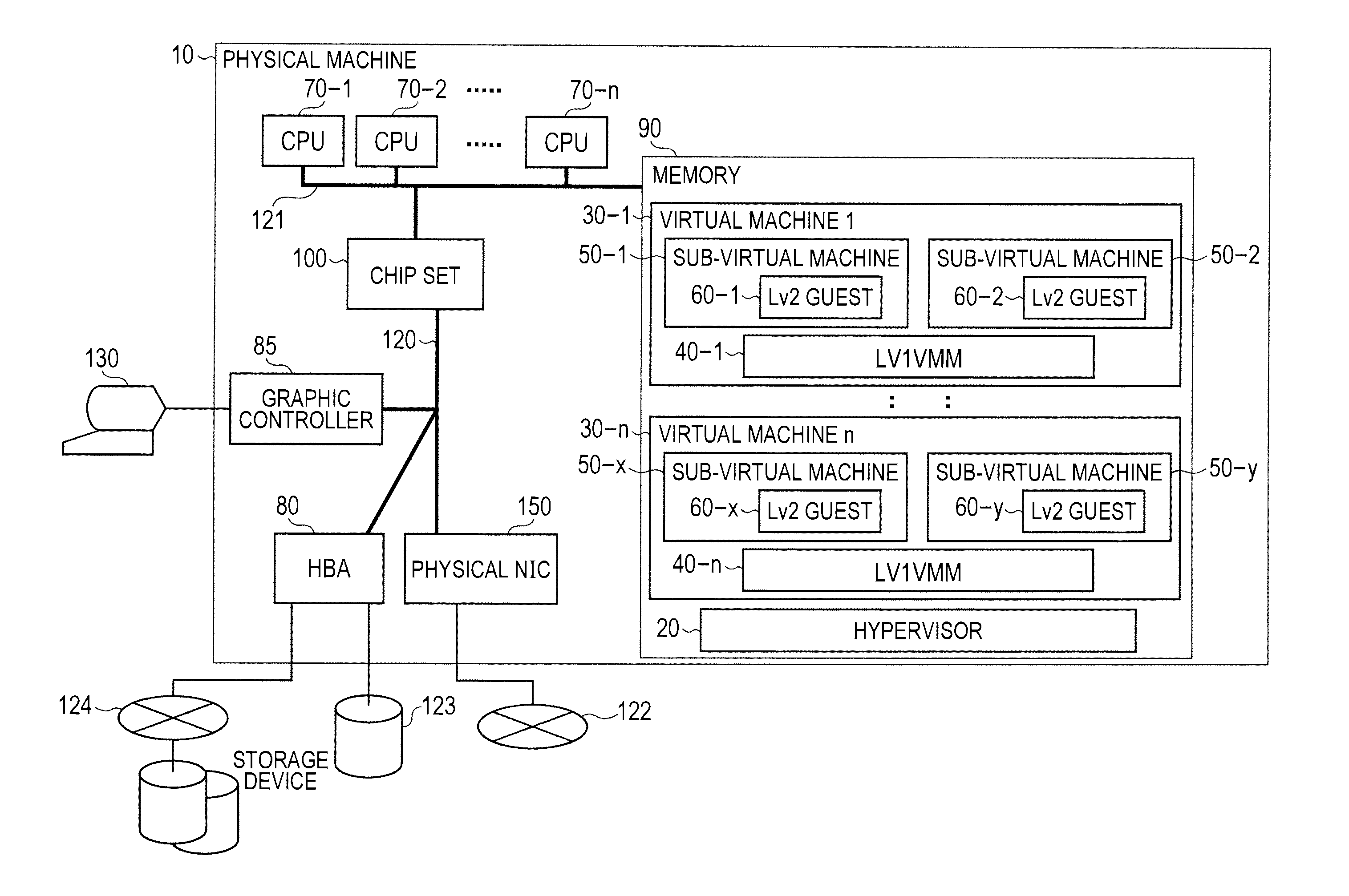

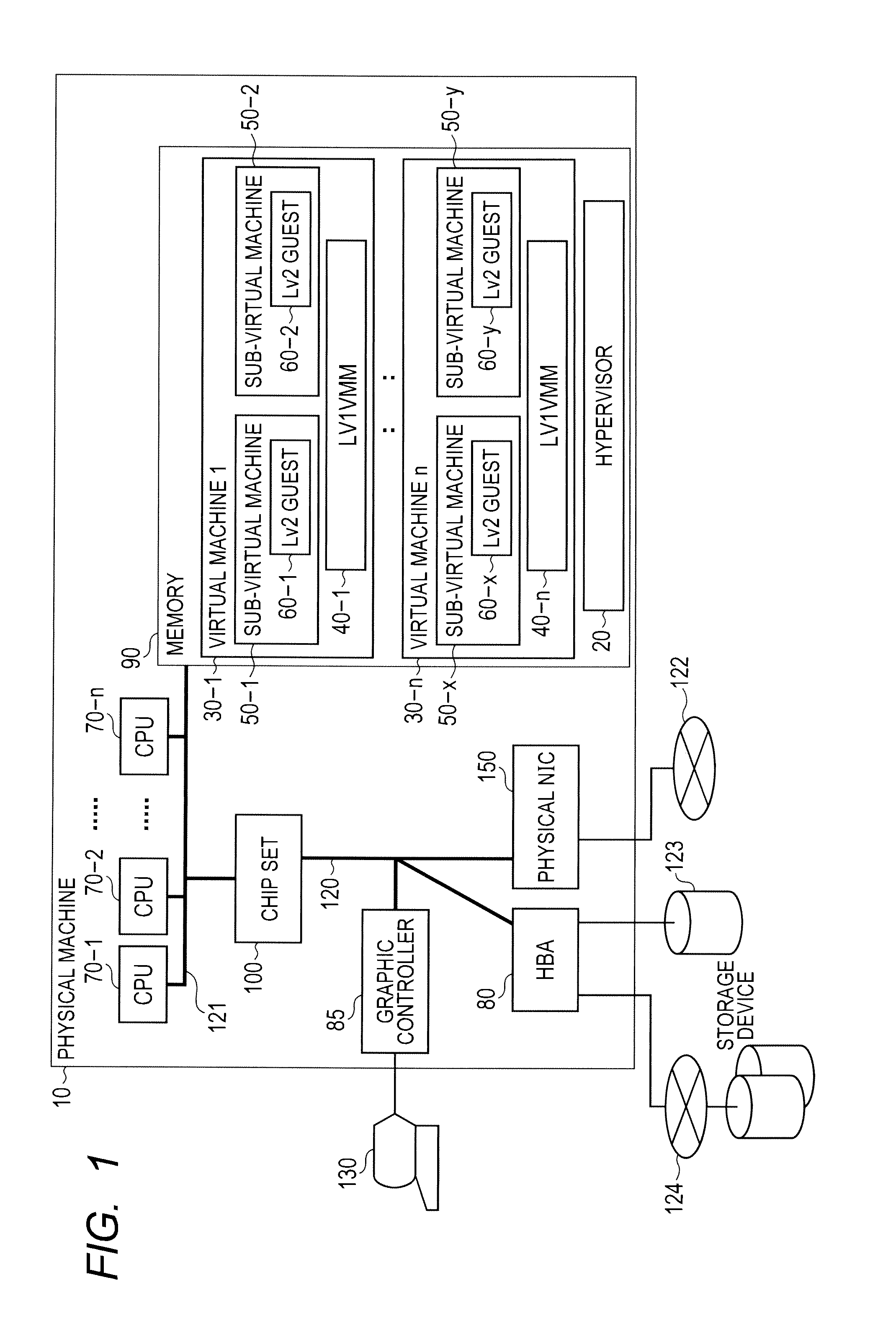

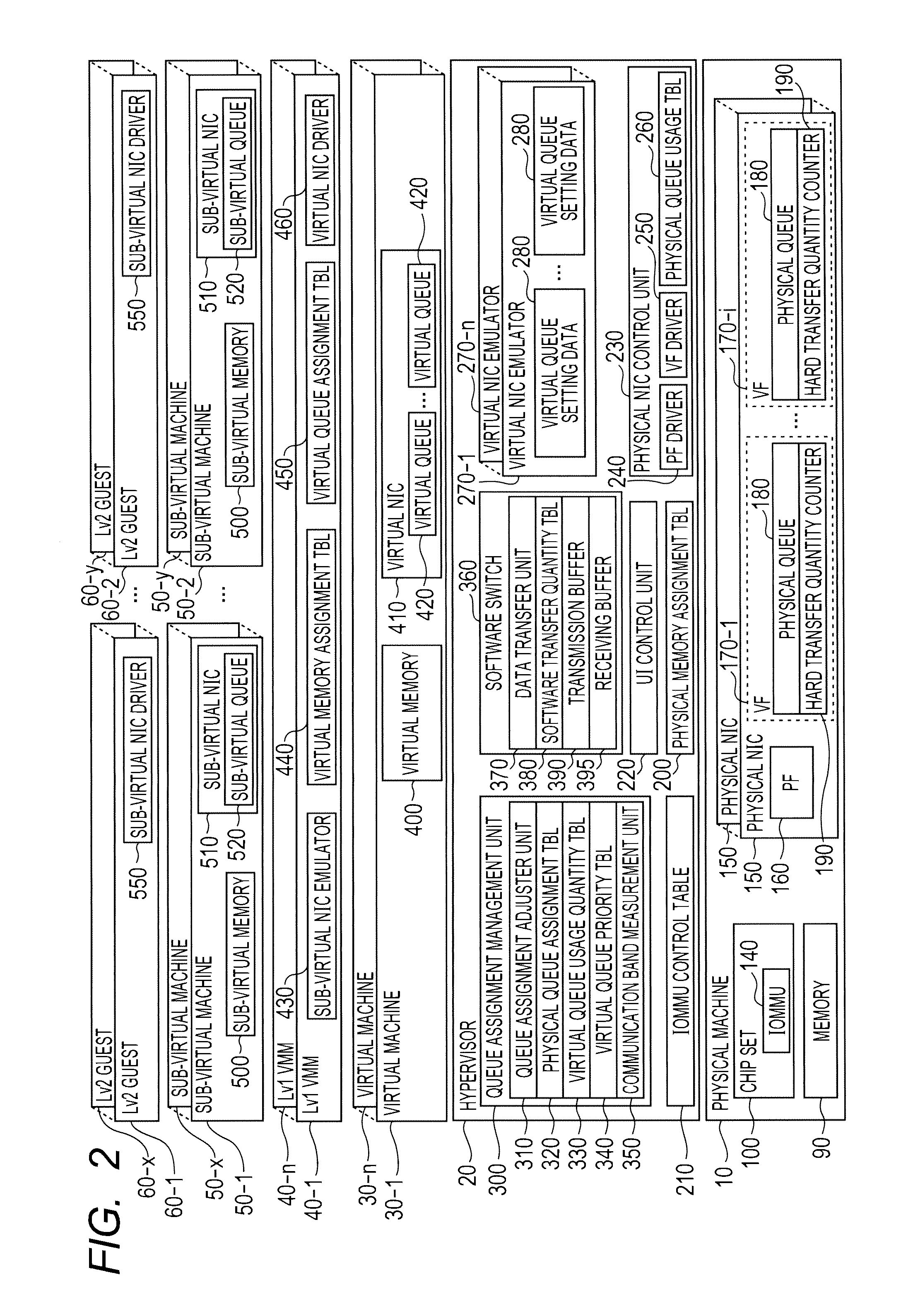

Virtual machine control method and virtual machine

Inactive | US20130247056A1 | Reduce load | Hinder its implementation | Program initiation/switching | Resource allocation | Term memory | Computer engineering

A virtual machine control method and a virtual machine having the dual objectives of utilizing NIC on a virtual machine that creates sub-virtual machines operated by a VMM on virtual machines generated by a hypervisor to avoid software copying by the VMM and to prevent band deterioration during live migration or adding sub-virtual machines. In a virtual machine operating plural virtualization software on a physical machine including a CPU, memory, and multi-queue NIC; a virtual multi-queue NIC is loaded in the virtual machine, for virtual queues included in the virtual multi-queue NIC, physical queues configuring the multi-queue NIC are assigned to virtual queues where usage has started, and the physical queues are allowed direct access to the virtual machine memory.

Owner:HITACHI LTD

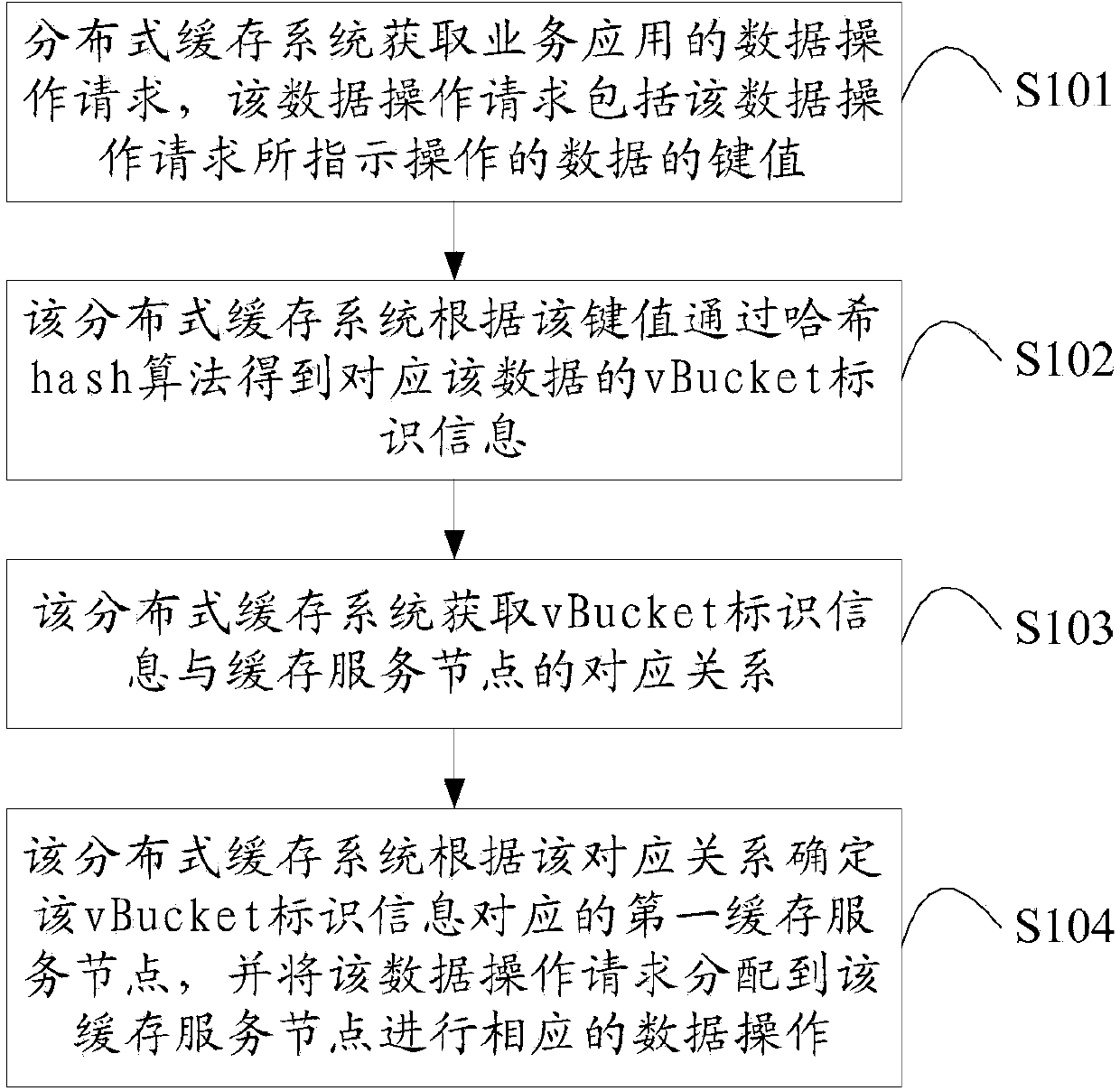

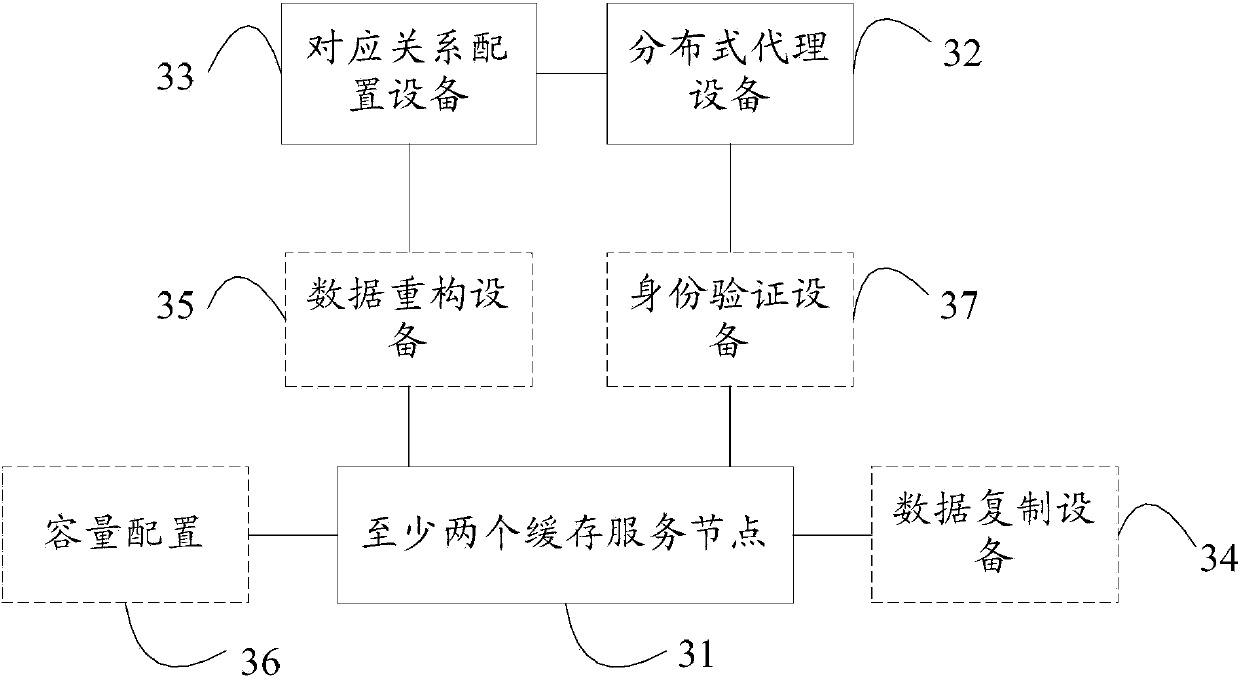

Distributed caching method and system

The embodiment of the invention provides a distributed caching method and a distributed caching system, and relates to the field of data storage. The data access speed of the distributed caching system is increased. The method comprises the following steps that the distributed caching system acquires a data operation request of a service application, and performs hash operation according to a key value of data indicated to be operated by the data operation request to obtain the identification information of a virtual queue vBucket corresponding to the data; the distributed caching system acquires a corresponding relationship between the identification information of the vBucket and a caching service node, determines a first caching service node corresponding to the identification information of the vBucket according to the corresponding relationship, and allocates the data operation request to the first caching service node for corresponding data operation. The embodiment of the invention is used for distributed data caching.

Owner:LETV CLOUD COMPUTING CO LTD
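
The two-step lookup in this abstract (hash the key to a vBucket, then map the vBucket to a caching service node) can be sketched in Python as follows. The CRC32 hash, the bucket count of 1024, the modulo-based initial assignment, and the node names are all assumptions for illustration; the abstract does not specify them.

```python
import zlib

NUM_VBUCKETS = 1024  # assumed bucket count


def vbucket_id(key):
    # Hash the data key to a vBucket identifier (CRC32 is an assumed choice).
    return zlib.crc32(key.encode("utf-8")) % NUM_VBUCKETS


def build_map(nodes):
    # Correspondence between vBucket IDs and caching service nodes;
    # the modulo spread is a placeholder for a real assignment policy.
    return {vb: nodes[vb % len(nodes)] for vb in range(NUM_VBUCKETS)}


def route(key, vbucket_map):
    # Determine the caching service node that should handle this key.
    return vbucket_map[vbucket_id(key)]
```

Because keys map to vBuckets and only vBuckets map to nodes, moving data between nodes means updating entries in the map rather than rehashing every key.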

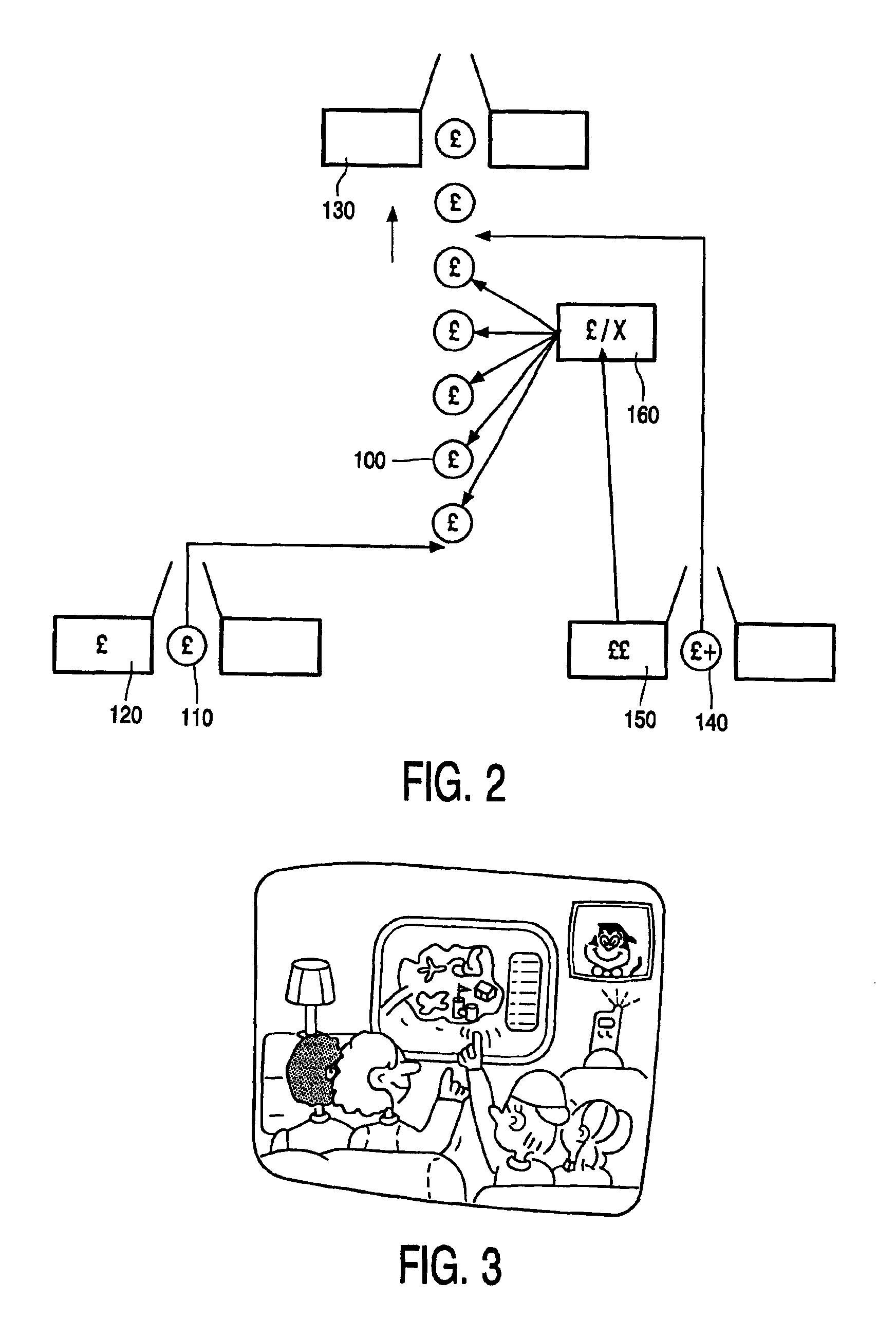

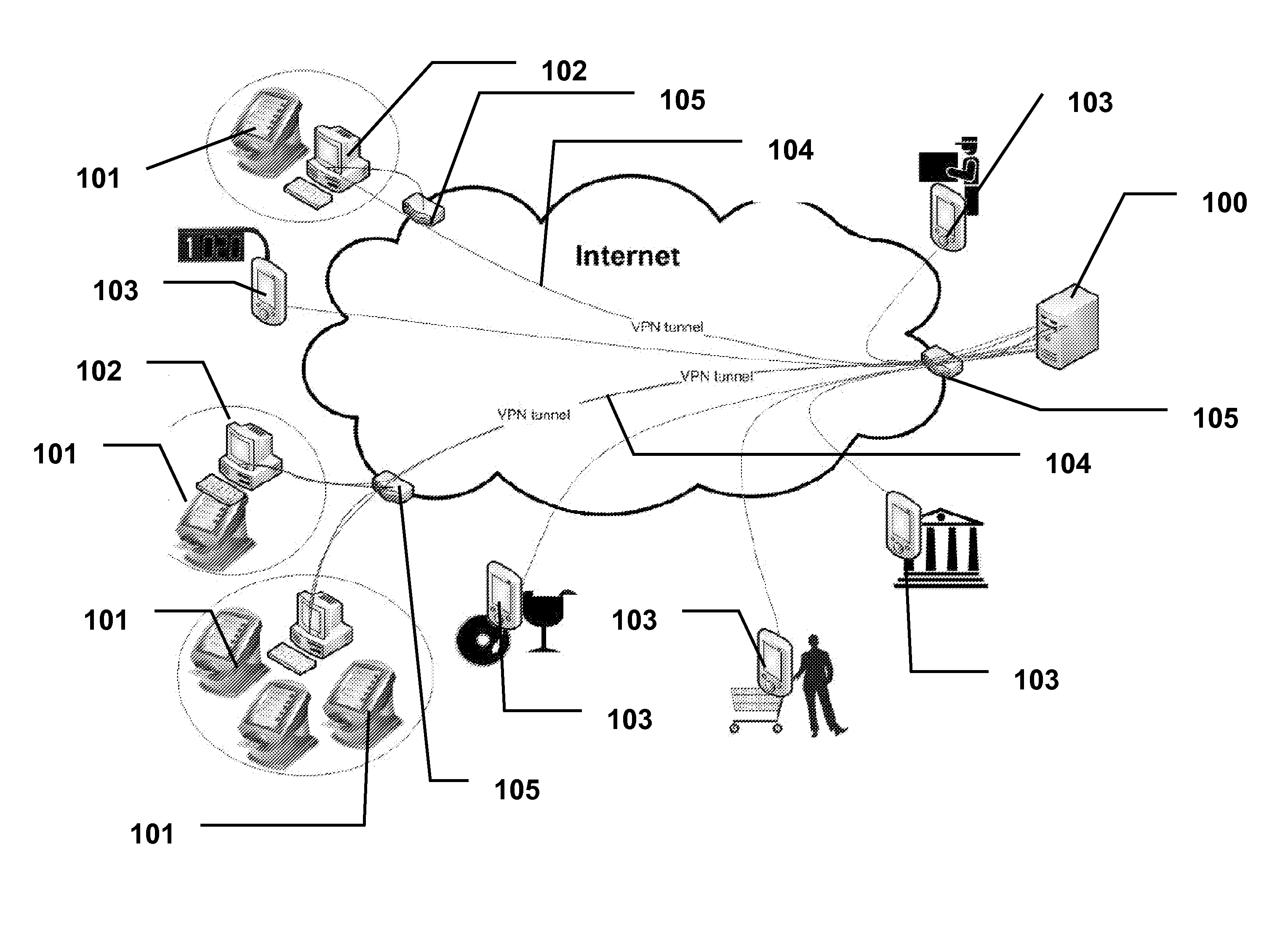

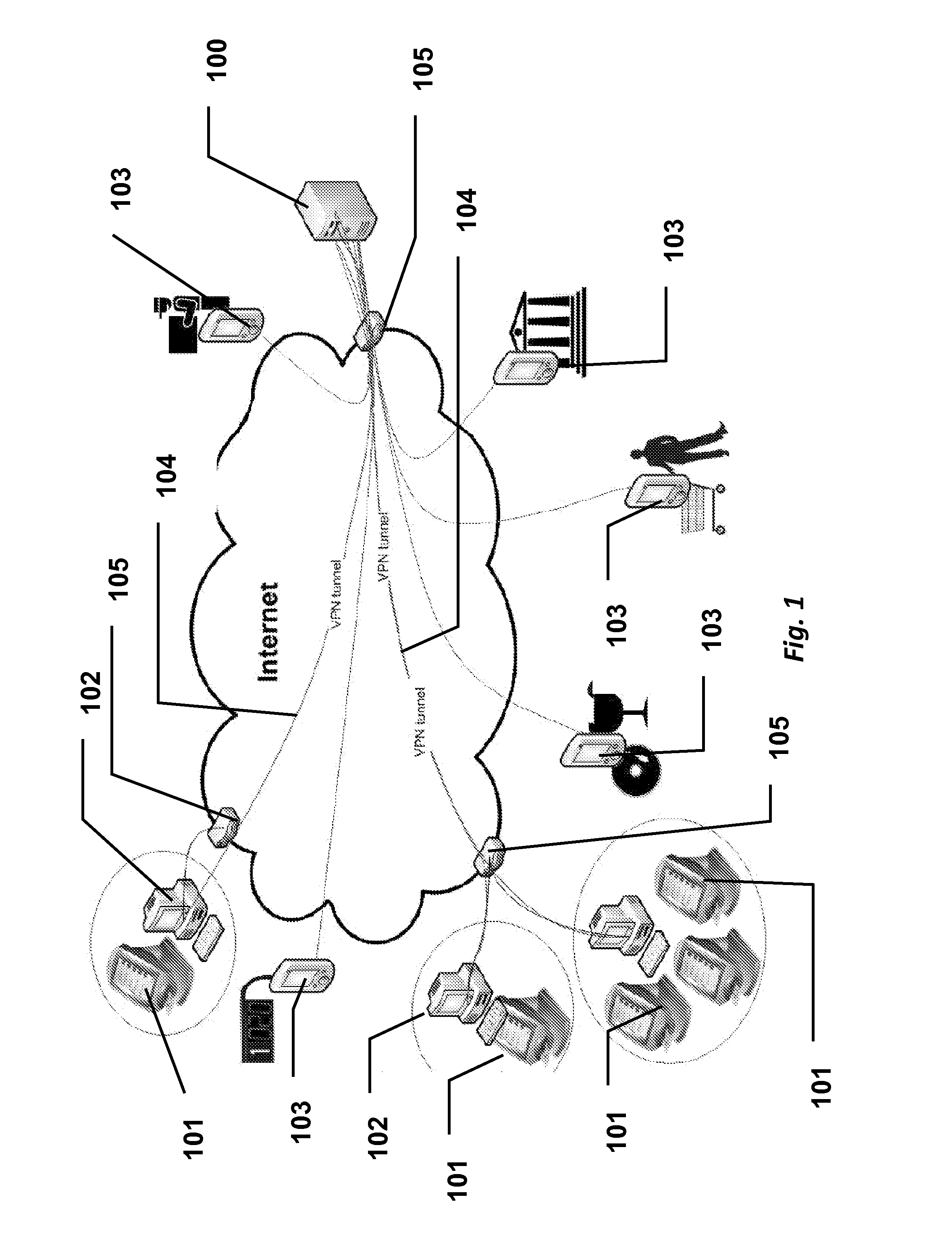

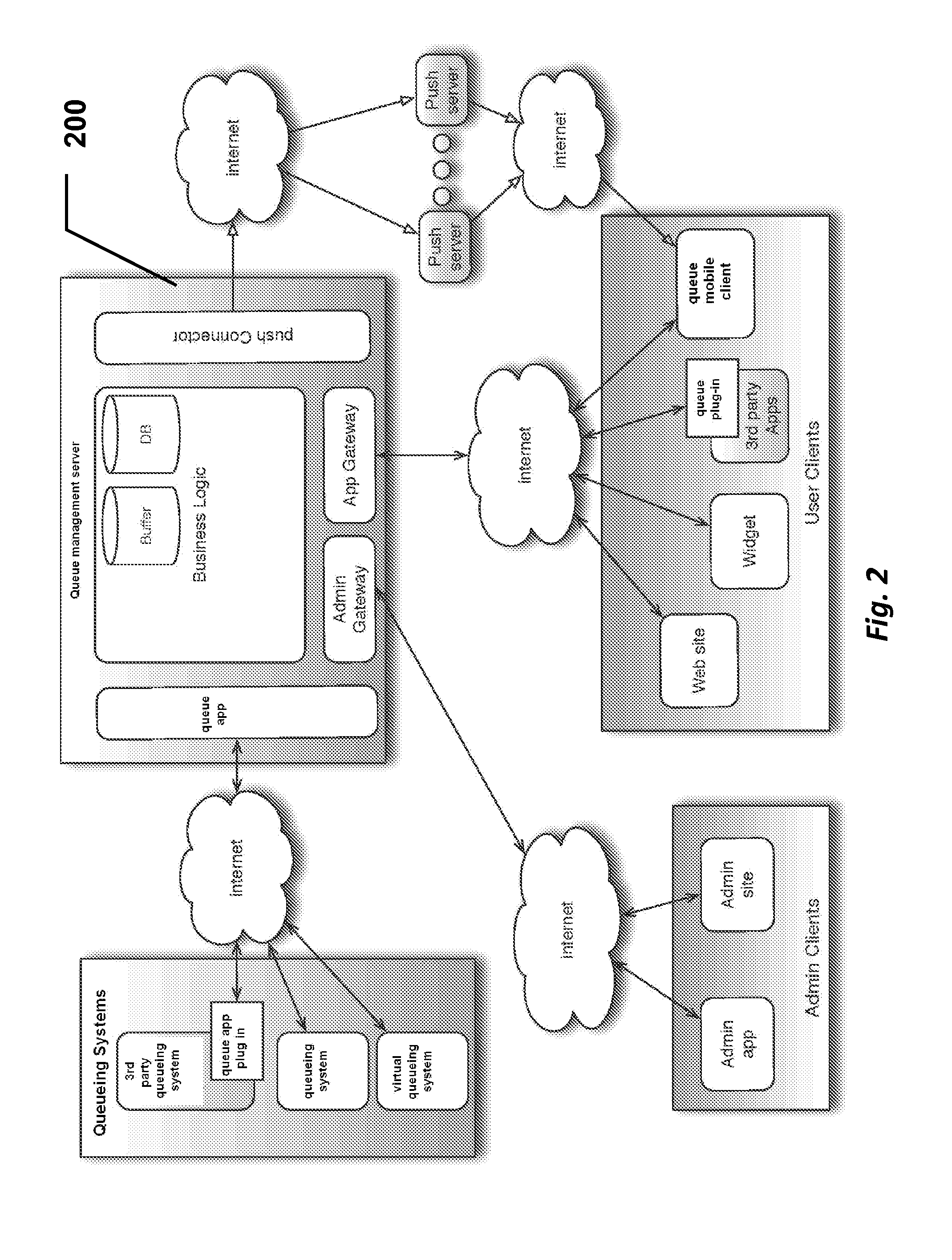

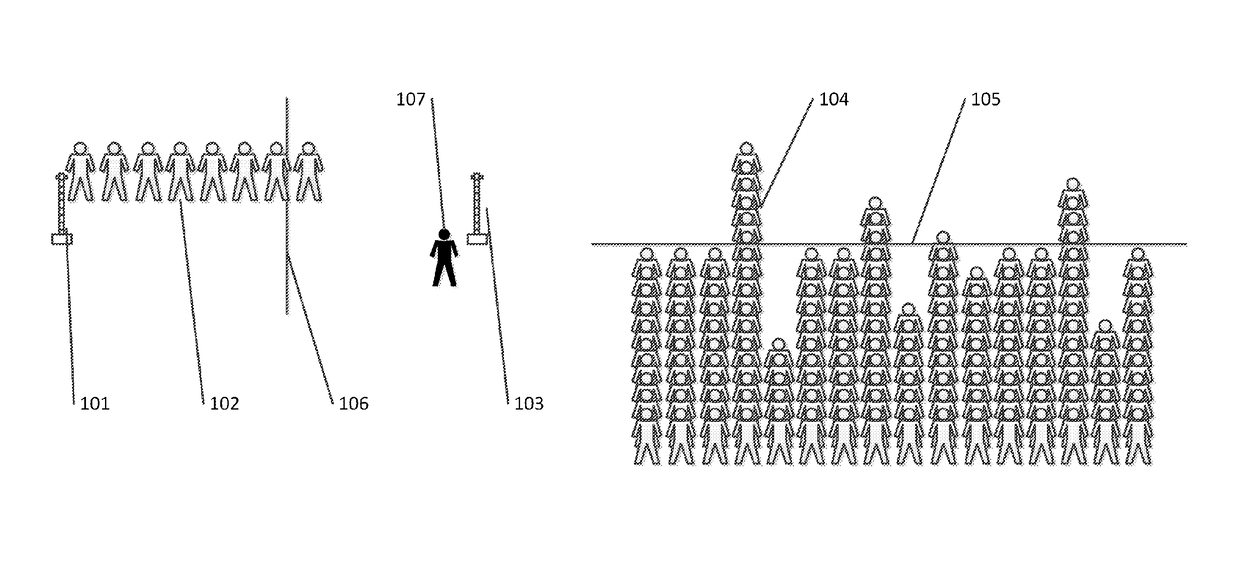

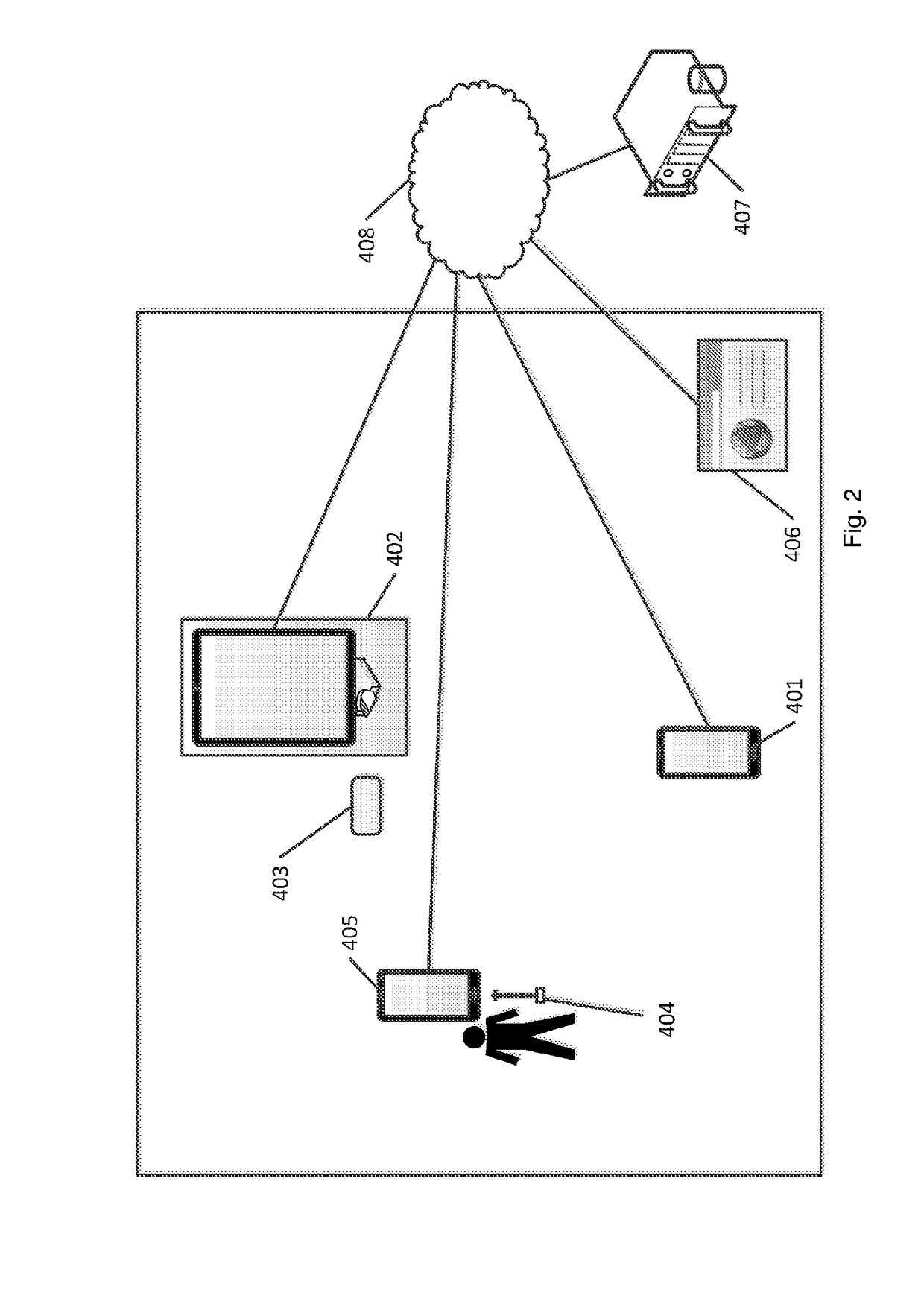

Queue remote management system and method

A queue remote management system and a related method are provided, allowing a user to remotely book, receive and manage a ticket for participating in a virtual queue, and finally to receive the call for approaching the counter and the corresponding physical queue, minimizing the time of physical presence at the counter before being provided with the expected service, with integration between conventional ticket machines, operating at different counters and at different entity locations, and a wireless virtual ticket system operated by any user through his personal client terminal, the system comprising: at least one queue management server (100) wherein a plurality of virtual queues are stored and managed, the virtual queues concerning a plurality of counters (102) of one or more entities, each counter (102) being connected to the queue management server (100) to exchange a predetermined information set concerning the call of queue tickets; a plurality of ticket machines (101) physically associated to said counters (102) and issuing physical queue tickets, each ticket machine (101) being connected to the queue management server (100) to exchange a predetermined information set concerning the issue of said physical queue tickets; a plurality of client terminals (103), allowing a user to obtain a virtual queue ticket related to one or more of said counters (102), each client terminal (103) comprising an identification code or an identification certificate allowing to establish a connection to the queue management server (100) to exchange a predetermined information set concerning the status of a user's queue based on both the physical and the virtual tickets, the counters (102), the ticket machines (101) and the client terminal (103) being connected to the at least one queue management server (100) through a communication network, wherein said ticket machines (101), said counters (102) and the at least one queue management server (100) communicate through a corresponding VPN tunnel (104) or through an encrypted web service established in said communication network, the ticket machines (101) and the counters (102) being linked through a dedicated IP address or domain.

Owner:QURAMI

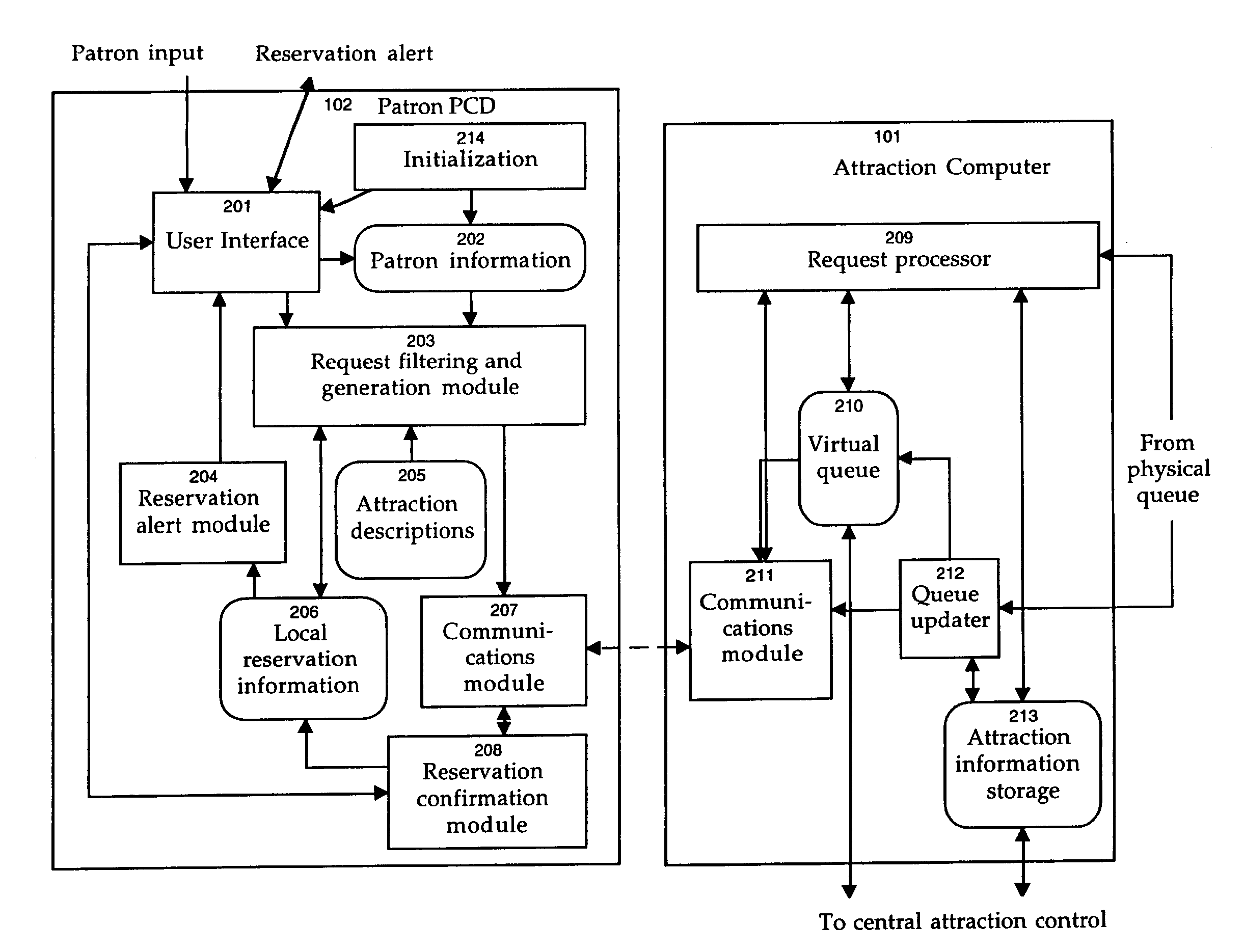

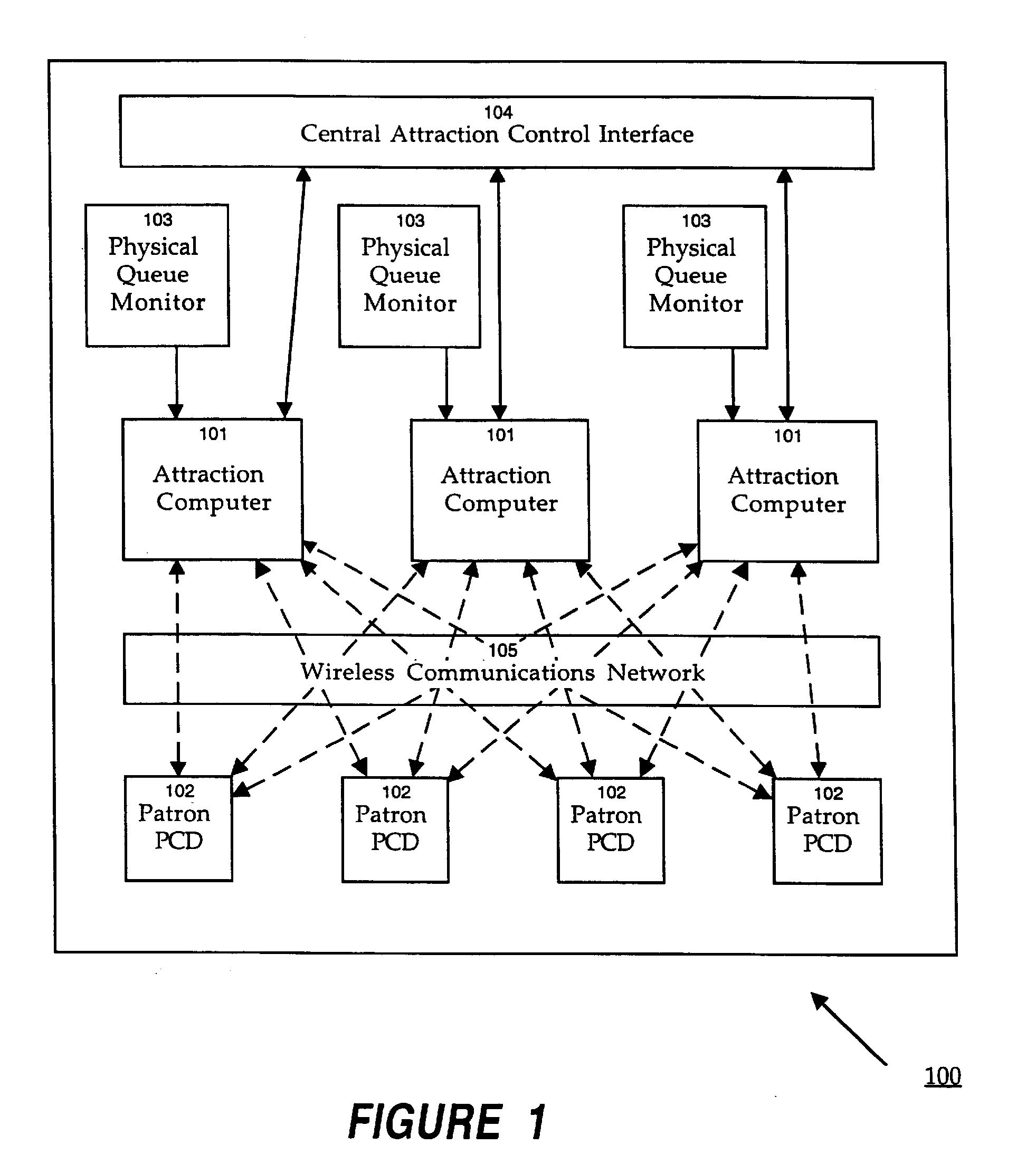

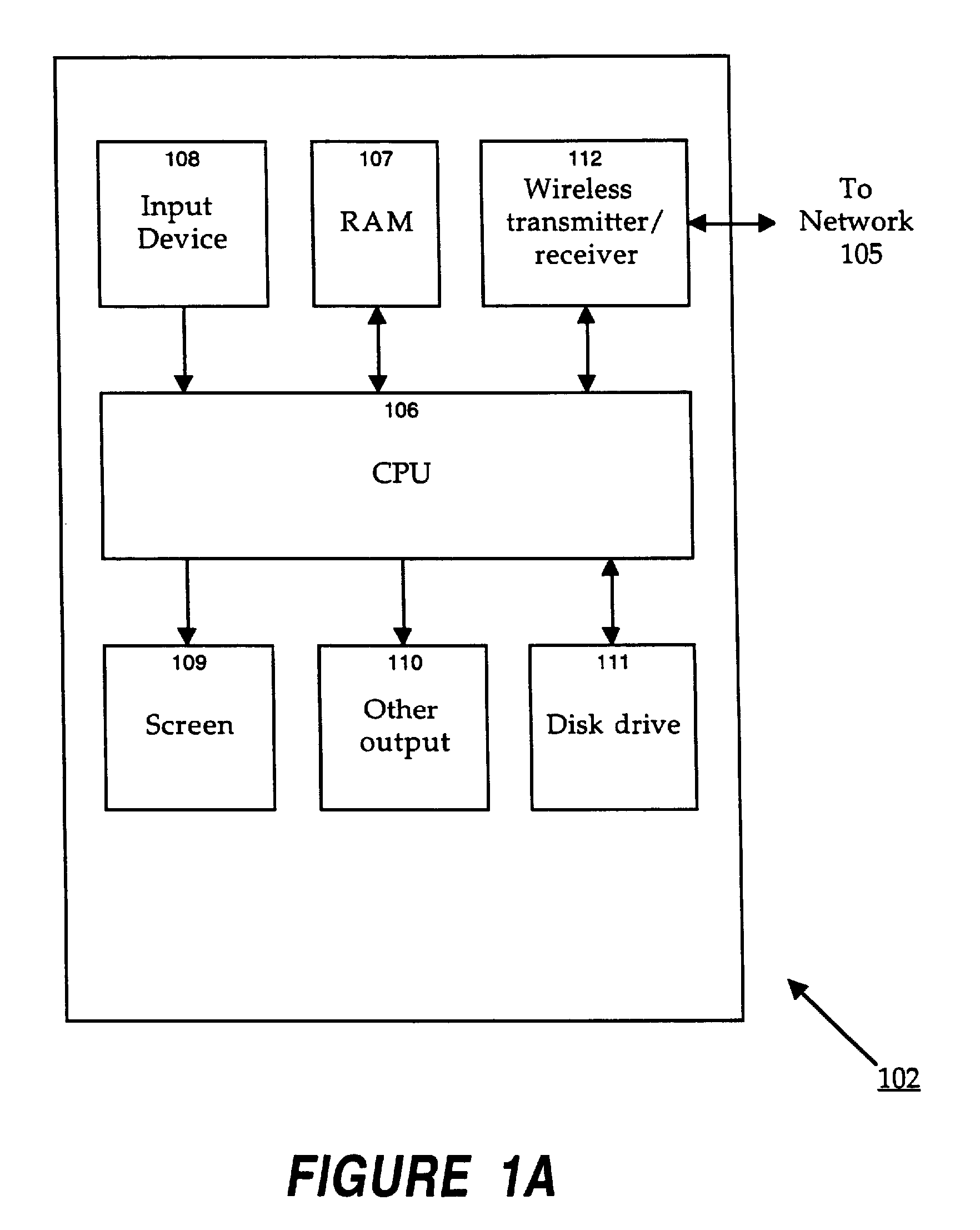

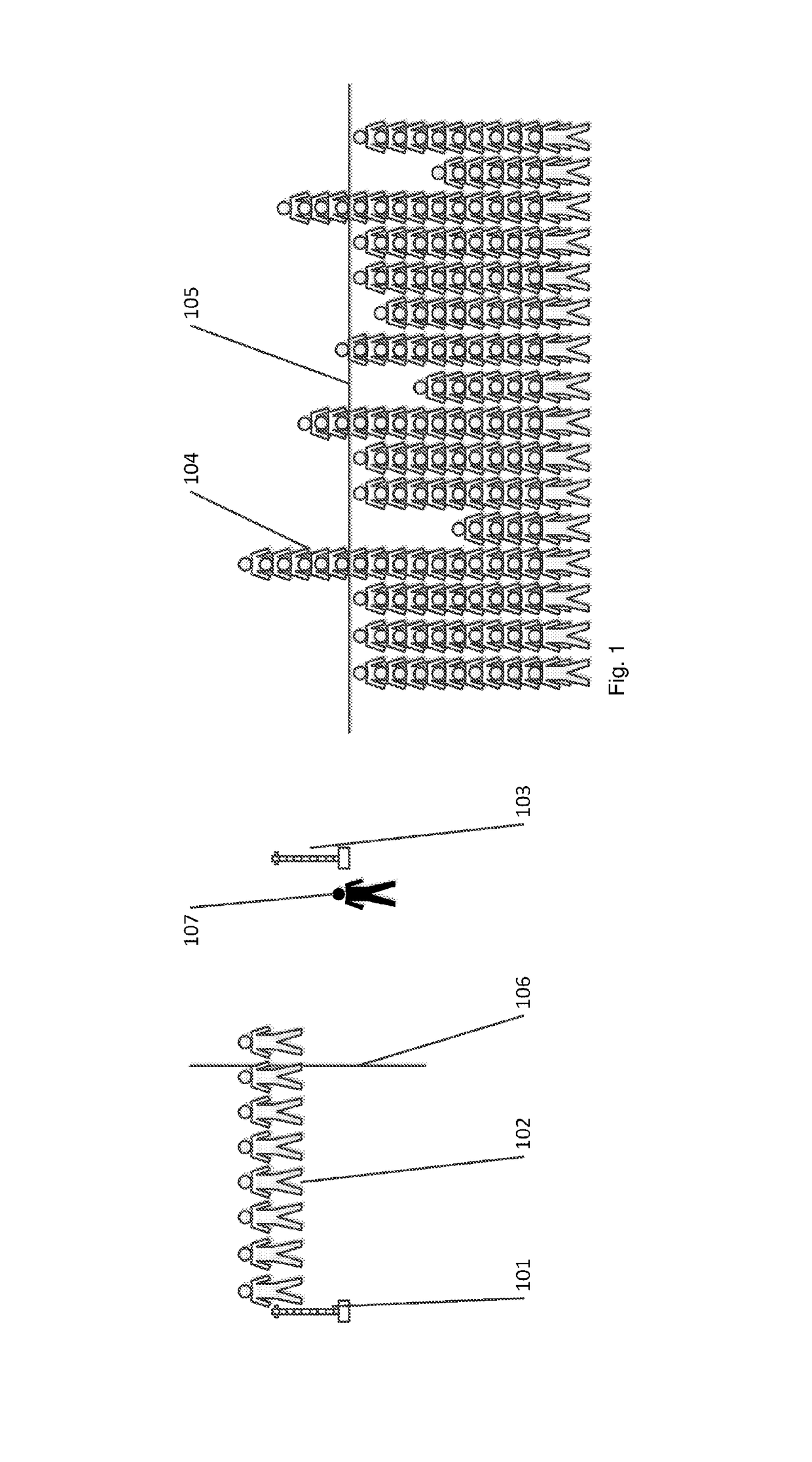

Assigning and Managing Patron Reservations for Distributed Services Using Wireless Personal Communication Devices

Inactive | US20090204449A1 | Facilitate communication | Reservations | Checking apparatus | Distributed services | Computer science

A system and method for assigning and managing patron reservations to one or more of a plurality of attractions receive reservation requests at personal communication devices (PCDs). Reservation requests are transmitted to a computer associated with the selected attraction, which determines a proposed reservation time based on information describing the attraction, the patron, previously-made reservations maintained in a virtual queue, and the current state of a physical queue associated with the attraction. Proposed reservation time is transmitted to the PCD for confirmation or rejection by the patron. Confirmed reservations are entered in the virtual queue. Patrons are alerted by the PCD when their reservation time is approaching.

Owner:LO Q

Virtual queuing support system and method

Active | US7430290B2 | Special service for subscribers | Data switching by path configuration | Supporting system | End user

A method and a Virtual Queuing Support System (VQSS) for optimizing end-user service for clients who are waiting for a service request to be answered and who are registered in various virtual queues of the VQSS. End-users register in a virtual queue of the VQSS, which monitors the status of the queues and the status of the service agents. When a parameter such as the number of users in a queue or the expected waiting time exceeds a pre-set threshold, the VQSS reassigns end-users from the problematic queue, and/or reassigns service agents from other queues to the problematic queue. The VQSS comprises a memory storing the virtual queues, and a processor for managing the virtual queues.

Owner:TELEFON AB LM ERICSSON (PUBL)

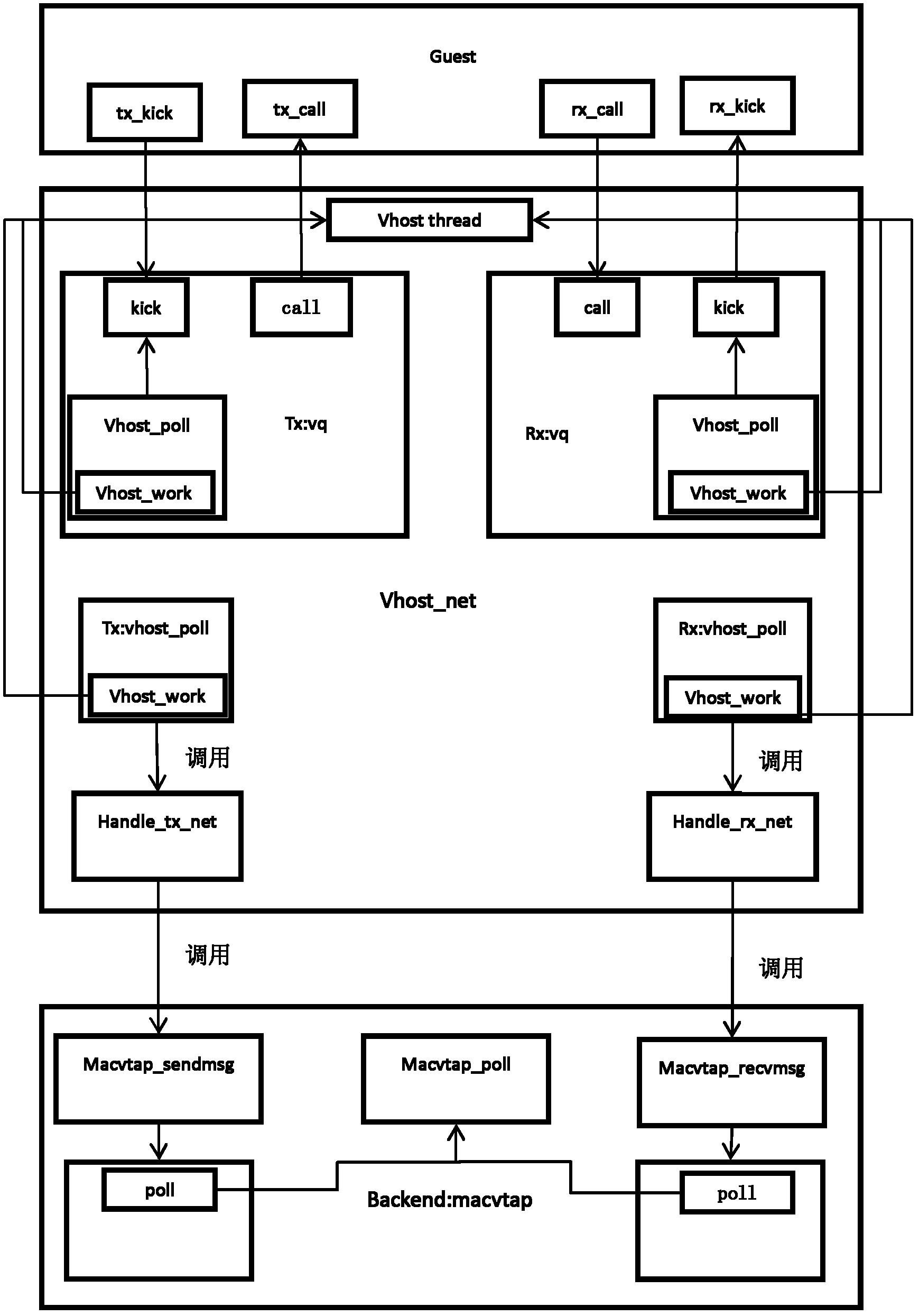

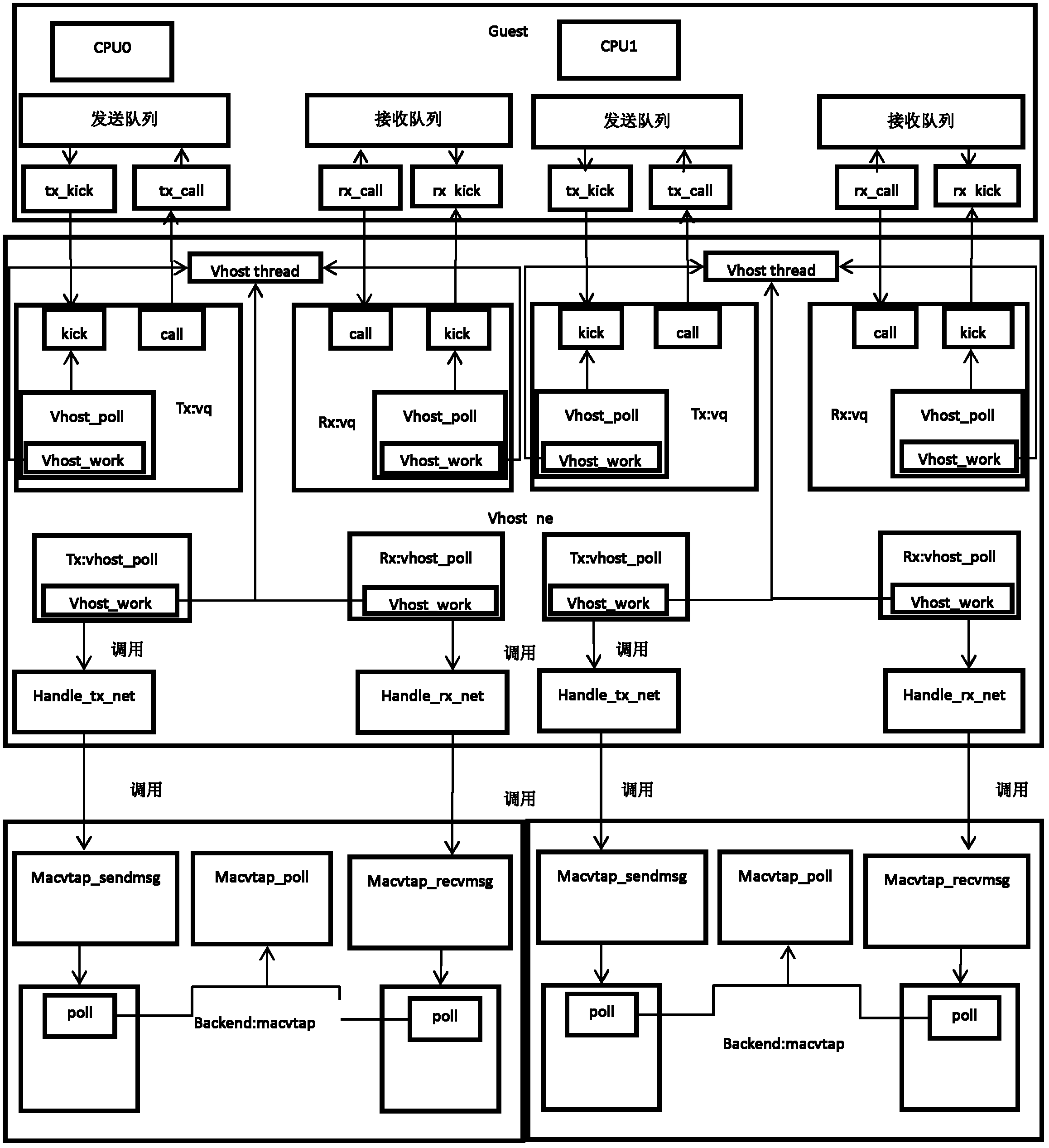

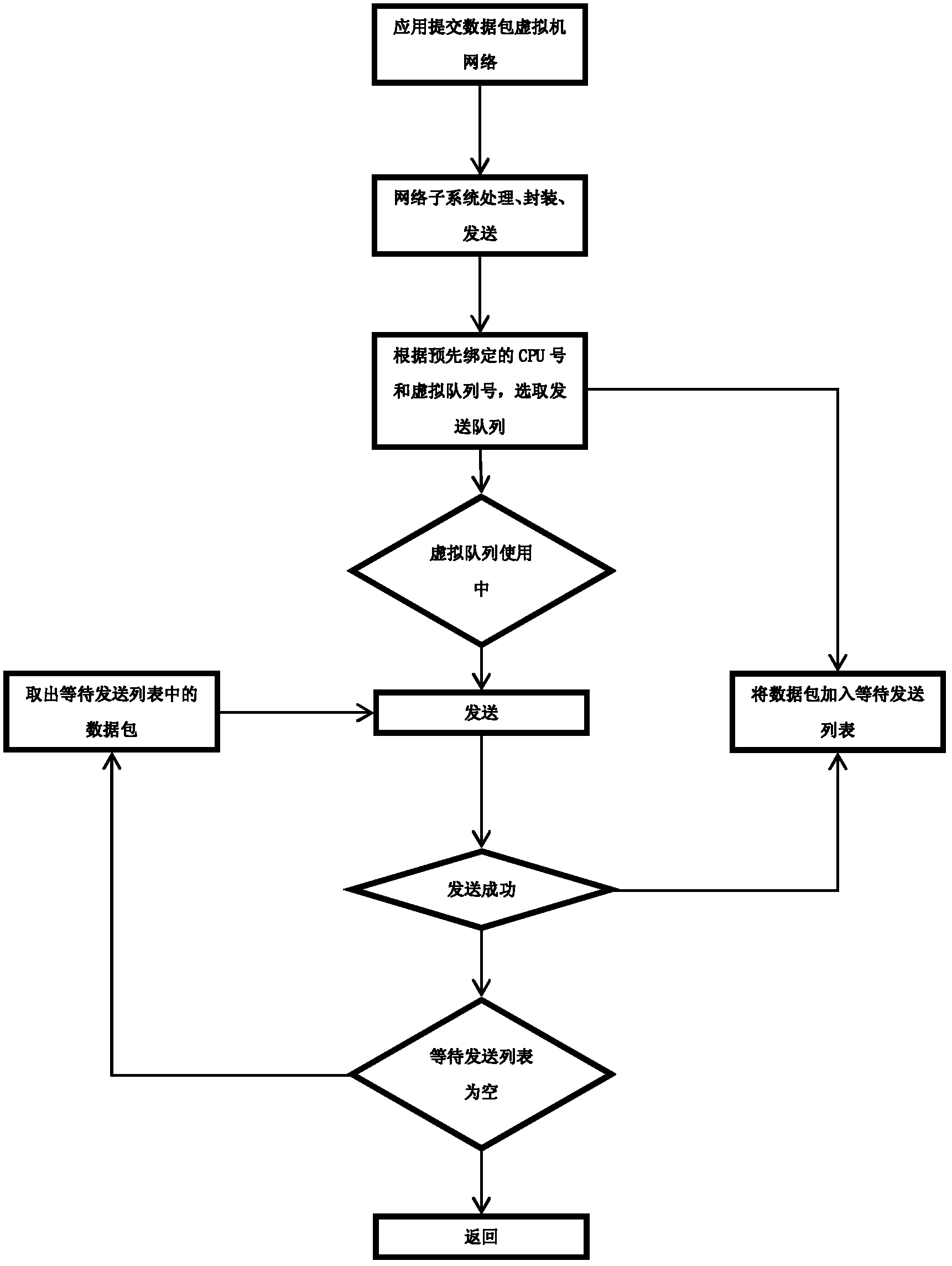

Implementing method for optimizing network performance of virtual machine by using multiqueue technology

Active | CN102591715A | Improve network transmission performance | Reliable optimization method | Multiprogramming arrangements | Software simulation/interpretation/emulation | System call | Data traffic

The invention relates to a method for optimizing the network performance of a virtual machine by using multiqueue technology, which comprises the following three steps: step 1, modifying the network initialization part of QEMU to add support for multiqueue; step 2, modifying vhost so that QEMU can use multiqueue and so that a vhost-net multiqueue network card is supported, which includes changing data transmission to use one thread per queue and modifying the related system calls; and step 3, modifying the network-related part of the vhost module, i.e. the vhost-net multiqueue network card, so that the virtual network card supports multiqueue transmission. According to the invention, by designing and implementing a plurality of virtual queues from the virtual machine to the host, the aim of increasing the network data traffic and the throughput of the virtual machine is fulfilled. The method has ingenious, scientific and reasonable design and has high using value and wide application prospect in the technical field of computers.

Owner:中科育成(北京)科技服务有限公司

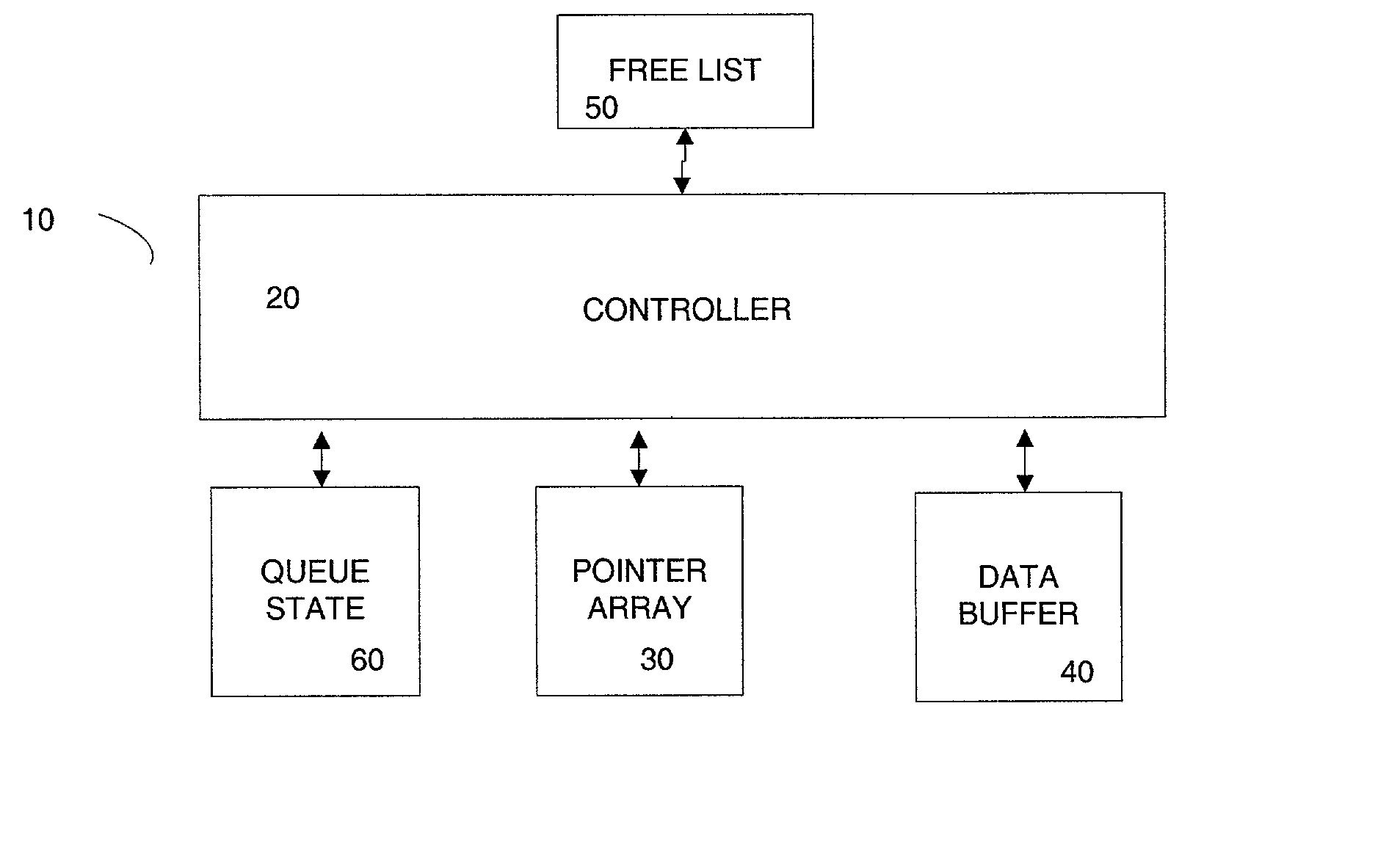

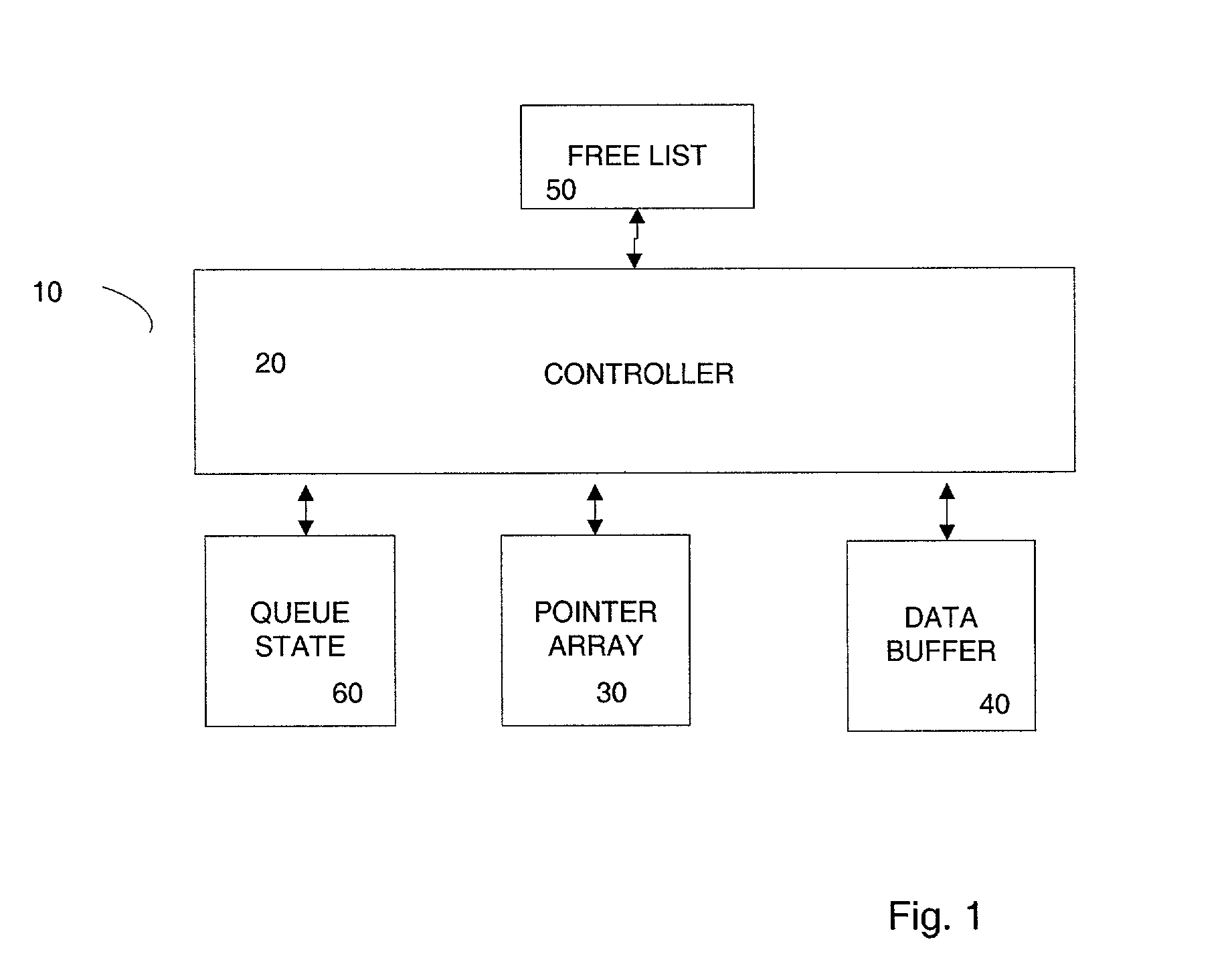

Shared resource virtual queues

Inactive | US20030145012A1 | Digital data information retrieval | Digital data processing details | Array data structure | Computer science

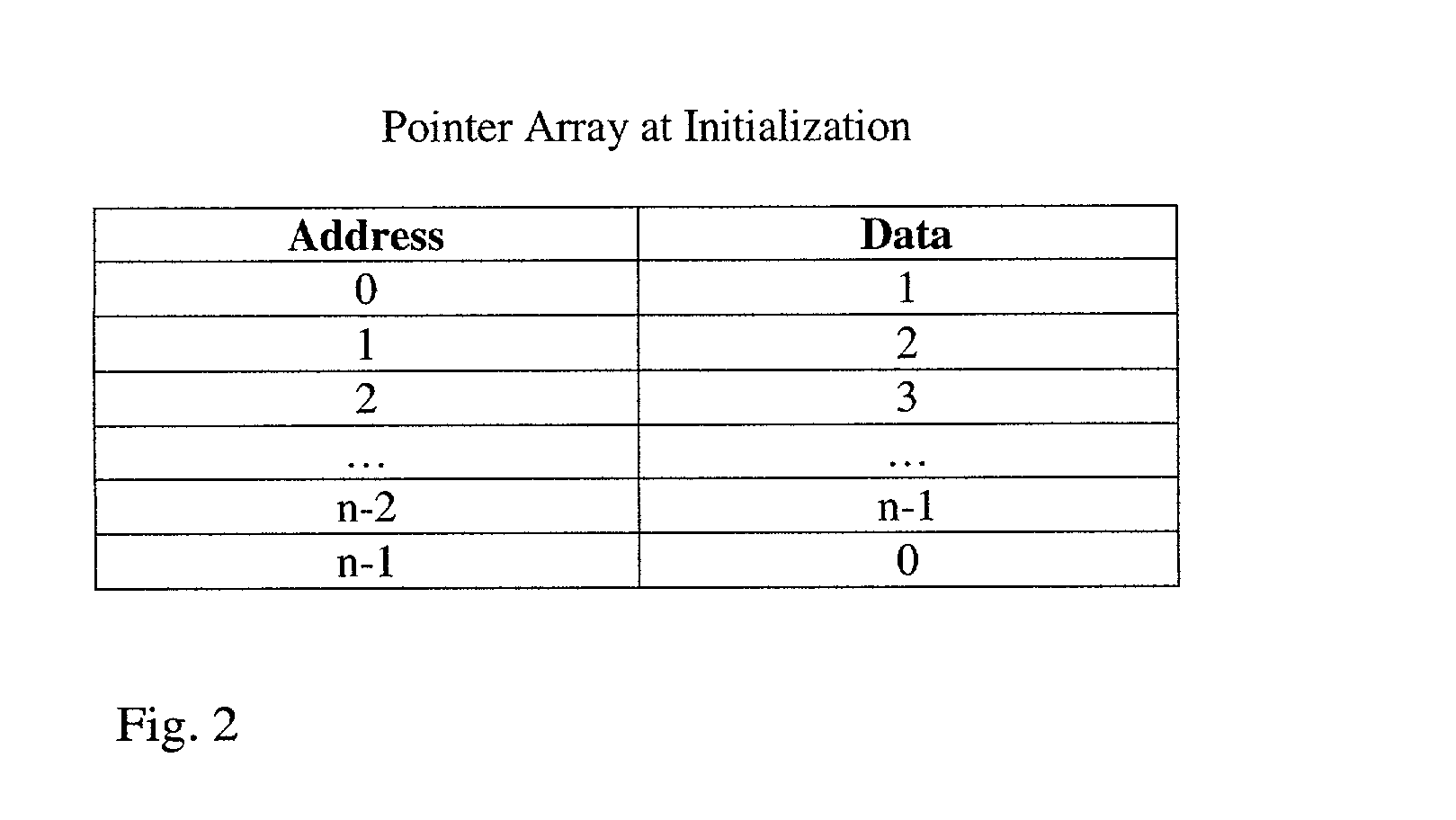

A method for sharing buffers among a plurality of queues. A pointer array contains a linked list of free buffers. Buffers are allocated to a virtual queue by delinking a pointer from the free buffer linked list and adding the pointer to a linked list associated with the queue. When a buffer is no longer needed, the process is reversed.

Owner:SUN MICROSYSTEMS INC
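
The free-list scheme in the shared-buffer abstract above can be sketched in Python as follows. This is an illustration under assumptions rather than the patented implementation: the class name `SharedBufferPool`, the `NIL` sentinel, and the per-queue head and tail indices are all hypothetical. A single `next_ptr` array holds both the free list and every queue's list, so allocating a buffer only relinks indices.

```python
NIL = -1  # sentinel index marking the end of a linked list


class SharedBufferPool:
    """Fixed set of buffers shared by several queues via one pointer array."""

    def __init__(self, num_buffers, num_queues):
        # next_ptr[i] is the buffer that follows buffer i in whichever
        # list (free list or a queue's list) currently holds buffer i.
        self.next_ptr = [i + 1 for i in range(num_buffers)]
        if num_buffers:
            self.next_ptr[-1] = NIL
        self.free_head = 0 if num_buffers else NIL
        self.head = [NIL] * num_queues
        self.tail = [NIL] * num_queues
        self.data = [None] * num_buffers

    def enqueue(self, q, item):
        """Delink a buffer from the free list and append it to queue q."""
        if self.free_head == NIL:
            raise MemoryError("no free buffers")
        buf = self.free_head
        self.free_head = self.next_ptr[buf]
        self.next_ptr[buf] = NIL
        self.data[buf] = item
        if self.head[q] == NIL:
            self.head[q] = buf
        else:
            self.next_ptr[self.tail[q]] = buf
        self.tail[q] = buf
        return buf

    def dequeue(self, q):
        """Remove the oldest buffer of queue q and return it to the free list."""
        buf = self.head[q]
        if buf == NIL:
            raise IndexError("queue is empty")
        self.head[q] = self.next_ptr[buf]
        if self.head[q] == NIL:
            self.tail[q] = NIL
        item, self.data[buf] = self.data[buf], None
        self.next_ptr[buf] = self.free_head
        self.free_head = buf
        return item


pool = SharedBufferPool(num_buffers=3, num_queues=2)
pool.enqueue(0, "a")
pool.enqueue(1, "b")
pool.enqueue(0, "c")
first = pool.dequeue(0)
```

Because no queue owns a fixed slice of the buffers, a busy queue can use capacity that idle queues leave unused, up to the size of the whole pool.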

Method and apparatus for improving performance in a network using a virtual queue and a switched poisson process traffic model

Inactive | US20080279207A1 | Error prevention | Frequency-division multiplex details | Packet arrival | Traffic model

A method for improving network performance using a virtual queue is disclosed. The method includes measuring characteristics of a packet arrival process at a network element, establishing a virtual queue for packets arriving at the network element, and modeling the packet arrival process based on the measured characteristics and a computed performance of the virtual queue.

Owner:VERIZON PATENT & LICENSING INC
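
The abstract above does not state how the performance of the virtual queue is computed. One common approach, assumed here purely for illustration, is to track the backlog that the measured arrivals would build up in a queue drained at a fixed rate. The function name, the `(time, size)` input layout, and the constant `service_rate` are hypothetical, and fitting the switched Poisson process model itself is not shown.

```python
def virtual_queue_backlog(arrivals, service_rate):
    """Backlog of a virtual queue just after each measured packet arrival.

    arrivals:     list of (time_in_seconds, size_in_bytes), sorted by time.
    service_rate: assumed constant drain rate in bytes per second.
    """
    backlog = 0.0
    last_time = None
    history = []
    for time, size in arrivals:
        if last_time is not None:
            # Drain the queue for the interval since the previous arrival.
            drained = service_rate * (time - last_time)
            backlog = max(0.0, backlog - drained)
        backlog += size
        last_time = time
        history.append(backlog)
    return history


trace = [(0.0, 100), (1.0, 100), (1.5, 100), (5.0, 100)]
backlog = virtual_queue_backlog(trace, service_rate=50)
```

Statistics of this backlog series, such as its mean or how often it exceeds a limit, are the kind of computed performance that a traffic model could be tuned to reproduce.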

Queuing system

Active | US20170098337A1 | Easy to manage | Improve efficiency | Reservations | Checking apparatus | Data access control | Media access control

A technique for controlling access to one or more attractions is achieved using a number of access keys, each being issued to one or more users. An electronic queue management part manages a virtual queue in respect of each attraction and receives electronic requests for attraction access, each request relating to an access key and being for the users associated with it to access a particular attraction. Receipt of each request causes the respective users to be added to a corresponding virtual queue. A time at which each group of users reaches the front of the virtual queue and can access the attraction is determined. The users access the attractions by presenting an access key to an access control part, in communication with the electronic queue management part. Only a user presenting an access key at the correct time for accessing the attraction is allowed access to the attraction.

Owner:ACCESSO TECH GRP
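
The access-key flow in the queuing-system abstract above can be sketched for a single attraction as follows. The abstract does not specify how the ready time is calculated or how long a group may take to show up, so the constant `riders_per_minute` throughput and the `window_minutes` admission window are assumptions, as are all class and method names.

```python
class VirtualQueue:
    """Virtual queue for one attraction, keyed by access key (illustrative)."""

    def __init__(self, riders_per_minute, window_minutes=10):
        self.riders_per_minute = riders_per_minute
        self.window_minutes = window_minutes
        self.next_free = 0.0  # minute at which the next group can start
        self.slots = {}       # access key -> minute the group reaches the front

    def request(self, key, group_size, now):
        """Add the users behind an access key and return their ready minute."""
        ready = max(now, self.next_free)
        self.next_free = ready + group_size / self.riders_per_minute
        self.slots[key] = ready
        return ready

    def admit(self, key, now):
        """Allow access only if the key is presented inside its time window."""
        ready = self.slots.get(key)
        if ready is None or not (ready <= now <= ready + self.window_minutes):
            return False
        del self.slots[key]  # a slot can be used once
        return True


ride = VirtualQueue(riders_per_minute=2, window_minutes=10)
ride.request("K1", group_size=4, now=0)
k2_ready = ride.request("K2", group_size=6, now=1)
```

In this sketch `request` plays the role of the electronic queue management part and `admit` that of the access control part; a deployed system would keep one such queue per attraction.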

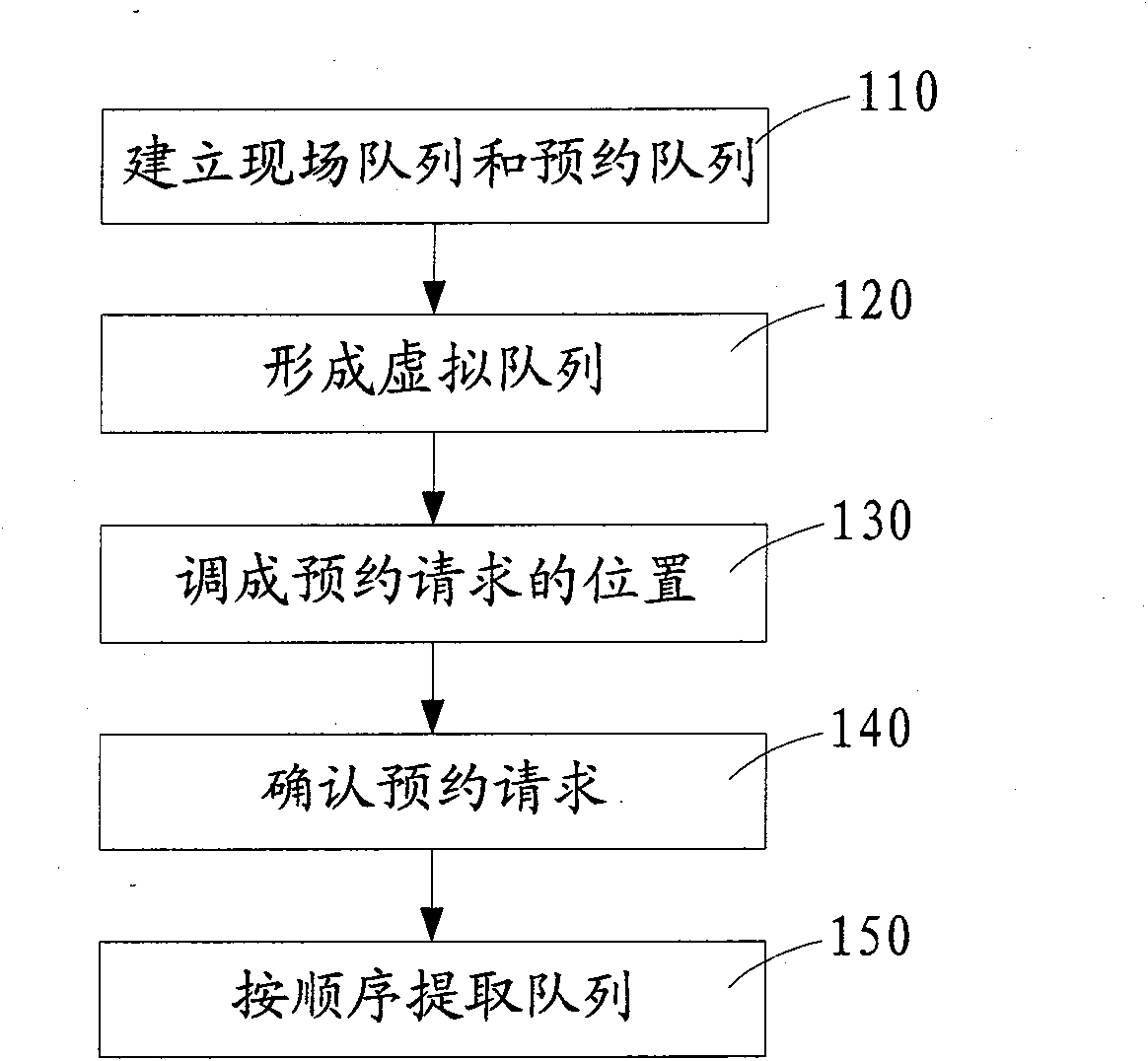

Client service reservation method

Inactive | CN101807264A | Guaranteed accuracy | Extended waiting time | Checking apparatus | Appointment time | The Internet

The invention relates to a reservation method, in particular a client service reservation method for service providers such as banks, hospitals and government offices. The method comprises the following steps: establishing a field (on-site) queue and a reservation queue; inserting the reservation requests from the reservation queue into the field queue according to reservation time to form a virtual queue; adjusting the position of each reservation request in the virtual queue according to the processing speed of the virtual queue; moving a reservation request from the reservation queue into the field queue once the client confirms on site, forming an actual queue; and serving the actual queue in order. With this method, the client reserves a service time before going to the bank, through channels such as mobile phone short message, the Internet or telephone voice service; at the reserved time, the client prints a queuing number on site and can be served with that number. The method therefore avoids both long waits for the client and the situation in which the client's number has already been passed over when the client arrives.

Owner:深圳市华信智能科技股份有限公司
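
The merge of the field queue and the reservation queue described in the abstract above can be sketched as follows. The abstract does not give the positioning rule, so this sketch assumes each position in the virtual queue takes a fixed `minutes_per_client` and that a reservation takes the first position whose estimated start is at or after its reserved time. The function and parameter names are hypothetical, and the on-site confirmation step is not modeled.

```python
from collections import deque


def build_virtual_queue(field_queue, reservations, now, minutes_per_client):
    """Merge walk-in clients and reservations into one virtual queue.

    field_queue:        walk-in client ids in arrival order.
    reservations:       list of (client_id, reserved_minute) pairs.
    now:                current minute.
    minutes_per_client: assumed processing speed of the queue.
    """
    walk_ins = deque(field_queue)
    pending = deque(sorted(reservations, key=lambda r: r[1]))
    virtual = []
    while walk_ins or pending:
        # Estimated minute at which the next position will be served.
        slot_time = now + len(virtual) * minutes_per_client
        if pending and (pending[0][1] <= slot_time or not walk_ins):
            virtual.append(pending.popleft()[0])
        else:
            virtual.append(walk_ins.popleft())
    return virtual


walk_ins = ["w1", "w2", "w3"]
booked = [("r1", 10), ("r2", 5)]
slow = build_virtual_queue(walk_ins, booked, now=0, minutes_per_client=5)
fast = build_virtual_queue(walk_ins, booked, now=0, minutes_per_client=2)
```

Rebuilding the queue with an updated `minutes_per_client` moves the reservations forward or backward, which mirrors the adjustment step in the abstract: at the slower speed the reservations land ahead of two walk-ins, at the faster speed all walk-ins are served first.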
