Cache management method and device

A cache management method and device, applied in digital transmission systems, electrical components, transmission systems, etc. It addresses the problems of cache space going unused and cache space being wasted, making effective use of the cache space, improving utilization, and saving management space.



Embodiment Construction

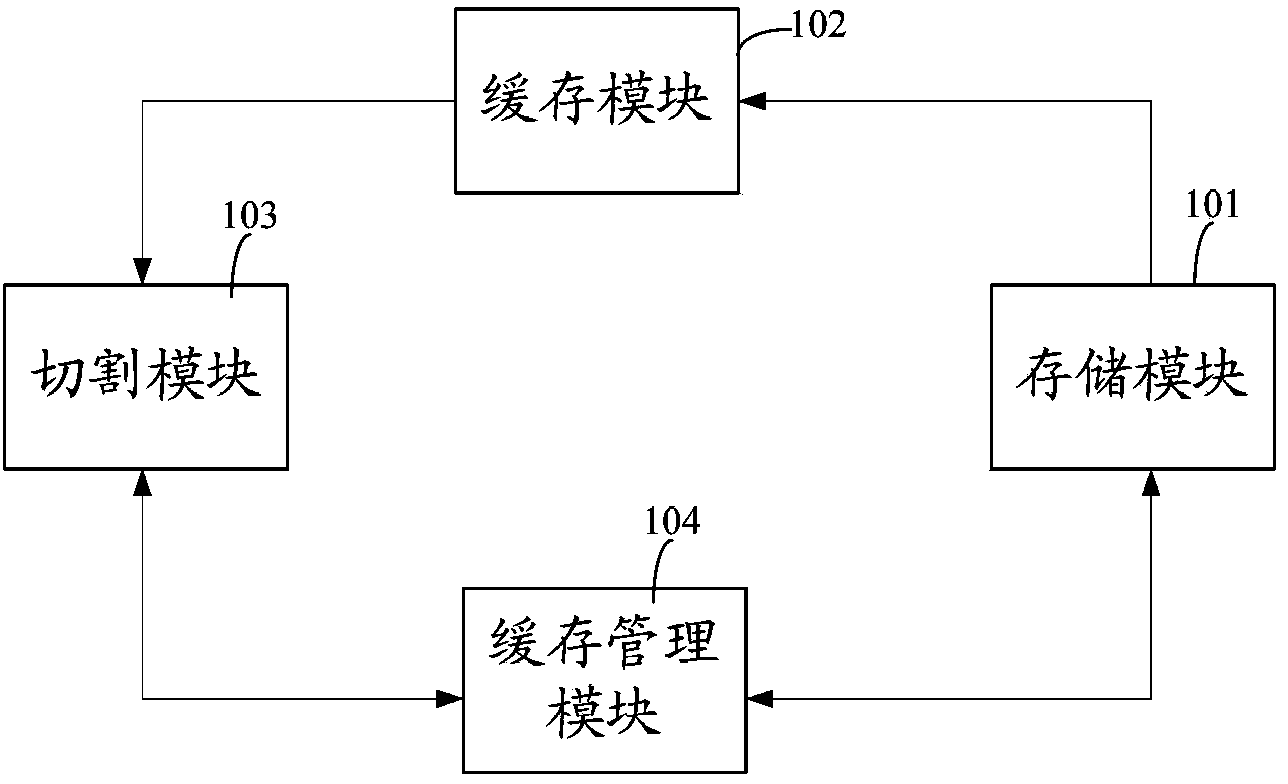

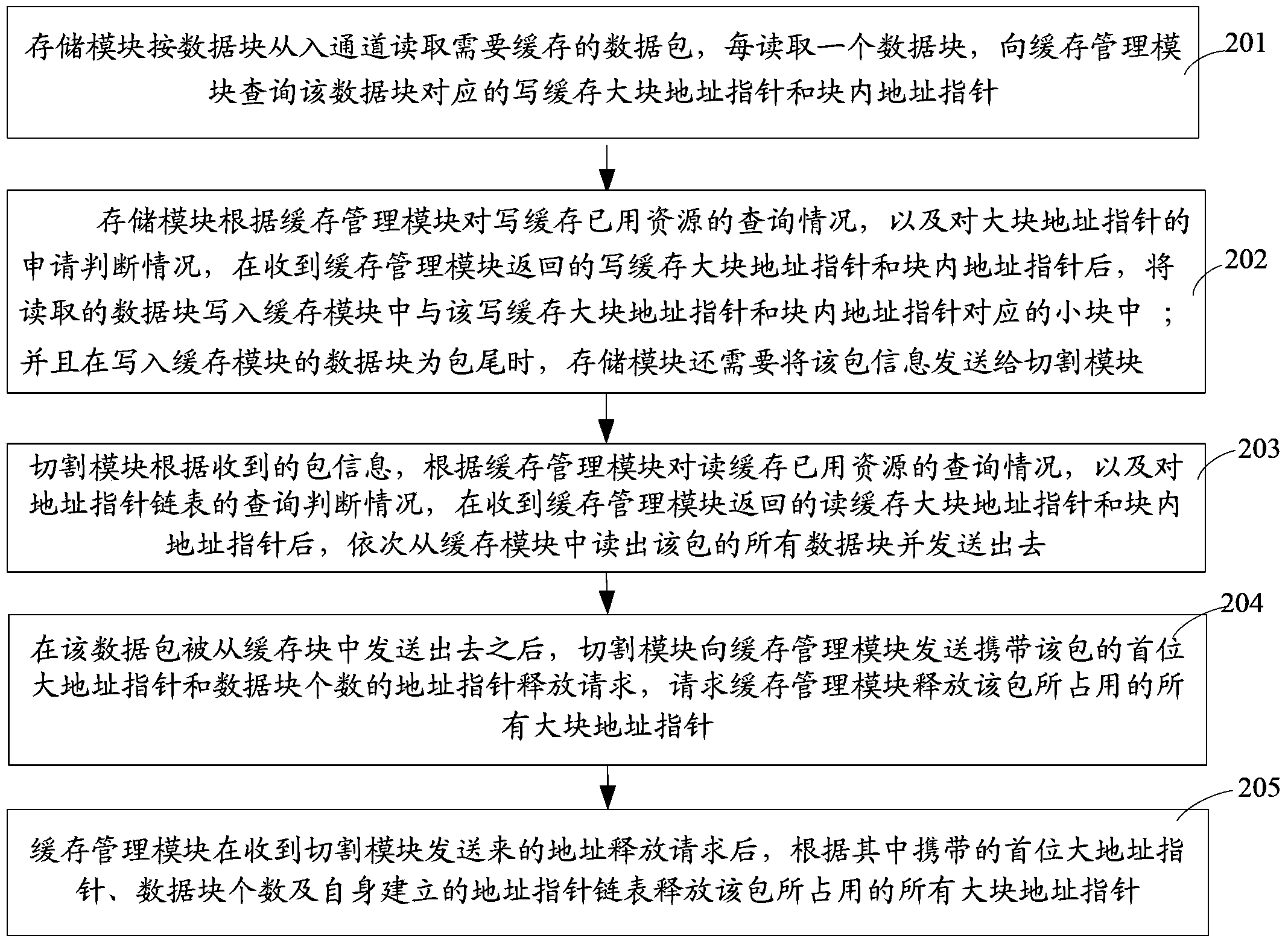

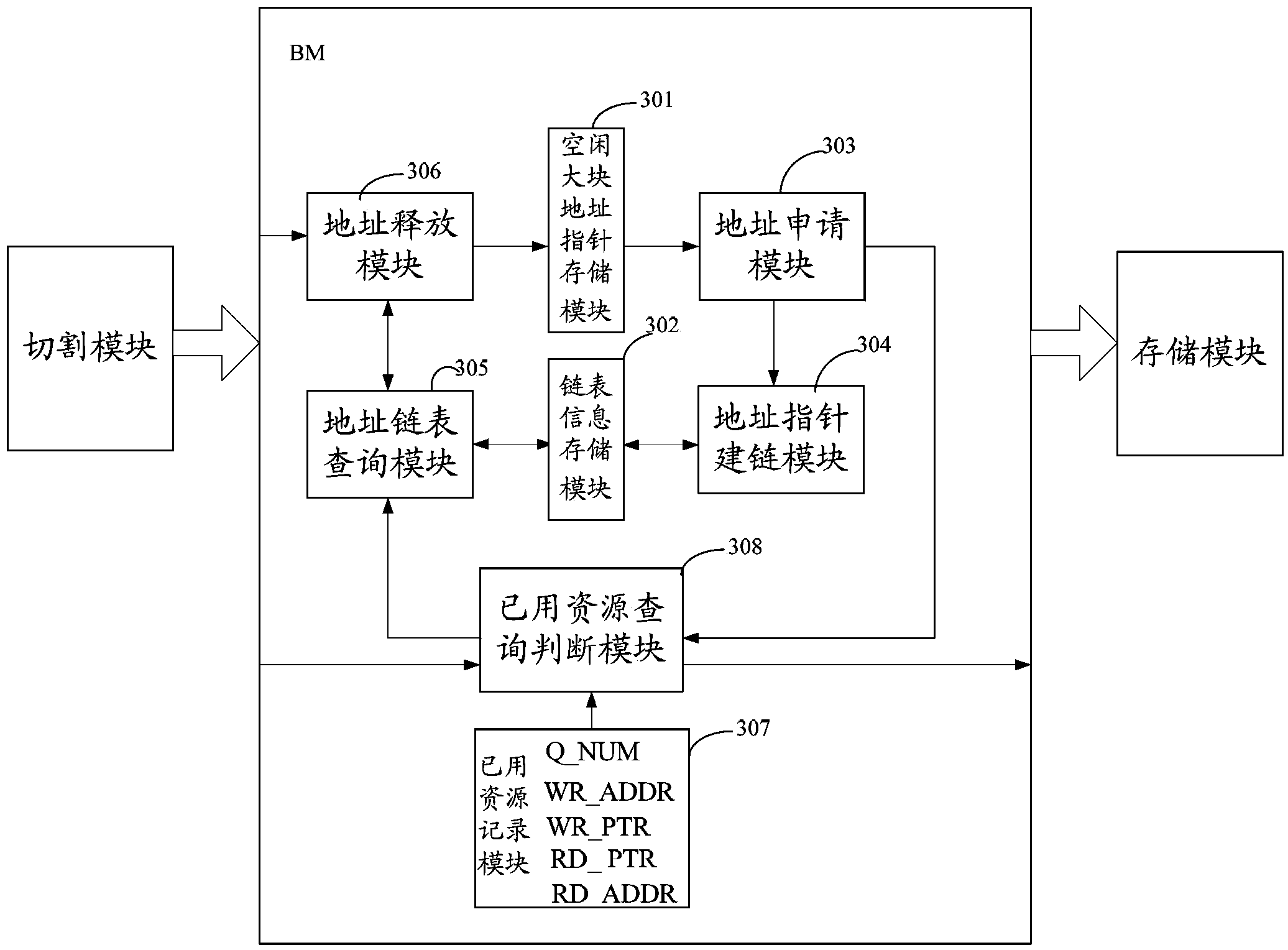

[0021] The core idea of the embodiments of the present invention is as follows: a used-resource record module and a used-resource query/judgment module are added to the cache management module, and data blocks are addressed through a two-level address pointer. When a data block is written, it is written into free small blocks one by one according to the write cache-block (large-block) address pointer and the intra-block address pointer; when a data block is read, it is read according to the read cache-block address pointer and the intra-block address pointer.
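The two-level addressing described in [0021] can be illustrated with a minimal Python sketch. It rests on several assumptions the excerpt does not state: the used-resource record is a table of one "in use" flag per small block, the query/judgment step is a linear scan from the write pointer for the next free small block, a packet is split into small-block-sized chunks, and the reader is handed the list of (cache block, intra-block) addresses recorded at write time. The names `TwoLevelCache`, `Addr`, `write`, and `read` are hypothetical and do not come from the patent.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Addr:
    """Hypothetical two-level address: cache (large) block + intra-block (small block) index."""
    block: int
    offset: int


class TwoLevelCache:
    """Sketch of a cache split into large cache blocks, each split into small blocks."""

    def __init__(self, num_blocks: int, smalls_per_block: int, small_size: int) -> None:
        self.num_blocks = num_blocks
        self.smalls_per_block = smalls_per_block
        self.small_size = small_size
        # Used-resource record (assumed form): one "in use" flag per small block.
        self.used = [[False] * smalls_per_block for _ in range(num_blocks)]
        self.data = [[b""] * smalls_per_block for _ in range(num_blocks)]
        # Write pointer = (cache-block address pointer, intra-block address pointer).
        self.write_ptr = Addr(0, 0)

    def _advance(self, a: Addr) -> Addr:
        # Step to the next small block; at the end of a cache block, move to the next one (wrapping).
        if a.offset + 1 < self.smalls_per_block:
            return Addr(a.block, a.offset + 1)
        return Addr((a.block + 1) % self.num_blocks, 0)

    def _next_free(self) -> Addr:
        # Used-resource query/judgment (assumed): scan from the write pointer for a free small block.
        a = self.write_ptr
        for _ in range(self.num_blocks * self.smalls_per_block):
            if not self.used[a.block][a.offset]:
                return a
            a = self._advance(a)
        raise MemoryError("no free small block")

    def write(self, packet: bytes) -> list[Addr]:
        if not packet:
            raise ValueError("empty packet")
        size = self.small_size
        chunks = [packet[i:i + size] for i in range(0, len(packet), size)]
        free = sum(not flag for row in self.used for flag in row)
        if free < len(chunks):
            raise MemoryError("not enough free small blocks")
        addrs = []
        for chunk in chunks:
            a = self._next_free()
            self.data[a.block][a.offset] = chunk
            self.used[a.block][a.offset] = True  # record the small block as used
            addrs.append(a)
            self.write_ptr = self._advance(a)
        return addrs

    def read(self, addrs: list[Addr]) -> bytes:
        # Follow the recorded two-level addresses, then release those small blocks.
        packet = b"".join(self.data[a.block][a.offset] for a in addrs)
        for a in addrs:
            self.used[a.block][a.offset] = False
            self.data[a.block][a.offset] = b""
        return packet
```

With two cache blocks of four 4-byte small blocks each, a 12-byte packet lands in small blocks (0, 0) through (0, 2). Once that packet is read, its small blocks are marked free in the record, and the write pointer reuses them after wrapping around, which is the kind of space reuse the abstract attributes to tracking used resources.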

[0022] The sizes of the cache blocks and small blocks can be chosen according to actual needs, but the length of a cache block should not be smaller than the maximum packet length. For example, if the maximum packet length is 16 Kbytes and the entire cache space is 8 Gbit, the cache-block granularity of the first-level division can be 32 Kbytes, and the small-block granularity of the se...
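As a worked check of the sizing in [0022], assume binary prefixes (8 Gbit = 2^33 bits = 2^30 bytes, and 32 Kbytes = 2^15 bytes). The first-level division then yields 2^30 / 2^15 = 32,768 cache blocks, so a 15-bit cache-block address pointer is enough. The small-block granularity is cut off in the excerpt, so the sketch below takes it as a parameter; the 2 Kbyte value used here is purely hypothetical, as are the names `pointer_layout` and `Layout`.

```python
from typing import NamedTuple


class Layout(NamedTuple):
    num_cache_blocks: int
    smalls_per_block: int
    block_ptr_bits: int   # width of the cache-block address pointer
    intra_ptr_bits: int   # width of the intra-block address pointer


def pointer_layout(total_bits: int, cache_block_bytes: int, small_block_bytes: int) -> Layout:
    """Derive block counts and pointer widths for a two-level cache division (illustrative)."""
    if total_bits % 8:
        raise ValueError("total size must be a whole number of bytes")
    total_bytes = total_bits // 8
    if total_bytes % cache_block_bytes or cache_block_bytes % small_block_bytes:
        raise ValueError("sizes must divide evenly")
    num_blocks = total_bytes // cache_block_bytes
    smalls = cache_block_bytes // small_block_bytes
    # Bits needed to index 0..n-1, e.g. (32768 - 1).bit_length() == 15.
    return Layout(num_blocks, smalls, (num_blocks - 1).bit_length(), (smalls - 1).bit_length())


KIB, GIBIT = 1024, 2**30
# From [0022]: 8 Gbit cache, 32 Kbyte cache blocks. The 2 Kbyte small block is HYPOTHETICAL.
example = pointer_layout(8 * GIBIT, 32 * KIB, 2 * KIB)
```

Under these assumptions each cache block holds 16 small blocks addressed by a 4-bit intra-block pointer. Halving the small-block size doubles the number of small blocks per cache block and adds one bit to the intra-block pointer: finer granularity wastes less space in a packet's last small block but enlarges the used-resource record, a trade-off the excerpt does not quantify.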
