Character input device and method based on gaze tracking and speech recognition

A character input technology based on speech recognition, applied in the field of image processing. It addresses problems such as low pupil image resolution, a complicated character input and confirmation process, and limited gaze accuracy. It overcomes the limitations of existing human-computer interaction functions, reduces the influence of gaze accuracy, and improves the character input rate.

Embodiment Construction

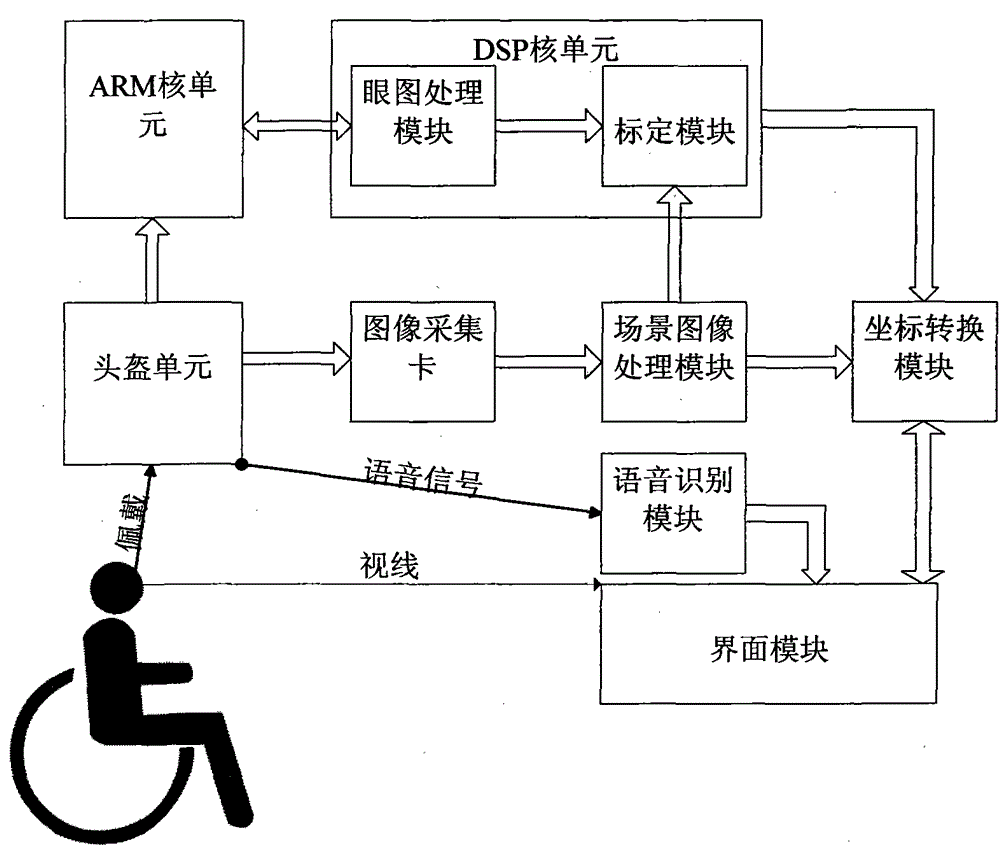

[0061] The device of the present invention is further described below with reference to Figure 1.

[0062] The character input device based on gaze tracking and speech recognition in the present invention includes a helmet unit, an ARM core unit, an image acquisition card, a speech recognition module, a DSP core unit, a scene image processing module, a coordinate conversion module, and an interface module. The helmet unit has a one-way connection to each of the ARM core unit, the image acquisition card, and the speech recognition module, and outputs the collected eye images, scene images, and user voice signals to the ARM core unit, the image acquisition card, and the speech recognition module, respectively. The ARM core unit and the DSP core unit are bidirectionally connected: the ARM core unit outputs the unprocessed eye image to the DSP core unit and receives the processed eye image returned by the DSP core unit. The image acquisition card is connected to the scene image processing mod...
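The connections described in paragraph [0062] can be pictured as a small directed graph. The following Python sketch is only an illustration of that topology, not the patent's implementation. The identifiers, the payload labels, and the helper functions `build_graph` and `is_bidirectional` are hypothetical, and the assignment of each signal to its destination assumes the list in the text is meant to be read in order. The link from the image acquisition card is left out because the source text is cut off at that point.

```python
from collections import defaultdict

# Unit names follow paragraph [0062]; the identifiers are illustrative only.
HELMET = "helmet_unit"
ARM = "arm_core_unit"
CARD = "image_acquisition_card"
SPEECH = "speech_recognition_module"
DSP = "dsp_core_unit"

# Directed links (source, destination, payload) that the text states explicitly.
# Assumption: signals map to destinations in the order they are listed.
# The image acquisition card's outgoing link is omitted (text is truncated).
LINKS = [
    (HELMET, ARM, "eye image"),
    (HELMET, CARD, "scene image"),
    (HELMET, SPEECH, "user voice signal"),
    (ARM, DSP, "unprocessed eye image"),
    (DSP, ARM, "processed eye image"),
]


def build_graph(links):
    """Return {source: {destination: payload}} for the given directed links."""
    graph = defaultdict(dict)
    for src, dst, payload in links:
        graph[src][dst] = payload
    return graph


def is_bidirectional(graph, a, b):
    """True when data flows both from a to b and from b to a."""
    return b in graph.get(a, {}) and a in graph.get(b, {})


graph = build_graph(LINKS)

if __name__ == "__main__":
    for src, targets in graph.items():
        for dst, payload in targets.items():
            print(f"{src} -> {dst}: {payload}")
    print("ARM/DSP bidirectional:", is_bidirectional(graph, ARM, DSP))
```

Modelling the links as directed edges keeps the one-way helmet connections distinct from the two-way ARM/DSP exchange, which is the main structural point of the paragraph.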
