3D (three-dimensional) visualization method for camera coverage range based on rapid camera attitude estimation

A camera coverage technology in the field of rapid camera attitude estimation and 3D coverage visualization. It addresses three problems: low precision, calibration objects that cannot be placed on site, and traditional methods that cannot be applied. It also helps avoid coverage dead spots.
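The following is a minimal sketch of one way to make "coverage range" concrete. It assumes an ideal pinhole camera with a known pose (R, t), known intrinsics, a known image size, and an assumed maximum useful viewing range. Under these assumptions, a scene point counts as covered when it lies in front of the camera and projects inside the image. Points that no camera covers are then candidate dead spots. The helper names `is_covered` and `dead_spots` and the range cut-off are illustrative assumptions, not taken from the patent. Occlusion by scene geometry is deliberately ignored here; a real 3D visualization would also need to handle it, for example with a depth test.

```python
import math


def is_covered(point_world, R, t, fx, fy, cx, cy, width, height, max_range=math.inf):
    """Return True if a world point lies inside one camera's (assumed) coverage.

    Assumed pinhole model: X_cam = R * X_world + t, u = fx*X/Z + cx, v = fy*Y/Z + cy.
    Occlusion by scene geometry is NOT checked.
    """
    # Rotate and translate the world point into the camera frame.
    x, y, z = (sum(R[i][j] * point_world[j] for j in range(3)) + t[i] for i in range(3))
    if z <= 0:
        return False  # behind the camera or on its principal plane
    if math.sqrt(x * x + y * y + z * z) > max_range:
        return False  # farther than the assumed useful viewing range
    # Project onto the image plane and test against the image bounds.
    u = fx * x / z + cx
    v = fy * y / z + cy
    return 0 <= u < width and 0 <= v < height


def dead_spots(points, cameras):
    """Return the points covered by none of the cameras (candidate dead spots).

    Each camera is a dict of keyword arguments for is_covered().
    """
    return [p for p in points if not any(is_covered(p, **cam) for cam in cameras)]
```

One simple way to visualize uncovered regions would be to color the vertices of a scene model by whether they appear in the `dead_spots` output.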

Embodiment

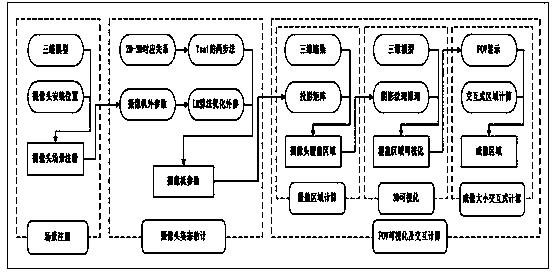

[0055] The embodiment comprises the following steps:

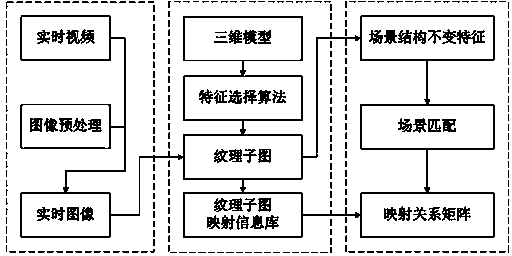

[0056] Step 1: Perform 3D scene modeling to obtain the scene model. This step mainly involves reconstructing and enhancing the 3D scene model and fusing the real-time video with the 3D model, so that the real scene is reconstructed accurately. The real-time video frames are processed to obtain scene texture, illumination, depth and geometric information from the frame sequence. This information is used to recover 3D geometric motion information and to complete the reconstruction of the 3D scene model. The 3D scene model is then further enhanced, mainly to address geometric, illumination and occlusion consistency problems between the video and the model.
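The excerpt does not say how depth is obtained from the frames. Suppose a per-pixel depth map and pinhole intrinsics (fx, fy, cx, cy) are already available for a frame. In that case, one basic building block for recovering scene geometry is back-projection through the pinhole model, shown in the sketch below. It also assumes the pose convention X_cam = R·X_world + t for placing the points into a common model frame. The names `backproject_depth` and `camera_to_world` are hypothetical helpers and are not from the patent.

```python
def backproject_depth(depth, fx, fy, cx, cy):
    """Turn a depth map (list of rows, metric depth per pixel) into camera-frame 3D points.

    Pinhole model assumed: X = (u - cx) * Z / fx, Y = (v - cy) * Z / fy.
    Pixels with depth <= 0 are treated as missing and skipped.
    """
    points = []
    for v, row in enumerate(depth):  # v: pixel row index
        for u, z in enumerate(row):  # u: pixel column index
            if z <= 0:
                continue
            points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points


def camera_to_world(p, R, t):
    """Map a camera-frame point to the world frame, assuming X_cam = R * X_world + t.

    Inverts the transform as X_world = R^T (X_cam - t), since R is a rotation.
    """
    d = [p[i] - t[i] for i in range(3)]
    return tuple(sum(R[j][i] * d[j] for j in range(3)) for i in range(3))
```

Points back-projected from several frames, each mapped with its own frame pose, can then be accumulated into one point set. This is only one ingredient of a full reconstruction pipeline.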

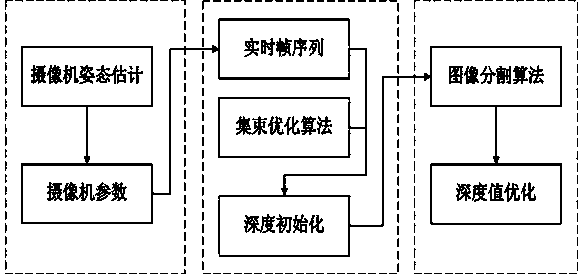

[0057] Step 2: Based on the 3D scene model and the installation position of the camera, register the camera into the 3D scene model, then calculate the camera pose from the 2D-3D point correspondences, and optimize the camera parameters to...
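The excerpt does not name the solver used to compute the pose from the 2D-3D point pairs. One standard option is the linear Direct Linear Transform (DLT), used here as an assumption; it is not necessarily the patent's method. DLT estimates the full 3×4 projection matrix P = K[R | t] from at least six correspondences whose 3D points are not all coplanar. R and t could then be recovered from P, for example by RQ decomposition, which is omitted here. This pure-Python sketch fixes the scale by setting P[2][3] = 1, so it assumes that entry of the true matrix is non-zero. It then solves the normal equations. All function names are hypothetical.

```python
import math


def solve_linear(A, b):
    """Solve the square system A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [[float(v) for v in A[i]] + [float(b[i])] for i in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("singular system: too few or coplanar points?")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):  # back substitution
        x[i] = (M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))) / M[i][i]
    return x


def dlt_projection_matrix(points_3d, points_2d):
    """Estimate the 3x4 projection matrix P from 2D-3D pairs by linear DLT.

    P is fixed up to scale by setting P[2][3] = 1, so the true entry must be non-zero.
    Needs at least 6 pairs whose 3D points are not all coplanar.
    """
    if len(points_3d) != len(points_2d) or len(points_3d) < 6:
        raise ValueError("need at least 6 matching 2D-3D point pairs")
    rows, rhs = [], []
    for (X, Y, Z), (u, v) in zip(points_3d, points_2d):
        # u * (p31 X + p32 Y + p33 Z + 1) = p11 X + p12 Y + p13 Z + p14, likewise for v.
        rows.append([X, Y, Z, 1, 0, 0, 0, 0, -u * X, -u * Y, -u * Z])
        rhs.append(u)
        rows.append([0, 0, 0, 0, X, Y, Z, 1, -v * X, -v * Y, -v * Z])
        rhs.append(v)
    # Least-squares solution via the normal equations (A^T A) p = A^T b.
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(11)] for i in range(11)]
    atb = [sum(r[i] * y for r, y in zip(rows, rhs)) for i in range(11)]
    p = solve_linear(ata, atb) + [1.0]
    return [p[0:4], p[4:8], p[8:12]]


def project(P, X):
    """Project a 3D point with P and return pixel coordinates (u, v)."""
    h = [sum(P[i][j] * c for j, c in enumerate(list(X) + [1.0])) for i in range(3)]
    return h[0] / h[2], h[1] / h[2]


def reprojection_rmse(P, points_3d, points_2d):
    """Root-mean-square pixel distance between observed and reprojected points."""
    sq = 0.0
    for X, (u, v) in zip(points_3d, points_2d):
        pu, pv = project(P, X)
        sq += (pu - u) ** 2 + (pv - v) ** 2
    return math.sqrt(sq / len(points_3d))
```

A linear estimate like this is commonly used as the starting point for a nonlinear refinement that minimizes reprojection error. `reprojection_rmse` computes that quantity. The normal-equation solve is simple but less numerically robust than an SVD-based solver, especially on noisy or badly scaled pixel coordinates.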
