Discriminative online video target tracking method based on dictionary learning

A target tracking and dictionary learning technology, applied in the field of image processing, that addresses problems such as poor tracking performance.


Examples

Embodiment 1

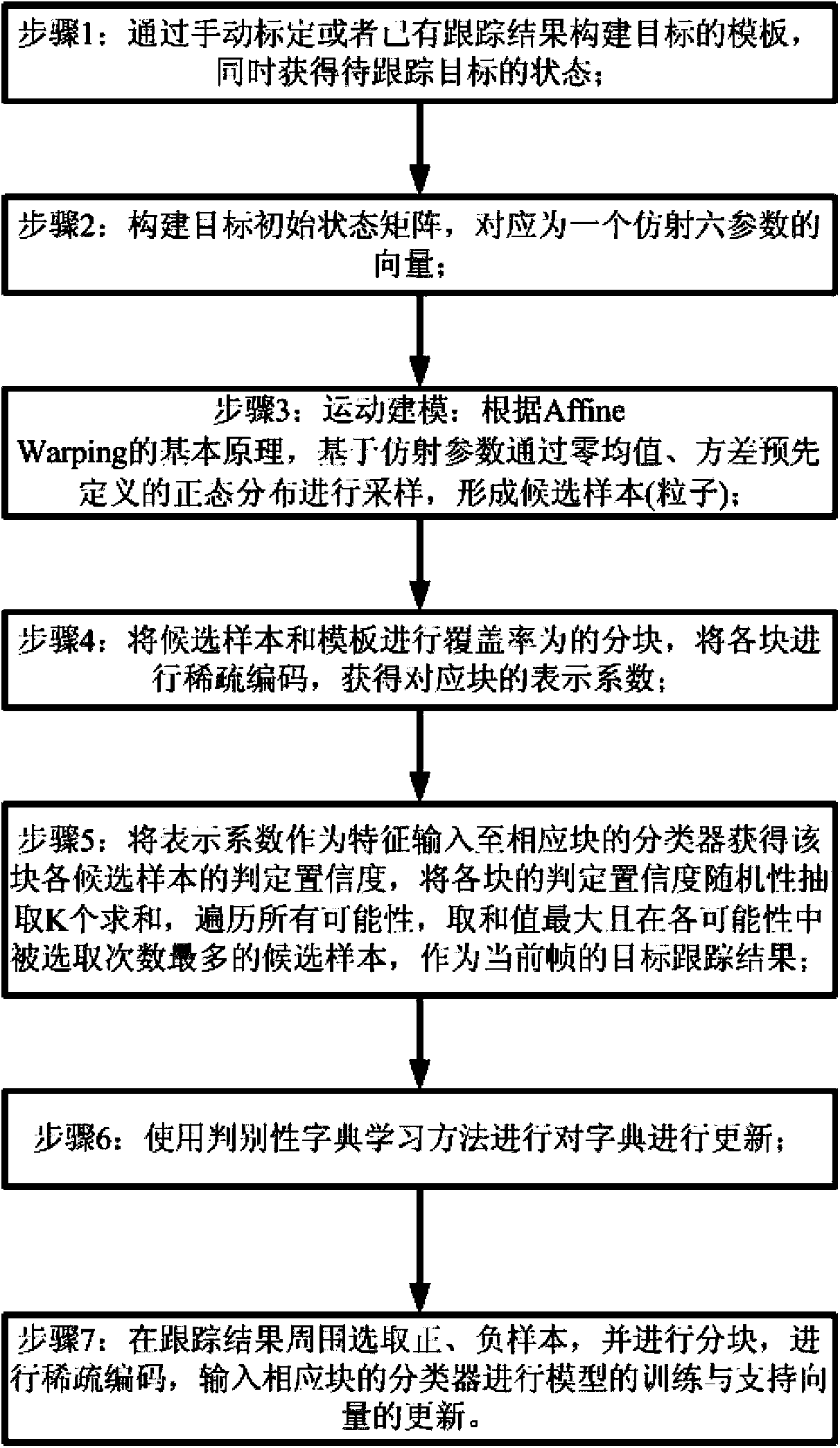

[0041] Step 1. Construct the template of the target by manual calibration or from existing tracking results, and obtain the state of the target to be tracked at the same time;

[0042] Step 2. Construct the target initial state matrix. Specifically, let the state of the target to be tracked be known from the initial time $t = t_0$ to $t = t_{M-1}$, and form M templates of size d×d, each of which has been mean-centered and normalized. The states of the target at these M instants together form the target initial state matrix, where $t_0$ denotes the initial state.
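The template preparation in Step 2 can be sketched as follows. This is a minimal illustration, not the patented implementation: it assumes that "mean-centered and normalized" means subtracting each template's mean intensity and scaling it to unit L2 norm, and that the M templates are stored as the columns of a d²×M matrix (a common convention in dictionary-based tracking that the source does not confirm). The names `normalize_template` and `build_template_matrix` are hypothetical.

```python
import math
from typing import List

Patch = List[List[float]]  # a d x d grayscale patch, row-major


def normalize_template(patch: Patch) -> List[float]:
    """Flatten a d x d patch, subtract its mean, and scale to unit L2 norm.

    Assumption: this is how 'mean-centered and normalized' is interpreted.
    """
    flat = [v for row in patch for v in row]
    mean = sum(flat) / len(flat)
    centered = [v - mean for v in flat]
    norm = math.sqrt(sum(v * v for v in centered))
    if norm == 0.0:
        # A constant patch has no texture; return the all-zero vector.
        return centered
    return [v / norm for v in centered]


def build_template_matrix(patches: List[Patch]) -> List[List[float]]:
    """Stack M normalized templates as the columns of a (d*d) x M matrix."""
    columns = [normalize_template(p) for p in patches]
    d2 = len(columns[0])
    if any(len(c) != d2 for c in columns):
        raise ValueError("all templates must have the same size d x d")
    # Row i of the result holds pixel i of every template.
    return [[col[i] for col in columns] for i in range(d2)]
```

For example, `build_template_matrix([[[1, 2], [3, 4]], [[0, 0], [0, 2]]])` returns a 4×2 matrix whose columns each have zero mean and unit norm.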

[0043] Step 3. Motion modeling: following the principle of affine warping, candidate samples (particles) are formed by sampling the affine parameters from a normal distribution with zero mean and a predefined variance;
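Each candidate sample is an image patch obtained by warping the frame with one set of affine parameters. The sketch below shows one way to do this and is illustrative only. The source does not specify its affine parameterization, so this assumes a generic 2×3 affine matrix, written as the tuple `(a, b, tx, c, e, ty)`, that maps patch coordinates centred on the patch to image coordinates, with bilinear interpolation and border clamping. `warp_patch` and `bilinear` are hypothetical helpers.

```python
import math
from typing import List, Sequence

Image = List[List[float]]  # grayscale image indexed as img[y][x]


def bilinear(img: Image, x: float, y: float) -> float:
    """Sample img at (x, y) with bilinear interpolation, clamping to the border."""
    h, w = len(img), len(img[0])
    x = min(max(x, 0.0), w - 1.0)
    y = min(max(y, 0.0), h - 1.0)
    x0, y0 = int(math.floor(x)), int(math.floor(y))
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    fx, fy = x - x0, y - y0
    top = img[y0][x0] * (1 - fx) + img[y0][x1] * fx
    bottom = img[y1][x0] * (1 - fx) + img[y1][x1] * fx
    return top * (1 - fy) + bottom * fy


def warp_patch(img: Image, affine: Sequence[float], d: int) -> Image:
    """Extract a d x d candidate patch from img.

    Assumed parameterization: patch coordinates (u, v), centred on the
    patch, map to image coordinates x = a*u + b*v + tx, y = c*u + e*v + ty.
    """
    a, b, tx, c, e, ty = affine
    half = (d - 1) / 2.0
    patch = []
    for row in range(d):
        v = row - half
        line = []
        for col in range(d):
            u = col - half
            line.append(bilinear(img, a * u + b * v + tx, c * u + e * v + ty))
        patch.append(line)
    return patch
```

With the identity transform `(1, 0, cx, 0, 1, cy)` this reduces to cropping a d×d window centred at `(cx, cy)`; scaling, rotation, and shear enter through `a`, `b`, `c`, `e`.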

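[0044] Assume that the target state parameters at adjacent time instants follow a Gaussian distribution, i.e. $p(\mathbf{x}_t \mid \mathbf{x}_{t-1}) = \mathcal{N}(\mathbf{x}_t;\, \mathbf{x}_{t-1},\, \Psi_0)$, where $\mathbf{x}_t$ denotes the affine state parameters at time $t$. Sampling is then performed according to the preset $\Psi_0$...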
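The Gaussian sampling of candidate states can be sketched as a random walk around the previous state: each particle is the previous state plus zero-mean Gaussian noise whose variances come from the preset Ψ₀. This is a minimal sketch under stated assumptions. It treats Ψ₀ as a vector of independent per-parameter variances (a diagonal covariance), which the source's "zero mean and predefined variance" suggests but does not state, and it leaves the layout of the state vector generic. `sample_particles` is a hypothetical name.

```python
import random
from typing import List, Sequence


def sample_particles(prev_state: Sequence[float], psi0: Sequence[float],
                     n: int, rng: random.Random) -> List[List[float]]:
    """Draw n candidate states from N(prev_state, diag(psi0)).

    Assumption: psi0 holds one preset variance per state parameter, and
    each parameter is perturbed independently by zero-mean Gaussian noise.
    """
    if len(prev_state) != len(psi0):
        raise ValueError("state and variance vectors must have equal length")
    if any(v < 0 for v in psi0):
        raise ValueError("variances must be non-negative")
    stds = [v ** 0.5 for v in psi0]  # random.gauss expects a standard deviation
    return [[p + rng.gauss(0.0, s) for p, s in zip(prev_state, stds)]
            for _ in range(n)]
```

Passing a seeded `random.Random` keeps runs reproducible; a zero variance freezes the corresponding parameter, which is a simple way to disable, for example, rotation sampling.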
