Human motion recognition feature expression method based on depth mapping

The invention relates to human activity recognition and depth mapping in the field of pattern recognition. It addresses the complex LDP generation, limited accuracy, and heavy computation of existing approaches, and achieves a small amount of calculation and high accuracy.

Embodiment Construction

[0018] The following embodiments will further describe the present invention in conjunction with the accompanying drawings.

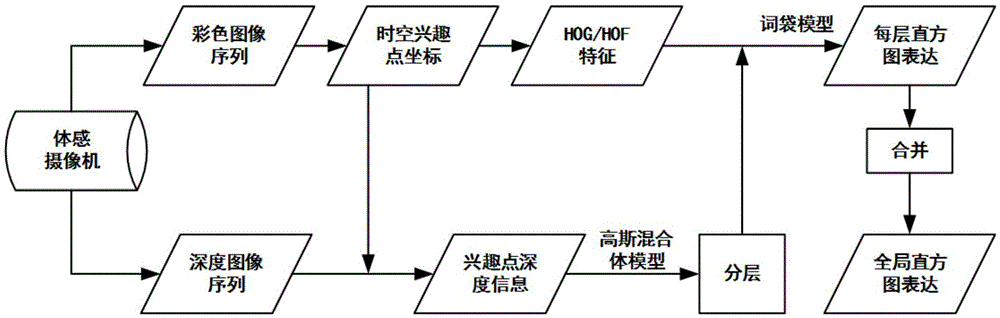

[0019] As shown in Figure 1, a feature expression method for human activity recognition based on a somatosensory camera includes the following steps:

[0020] S1: From the color image sequence of the somatosensory camera, extract the spatiotemporal interest point p of the human activity, with coordinates (x, y, t);

[0021] S2: Centered on the spatiotemporal interest point p, calculate the histogram of optical flow (HOF) feature and the histogram of oriented gradients (HOG) feature in the color image sequence;

[0022] S3: From the depth image sequence of the somatosensory camera, find the corresponding human activity spatiotemporal interest point p', and obtain the depth value of p';

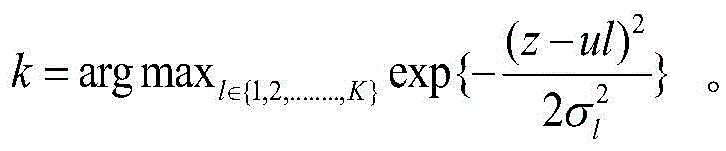

[0023] S4: Based on the Gaussian mixture model, divide p into N layers according to the depth value of p', construct a multi-channel expression based on depth m...
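
Steps S3 and S4 can be illustrated with a minimal sketch. It is not the patented implementation: it assumes the depth frames are registered pixel-to-pixel with the color frames (so p' shares the coordinates of p), that a one-dimensional Gaussian mixture is fitted to the depth values with plain EM, and that each point is placed in the layer of its most probable component, with layers ordered from near to far. The names `depth_at`, `fit_gmm_1d`, and `assign_layers` are hypothetical, and the sketch stops at layer assignment because the remainder of S4 is not available here.

```python
import math


def depth_at(depth_frames, x, y, t):
    """Return the depth value at interest point (x, y, t).

    Assumption: depth_frames[t][y][x] is aligned with the color frame,
    so the corresponding point p' has the same coordinates as p.
    """
    return depth_frames[t][y][x]


def _log_gauss(v, mean, var):
    """Log density of a 1-D Gaussian with the given mean and variance."""
    return -0.5 * (math.log(2.0 * math.pi * var) + (v - mean) ** 2 / var)


def _posteriors(v, weights, means, variances):
    """Component responsibilities for value v, computed in log space."""
    logs = [math.log(w) + _log_gauss(v, m, s)
            for w, m, s in zip(weights, means, variances)]
    top = max(logs)
    exps = [math.exp(l - top) for l in logs]
    total = sum(exps)
    return [e / total for e in exps]


def fit_gmm_1d(values, n_layers, n_iter=50):
    """Fit a 1-D Gaussian mixture by EM; returns (weights, means, variances)."""
    lo, hi = min(values), max(values)
    # Initialise the means evenly across the observed depth range.
    means = [lo + (hi - lo) * (k + 0.5) / n_layers for k in range(n_layers)]
    variances = [max(((hi - lo) / n_layers) ** 2, 1e-6)] * n_layers
    weights = [1.0 / n_layers] * n_layers
    for _ in range(n_iter):
        # E-step: responsibility of every component for every depth value.
        resp = [_posteriors(v, weights, means, variances) for v in values]
        # M-step: re-estimate weight, mean, and variance of each component.
        for k in range(n_layers):
            nk = sum(r[k] for r in resp)
            if nk < 1e-12:
                continue  # leave an empty component unchanged
            weights[k] = nk / len(values)
            means[k] = sum(r[k] * v for r, v in zip(resp, values)) / nk
            var = sum(r[k] * (v - means[k]) ** 2
                      for r, v in zip(resp, values)) / nk
            variances[k] = max(var, 1e-6)  # floor avoids a zero variance
    return weights, means, variances


def assign_layers(depths, n_layers):
    """Assign each depth value to a layer index, 0 being the nearest layer."""
    weights, means, variances = fit_gmm_1d(depths, n_layers)
    # Rank components by mean depth so layer indices run from near to far.
    order = sorted(range(n_layers), key=lambda k: means[k])
    rank = {k: i for i, k in enumerate(order)}
    layers = []
    for d in depths:
        post = _posteriors(d, weights, means, variances)
        best = max(range(n_layers), key=lambda k: post[k])
        layers.append(rank[best])
    return layers
```

Given a list of interest points, one would collect `depth_at(depth_frames, x, y, t)` for each point and pass the resulting depths to `assign_layers` together with the chosen N. The hard assignment to the most probable component is a design choice of this sketch; a soft assignment using the posteriors directly would be an equally plausible reading.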
