A Texture-Based Depth Image Boundary Correction Method

A texture-based depth image boundary correction technology, applied in image analysis, image enhancement, image data processing, and related fields. It addresses the unsatisfactory filtering effect in the boundary area and the fluctuation of boundary depth values, so as to improve reliability and ensure robustness.

Embodiment Construction

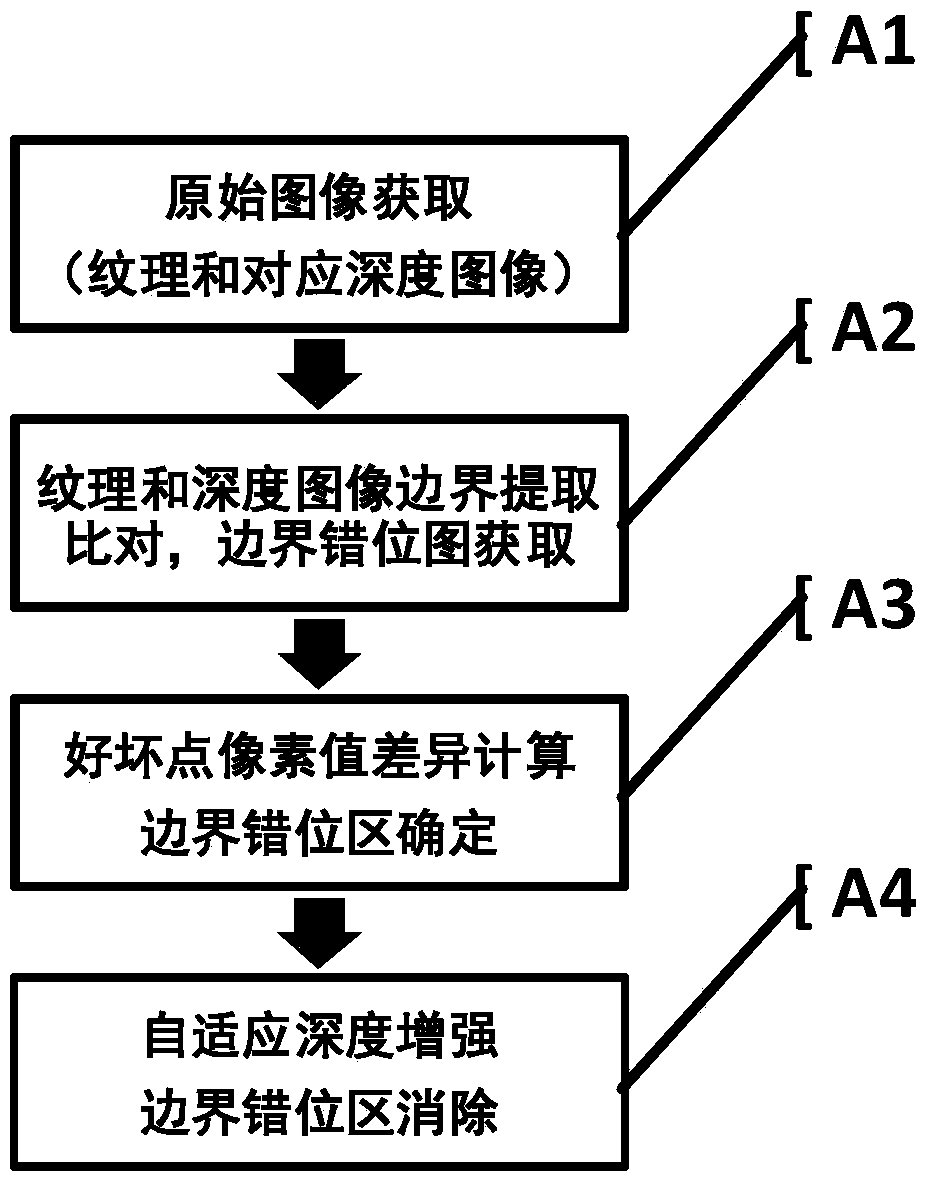

[0010] To obtain a high-quality depth image whose object boundaries are clear and smooth, complete in shape, and accurately positioned, the strengths of the various existing depth image improvement methods must be combined for the boundary area so that they complement one another. The embodiment of the present invention targets the two main problems in the boundary area of the depth image: jitter and misalignment. Using the stable and accurate boundary of the corresponding texture image as a reference, depth enhancement is applied to the boundary area to improve accuracy in the spatial domain and stability in the time domain. The aim is to comprehensively suppress the boundary errors in depth images collected by low-end depth sensors, so that the depth information can be integrated more readily into other applications.
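The general idea of using the texture boundary as a reference for the depth boundary can be illustrated with a minimal sketch. This is not the procedure claimed in the patent, whose details are not given in this excerpt; it is a simple texture-guided reassignment under stated assumptions. The function names `depth_boundary_band` and `correct_boundary` and the parameters `jump`, `radius`, and `tol` are hypothetical. The sketch assumes that the depth and texture images are registered and of equal size, that the misalignment is at most `radius` pixels, and that depth values away from discontinuities are reliable. It handles only a single frame, so the time-domain (jitter) aspect is not covered.

```python
import numpy as np


def depth_boundary_band(depth, jump, radius):
    """Mark pixels within `radius` (Chebyshev distance) of a depth jump > `jump`."""
    d = depth.astype(np.float64)
    h, w = d.shape
    edge = np.zeros((h, w), dtype=bool)
    # A discontinuity between two adjacent pixels marks both of them.
    dx = np.abs(np.diff(d, axis=1)) > jump
    dy = np.abs(np.diff(d, axis=0)) > jump
    edge[:, :-1] |= dx
    edge[:, 1:] |= dx
    edge[:-1, :] |= dy
    edge[1:, :] |= dy
    # Dilate the edge mask by OR-ing shifted copies of a zero-padded mask.
    padded = np.pad(edge, radius)
    band = np.zeros((h, w), dtype=bool)
    for oy in range(2 * radius + 1):
        for ox in range(2 * radius + 1):
            band |= padded[oy:oy + h, ox:ox + w]
    return band


def correct_boundary(depth, texture, jump=50.0, radius=2, tol=10.0):
    """Reassign depth inside the boundary band using texture similarity.

    Each band pixel takes the median depth of nearby pixels that lie
    outside the band (assumed reliable) and have a similar texture value.
    """
    d = depth.astype(np.float64)
    t = texture.astype(np.float64)
    h, w = d.shape
    band = depth_boundary_band(d, jump, radius)
    out = d.copy()
    # The search window must reach past the band on both sides.
    s = 2 * radius + 1
    for y, x in zip(*np.nonzero(band)):
        y0, y1 = max(0, y - s), min(h, y + s + 1)
        x0, x1 = max(0, x - s), min(w, x + s + 1)
        reliable = ~band[y0:y1, x0:x1]
        similar = np.abs(t[y0:y1, x0:x1] - t[y, x]) <= tol
        candidates = d[y0:y1, x0:x1][reliable & similar]
        if candidates.size:  # otherwise keep the original depth value
            out[y, x] = np.median(candidates)
    return out
```

For a grayscale texture image, calling `correct_boundary(depth, texture)` returns a floating-point depth map in which the depth discontinuity tends to follow the texture boundary. Taking the median over reliable neighbours rather than the mean avoids introducing intermediate depth values that belong to neither surface.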

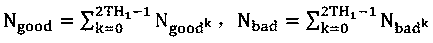

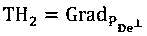

[0011] In this embodiment, by extracting the boundary of each frame of the texture image and comparing it with ...
