Robot image positioning method and system based on deep learning

A deep learning and image positioning technology, applied in image enhancement, image analysis, image data processing, and related fields. It addresses problems such as positioning failure, improves positioning accuracy, and makes features easier to extract and match.

Embodiment Construction

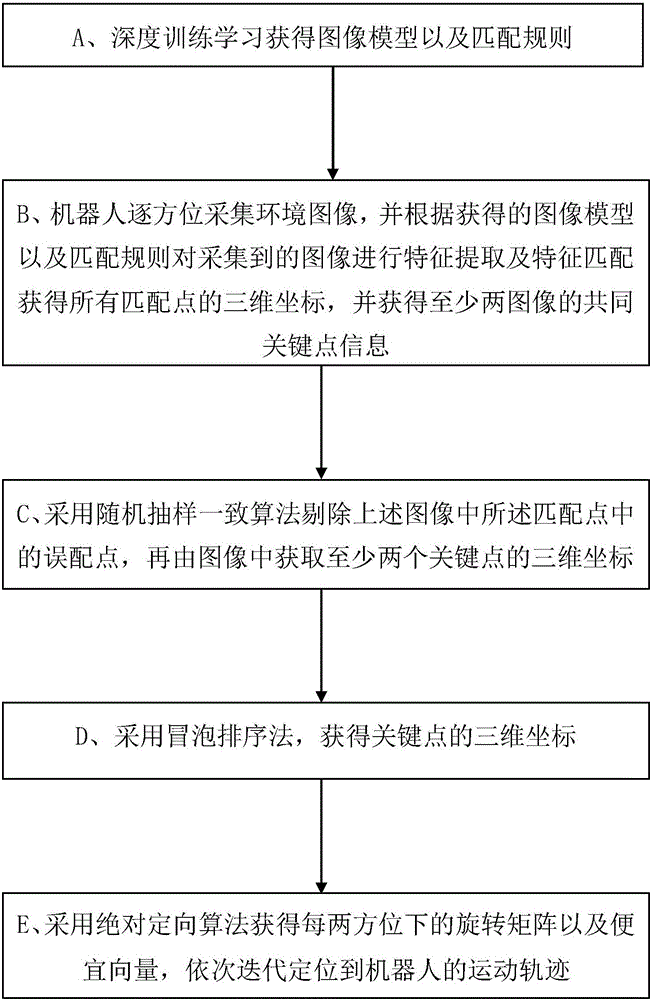

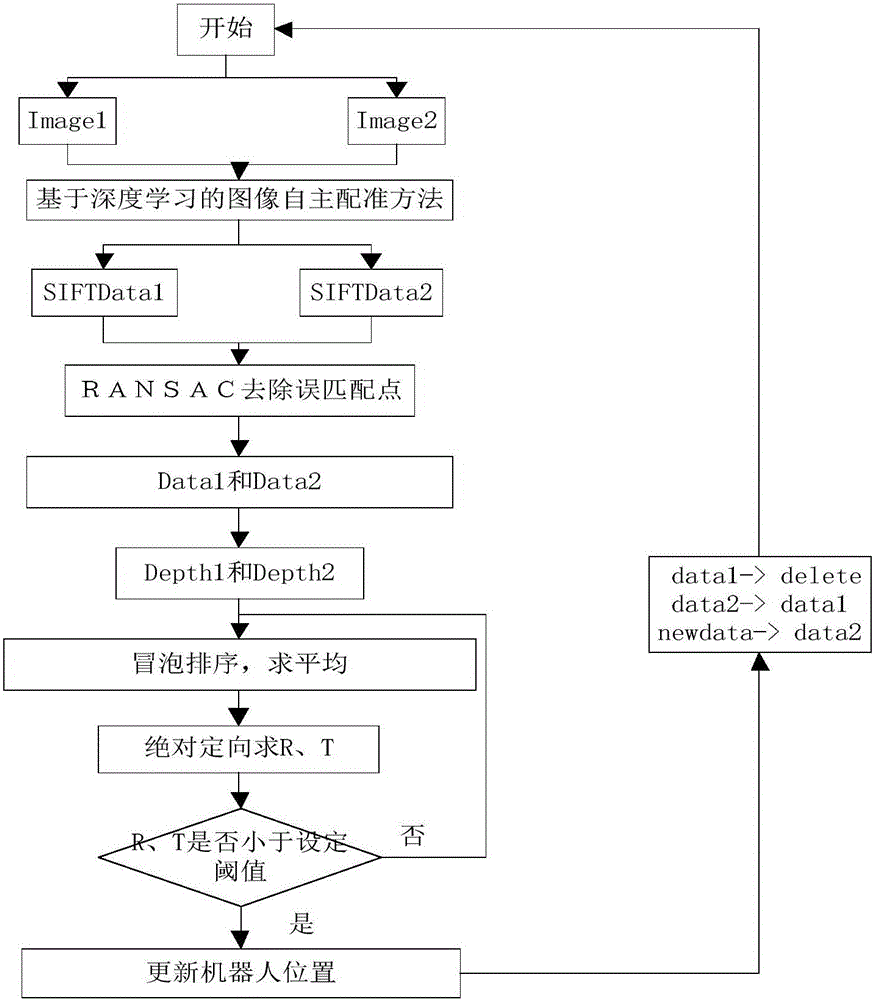

[0037] The technical solutions of the present invention are further described below with reference to the embodiments and the accompanying drawings. Figure 1 shows the flow chart of the deep-learning-based robot image positioning method of the present invention, which mainly comprises the following steps:

[0038] A. Perform deep learning training to obtain an image model and matching rules;

[0039] B. The robot collects images of its environment at different azimuths and, using the obtained image model and matching rules, performs feature extraction and feature matching on the collected images to obtain the three-dimensional coordinates of all matching points and the key point information common to at least two images;

[0040] C. Use the random sample consensus (RANSAC) algorithm to eliminate the mismatched points among the matching points in the above images, and then obtain the three-dimensional coordinates of at least two key points from the images; ...
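
The mismatch-elimination idea in step C can be illustrated with a minimal RANSAC sketch. This is not the patent's implementation: the function name `ransac_translation`, the pure-translation motion model, the 2D points, and the threshold and iteration values are all assumptions chosen to keep the example short. A real system would more likely fit a homography, fundamental matrix, or rigid 3D transform to the matches.

```python
import numpy as np


def ransac_translation(src, dst, threshold=1.0, iterations=200, seed=0):
    """Return a boolean inlier mask for matched point pairs src[i] <-> dst[i].

    Illustrative assumption: all correct matches are related by a single
    translation, so one match is enough to propose a candidate model.
    """
    rng = np.random.default_rng(seed)
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    best_mask = np.zeros(len(src), dtype=bool)

    for _ in range(iterations):
        # 1. Draw a minimal random sample (a single match).
        i = rng.integers(len(src))
        # 2. Fit the candidate model (a translation offset) to the sample.
        offset = dst[i] - src[i]
        # 3. Score the model: matches whose residual is small are inliers.
        residual = np.linalg.norm(src + offset - dst, axis=1)
        mask = residual < threshold
        # 4. Keep the model supported by the largest consensus set.
        if mask.sum() > best_mask.sum():
            best_mask = mask

    return best_mask


if __name__ == "__main__":
    # Ten hypothetical matches; two of them are deliberately corrupted.
    src = np.array([[float(i), float(2 * i)] for i in range(10)])
    dst = src + np.array([5.0, -2.0])
    dst[3] += np.array([40.0, 10.0])
    dst[7] += np.array([-25.0, 30.0])

    inliers = ransac_translation(src, dst)
    print("kept matches:", np.flatnonzero(inliers))
```

Matches outside the largest consensus set are treated as mismatched points and discarded; only the surviving matches would then be passed on to the key point coordinate computation.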
